1 Introduction to APP4MC

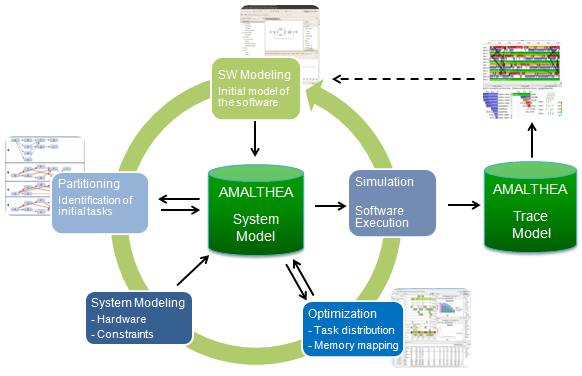

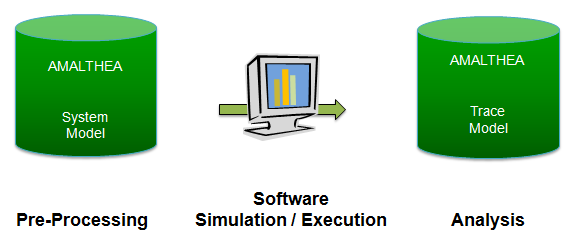

The goal of the project is the development of a consistent, open, expandable tool platform for embedded software engineering. It is based on the model driven approach as basic engineering methodology. The main focus is the optimization of embedded multi-core systems.

Most functions in a modern car are controlled by embedded systems. Also more and more driver assistance functions are introduced. This implies a continuous increase of computing power accompanied by the request for reduction of energy and costs. To handle these requirements the multi-core technology permeates the control units in cars. This is today one of the biggest challenges for automotive systems. Existing applications can not realize immediate benefit from these multi-core ECUs because they are not designed to run on such architectures. In addition applications and systems have to be migrated into AUTOSAR compatible architectures. Both trends imply the necessity for new development environments which cater for these requirements.

The tool platform shall be capable to support all aspects of the development cycle. This addresses predominantly the automotive domain but is also applicable to telecommunication by extensions which deal with such systems in their native environment and integrated in a car.

Future extensions will add support for visualization tools, graphical editors. But not only design aspects will be supported but also verification and validation of the systems will be taken into account and support tools for optimal multi-core real-time scheduling and validation of timing requirements will be provided. In the course of this project not all of the above aspects will be addressed in the same depth. Some will be defined and some will be implemented on a prototype basis. But the basis platform and the overall architecture will be finalized as much as possible.

The result of the project is an open tool platform in different aspects. On the one hand it is published under the Eclipse Public License (EPL) and on the other hand it is open to be integrated with existing or new tools either on a company individual basis or with commercially available tools.

2 User Guide

2.1 Introduction

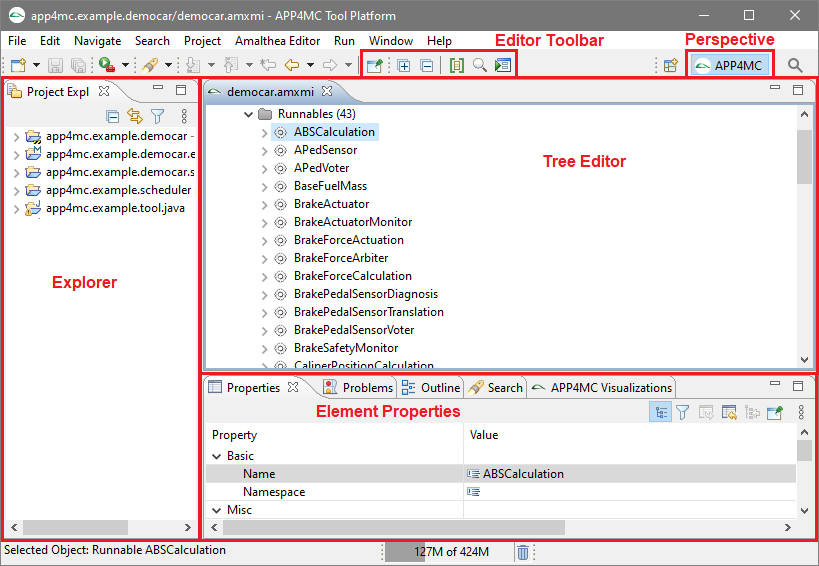

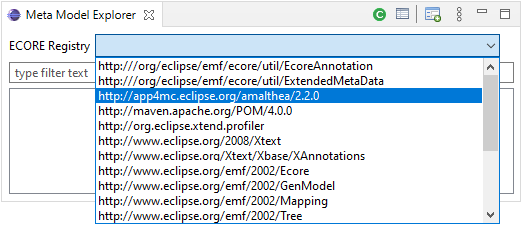

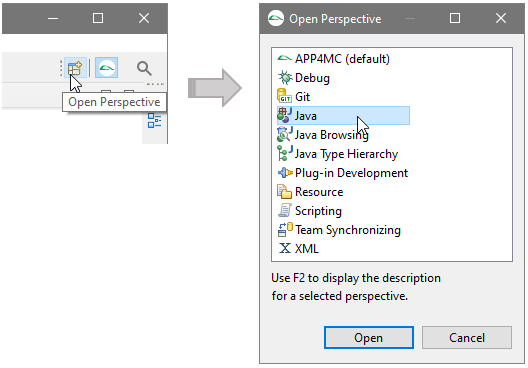

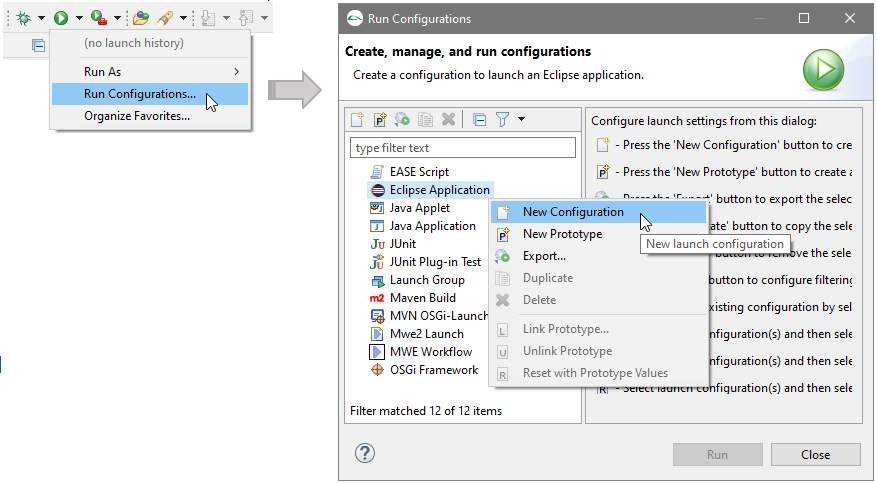

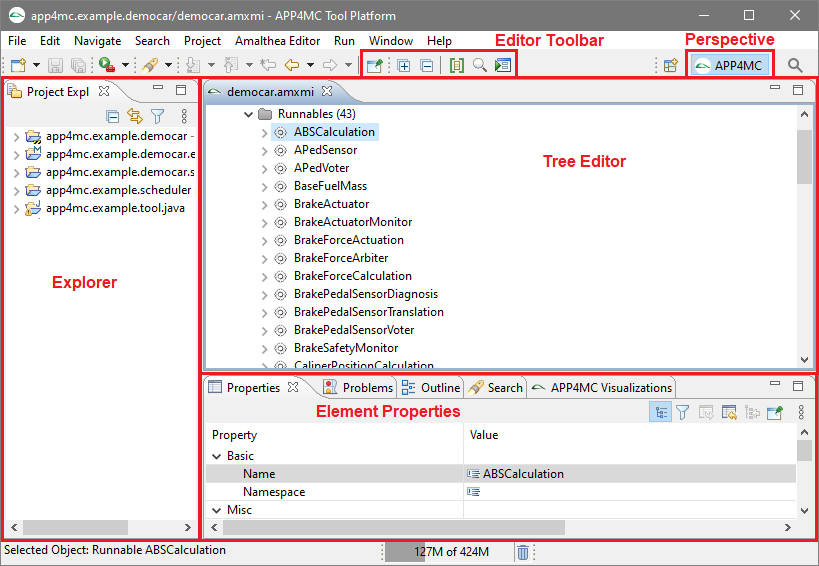

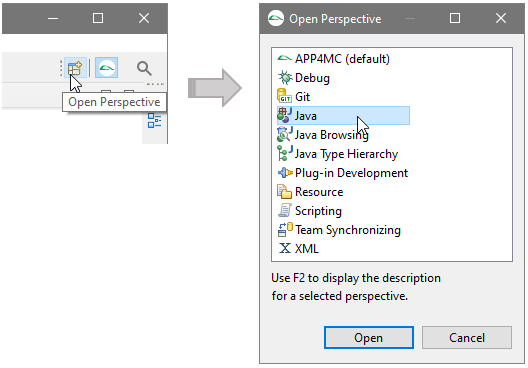

APP4MC comes with a predefined perspective available in the Eclipse menu under Window -> Open Perspective -> Other -> APP4MC. This perspective consists of the following elements:

- Project Explorer

- Editor

- Tree Editor showing the structure of the model content

- Standard Properties Tab is used to work on elements attributes

The following screenshot is showing this perspective and its contained elements.

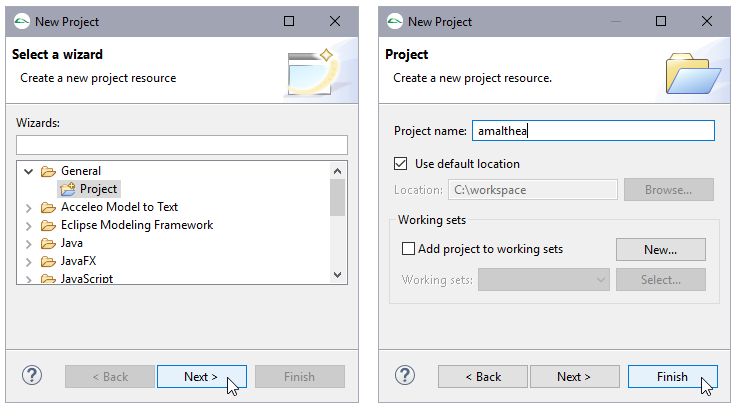

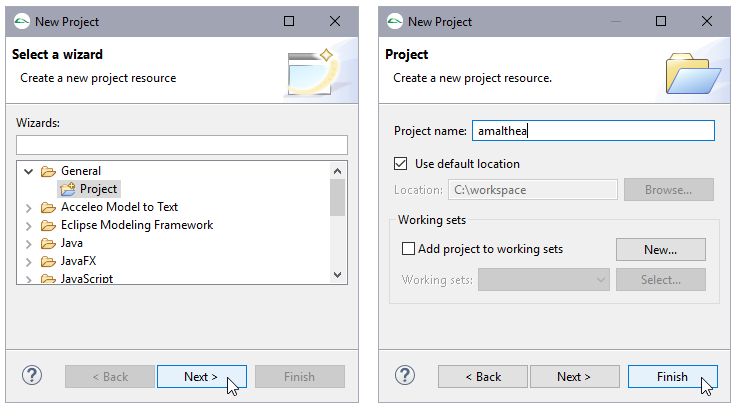

2.1.1 Steps to create a new AMALTHEA model

APP4MC provides a standard wizard to create a new AMALTHEA model from scratch.

Step 1: Create a new general project

The scope of an AMALTHEA model is defined by its enclosing container (project or folder).

Therefore a project is required.

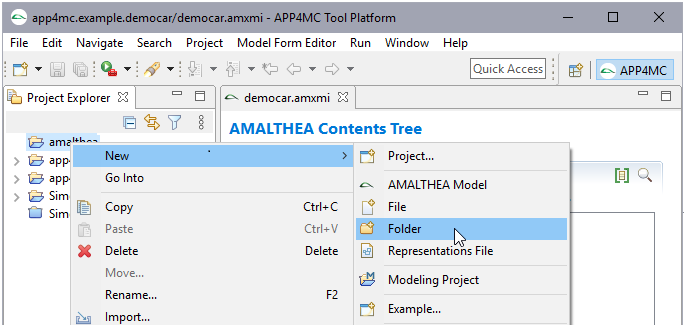

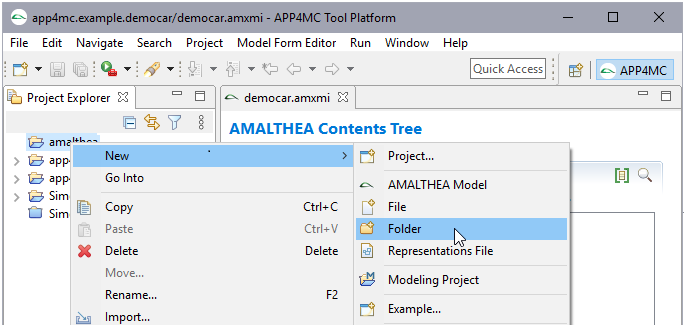

Step 2: Create a new folder inside of the created project

It is recommended to create a folder (

although a project is also a possible container ).

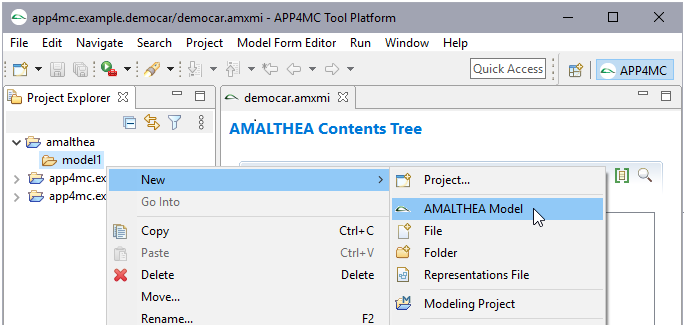

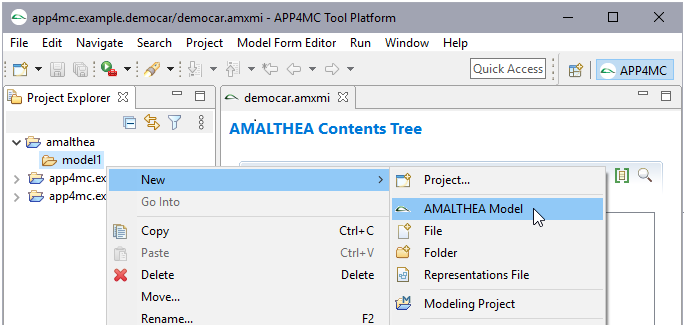

Step 3: Create a new AMALTHEA model

In the context menu (right mouse button) an entry for a new AMALTHEA model can be found.

Another starting point is

File ->

New ->

Other

In the dialog you can select the parent folder and the file name.

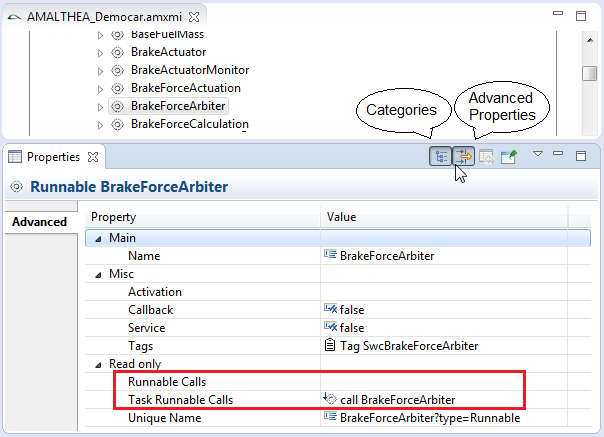

2.1.2 AMALTHEA Editor

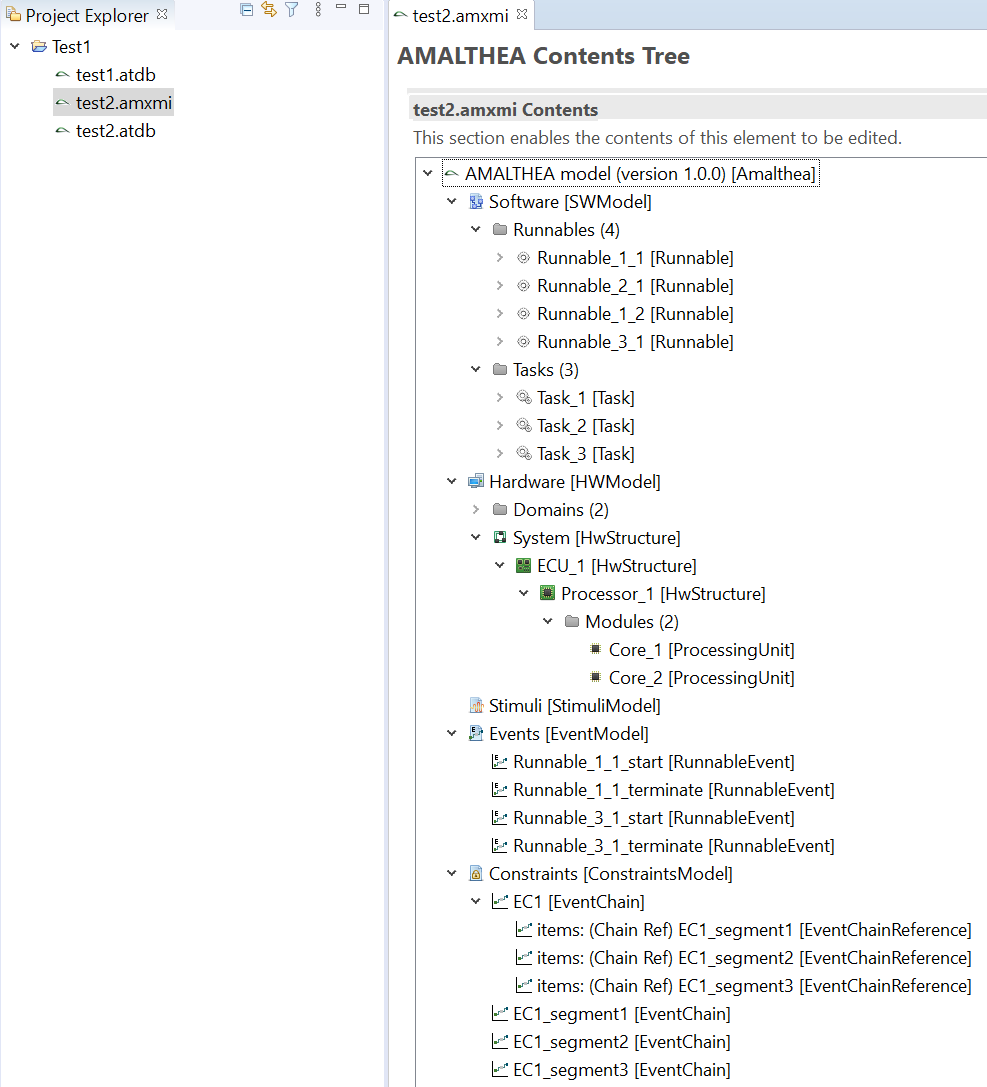

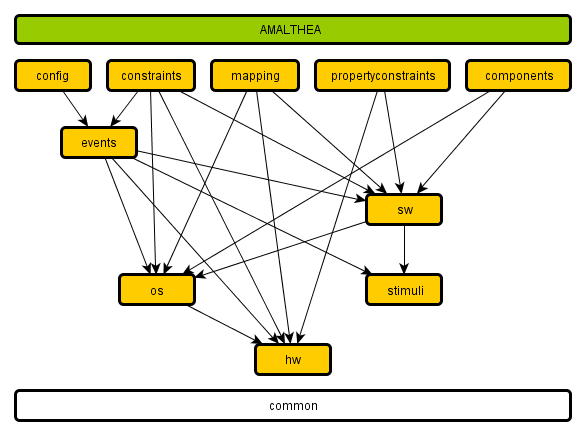

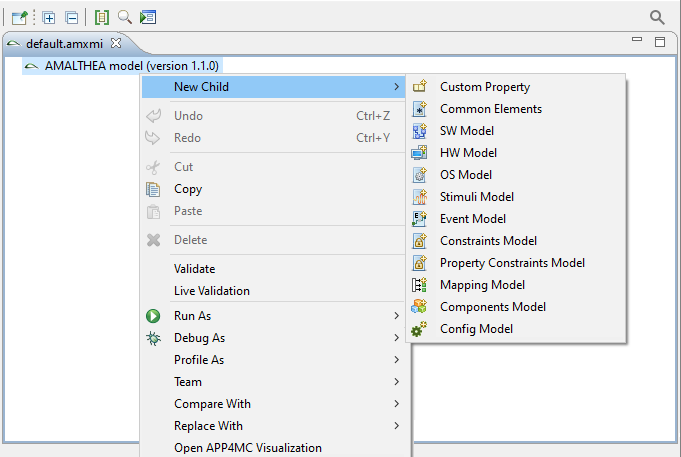

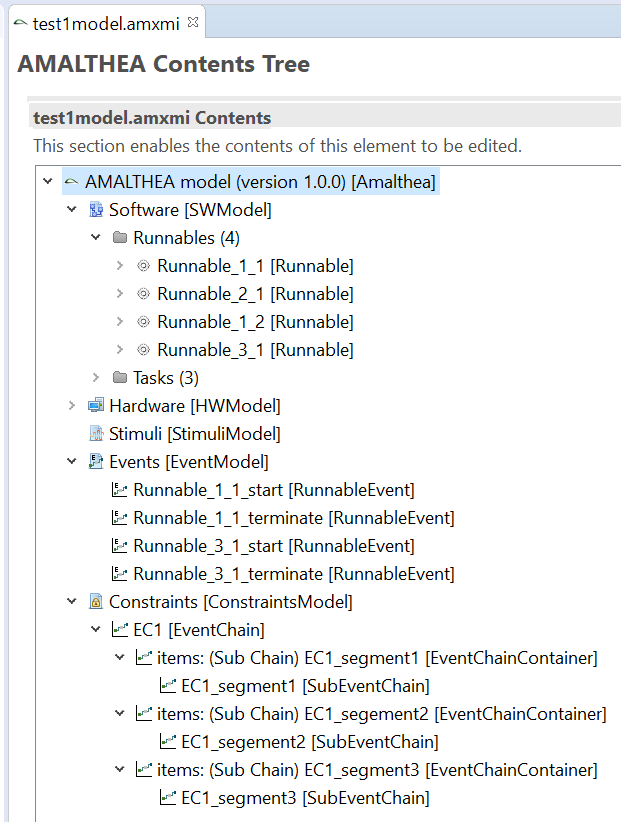

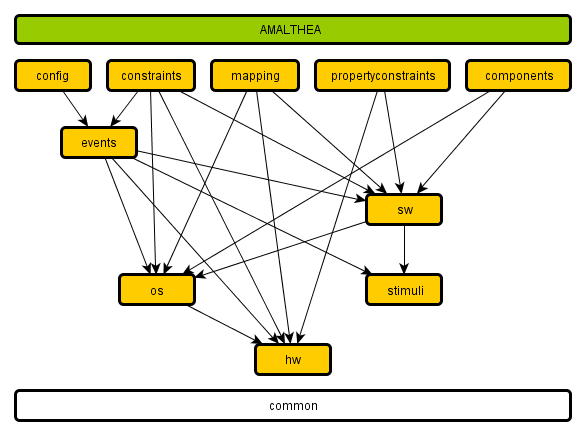

The AMALTHEA Editor shows the entire model that contains sub models.

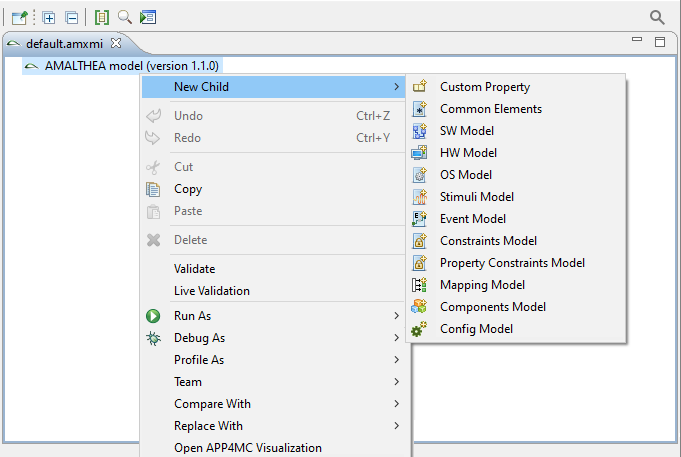

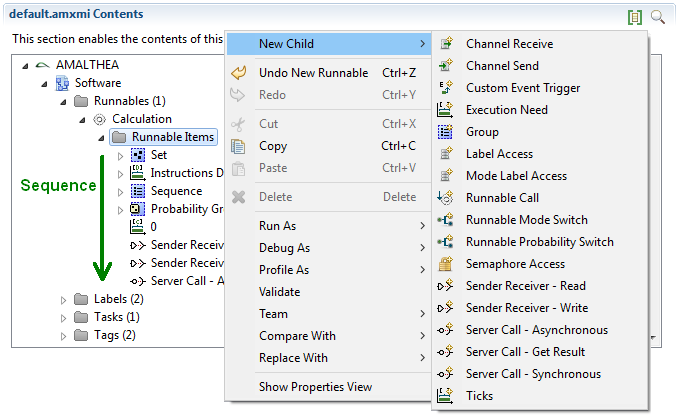

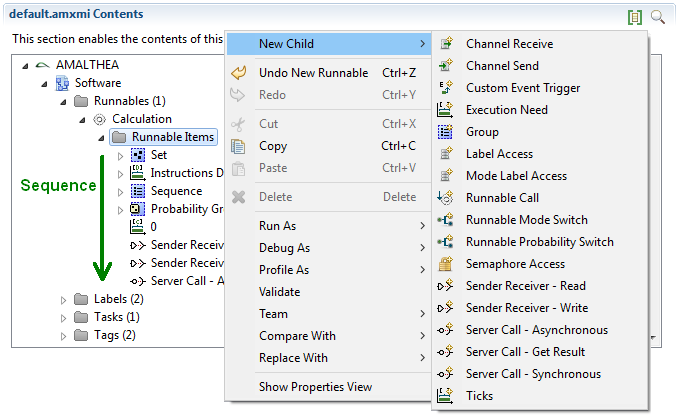

The next screenshot shows the "New Child" menu with all its possibilities.

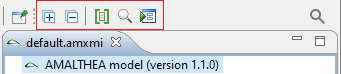

The AMALTHEA Editor has additional commands available in the editor toolbar.

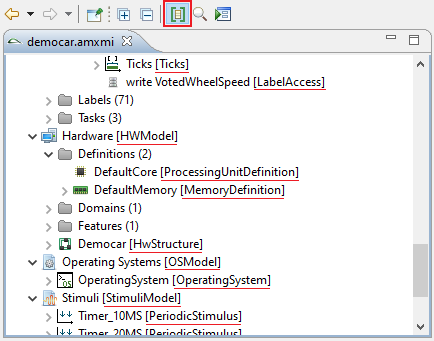

Show types of model elements

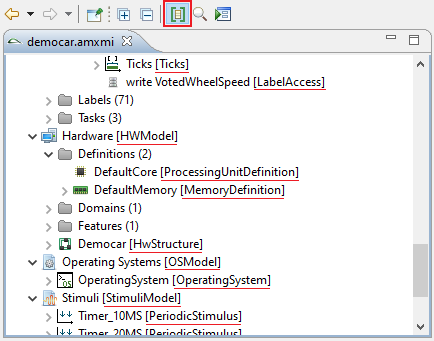

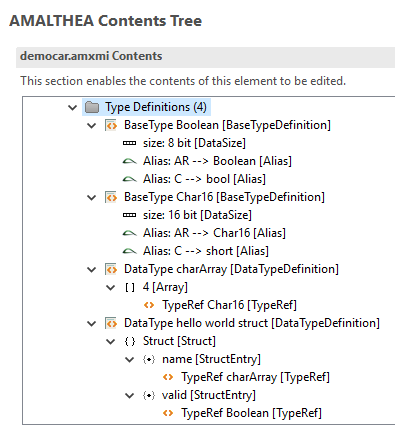

The Show types of elements button triggers the editor to show the direct type of the element in the tree editor using [element_type]. The following screenshot shows the toggle and the types marked with an underline.

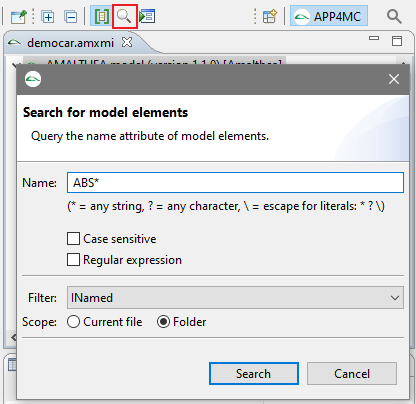

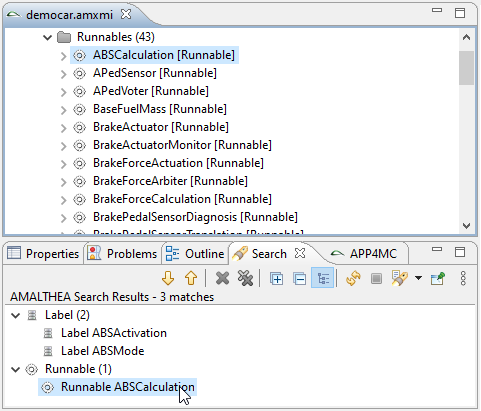

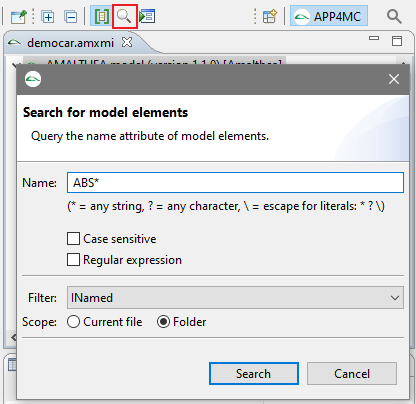

Search for model elements

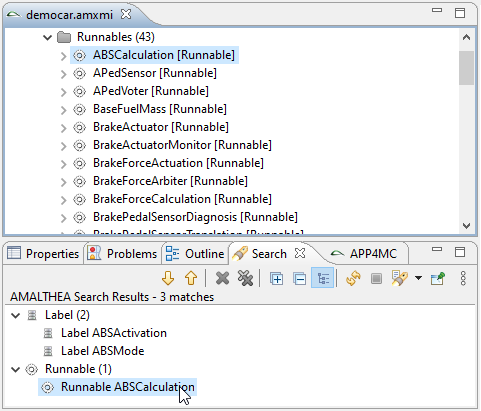

The editor provides the possibility to search for model elements by using the available name attribute. For example this can be used to find all elements in the model with "ABS" at the beginning of their name. The result are shown in the Eclipse search result view.

A double click on a result selects the element in the editor view.

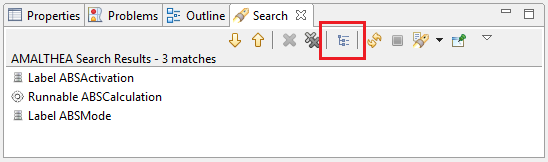

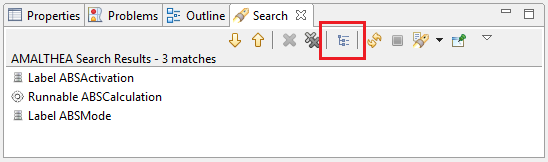

An additional option is to toggle the search results to show them as a plain list instead of a tree grouped by type.

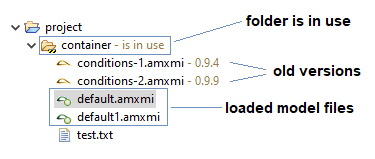

2.1.3 Handling of multiple files (folder scope)

Amalthea model

- Amalthea files have the extension ".amxmi".

- Amalthea models support references to other model files in the same folder.

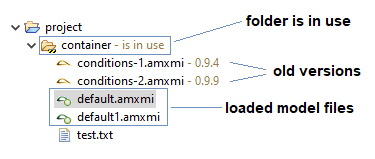

When the

first Amalthea file in a folder is opened in the Amalthea editor

- all valid model files are loaded

- a common editing environment is established

- files and folder are decorated with markers

- loaded model files are decorated with a green dot

- model files that contain older versions are extended with the version

- the folder is marked as "is in use" and decorated with a construction barrier

When the

last Amalthea editor of a folder is closed

- the common editing environment is released

- markers are removed

Warning:

Do not modify the contents of a folder while editors are open.

If you want to add or remove model files then first close all editors.

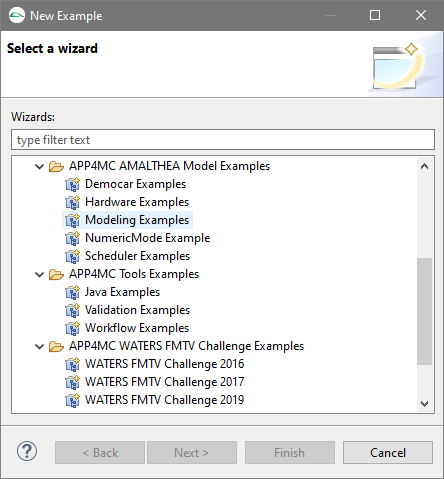

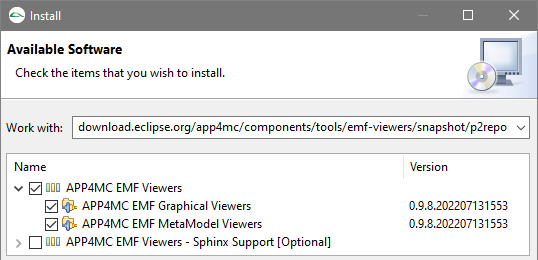

2.1.4 AMALTHEA Examples

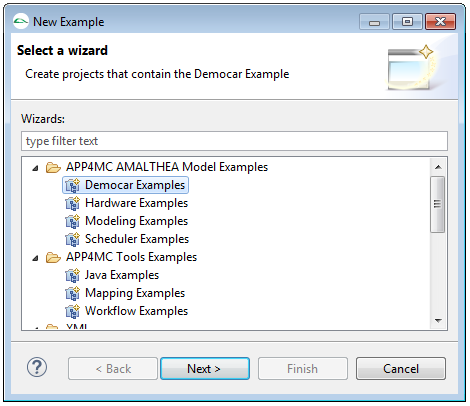

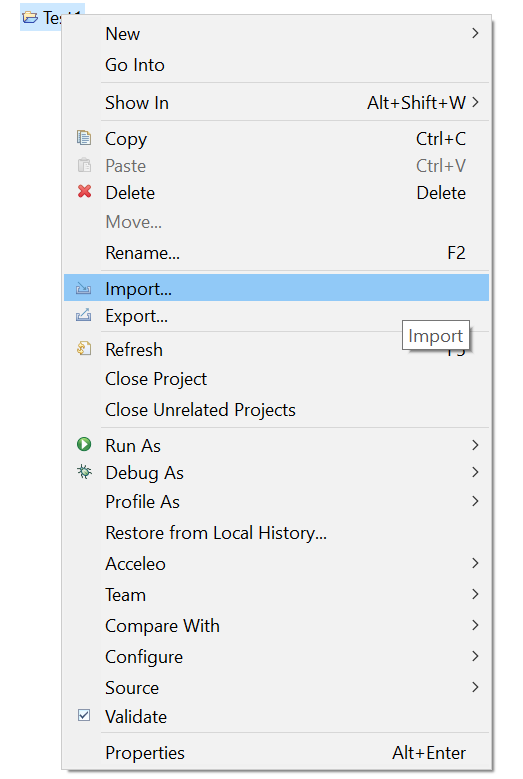

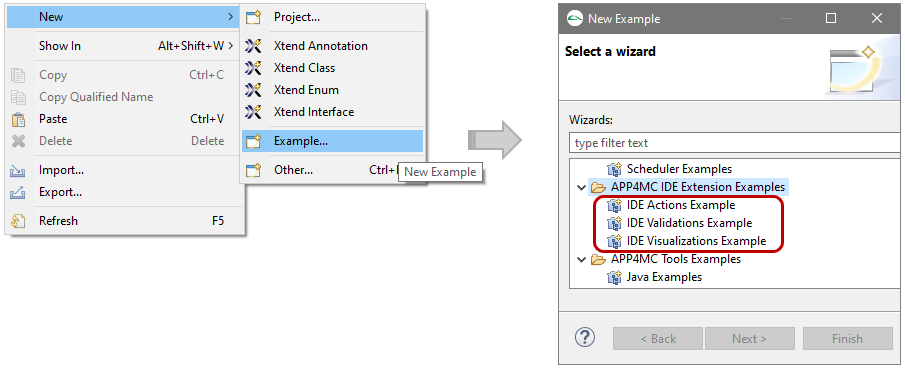

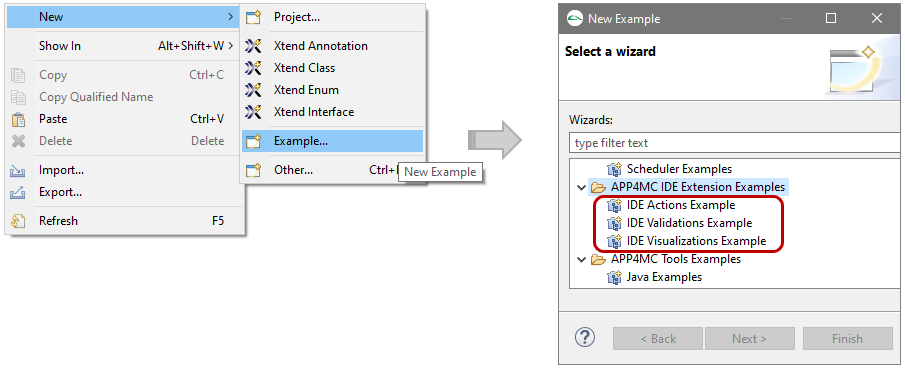

The AMALTHEA tool platform comes with several examples. This section will describe how a new project based on these examples can be created.

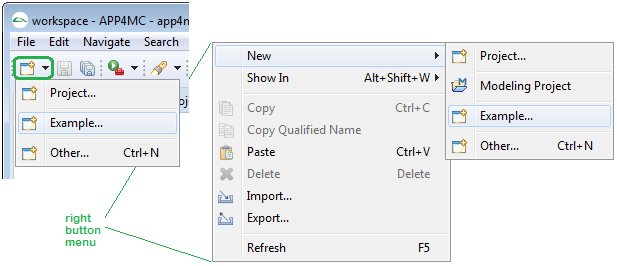

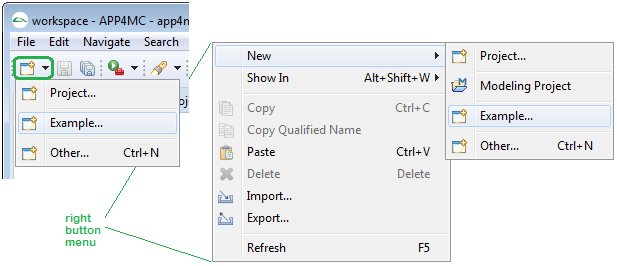

Step 1

Click the "new" icon in the top left corner and select "Example..." or use the right mouse button.

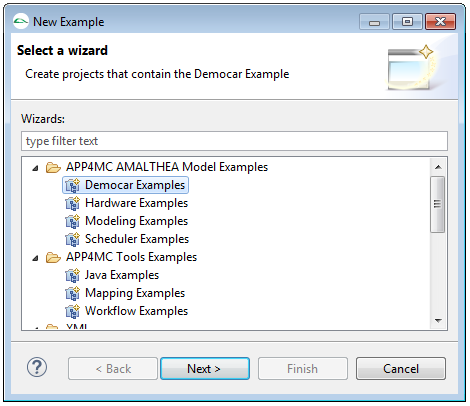

Step 2

The "New Example" wizard will pop up and shows several examples.

Select one examples and hit continue.

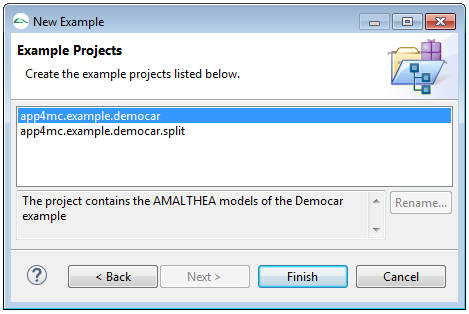

Step 3

You will see a summary of the example projects that will be created.

Click "Finish" to exit this dialog.

You can now open the editor to inspect the models.

2.2 Concepts

2.2.1 Timing in Amalthea Primer

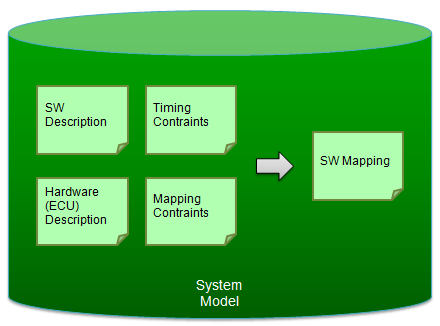

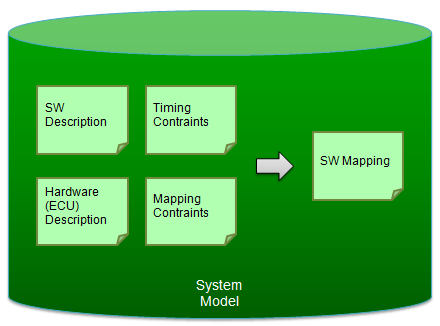

An Amalthea model per se is a static, structural model of a hardware/software system. The basic structural model consists of software model elements (tasks, runnables), hardware model elements (processing units, memories, connection handlers), stimuli that are used to activate execution, and mappings of software model elements to hardware model elements. Semantics of the model then allows for definite and clear interpretation of the static, structural model regarding its behavior over time.

Different Levels of Model Detail

Amalthea provides a meta-model suitable for many different purposes in the design of hardware/software systems. Consequently, there is not

the level of Amalthea model detail – modeling often is purpose driven. Regarding timing analysis, we exemplary discuss three different levels of detail to clarify this aspect of Amalthea. Note that we focus on level of detail of the hardware model and assume other parts of the model (software, mapping, etc.) fixed.

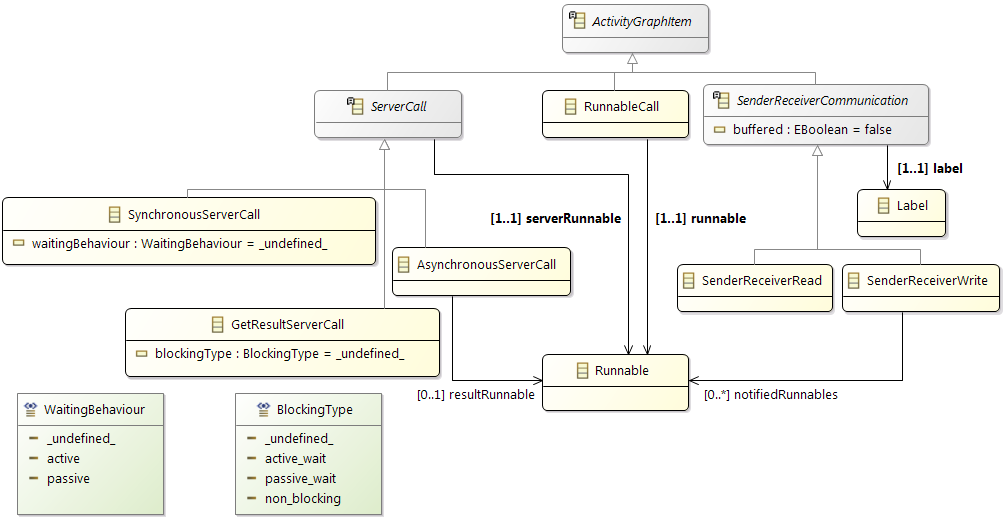

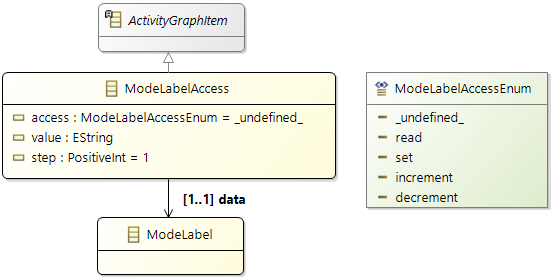

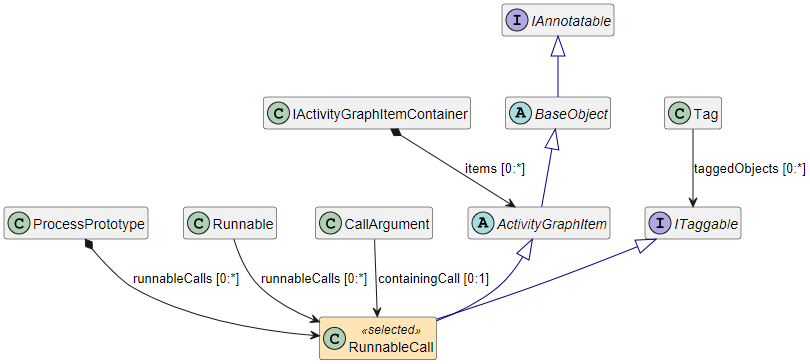

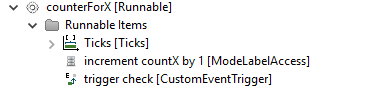

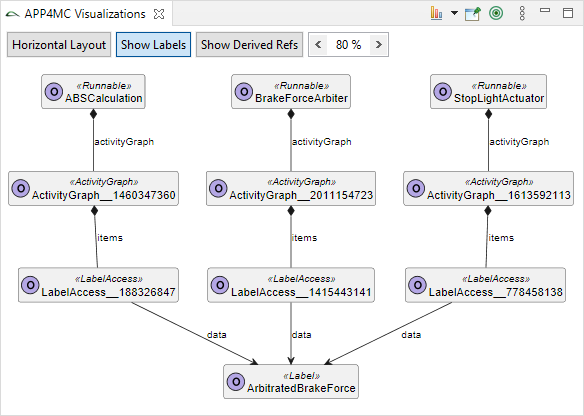

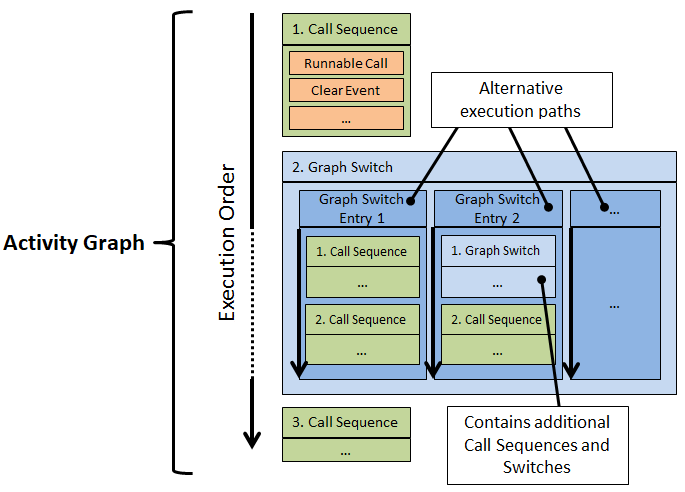

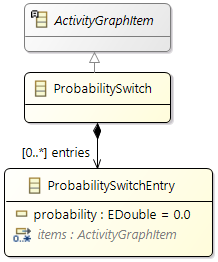

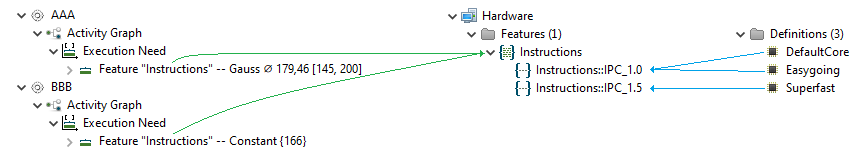

Essential software model elements are runnables, tasks, and data elements (labels and channels). Runnable items of a runnable specifies its runtime behavior. You may specify the runnable items as a directed, acyclic graph (DAG). Amalthea has different categories of runnable items, we focus on the following four:

- Items that

branch paths of the DAG (runnable mode switch, runnable probability switch).

- Items that

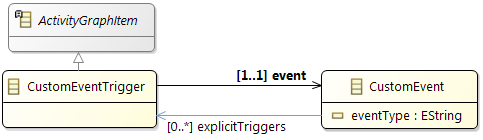

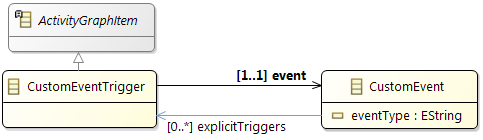

signal or call other parts of the model (custom event trigger, runnable call, etc.).

- Items that specify

data access of data elements (channel receive/send, label access).

- Items that specify

execution time (ticks, execution needs).

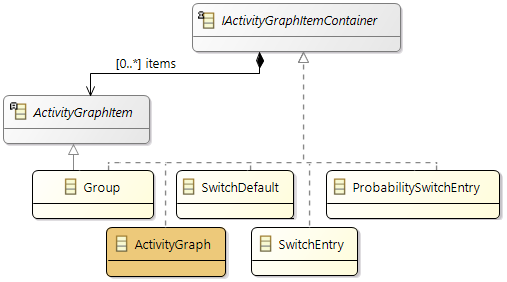

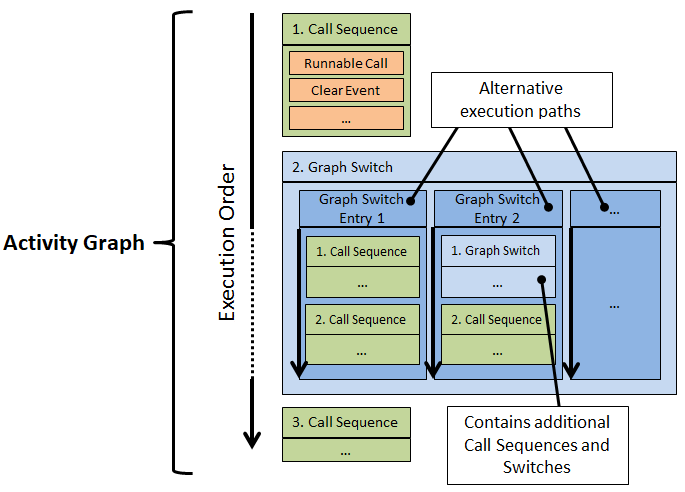

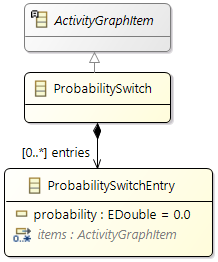

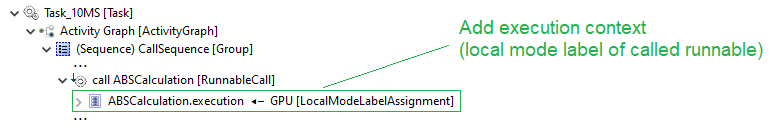

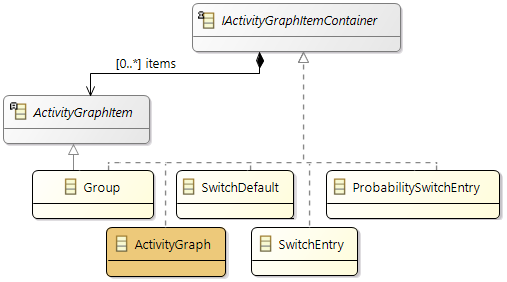

Tasks may call runnables in a activity graph. Note that a runnable can be called by several tasks. We then map tasks to task schedulers in the operating system model, and we map every task scheduler to one or more processing units of the hardware model. Further, we map data elements to memories of the hardware model.

This coarse level of hardware model detail – the hardware model consists only of

mapping targets, without routing or timing for data accesses – may already be sufficient for analysis focusing on event-chains or scheduling.

As the second example of model detail, we now add access elements to all processing units of the hardware model,

modeling data access latencies or data rates when accessing data in memories – still ignoring routing and contention. This level of detail is sufficient, for example, for optimizing data placement in microcontrollers using static timing analysis.

A more detailed hardware model, our third and last example of model detail, will contain information about

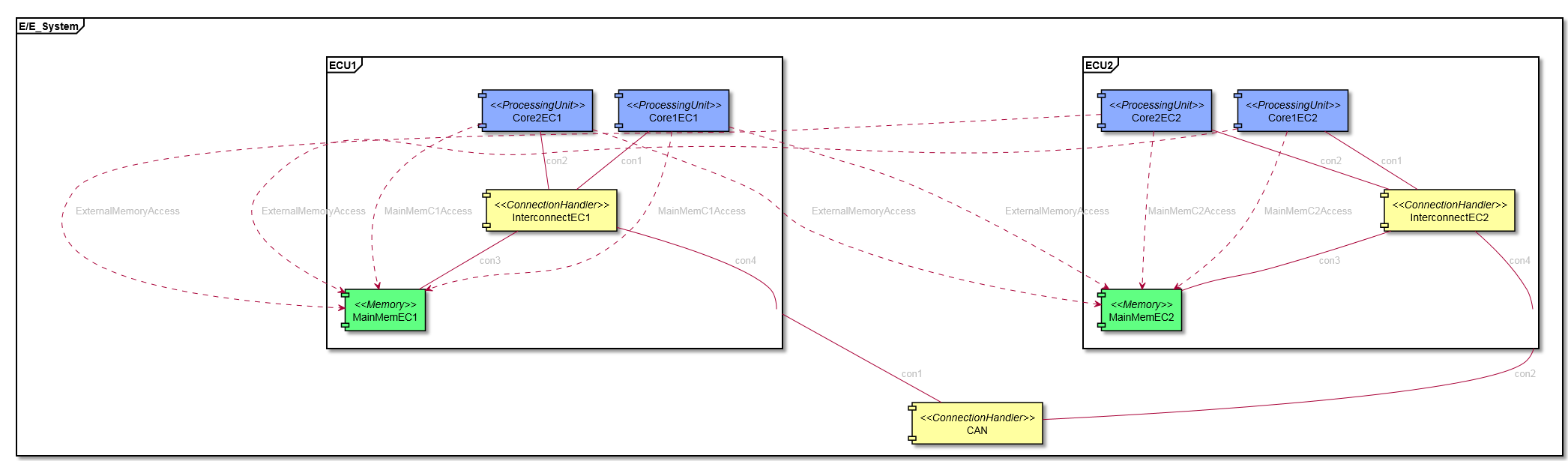

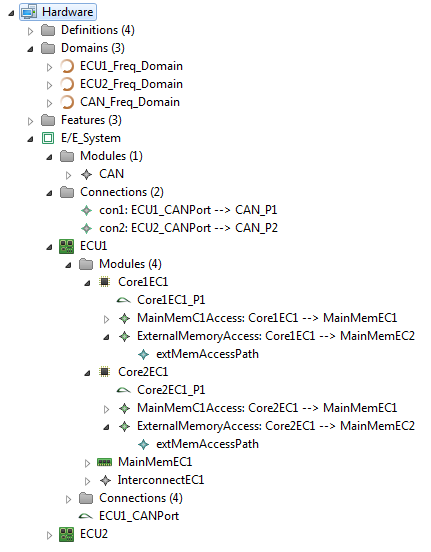

data routing and congestion handling. Therefore, we add connection handlers to the hardware model for every possible contention point, we add ports to the hardware elements, and we add connections between ports. For every access element of the processing units, we add an access path, modeling the access route over different connections and connectionHandlers. The combination of all access elements are able to represent the full address space of a processing unit. This level of detail is well suited for dynamic timing simulation considering arbitration effects for data accesses.

In the following, we discuss 'timing' of Amalthea in context of level of detail of the last example and discrete-event simulation.

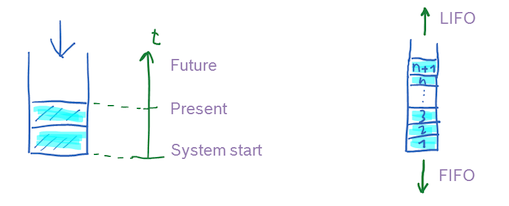

Discrete-Event Simulation

For dynamic timing analysis, the

initial state of the static system model is the starting point, and a series of

state changes is subject to model analysis. The

state of a model mainly consist of states of HW elements (processing units and connection handlers). During analysis, a state change is then, for example, a processing unit changing from

idle to

execute.

When we are interested in the

timing of a model, a common way is using discrete-event simulation. In discrete-event simulation, a series of

events changes the state of the system and a simulated clock. Such a simulation event is, for instance, a

stimuli event for a task to execute on a processing unit, what in turn may change the

state of this processing unit from

idle to

execute.

Note that every event occurs at a specified point in simulated time; for instance, think of a new

stimuli event that shall activate a task in 10ms from the current value of the simulated clock. Unprocessed (future) events are stored in an event list. The simulator then continuously processes the event occurring next to the current simulated time, and forwards the simulated clock correspondingly, thereby removing the event from the event list. Note that this is a very simplified description. For instance, multiple events at the same point in simulated time are possible. Processing events may lead to generation of new events. For instance, processing an end

task execution event may lead to a new

stimuli event that shall occur 5ms from the current value of the simulation clock added to the event list.

After sketching the basic idea of discrete-event simulation, we can be more precise with the term

Amalthea timing: we call the trace of state changes and events over time the

dynamic behavior or simply the

timing of the Amalthea model. This timing of the model than may be further analyzed, for instance, regarding timing constraints.

Stimulation of task execution with corresponding stimuli events, and scheduling in general, is not further discussed here. In the following, we focus on timing of execution at processing units and data accesses.

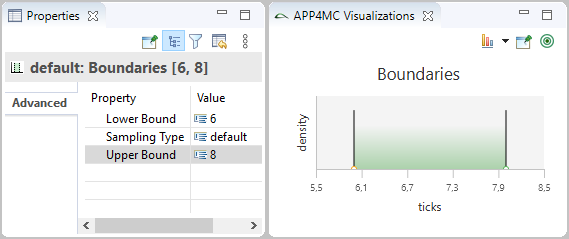

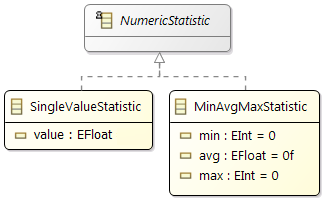

Execution Time

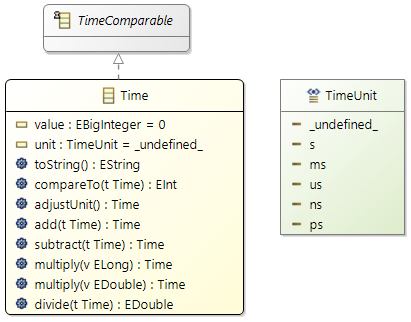

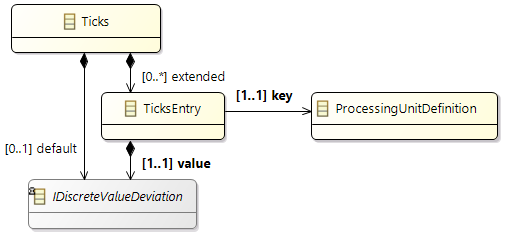

The basic mechanism to specify execution time at a processing unit is the modeling element

ticks.

Ticks are a generic abstraction of time for software, independent of hardware. Regarding hardware, one may think of

ticks as clock ticks or cycles of a processing unit. You can specify

ticks at several places in the model, most prominently as a runnable item of a runnable. The

ticks value together with a mapping to a specific processing unit that has a defined frequency then allows computation of an

execution time. For instance, 100 ticks require 62.5ns simulated time at a processing unit with 1.6GHz, while 100 ticks require 41.6ns at a processing unit with 2.4GHz.

When the discrete-event simulator simulates execution of a runnable at a processing unit, it actually processes runnable items and translates their semantics into simulation events. We already discussed the runnable item

ticks: when

ticks are processed, we compute a corresponding simulation time value based on the executing processing unit's frequency, and store a simulation event in the list of simulation events for when the execution will finish.

In that sense, ticks translate into a fixed or

static timing behavior - when execution starts, it is always clear when this execution will end. Note that the current version of Amalthea (0.9.3) also prepares an additional concept for specification of execution timing besides using

ticks:

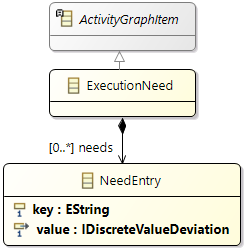

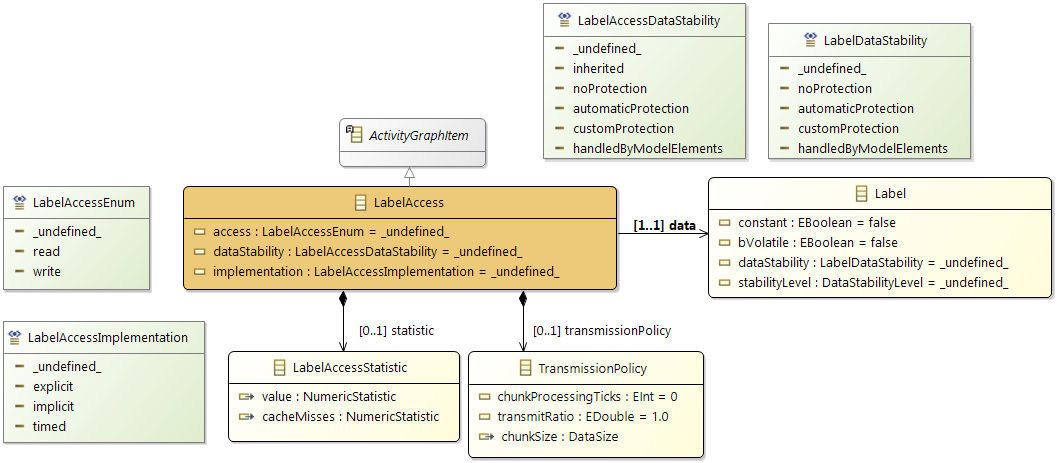

execution needs. Execution needs will allow sophisticated ways of execution time specification, as required for heterogeneous systems.

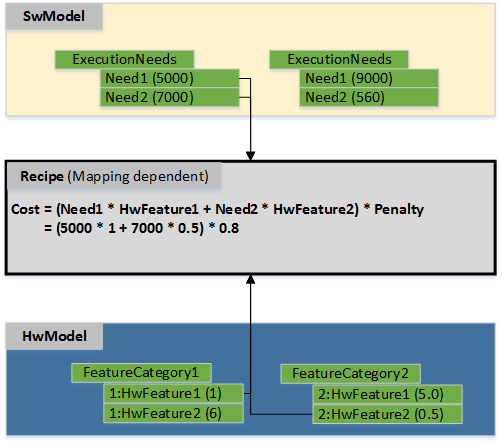

Execution needs define the number of usages of user-defined needs; a later version of Amalthea (> 0.9.3) then will introduce

recipes that translate such execution needs into ticks, taking

hardware features of the executing processing unit into account. Note that, by definition, a sound model for timing simulation always allows to compute ticks from execution needs. Consequently, for timing analysis using discrete-event simulation as described above, we first translate execution needs into ticks, resulting in a model we call

ticks-only model. Thus, we can ignore

execution needs for timing analysis.

Data Accesses

For data accesses, in contrast to ticks, the duration in simulation time is not always clear. A single data access may result in series of simulation events. Occurrence of these events in simulation time depends on several other model elements, for example, access paths, mapping of data to memory, and state of connection handlers. Thus, a data access may result in

dynamic timing behavior. Note that there are plenty of options in Amalthea for hardware modeling; consequently, options for modeling and simulation of data accesses are manifold (see above

Different Levels of Model Detail). In the following, we discuss some modeling patterns for data accesses.

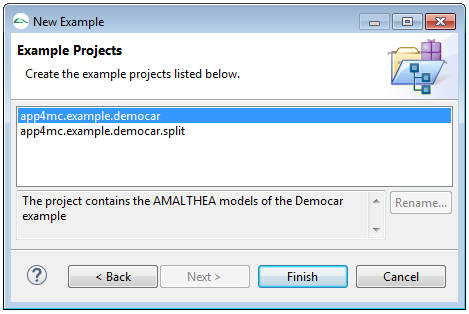

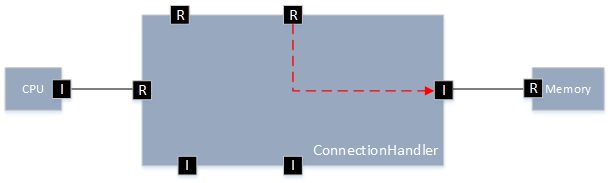

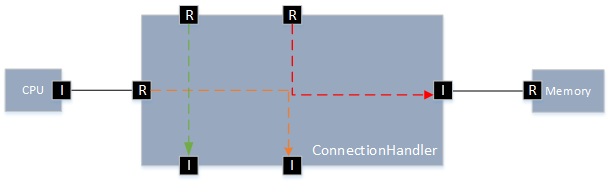

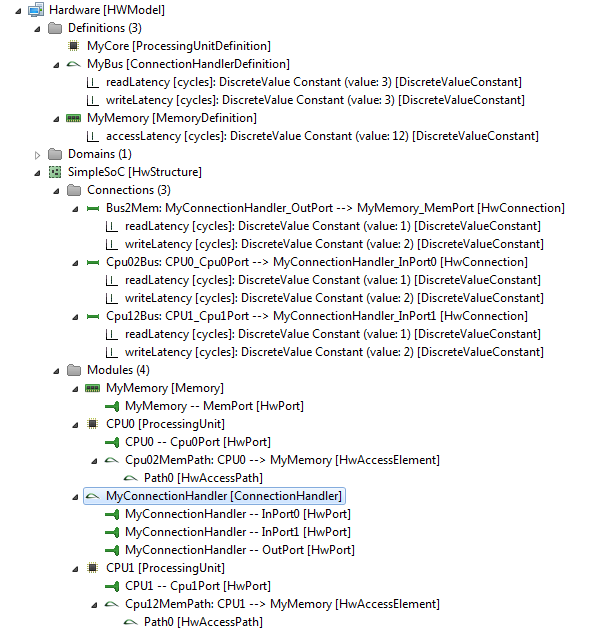

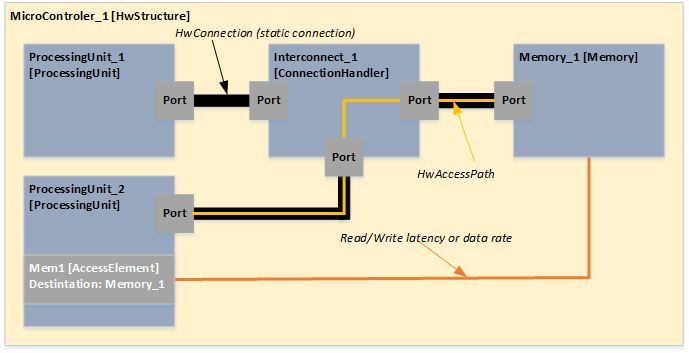

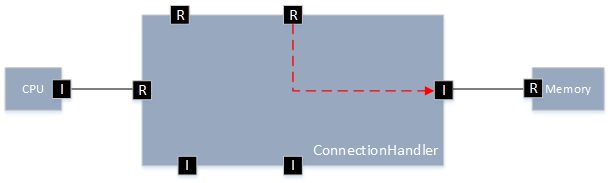

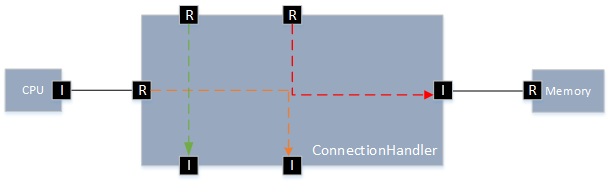

Consider a hardware model consisting of two processing units, a connection handler, and a single memory. We add a read and a write latency to all connections and the connection handler. Additionally, we add an access latency to the memory. There are no read or write latency added to access elements of the processing units. Only access paths specify the routes from the specific processing unit to the memory across the connection handler, see the following screenshot.

As described in the beginning of this section, a single data access may result in a series of events. Expected simulation behavior is as follows: When the discrete-event simulator encounters a runnable item for a data read access at CPU0, we add an event for one tick later to the event queue of the simulator, denoting passing the connection from CPU0 to the connection handler MyConnectionHandler. (For simplicity, we do not use time durations calculated from the CPU0's frequency here, what would be required to determine the correct point in simulated time for the event). After passing the connection, the state of MyConnectionHandler is relevant for the next event: Either MyConnectionHandler is occupied by a data access (from CPU1), a data access arrives at the same time, or MyConnectionHandler is available.

- If MyConnectionHandler is available, we add an event for three ticks later-this is the read latency of the connection handler.

- If a data access from CPU1 arrives at the same time, the connection handler handles this data access based on the selected arbitration mechanism (attribute not shown in the screen shot – we assume priority based with a higher priority for CPU1). We add an event for three ticks later, when MyConnectionHandler is available again. We then check again for data accesses from CPU1 at the same time and set a corresponding event.

- If MyConnectionHandler is occupied, we add an event for whenever the connection handler is not occupied anymore. We then check for data accesses from CPU1 at the same time and set a corresponding event.

Eventually, MyConnectionHandler may handle the read access from CPU0, and when the simulator reacts on the corresponding event, we add an event for one tick later, as this is the read latency for the connection between the connection handler and the memory. The final event for this read data access from CPU0 results from the access latency of the memory (twelve ticks). When the simulator reacts on that final event, the read access from CPU0 is completed and the simulator can handle the next runnable item at CPU0, if available.

Note that in the above example there is only one contention point. We thus could reduce the number of events for an optimized simulation. Further, note that we ignore size of data elements in this example. We could use

data rates instead of latencies at connections, the connection handler, and the memory to respect data sizes in timing simulation or work with the bit width of ports. Furthermore beside constant latency values it is also possible to use distributions, in this case the simulator role the dice regarding the distribution. At a last note, adding to the discussion of different detail levels: Depending on use-case, modeling purpose, and timing analysis tool, there may be best practice defined for modeling. For instance, one tool may rely on data rates, while other tools require latencies but only at memories and connection handlers, not connections. Tool specific model transformations and validation rules should handle and define such restrictions.

Structural Modeling of Heterogeneous Platforms

To master the rising demands of performance and power efficiency, hardware becomes more and more diverse with a wide spectrum of different cores and hardware accelerators. On the computation front, there is an emergence of specialized processing units that are designed to boost a specific kind of algorithm, like a cryptographic algorithm, or a specific math operation like "multiply and accumulate". As one result, the benefit of a given function from hardware units specialized in different kinds may lead to nonlinear effects between processing units in terms of execution performance of the algorithm: while one function may be processed twice as fast when changing the processing unit, another function may have no benefit at all from the same change. Furthermore the memory hierarchy in modern embedded microprocessor architectures becomes more complex due to multiple levels of caches, cache coherency support, and the extended use of DRAM. In addition to crossbars, modern SoCs connect different clusters of potentially different hardware components via a Network on Chip. Additionally, power and frequency scaling is supported by state of the art SoCs. All these characteristics of modern and performant SoCs (specialized processing units, complex memory hierarchy, network like interconnects and power and frequency scaling) were only partially supported by the former Amalthea hardware model. Therefore, to create models of modern heterogeneous systems, new concepts of representing hardware components in a flexible and easy way are necessary: Our approach supports modeling of manifold hierarchical structures and also domains for power and frequencies. Furthermore, explicit cache modules are available and the possibilities for modeling the whole memory subsystem are extended, the connection between hardware components can be modeled over different abstraction layers. Only with such an extended modeling approach, a more accurate estimation of the system performance of state of the art SoCs becomes feasible.

Our intention is allowing to create a hardware model once at the beginning of a development process. Ideally, the hardware model will be provided by the vendor. All performance relevant attributes regarding the different features of hardware components like a floating point unit or how hardware components are interconnected should be explicitly represented in the model. The main challenge for a hardware/software performance model is then to determine certain costs, e.g. the net execution time of a software functionality mapped to a processing unit. Costs such as execution time, in contrast to the hardware structure, may change during development time – either because the implementation details evolve from initial guess to real-world measurements, the implementation is changed, or the tooling is changed. Therefore, the inherent attributes of the hardware, e.g. latency of an access path, should be decoupled from the mapping or implementation dependent costs of executing functions. We know from experience that it is necessary to refine these costs constantly in the development process to increase accuracy of performance estimation. Refinement denotes incorporation of increasing knowledge about the system. Therefore, such a refinement should be possible in an efficient way and also support re-use of the hardware model. The corresponding concepts are detailed in the following section.

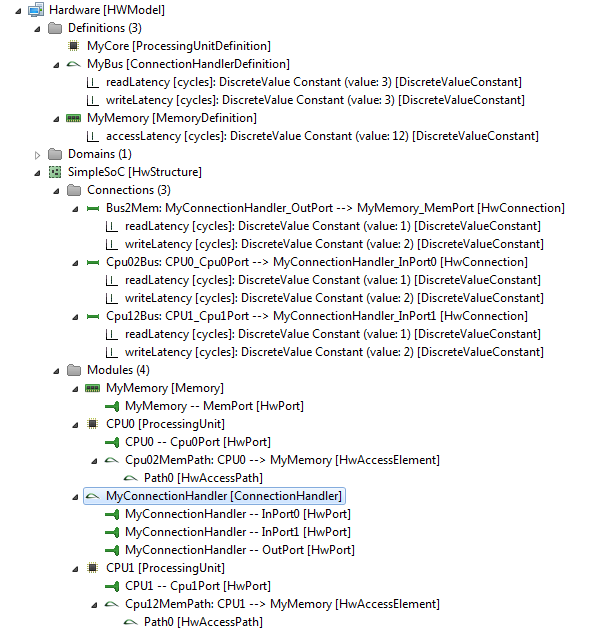

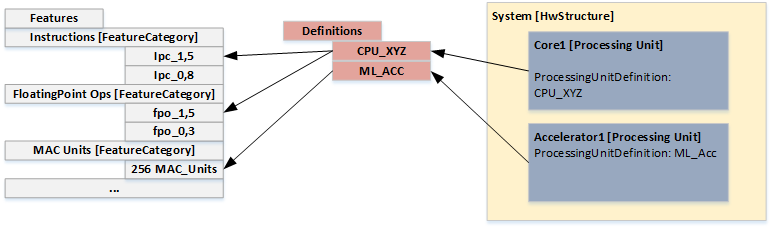

Recipe and Feature concept: An outlook of an upcoming approach

Disclaimer: Please note that the following describes work in progress – what we call "recipes" later is not yet part of the meta-model, and the concept of "features" is not final.

The main driver of the concept described here is separation of implementation dependent details from structural or somehow "solid" information about a hardware/software system. This follows the separation of concerns paradigm, mainly to reduce refinement effort, and foster model re-use: As knowledge about a system grows during development, e.g. by implementing or optimizing functionality as software, the system model should be updated and refined efficiently, while inherent details shall be kept constant and not modified depending on the implementation.

An example should clarify this approach: For timing simulation, we require the net execution time of a software function executed on the processing unit it is mapped onto. This cost of the execution depends on the implementation of the algorithm, for instance, as C++ code, and the tool chain producing the binary code that eventually is executed. In that sense, the

execution needs of the algorithm (for instance, a certain number of "multiply and accumulate" operations for a matrix operation) are naturally fixed, as well as the

features provided by the processing unit (for instance, a dedicated MAC unit requiring one tick for one operation, and a generic integer unit requiring 0.5 ticks per operation). However is implementation- and tool-chain-dependent how the actual execution needs of the algorithm are served by the execution units. Without changing the algorithm or the hardware, optimization of the implementation may make better use of the hardware, resulting in reduced execution time. The above naturally draws the lines for our modeling approach: Execution needs (on an algorithmic level) are inherent, as well as features of the hardware. Keeping these information constant in the model is the key for re-use; implementation dependent change of costs, such as lower execution time by an optimized implementation in C++ or better compiler options, change during development and are modeled as

recipe. A "recipe" thus takes execution needs of software and features of the hardware as input and results in costs, such as the net execution time. Consequently, recipes are the main area of model refinement during development. The concept is illustrated below.

Note that flexibility is part of the design of this approach. Execution needs and features are not limited to a given set, and recipes can be almost arbitrary prescripts of computation. This allows to introduce new execution needs when required to favorable detail an algorithm. For instance, the execution need "convolution-VGG16" can be introduced to model a specific need for a deep learning algorithm. The feature "MAC" of the executing processing unit provides costs in ticks corresponding to perform a MAC operation. The recipe valid for the mapping then uses these two attributes to compute the net execution time of "convolution-VGG16" in ticks, for instance, by multiplying the costs of xyz MAC operations with a penalty factor of 0.8. Note that with this approach execution needs may be translated very differently into costs, using different features.

To further motivate this approach, we give some more benefits and examples of beneficial use of the model:

- Given execution needs of a software function that directly correspond the features of processing units, the optimal execution time may be computed (peak performance).

- While net execution time is the prime example of execution needs, features, and recipes, the concept is not limited to "net execution time recipes", recipes for other performance numbers such as power consumption are possible.

- Recipes can be attached at different "levels" in the model: At a processing unit and at a mapping. If present, the recipe at mapping level has precedence.

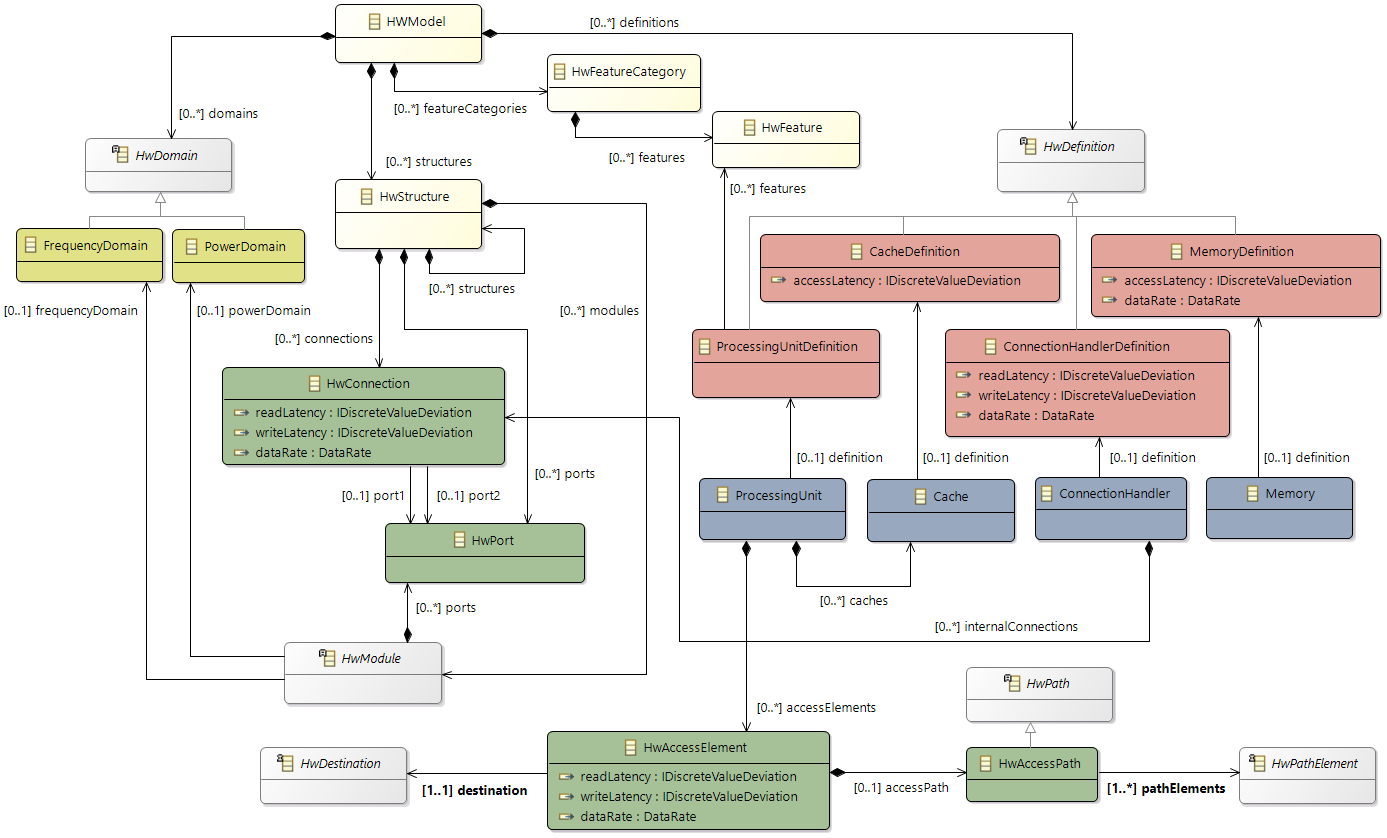

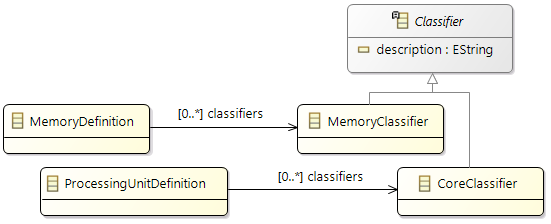

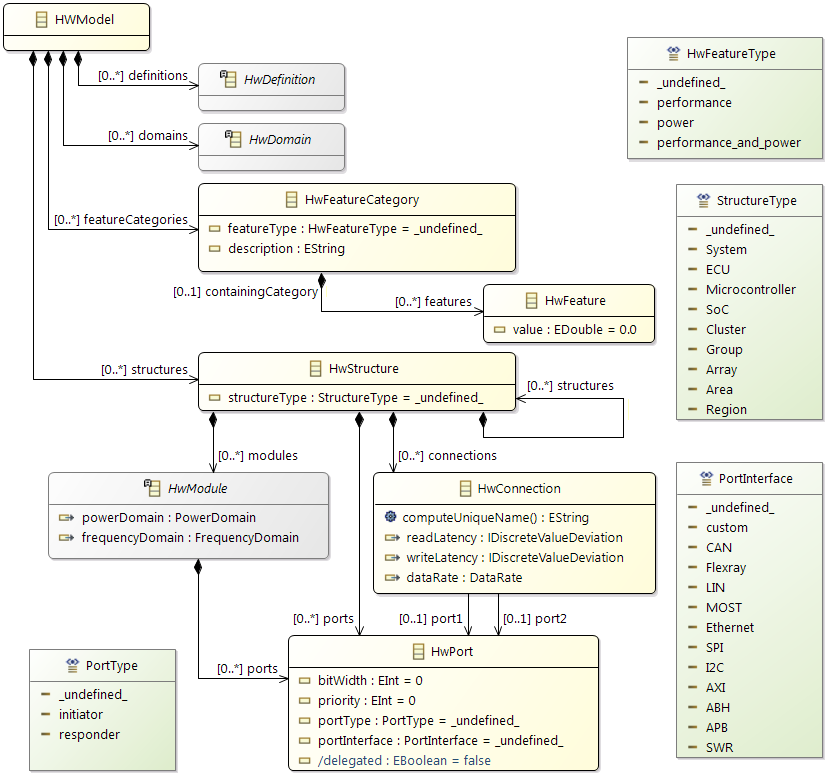

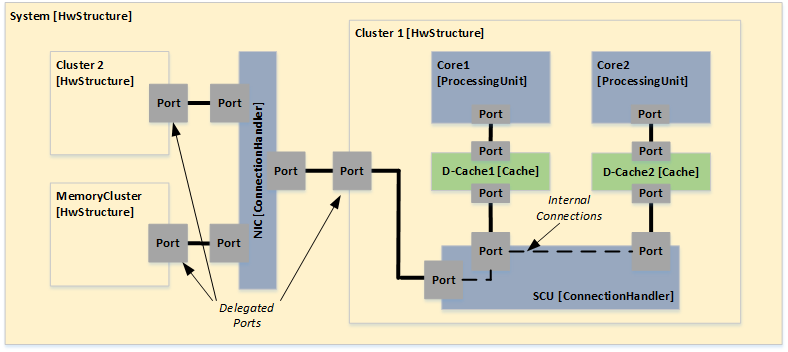

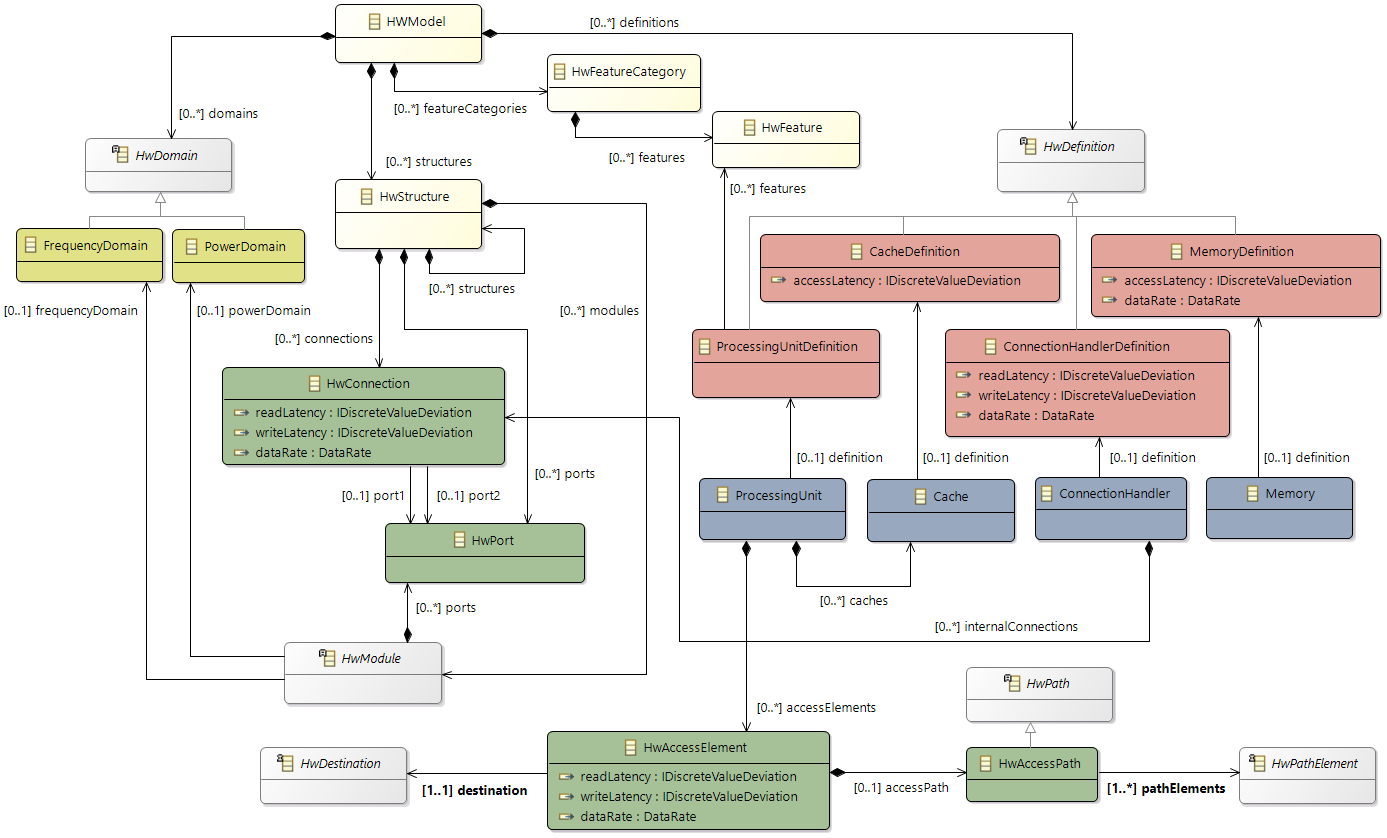

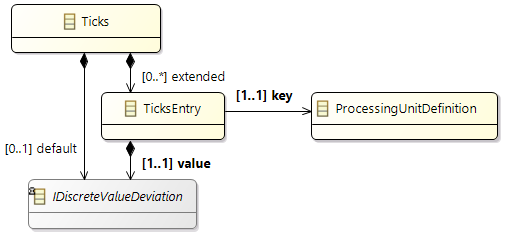

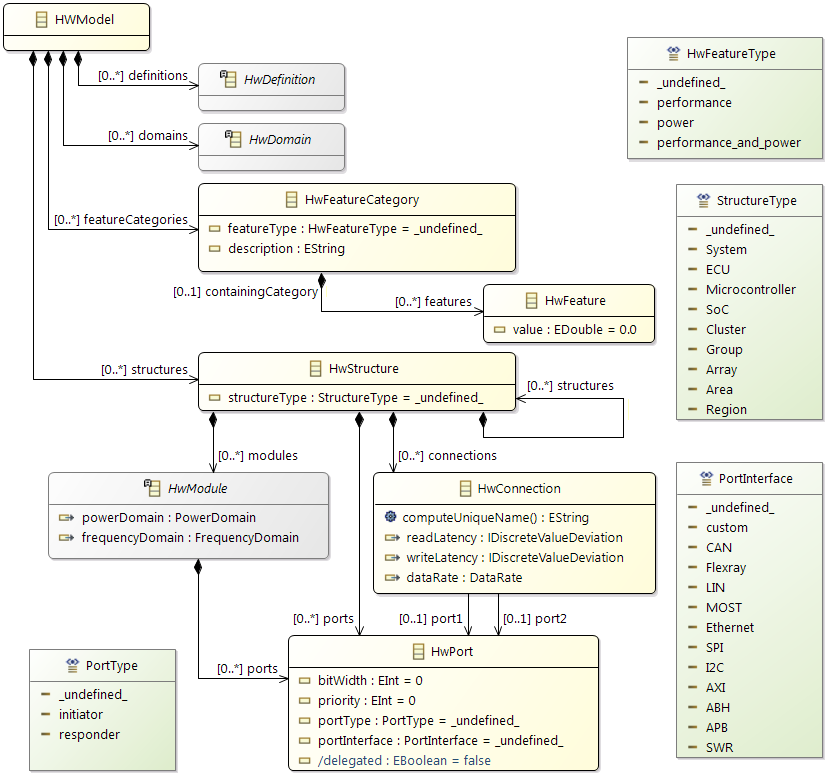

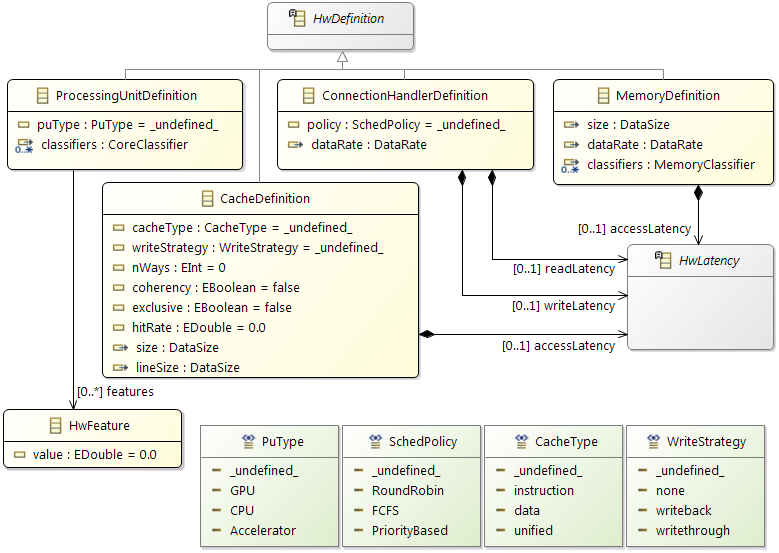

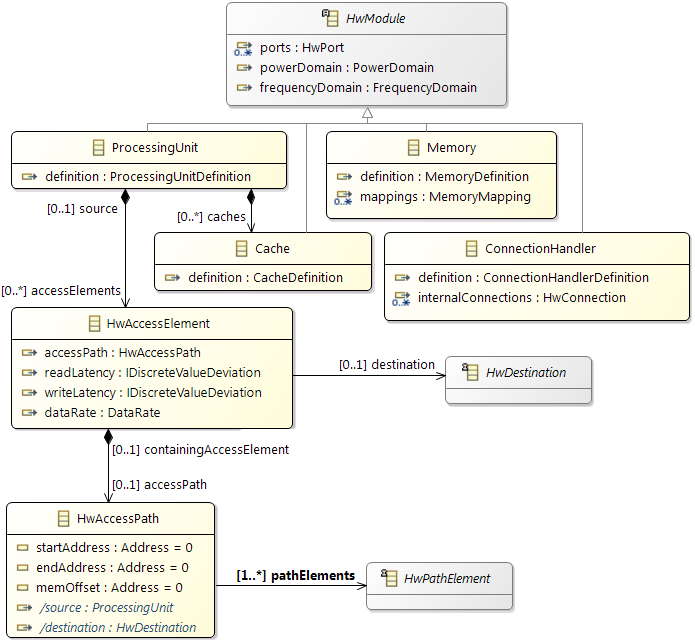

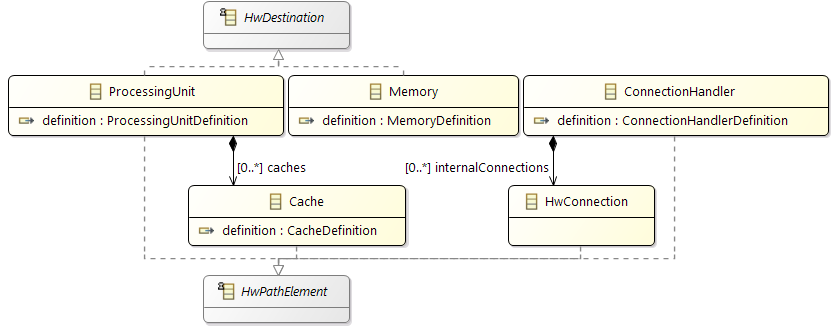

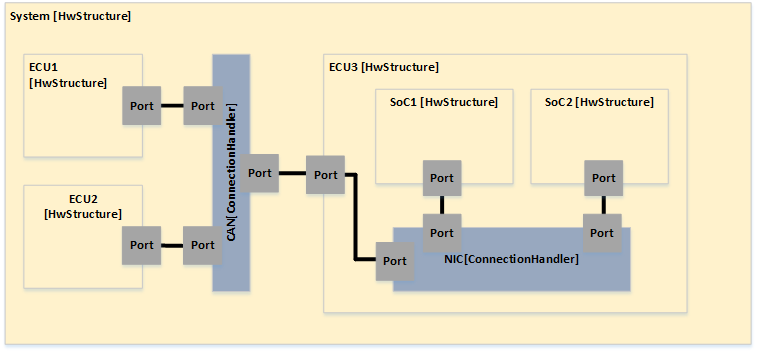

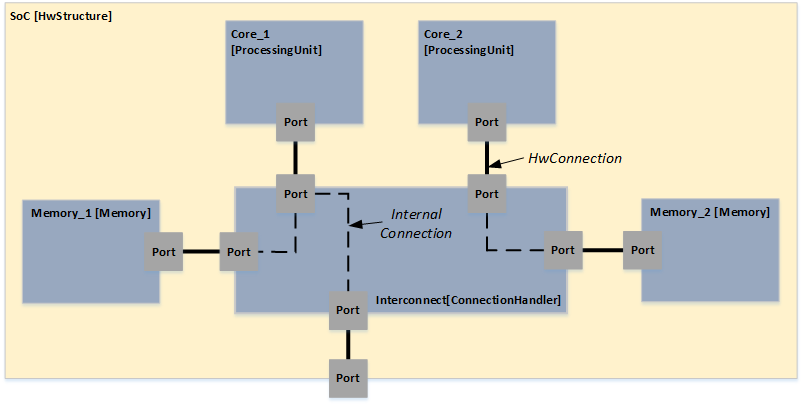

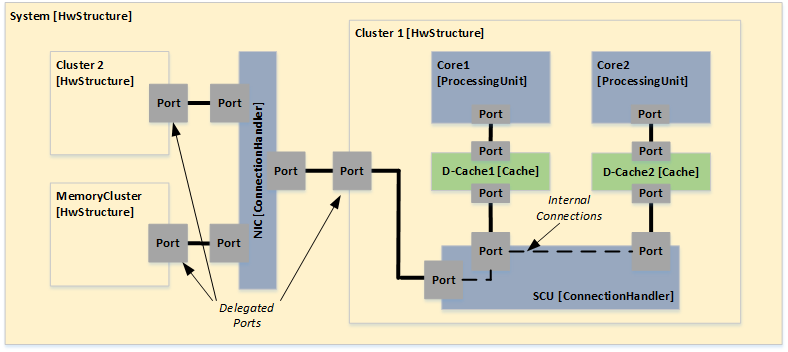

General Hardware Model Overview

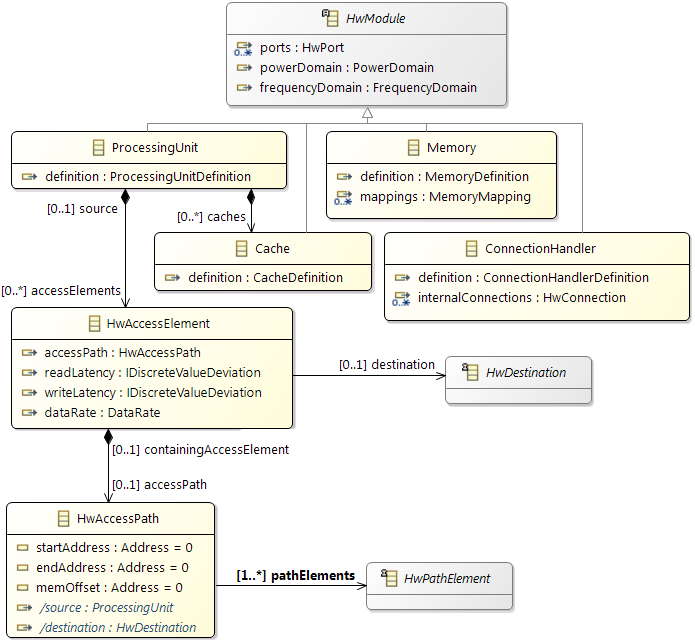

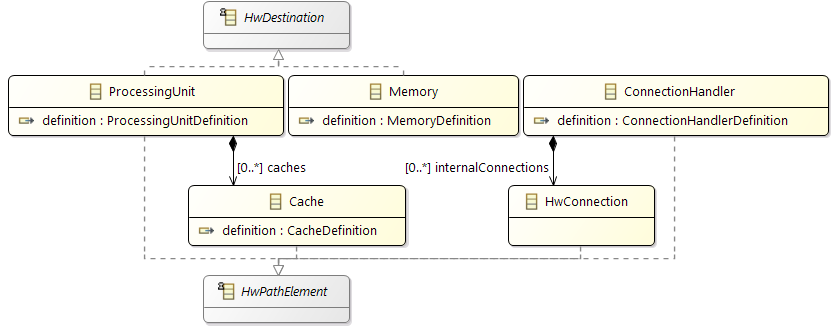

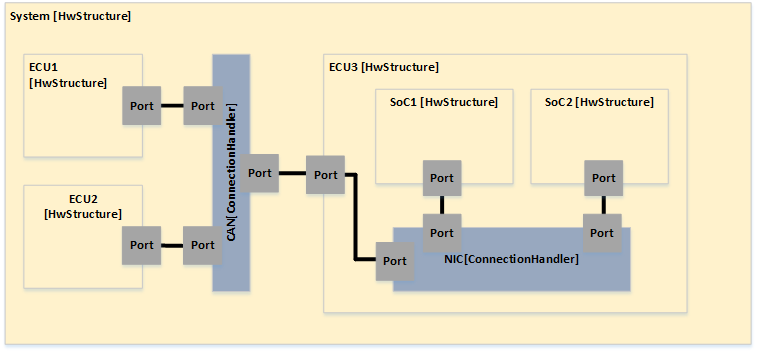

The design of the new hardware model is focusing on flexibility and variety to cover different kind of designs to cope with future extensions, and also to support different levels of abstraction. To reduce the complexity of the meta model for representing modern hardware architectures, as less elements as possible are introduced. For example, dependent of the abstraction level, a component called

ConnectionHandler can express different kind of connection elements, e.g. a crossbar within a SoC or a CAN bus within an E/E-architecture. A simplified overview of the meta model to specify hardware as a model is shown below. The components

ConnectionHandler, ProcessingUnit, Memory and

Cache are referred in the following as basic components.

Class diagram of the hardware model

The root element of a hardware model is always the

HwModel class that contains all domains (power and frequency), definitions, and hardware features of the different component definitions. The hierarchy within the model is represented by the

HwStructure class, with the ability to contain further

HwStructure elements. Therewith arbitrary levels of hierarchy could be expressed. Red and blue classes in the figure are the definitions and the main components of a system like a memory or a core.

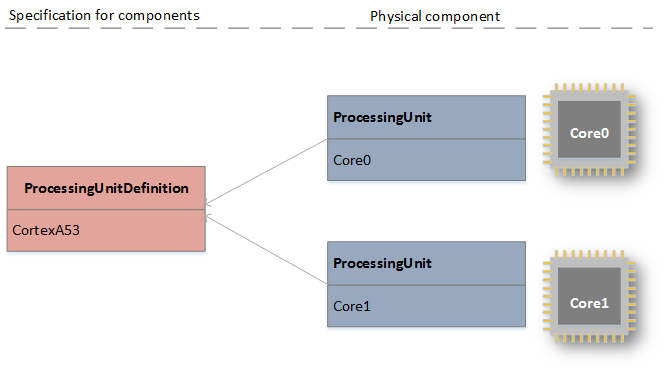

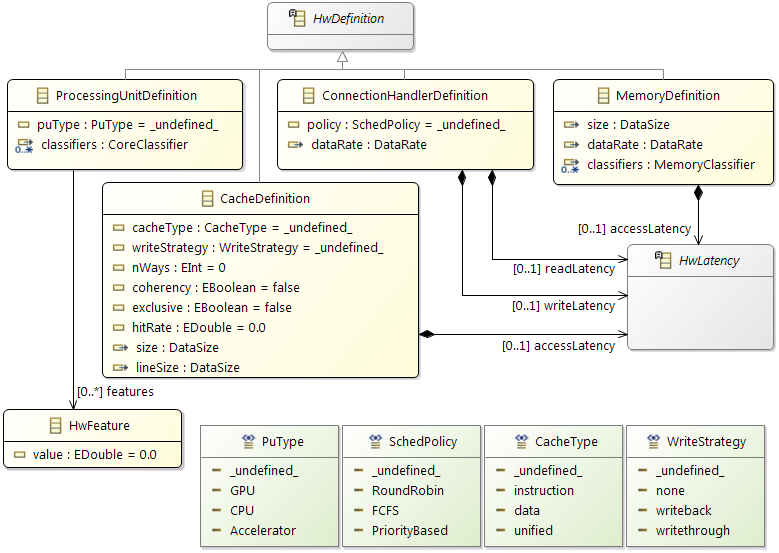

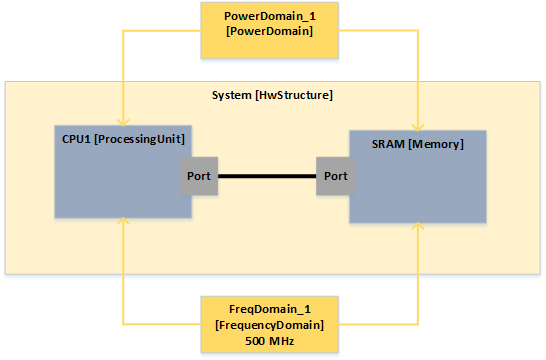

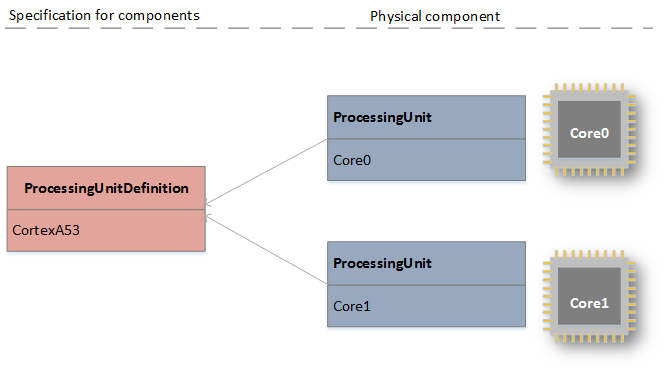

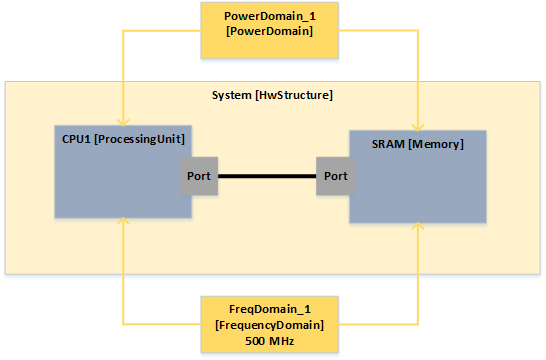

The next figure shows the modeling of a processor. The

ProcessingUnitDefiniton, which is created once, specifies a processing unit with general information (which can be a CPU, GPU, DSP or any kind of hardware accelerator). Using a definition that may be re-used supports quick modeling for multiple homogeneous components within a heterogeneous architecture.

ProcessingUnits then represent the physical instances in the hardware model, referencing the

ProcessingUnitDefiniton for generic information, supplemented only with instance specific information like the

FrequencyDomain.

Link between definitions and module instances (physical components)

Yellow represents the power and frequency domains that are always created at the top level of the hardware model. It is possible to model different frequency or voltage values, e.g., when it is possible to set a systems into a power safe mode. All components that reference the domain are then supplied with the corresponding value of the domain.

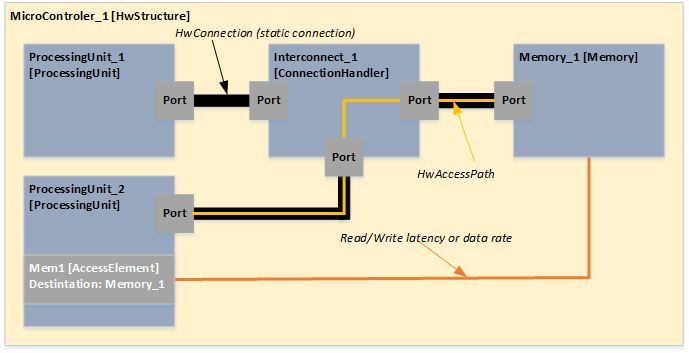

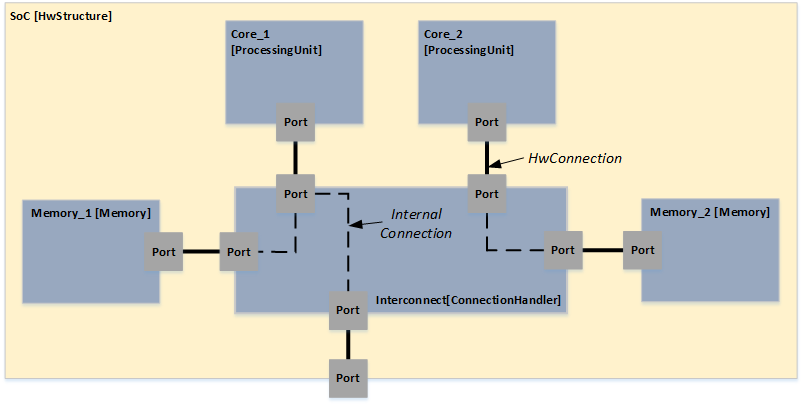

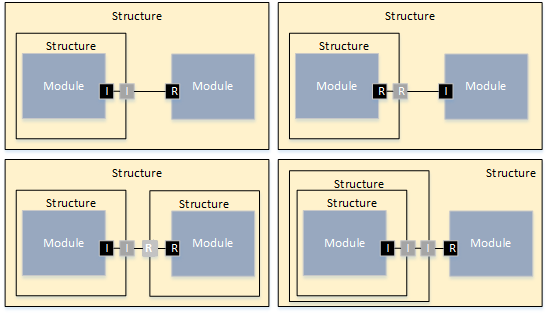

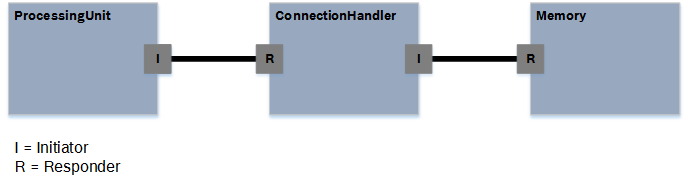

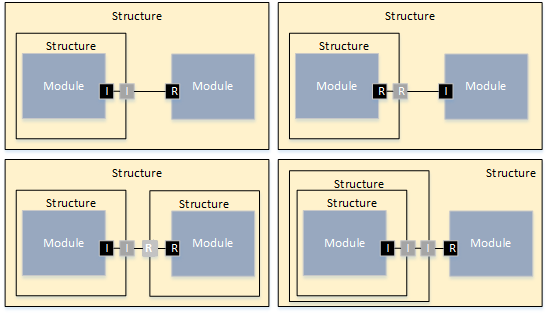

All the green elements in the figure are related to communication (together with the blue base component

ConnectionHandler). Green modeling elements represent ports, static connections, and the access elements for the

ProcessingUnits. These

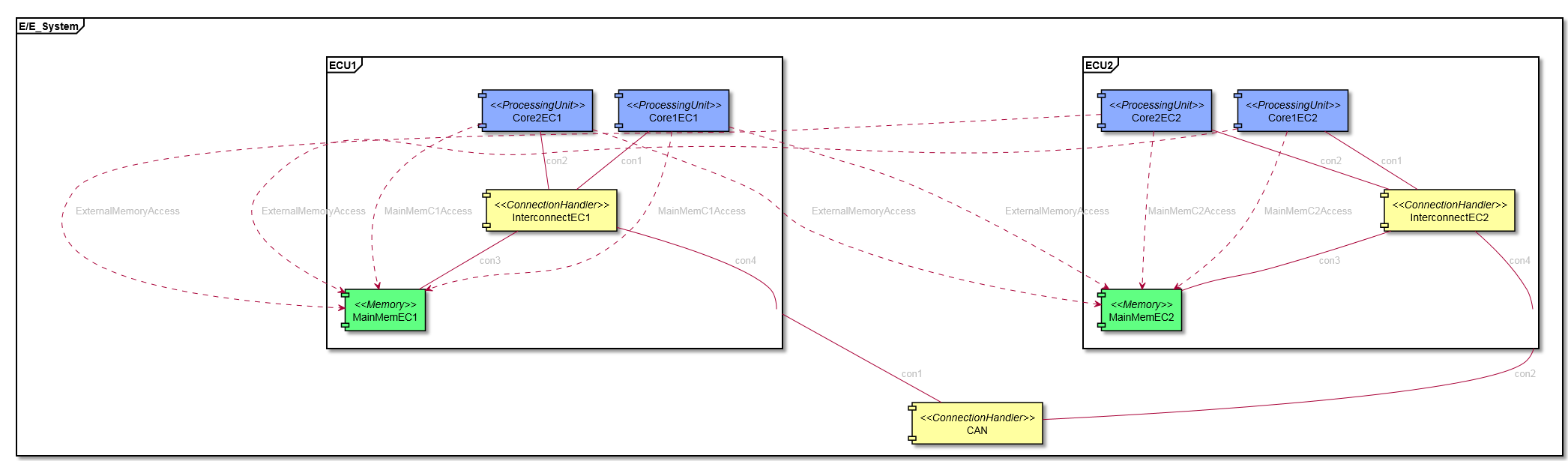

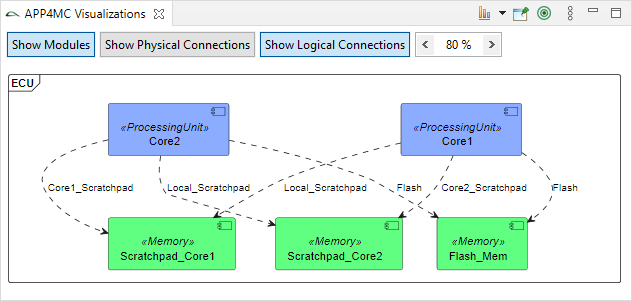

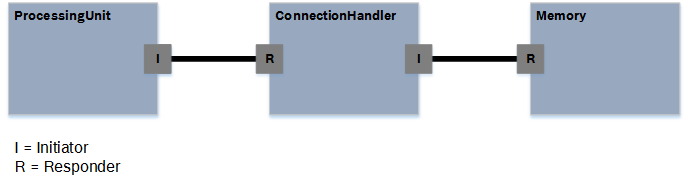

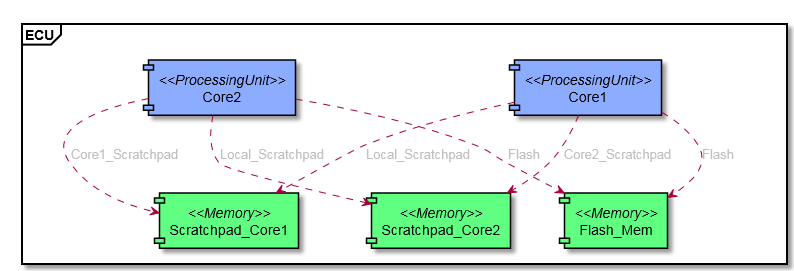

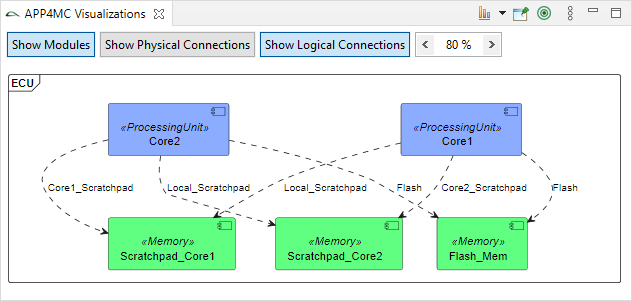

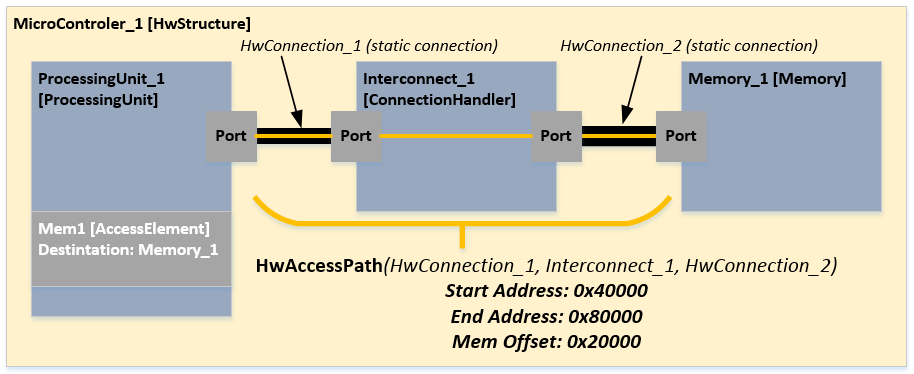

ProcessingUnits are the master modules in the hardware model. The following example shows two

ProcessingUnits that are connected via a

ConnectionHandler to a

Memory. There are two different possibilities to specify the access paths for

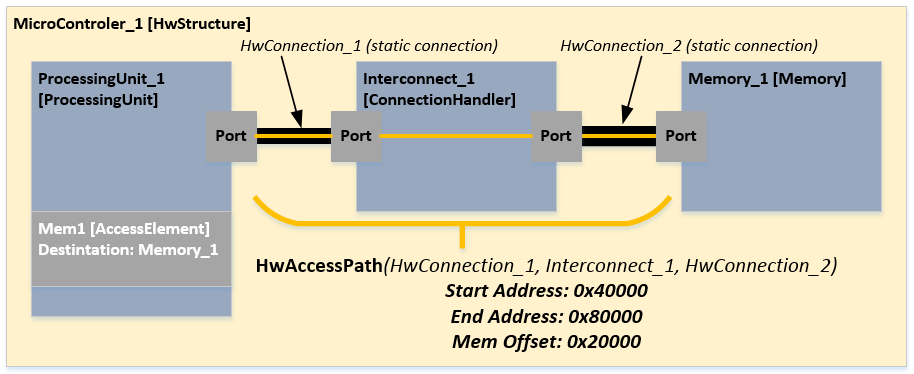

ProcessingUnits like it is shown for ProcessingUnit_2 in the next figure. Every time a

HwAccessElement is necessary to assign the destination e.g. a

Memory component. This

HwAccessElement can contain a latency or a data rate dependent on the use case. The second possibility is to create a

HwAccessPath within the

HwAccessElement which describes the detailed path to the destination by referencing all the

HwConnections and

ConnectionHandlers. It is even possible to reference a cache component within the

HwAccessPath to express if the access is cached or non-cached. Furthermore it is possible to set addresses for these

HwAccessPath to represent the whole address space of a

ProcessingUnit. A typical approach would be starting with just latency or data rates for the communication between components and enhance the model over time to by switching to the

HwAccessPaths.

Access elements in the hardware model

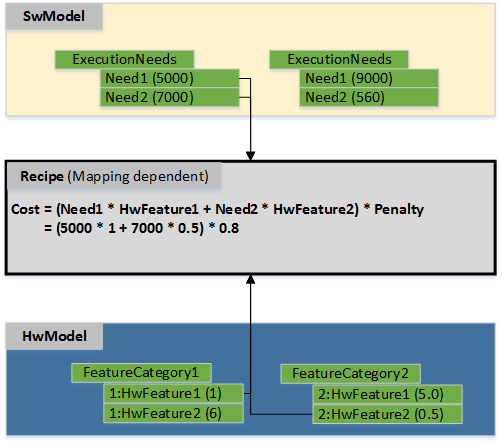

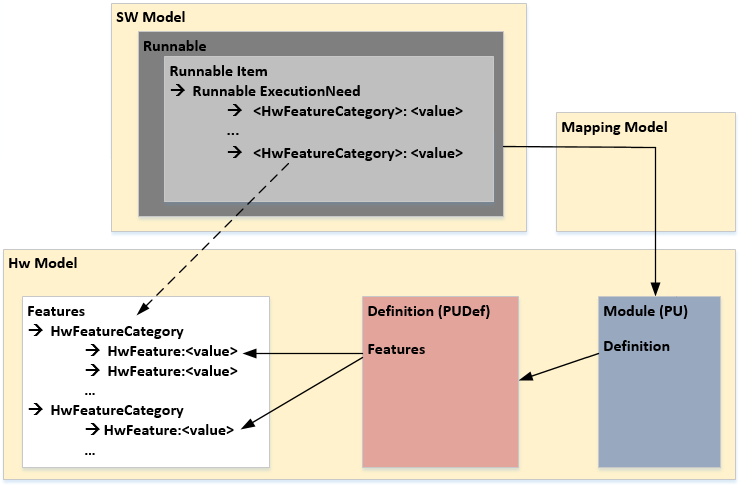

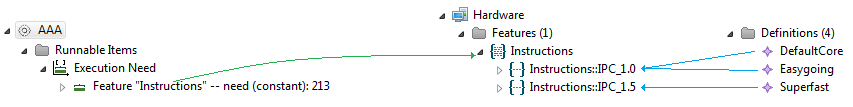

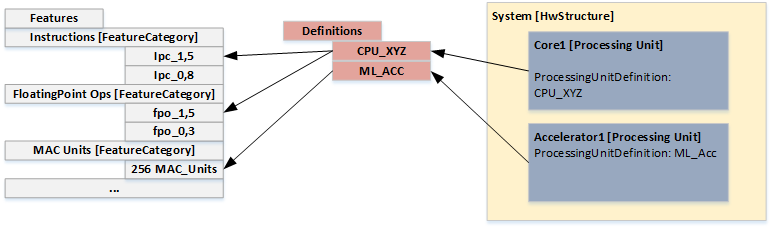

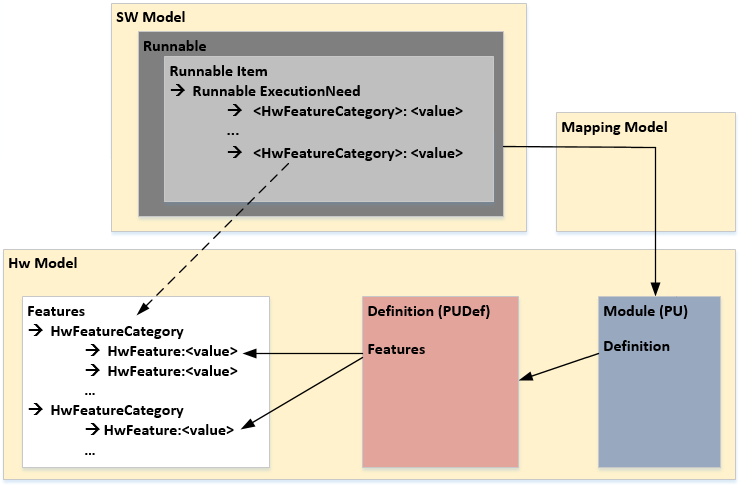

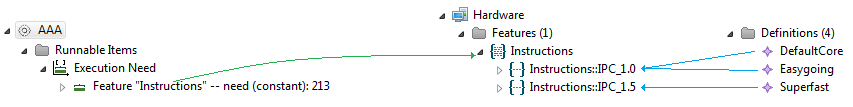

Current implementation with features and the connection to the SW Model

In the previous chapter the long time goal of the feature and recipe concept is explained. As an intermediate step before introducing the recipes we decided to connect the HwModel and SwModel by referencing to the name of the hardware

FeatureCategories from the

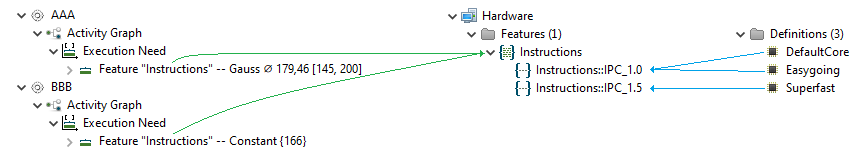

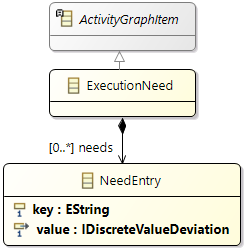

ExecutionNeed element in a Runnable. The following figure shows this connection between the grey Runnable item block and the white Features block. Due to the mapping (Task or Runnable to ProcessingUnit) the corresponding feature value can be extracted out of the ProcessingUnitDefinition.

An example based on the old hardware model is the "instruction per cycle" value (IPC). To model an IPC with the new approach a

HwFeatureCategory is created with the name

Instructions. Inside this category multiple IPC values can be created for different

ProcessingUnitDefinitions.

Note: In version 0.9.0 to 0.9.2 exists a default ExecutionNeed Instructions together with a the HwFeatre IPC the cycles can be calculated by dividing the Instructions by the IPC value.

Execution needs example

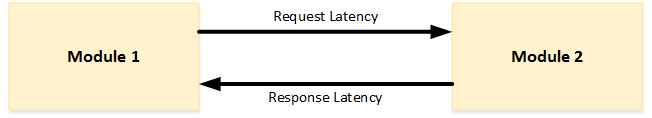

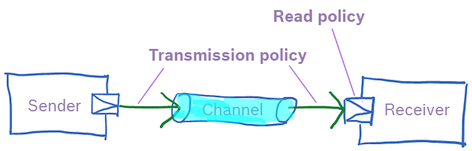

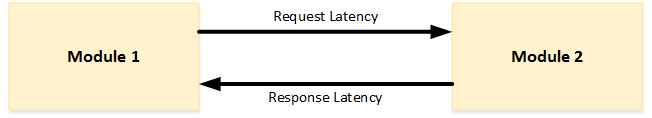

Interpretation of latencies in the model

In the model read and write access latencies are used. An alternative which is usually used in specifications or by measurements are request and response latencies. The following figure shows a typical communication between two components. The interpretation of a read and write latency for example at

ConnectionHandlers is the following:

-

readLatency = requestLatency + response Latency

-

writeLatency = requestLatency

The access latency of a

Memory component is always added to the read or write latency from the communication elements, independent if its one latency from an

HwAccessElement or multiple latencies from a

HwAccessPath.

As example in case of using only read and write latencies:

-

totalReadLatency = readLatency (HwAccessElement) + accessLatency (Memory)

-

totalWriteLatency = writeLatency (HwAccessElement) + accessLatency (Memory)

An example in case of using an access element with a hardware access path:

n = Number of path elements

-

totalReadLatency = Sum 1..n ( readLatency(p) ) + accessLatency (Memory)

-

totalWriteLatency = Sum 1..n ( writeLatency(p) ) + accessLatency (Memory)

PathElements could be

Caches,

ConnectionHandlers and

HwConnections. In very special cases also a

ProcessingUnit can be a

PathElement the

ProcessingUnit has no direct effect on the latency. In case the user want to express a latency it has to be annotated as

HwFeature.

2.2.3 Software (development)

The AMALTHEA System Model can also be used in early phases of the development process when only limited information about the resulting software is available.

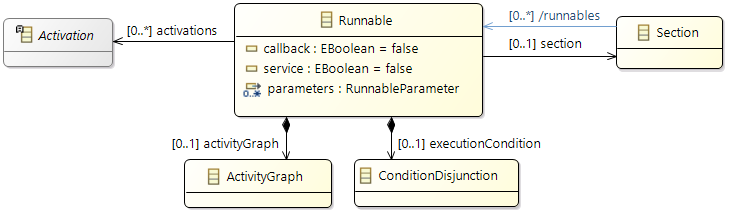

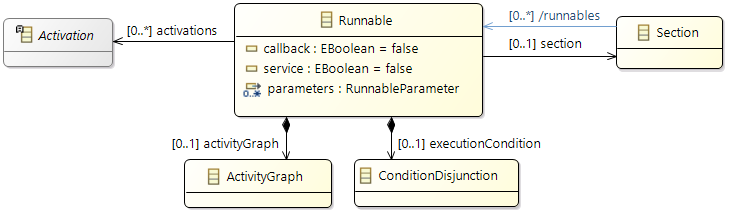

Runnables

The

Runnable element is the basic software unit that defines the behavior of the software in terms of runtime and communication. It can be described on different levels of abstraction:

- timing only (activation and runtime)

- including communication (in general)

- adding detailed activity graphs

To allow a more detailed simulation a description can also include statistical values like deviations or probabilities. This requires additional information that is typically derived from an already implemented function. The modeling of observed behavior is described in more detail in chapter

Software (runtime).

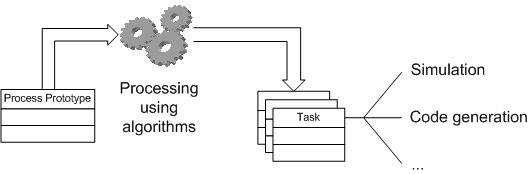

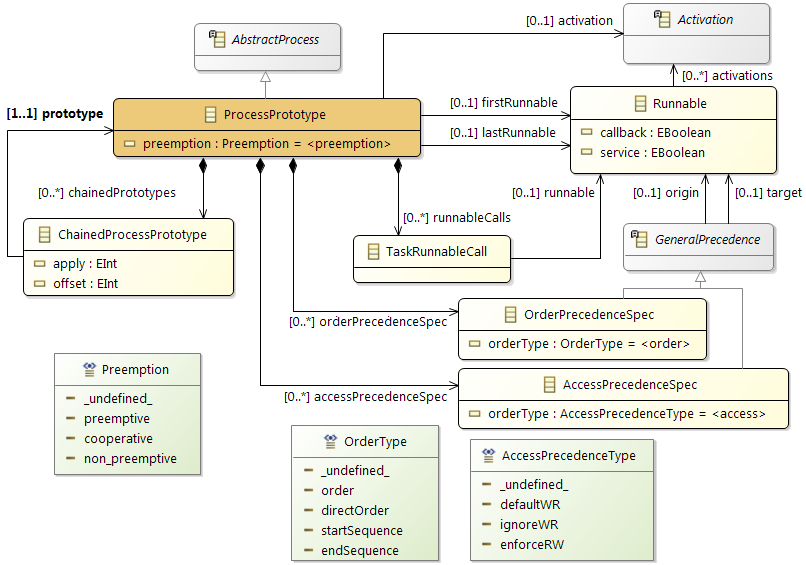

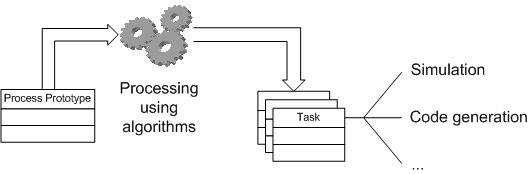

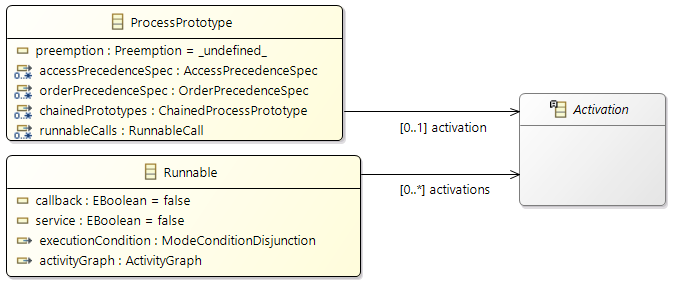

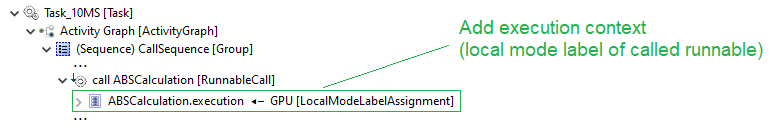

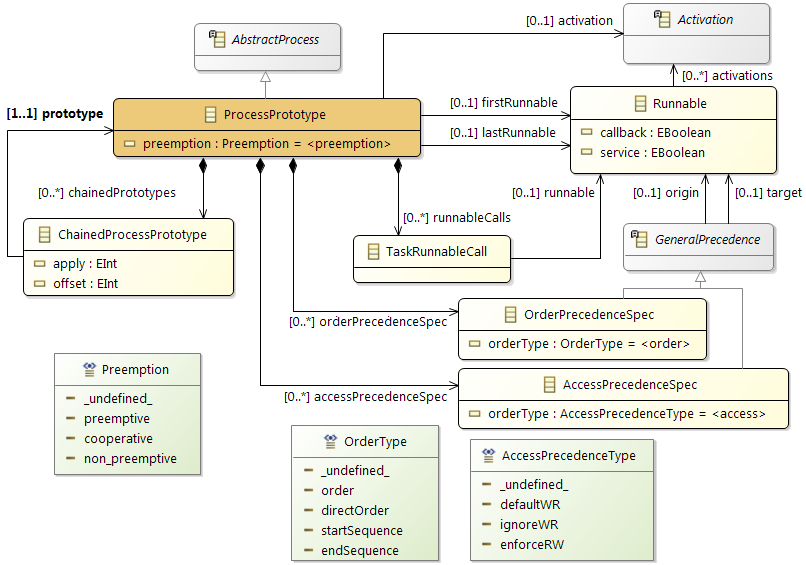

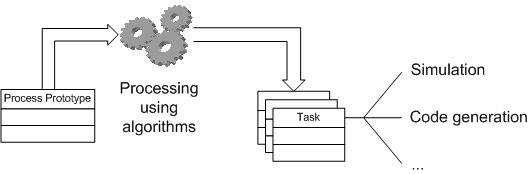

Process Prototypes

Process Prototypes are used to define the basic data of a task. This is another possibility to describe that a set of Runnables has a similar characteristic (e.g. they have the same periodic activation).

A prototype can then be processed and checked by different algorithms. Finally a partitioning algorithm generates (one or more) tasks that are the runtime equivalents of the prototype.

This processing can be guided by specifications that are provided by the function developers:

- The

Order Specification is a predefined execution order that has to be guaranteed.

- An

Access Specification defines exceptions from the standard write-before-read semantics.

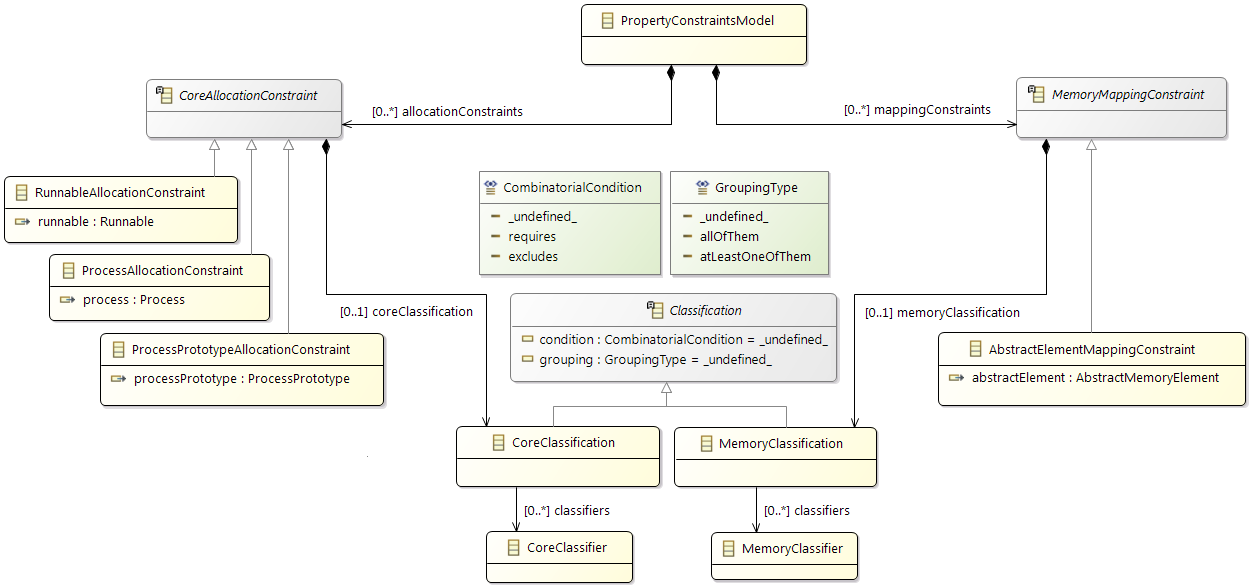

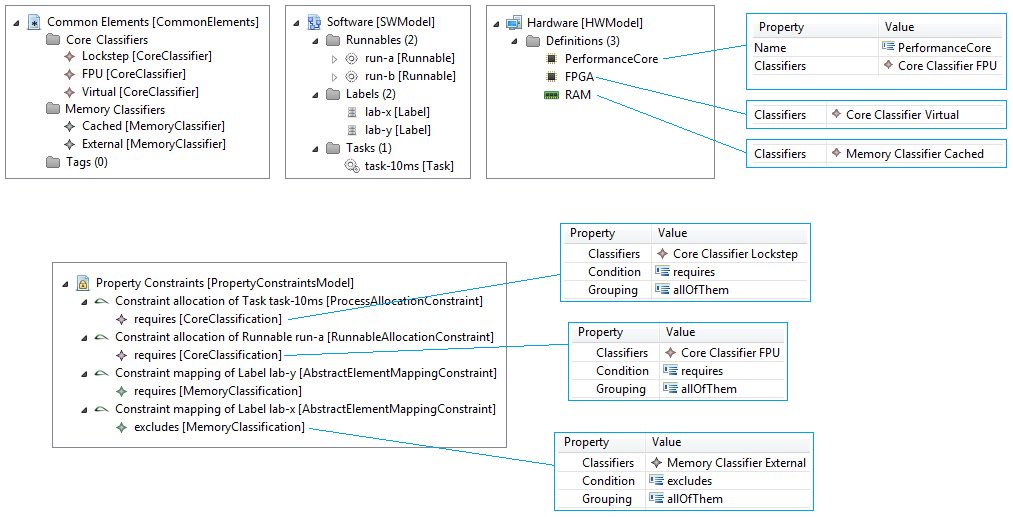

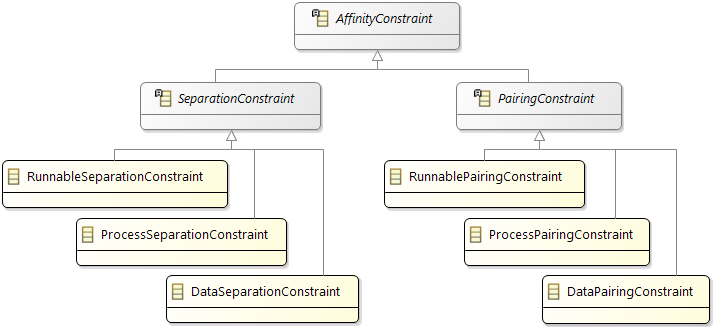

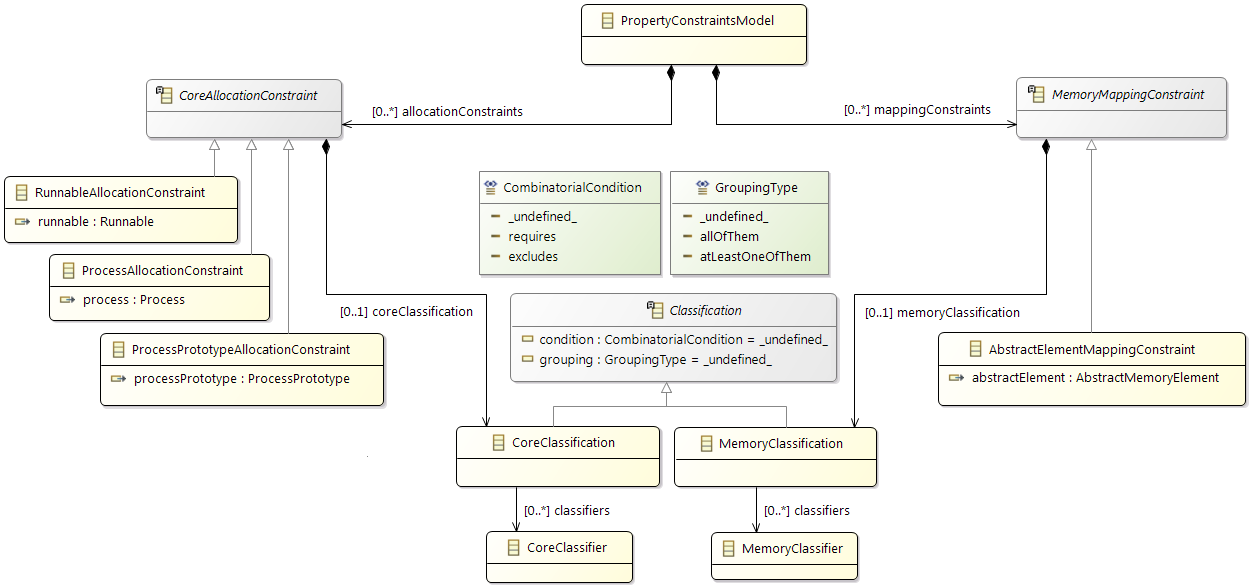

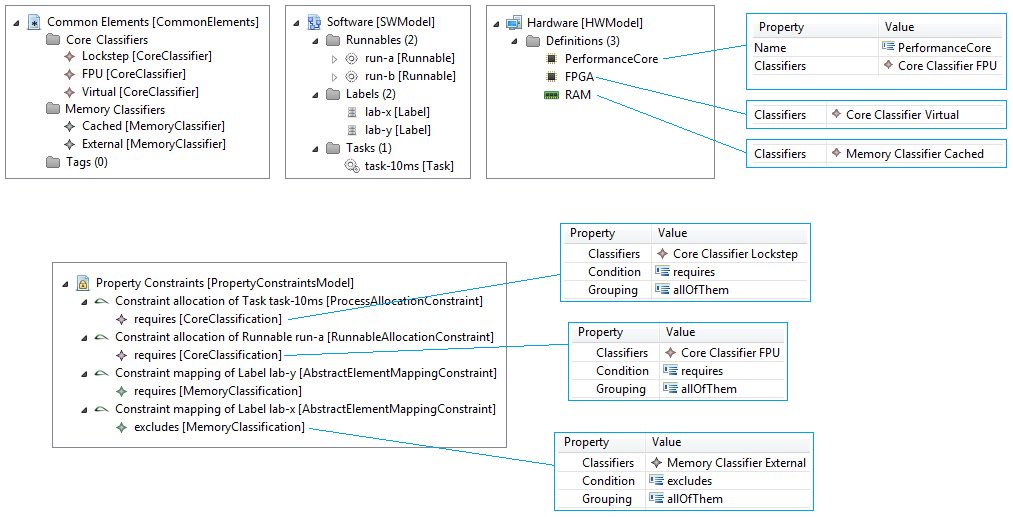

Constraints

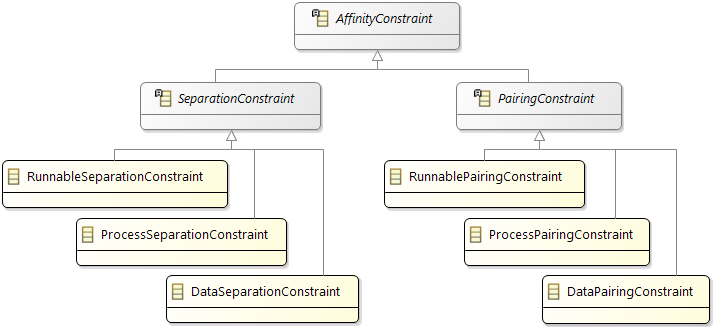

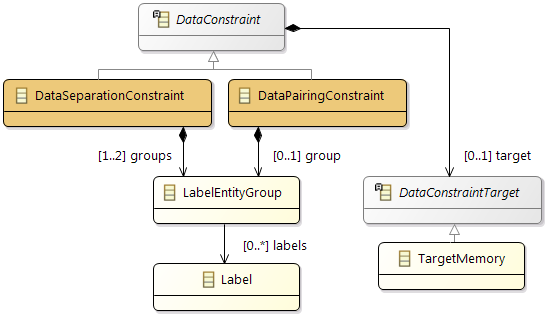

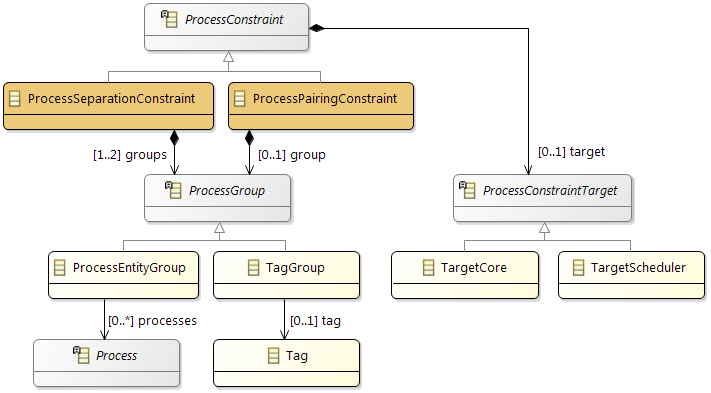

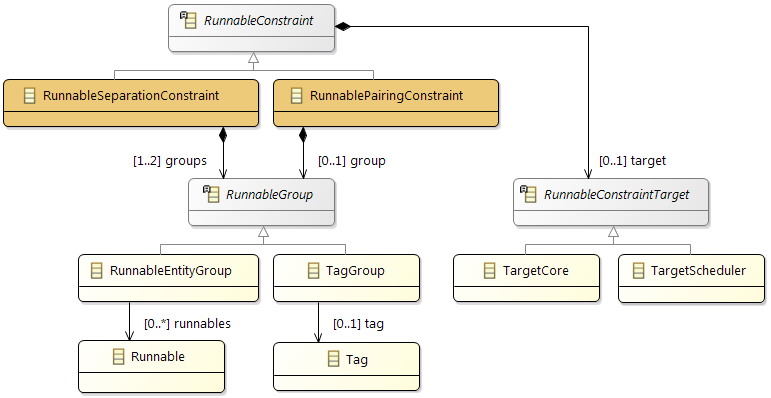

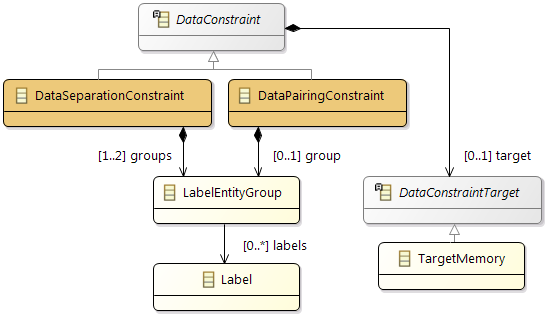

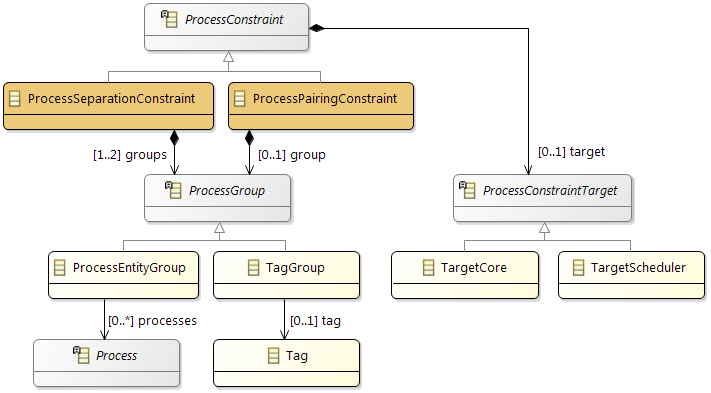

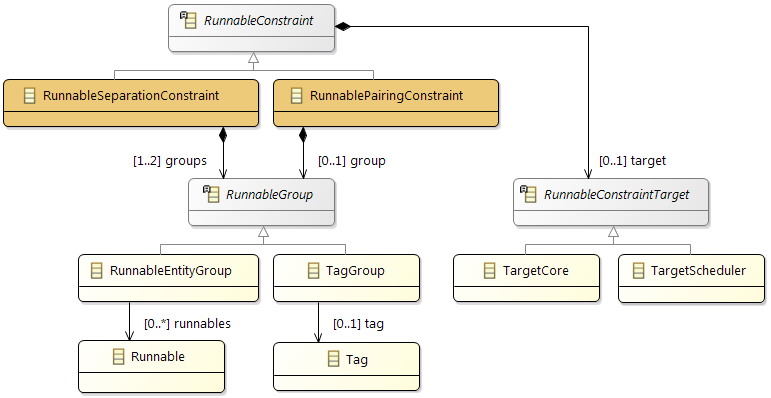

In addition the partitioning and mapping can be restricted by

Affinity Constraints that enforce the pairing or separation of software elements and by

Property Constraints that connect hardware capabilities and the corresponding software requirements.

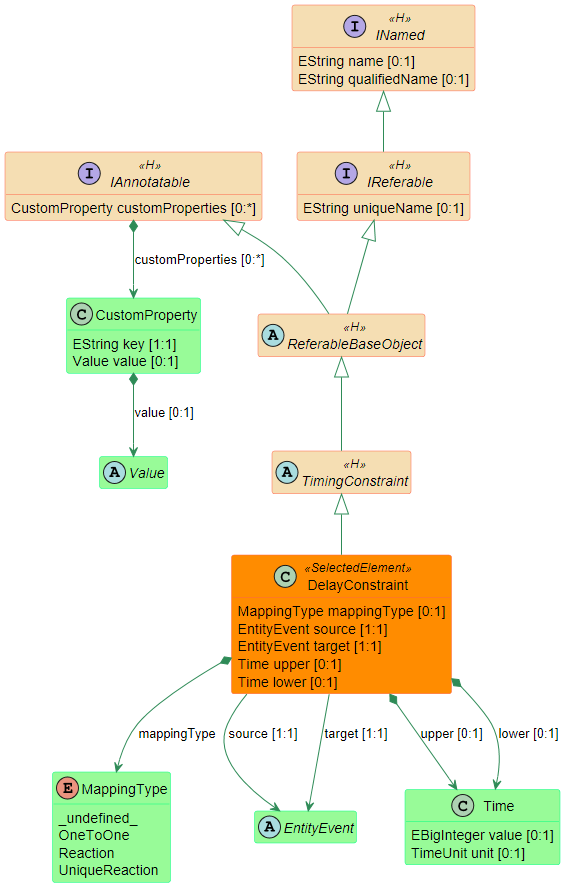

The

Timing Constraints will typically be used to check if the resulting system fulfills all the requirements.

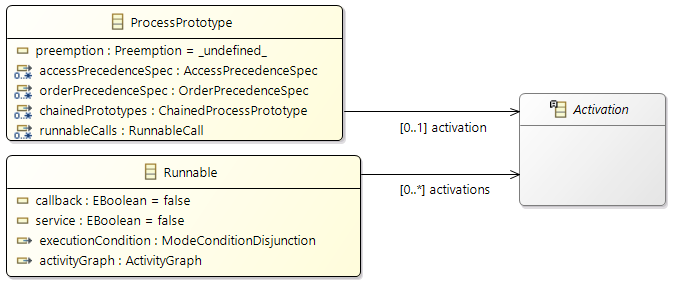

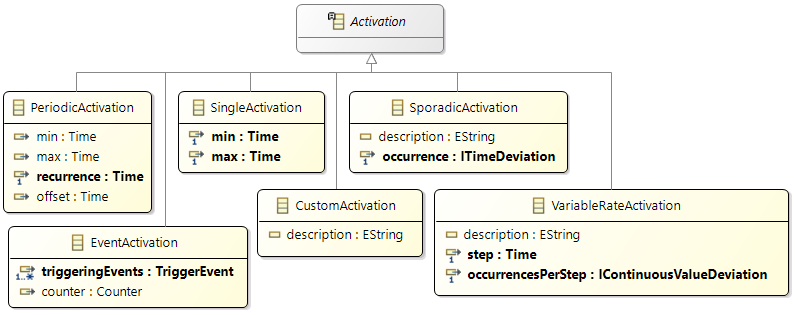

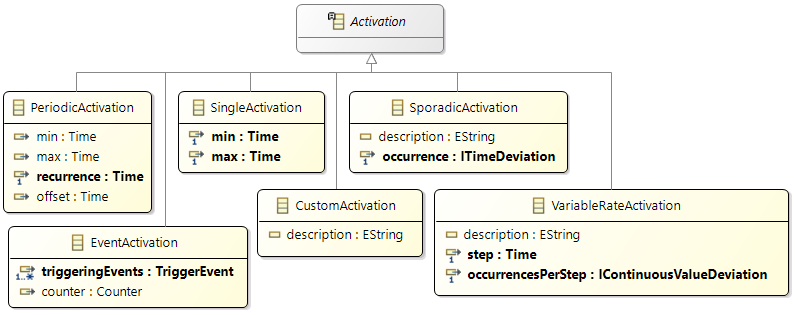

Activations

Activations are used to specify the intended activation behavior of

Runnables and

ProcessPrototypes. Typically they are defined before the creation of tasks (and the runnable to task mappings). So this is a way to cluster runnables and to document when the runnables should be executed.

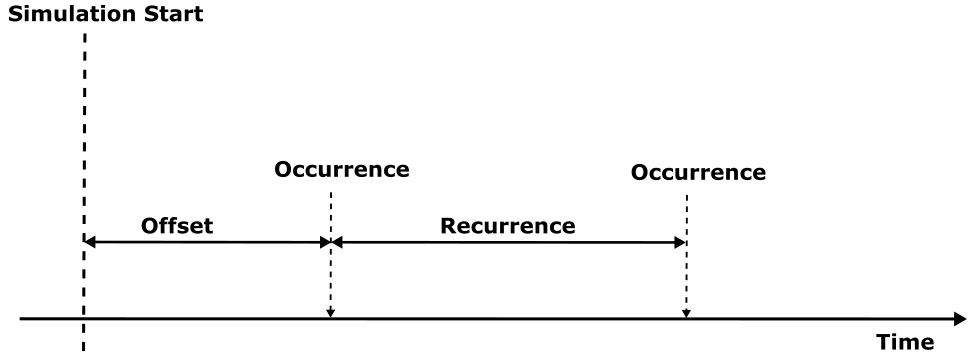

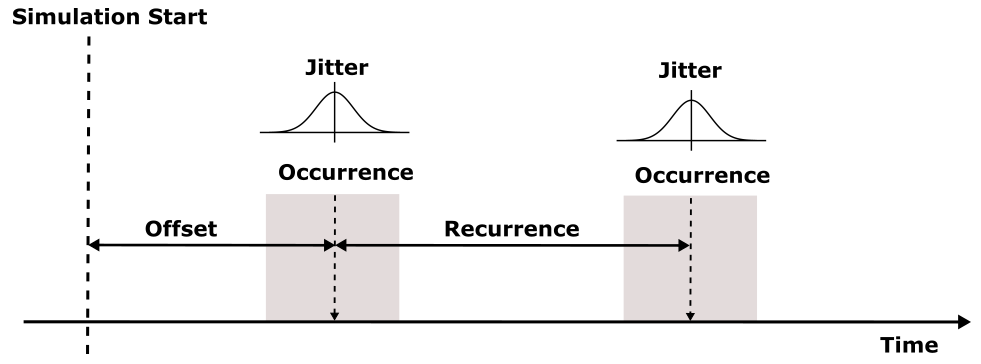

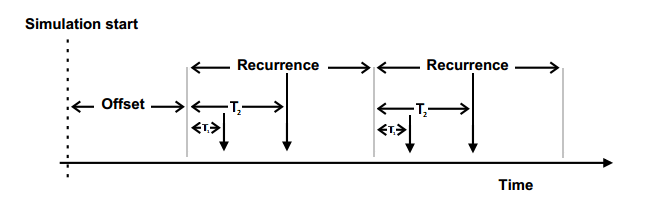

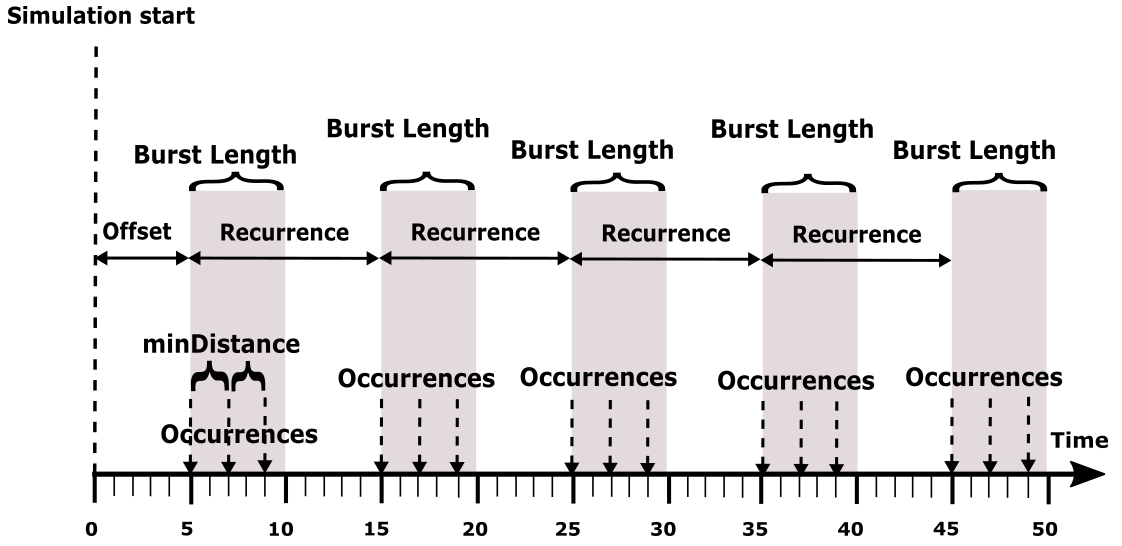

The following activation patterns can be distinguished:

- Single: single activation

- Periodic: periodic activation with a specific frequency

- Sporadic: recurring activation without following a specific pattern

- Event: activation triggered by a

TriggerEvent

- Custom: custom activation (free textual description)

To describe a specific (observed) behavior at runtime there are

Stimuli in the AMALTHEA model. They can be created based on the information of the specified activations.

2.2.4 Software (runtime)

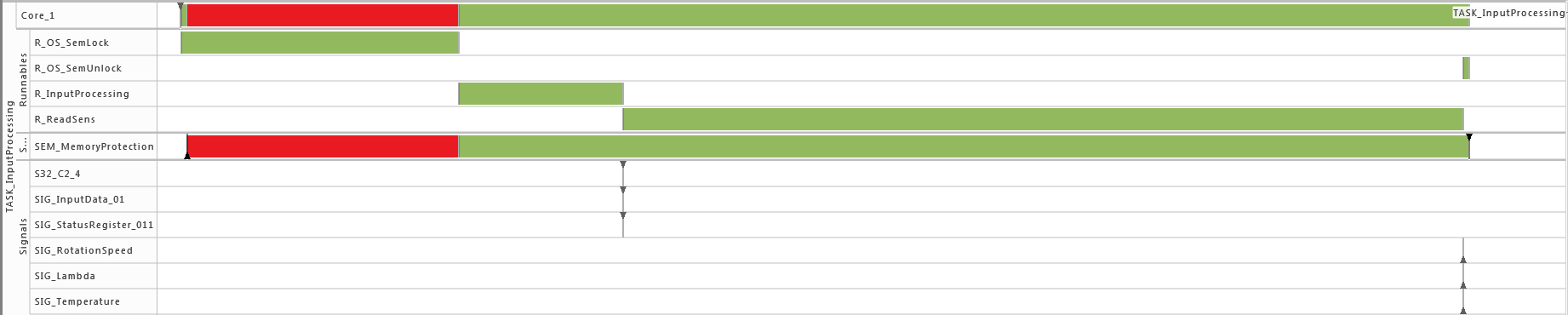

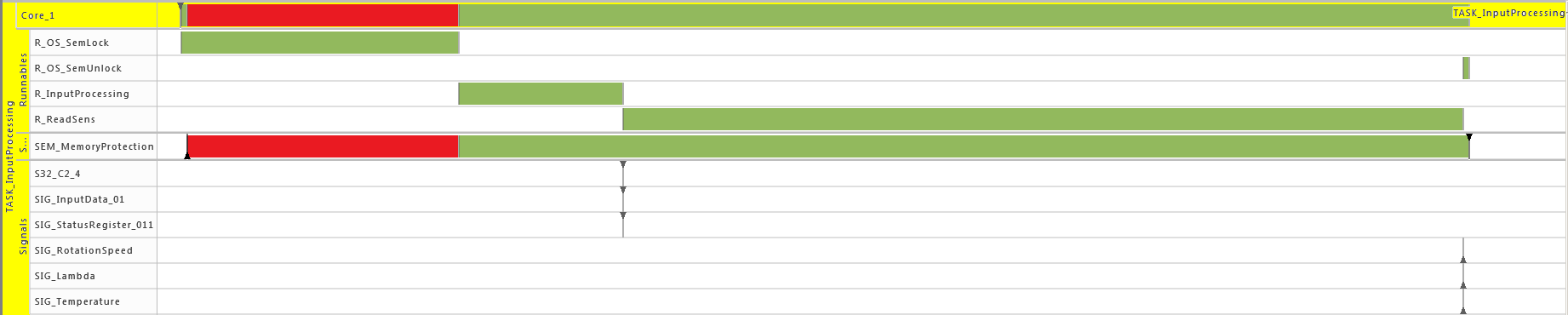

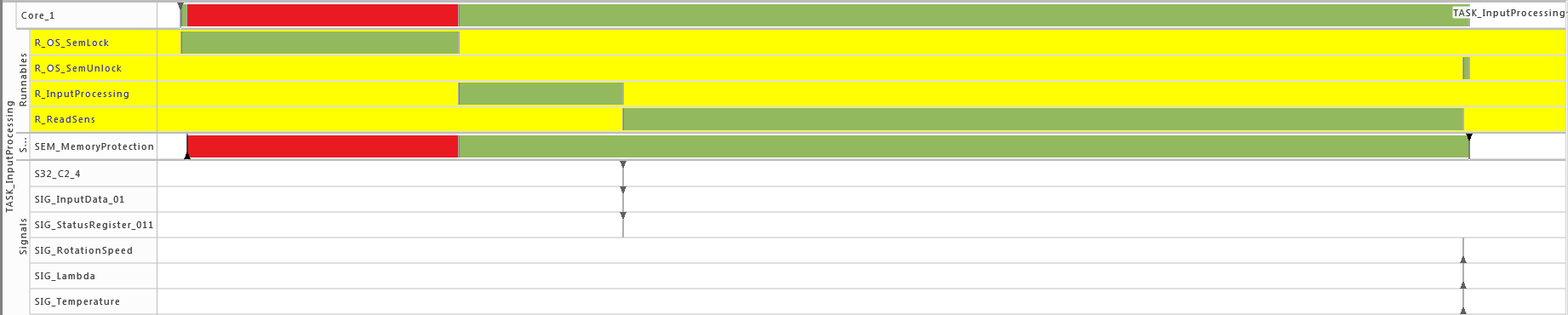

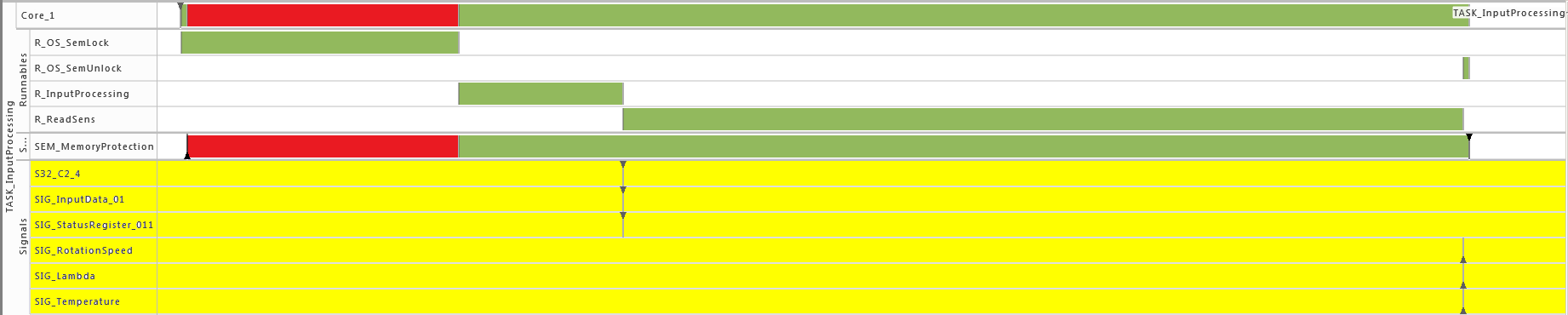

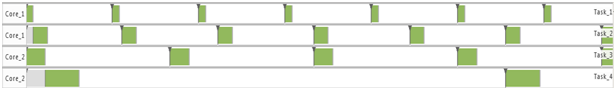

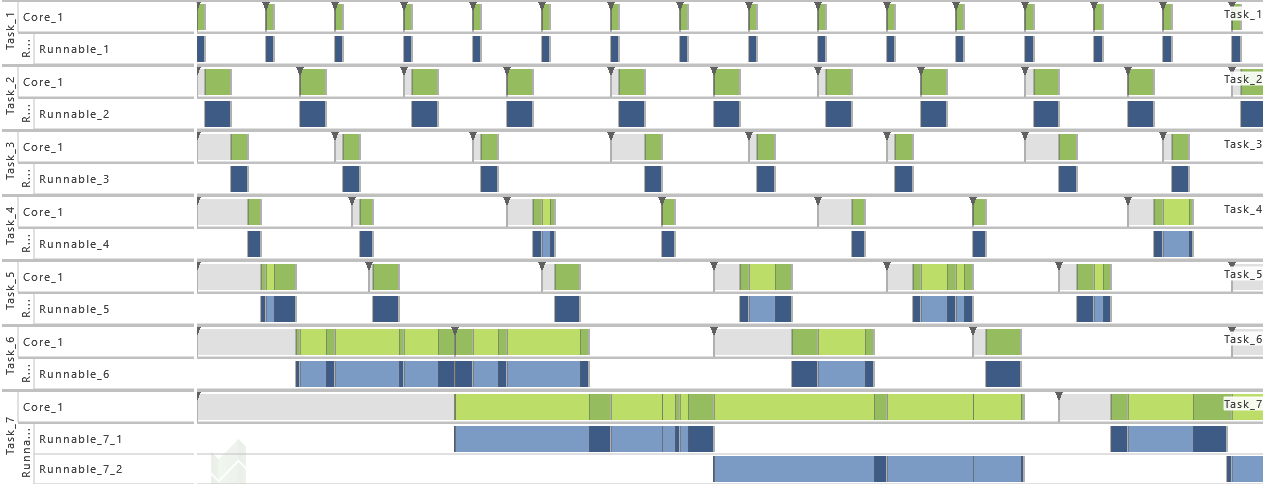

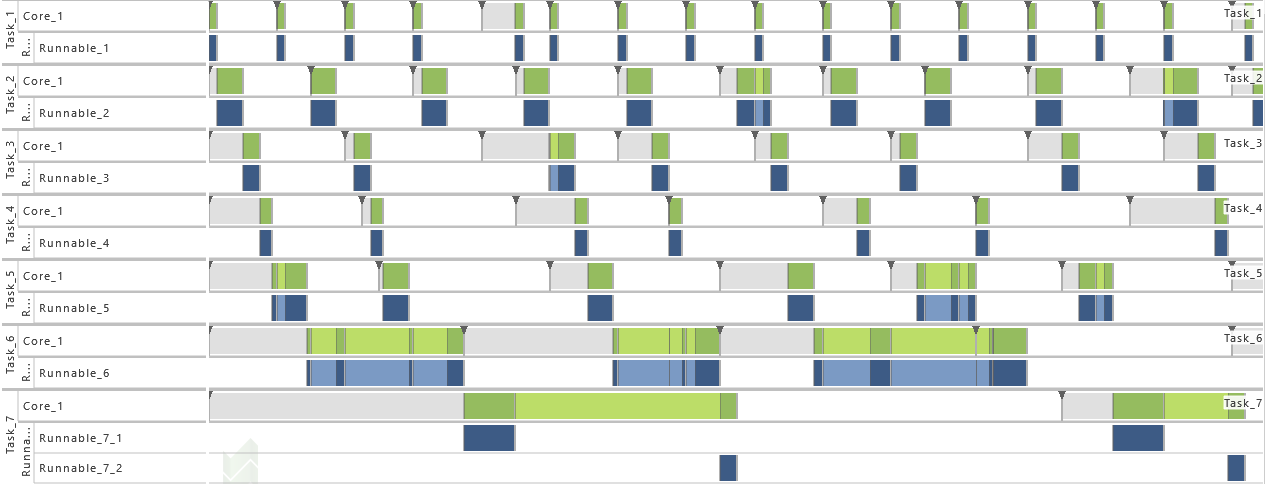

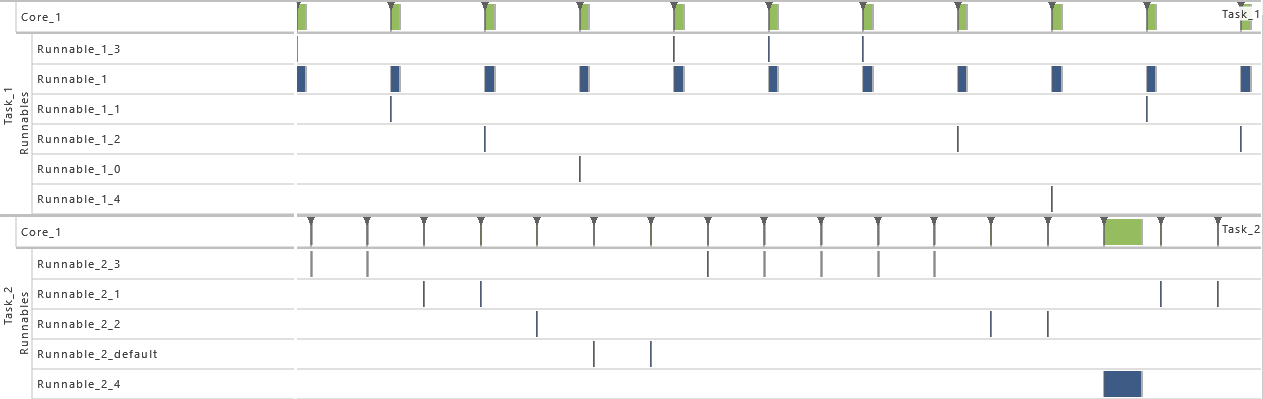

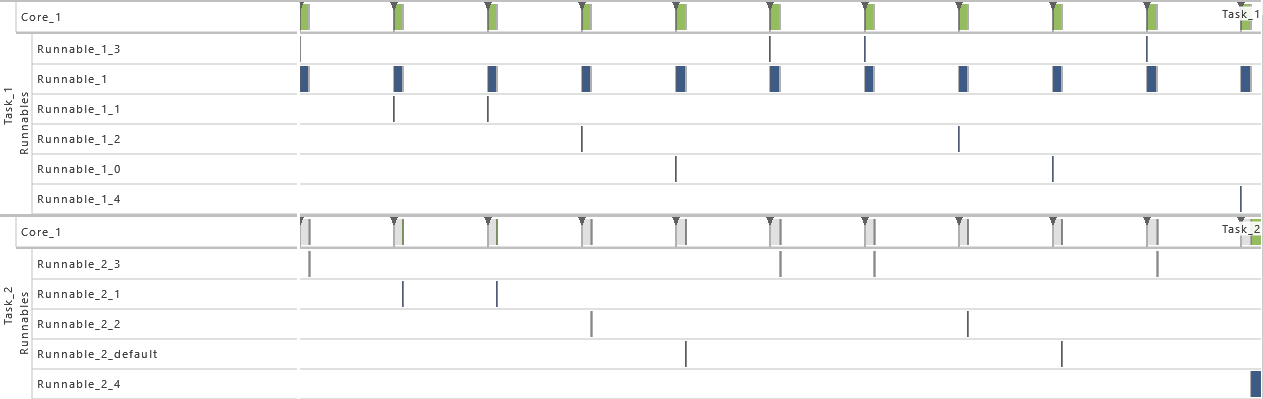

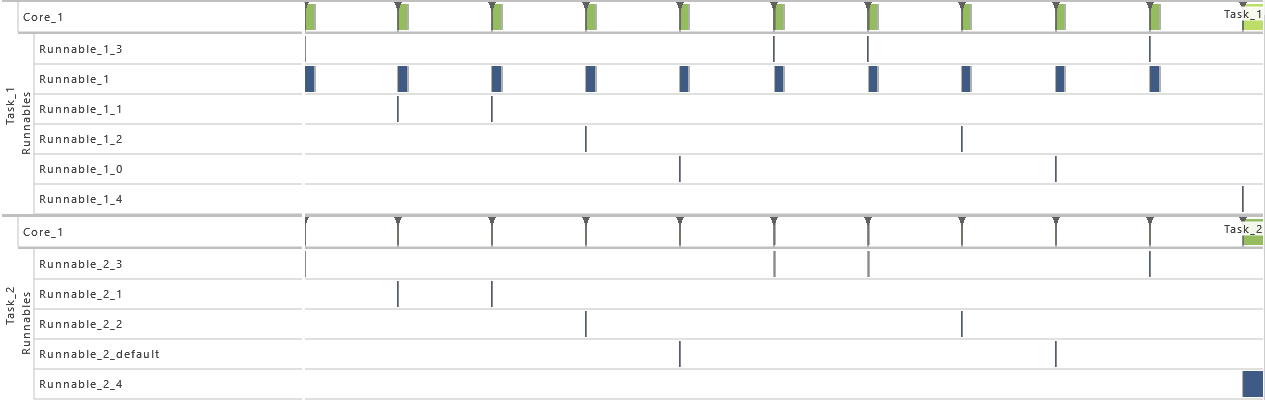

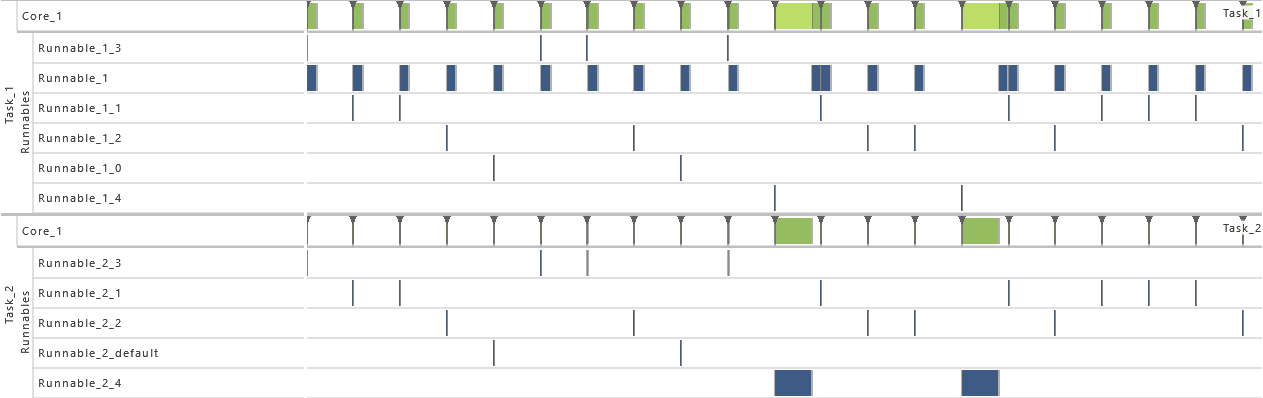

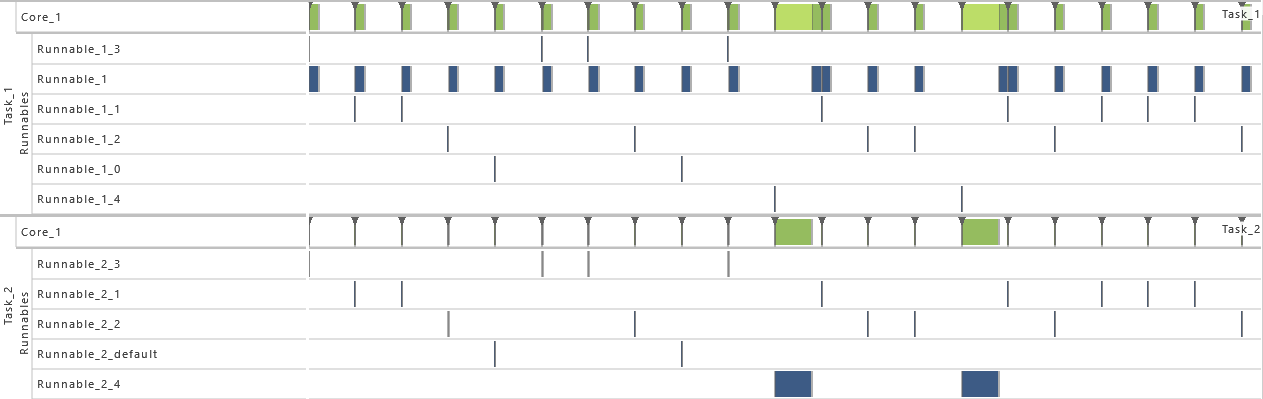

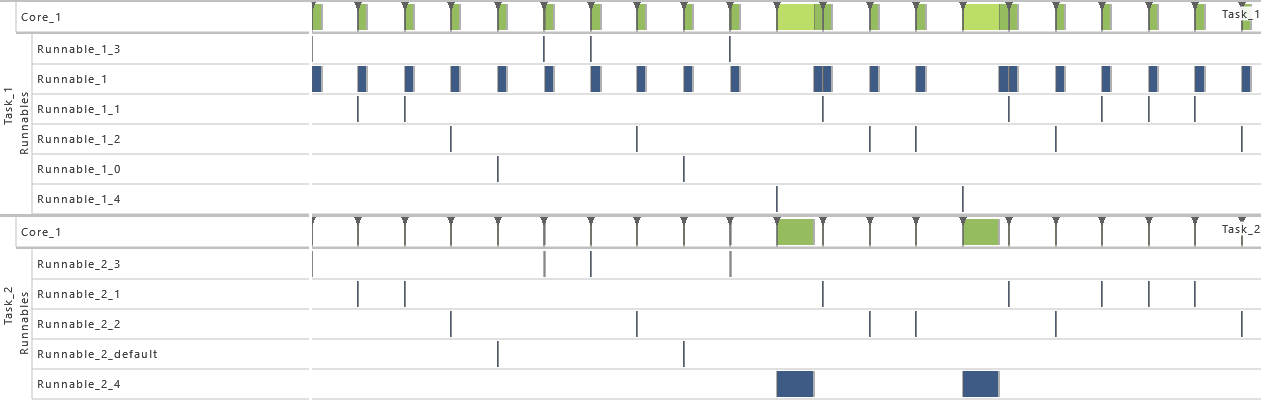

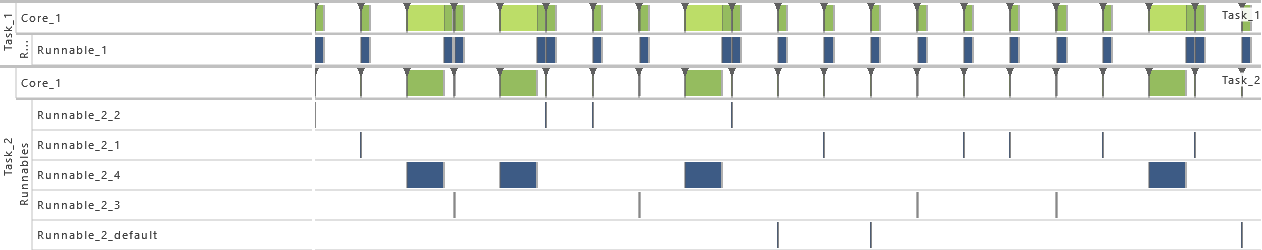

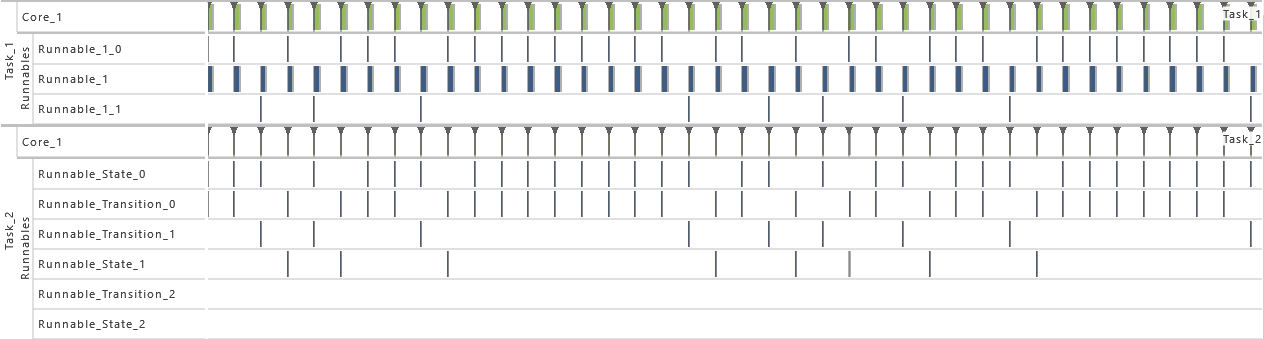

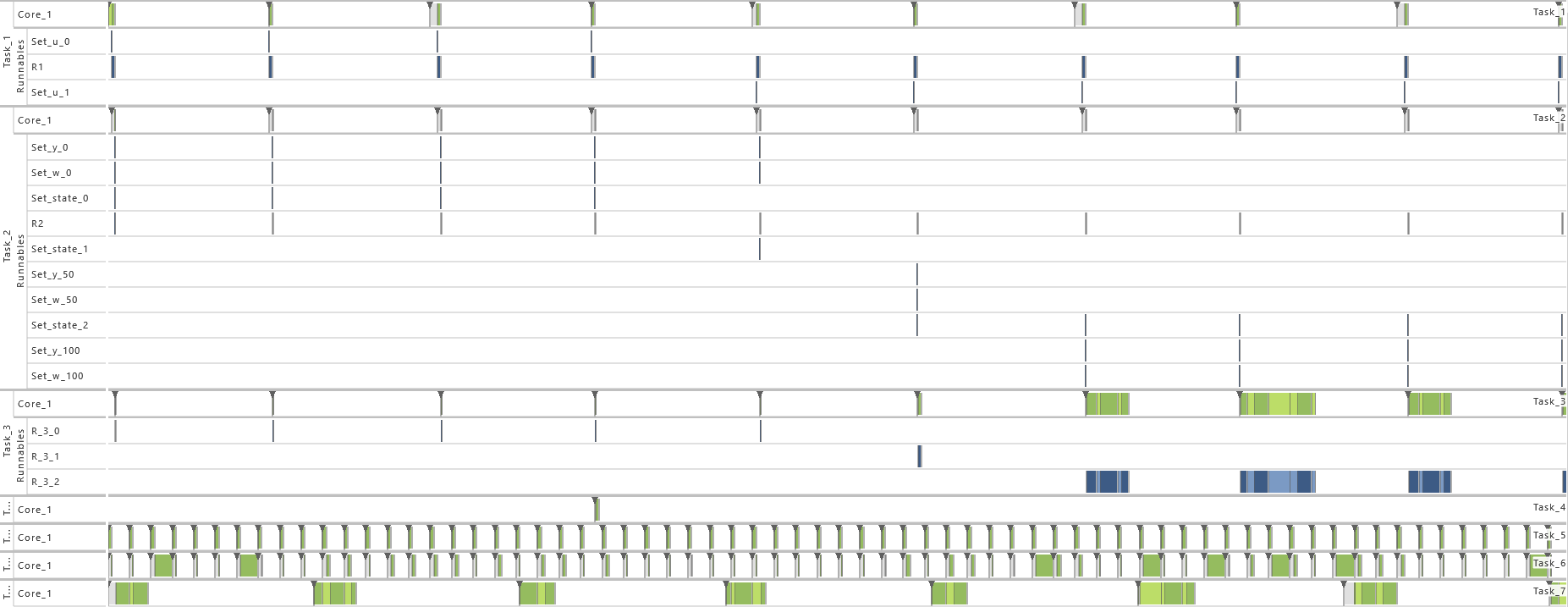

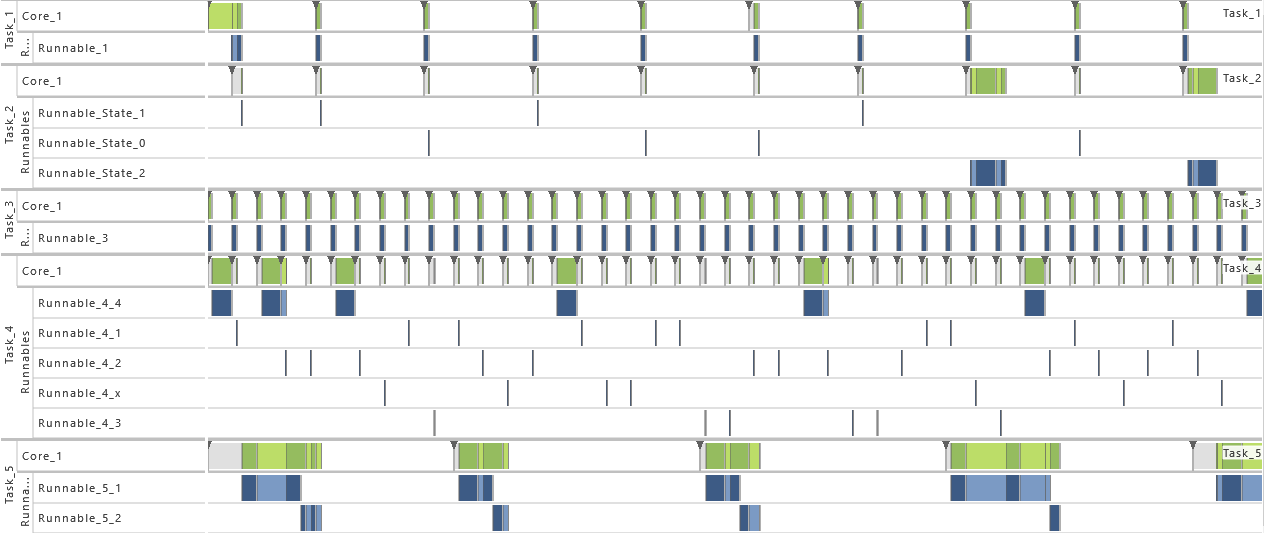

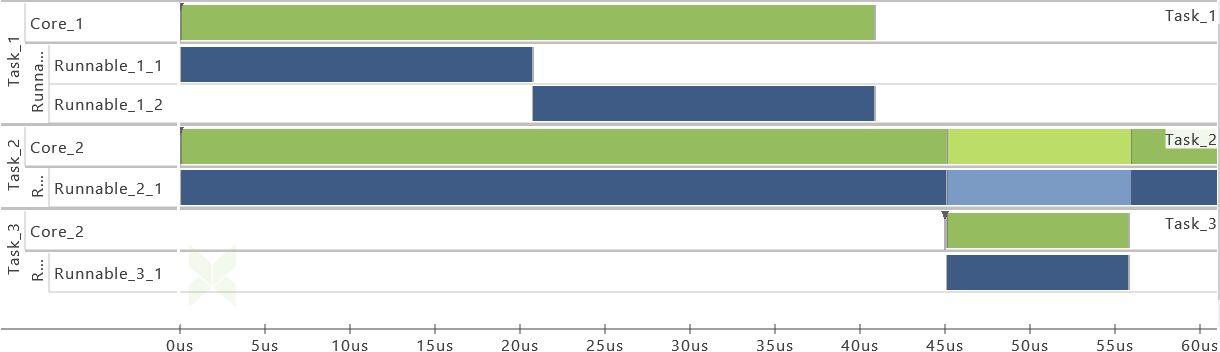

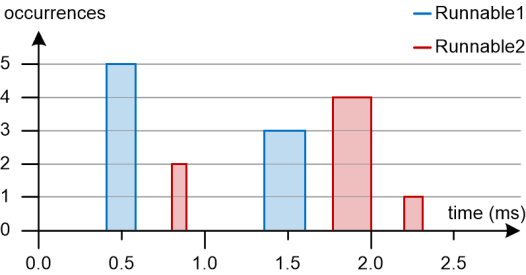

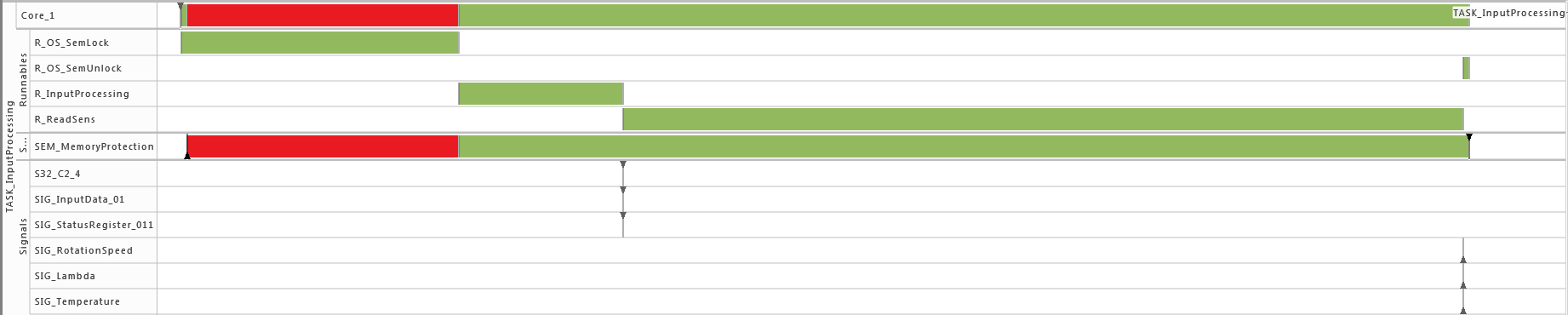

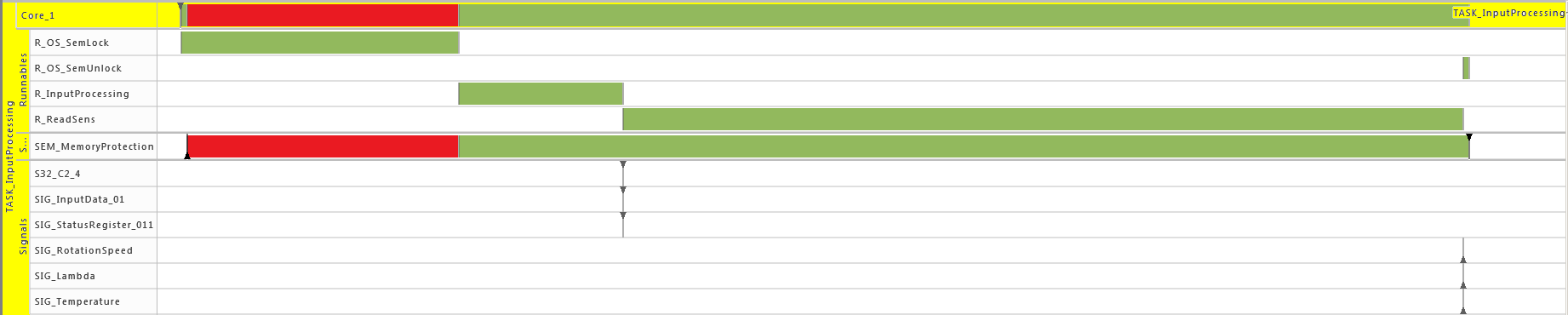

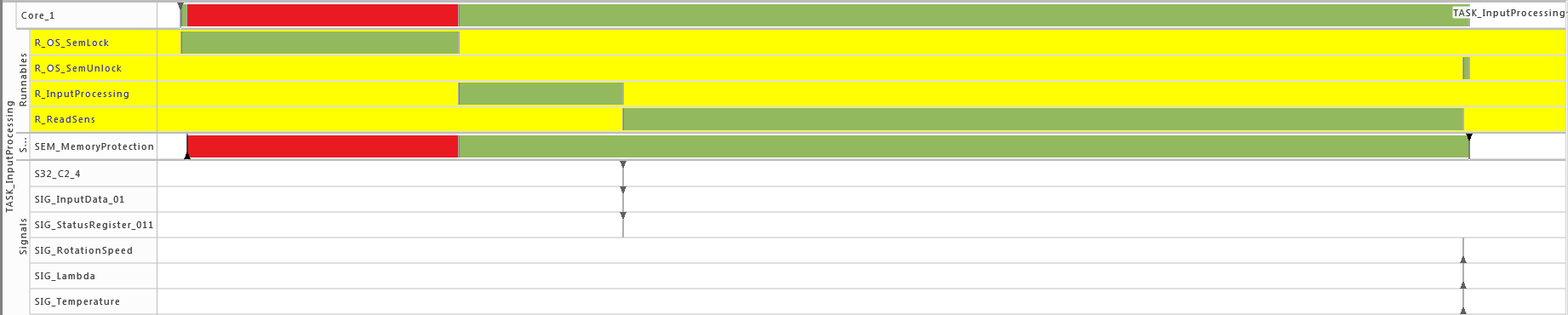

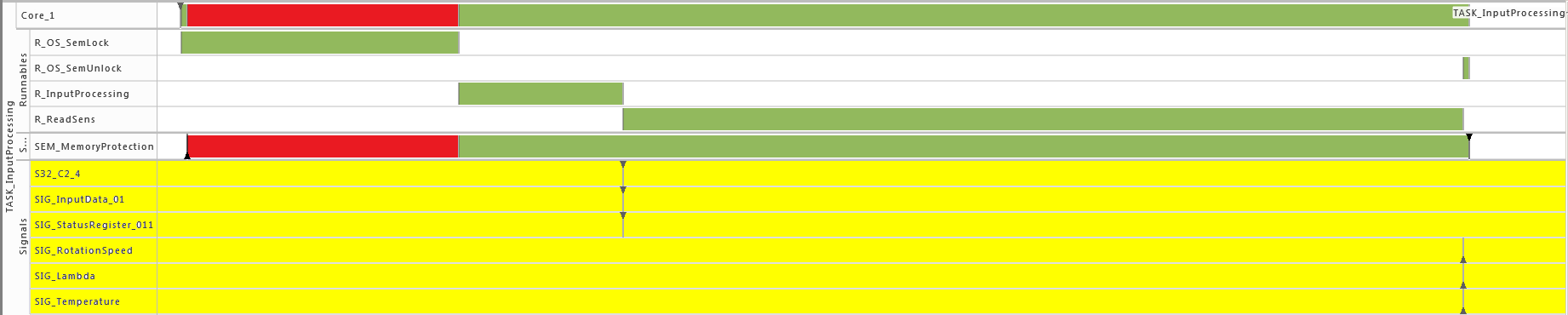

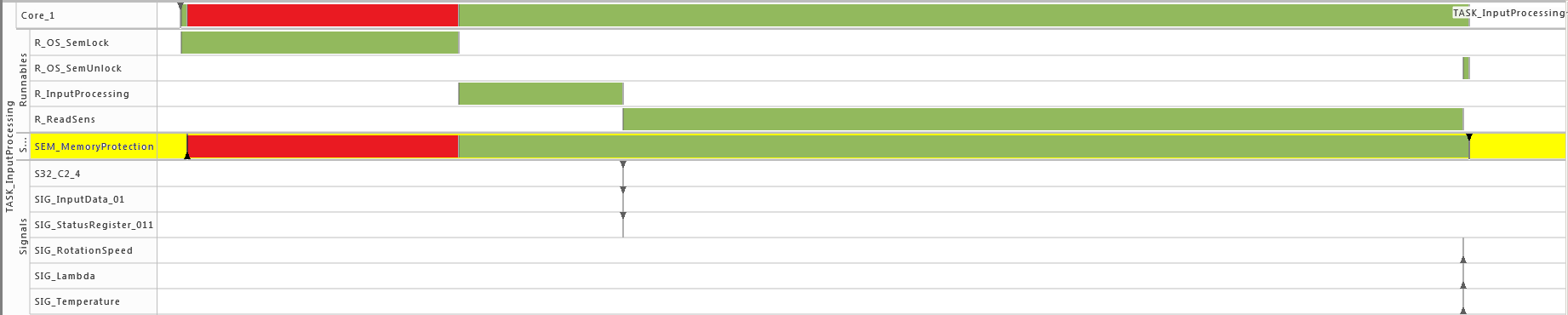

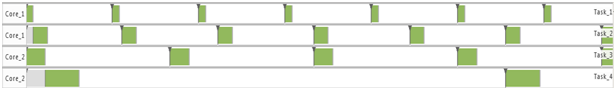

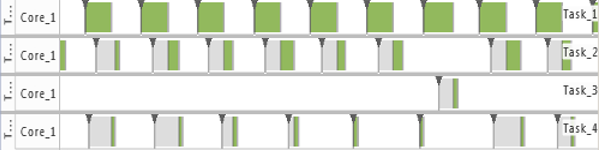

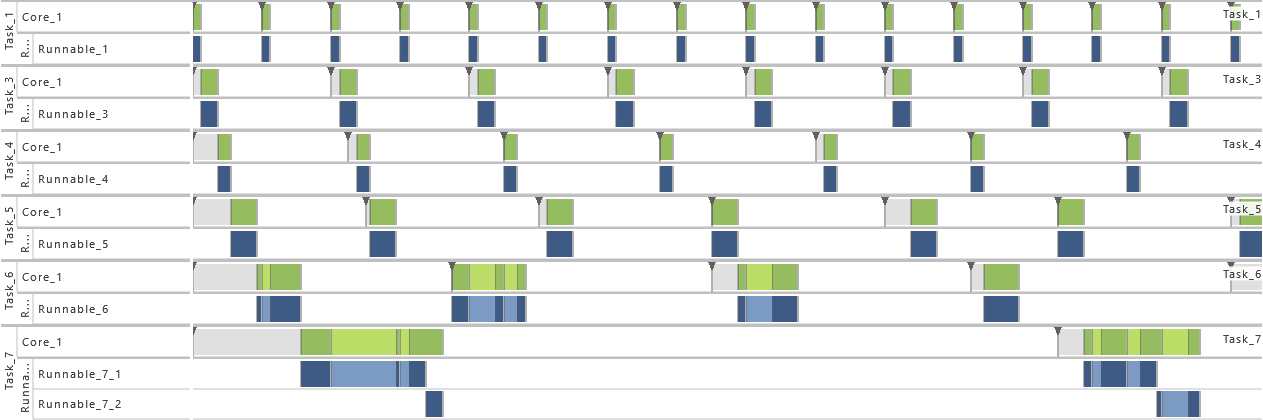

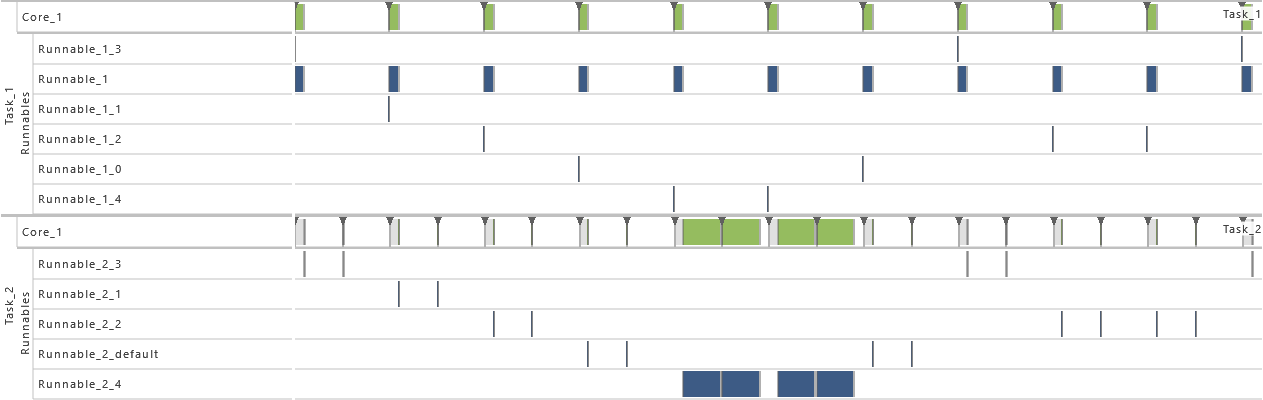

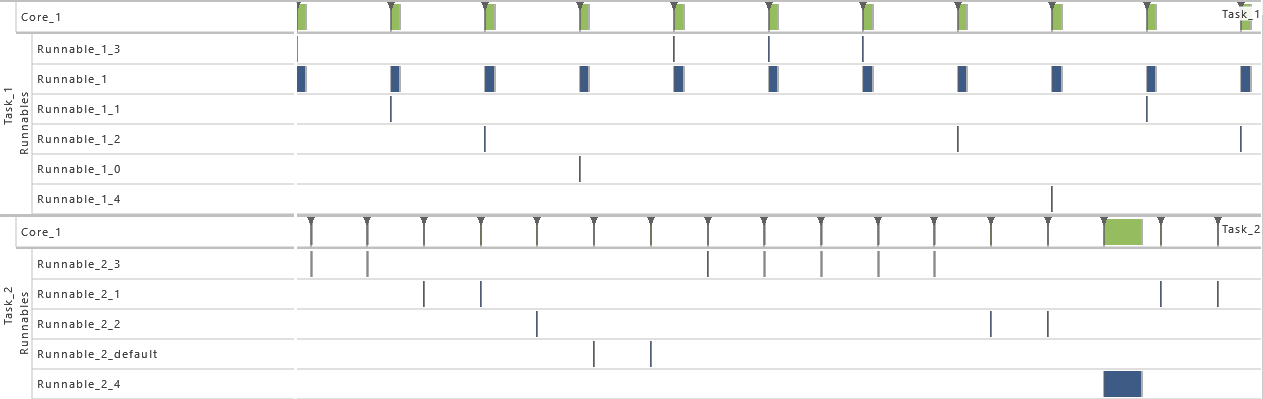

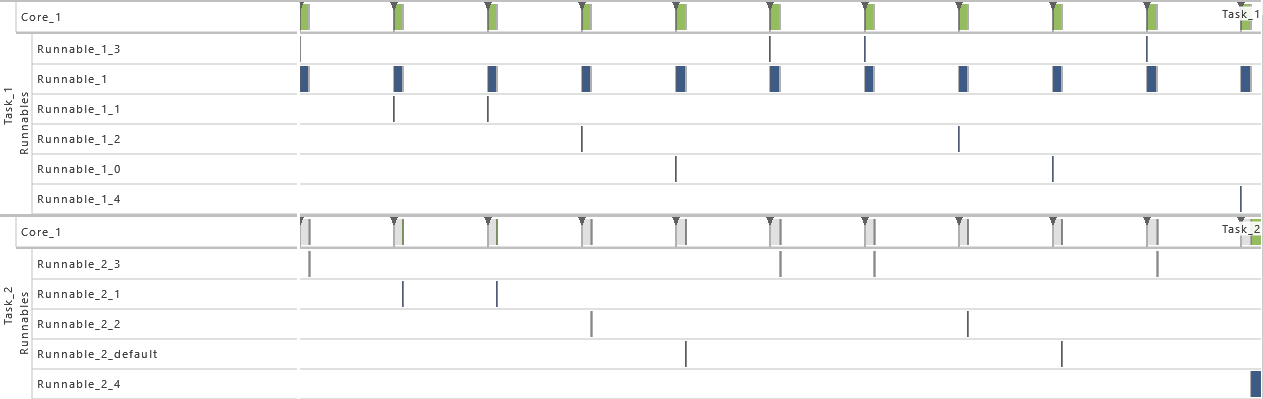

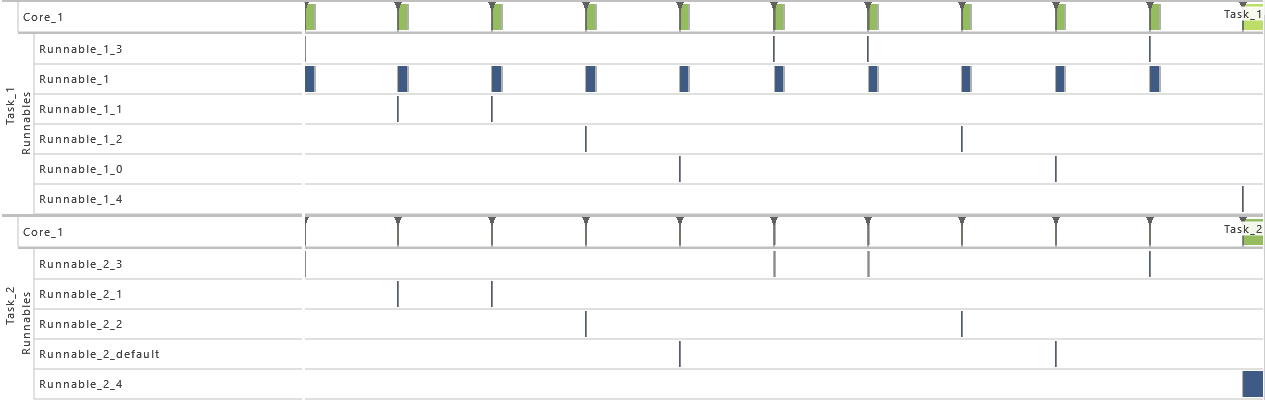

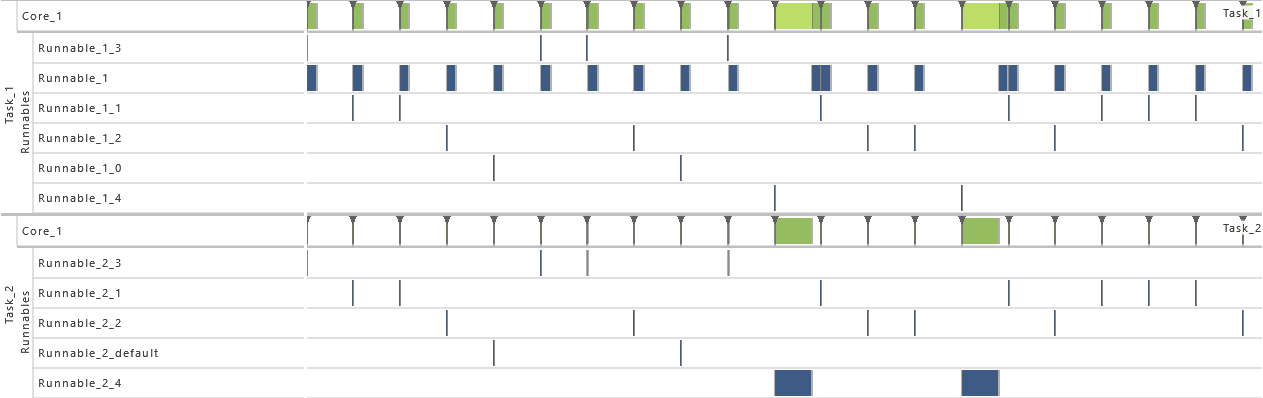

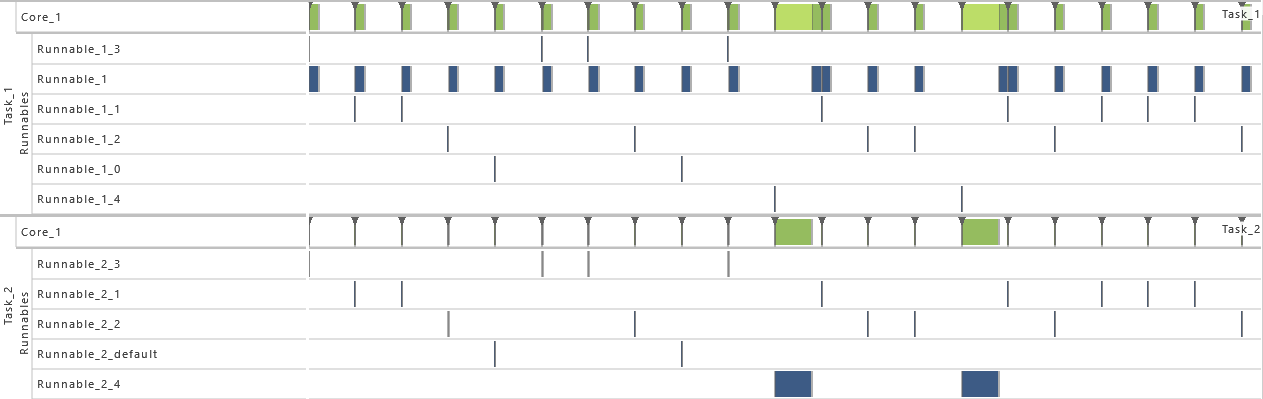

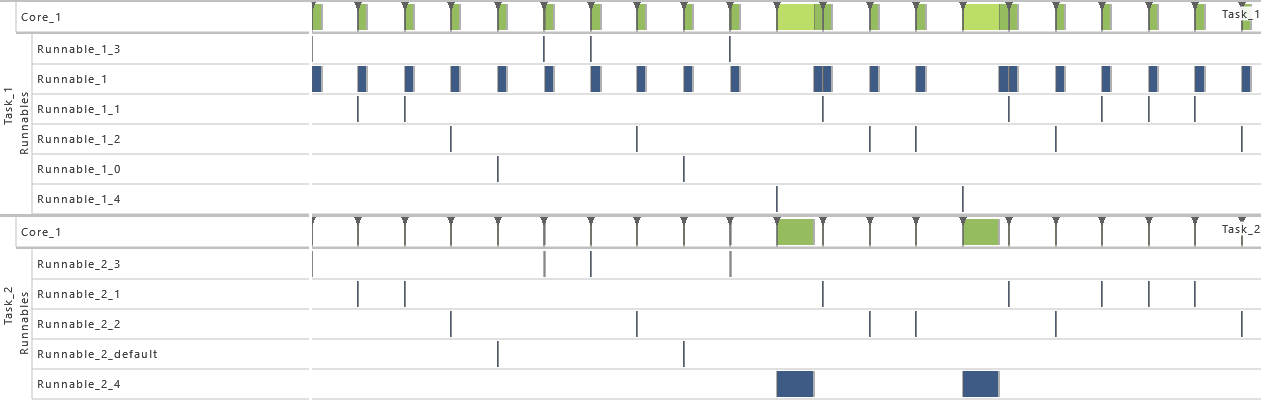

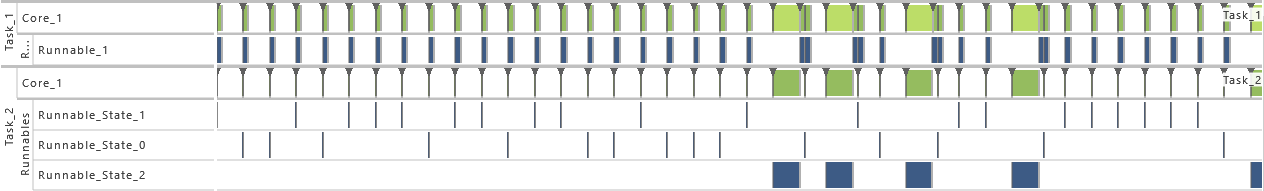

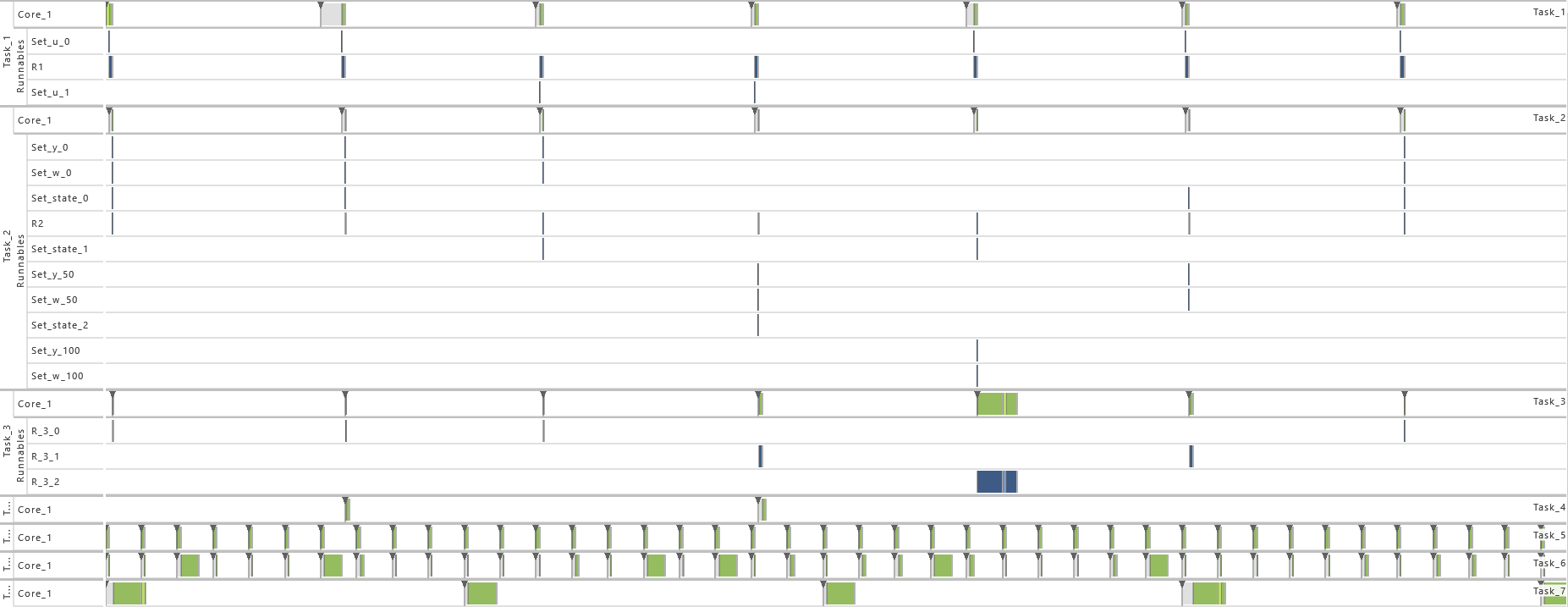

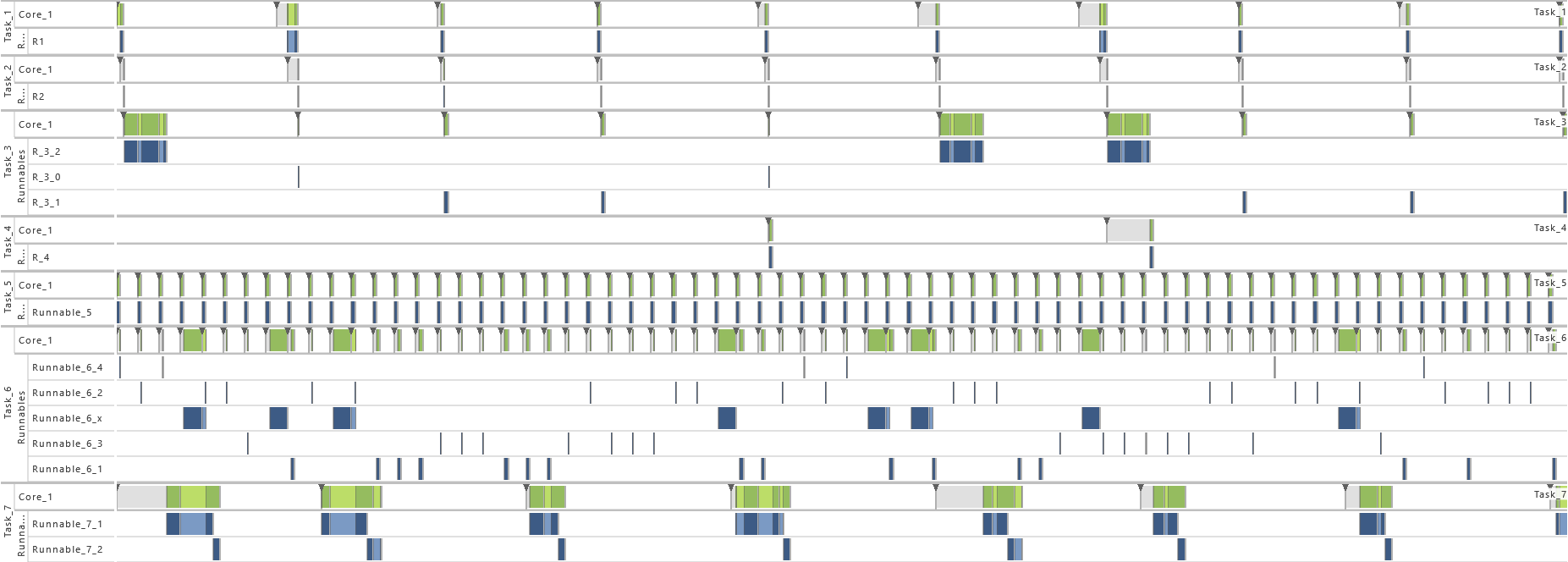

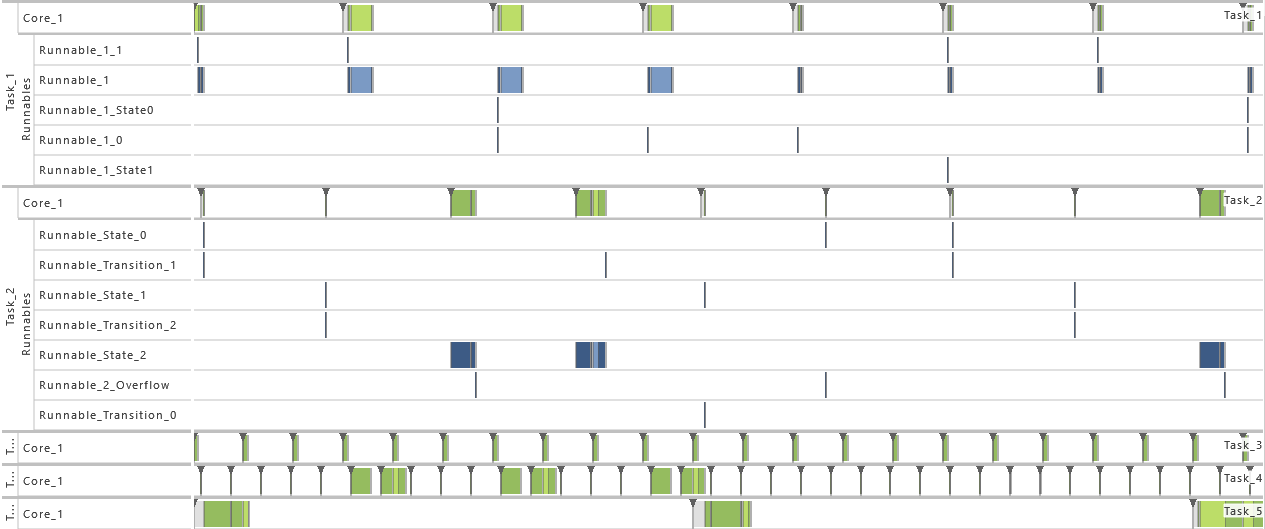

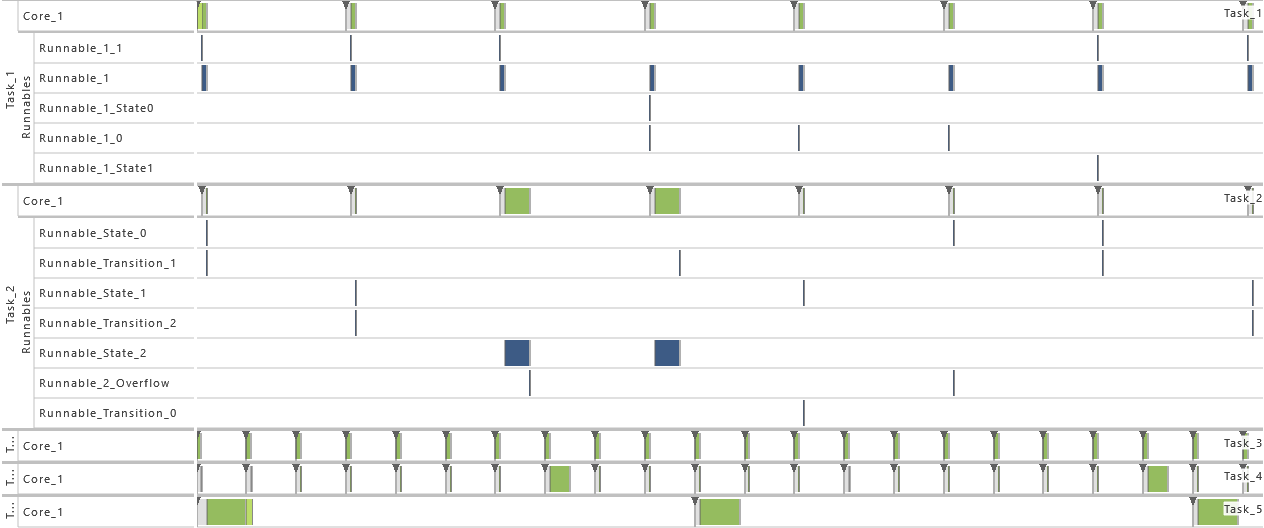

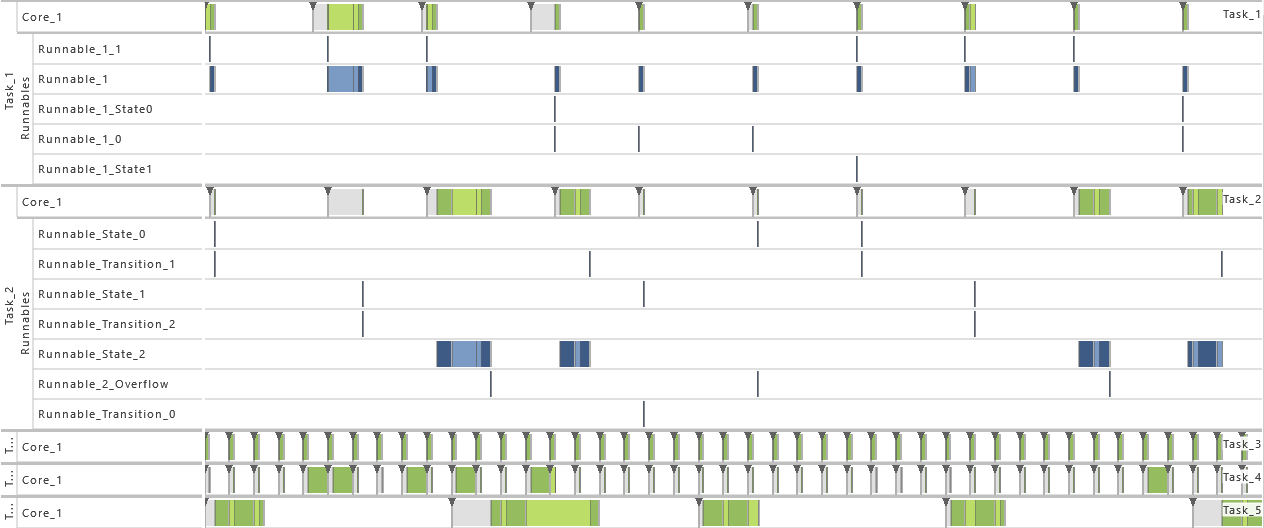

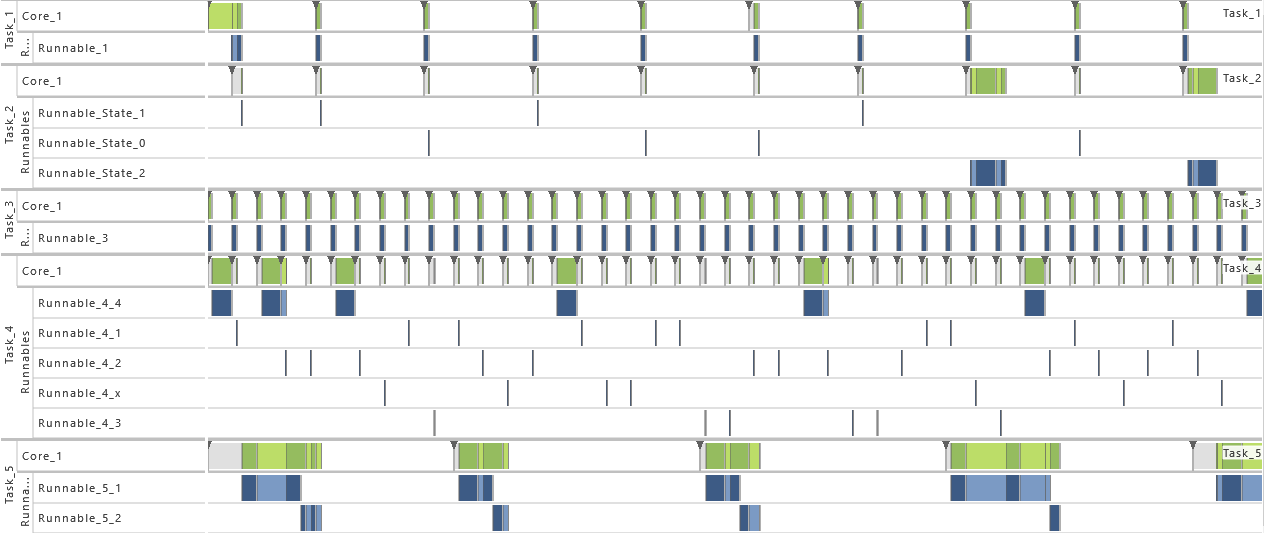

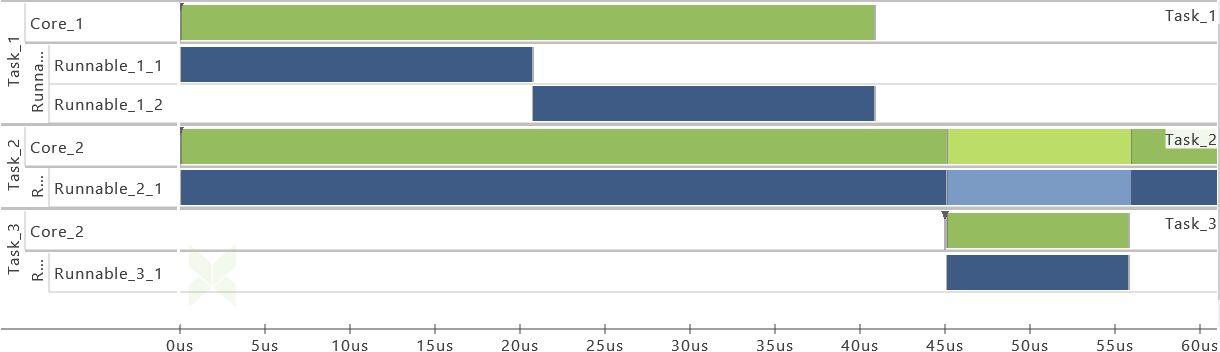

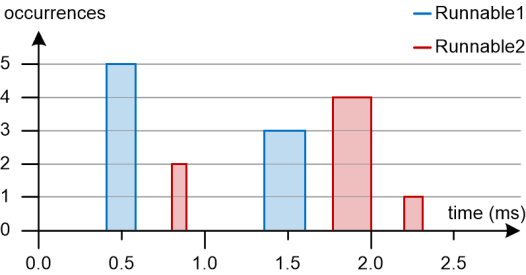

During runtime, the dynamic behavior of the software can be observed. The following Gantt chart shows an excerpt of such a dynamic behavior.

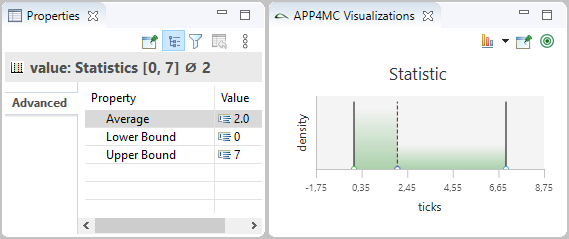

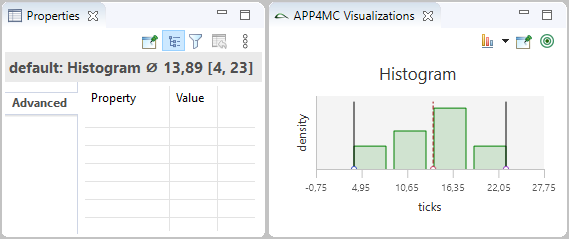

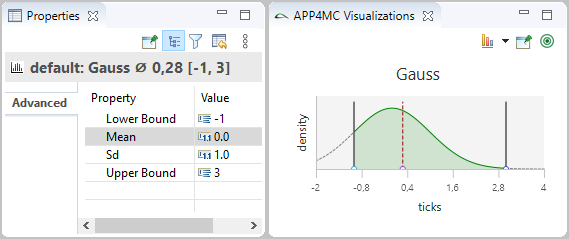

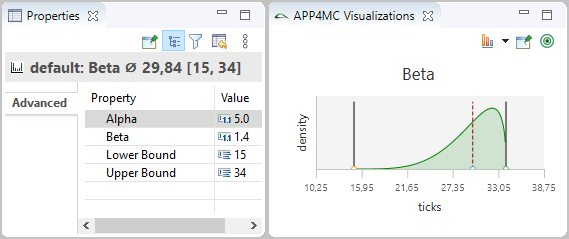

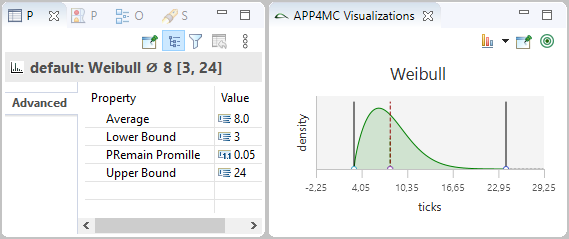

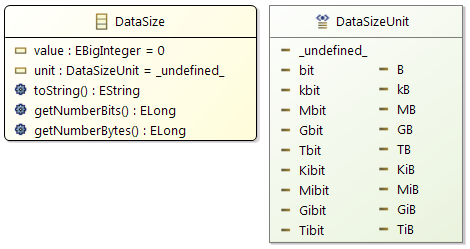

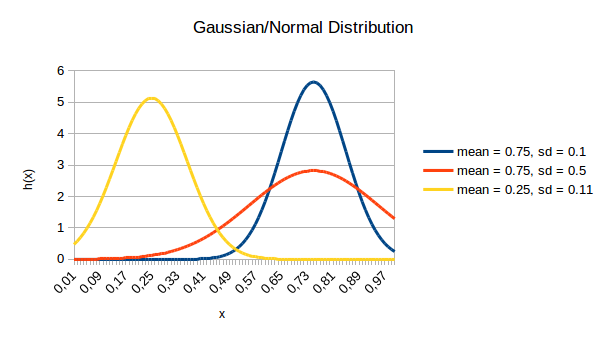

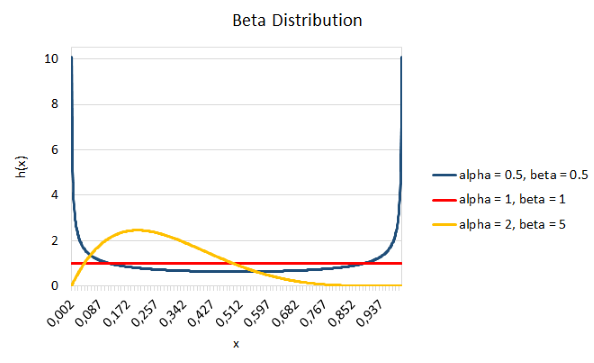

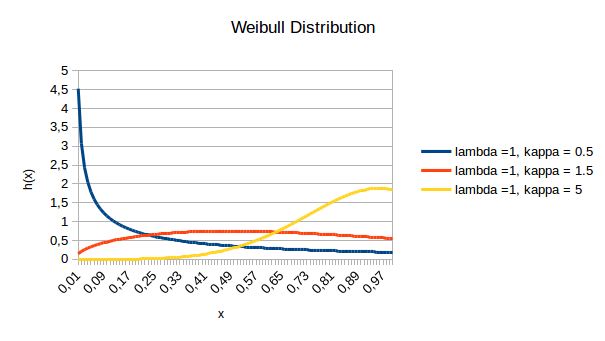

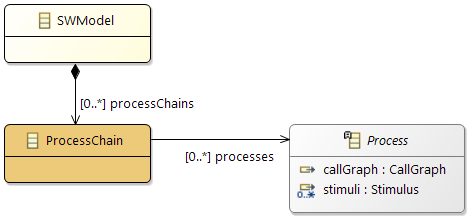

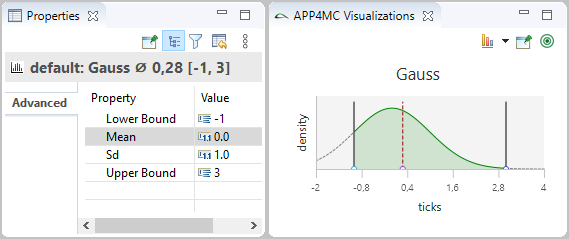

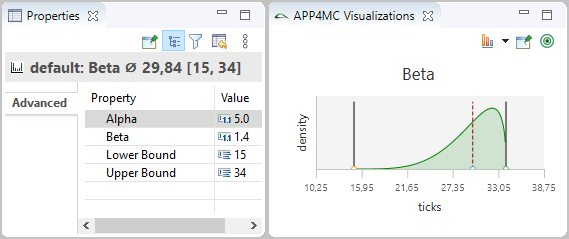

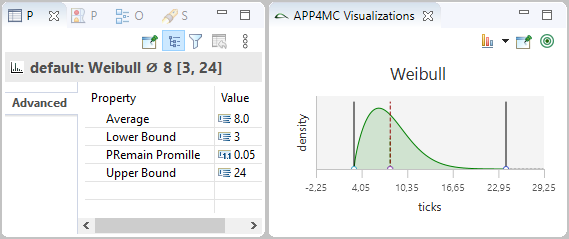

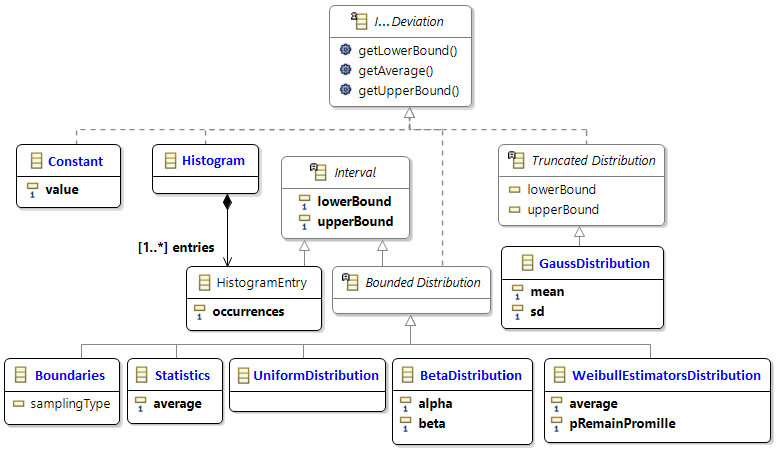

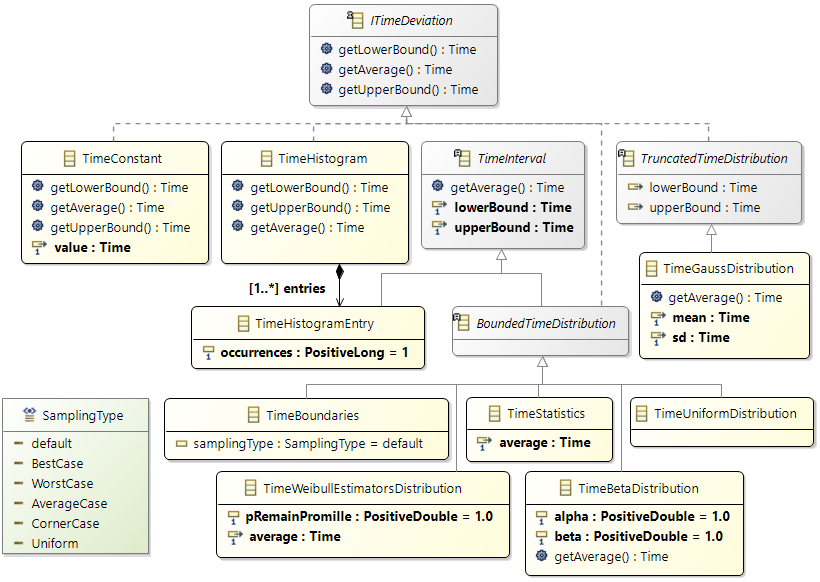

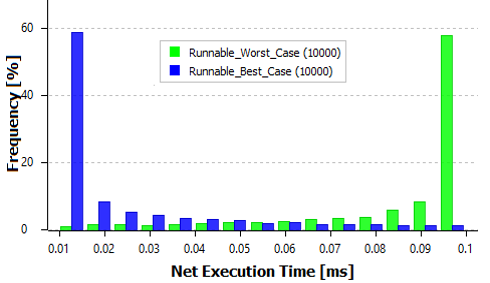

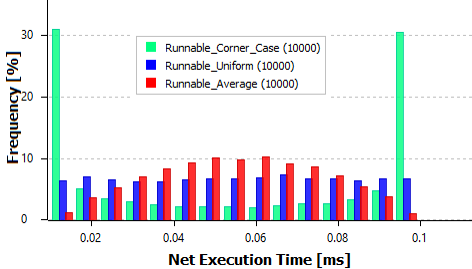

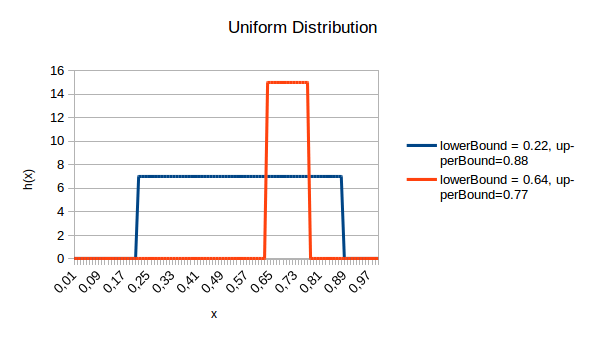

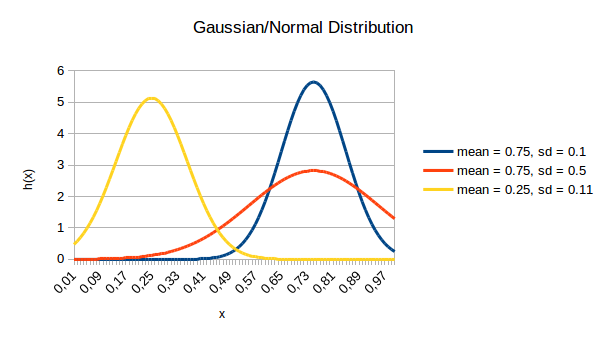

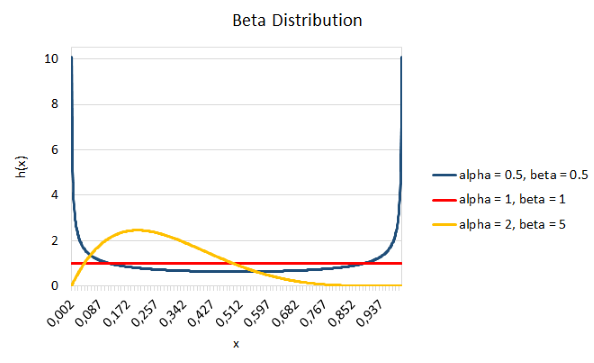

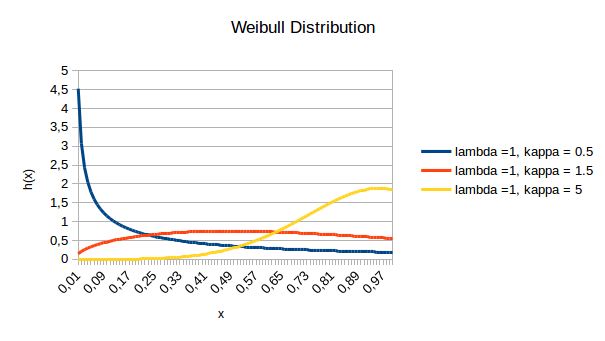

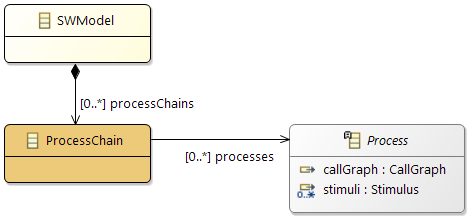

To model the observed behavior in the AMALTHEA model there are schedulable units (Processes) that contain the basic software units (Runnables) and stimuli that describe when the processes are executed. Statistical elements like distributions (Gauss, Weibull, ...) are also available in the model. They allow describing the variation of values if there are multiple occurrences.

In the following sections, a high level description of the individual elements of a software description that define the dynamic behavior are presented.

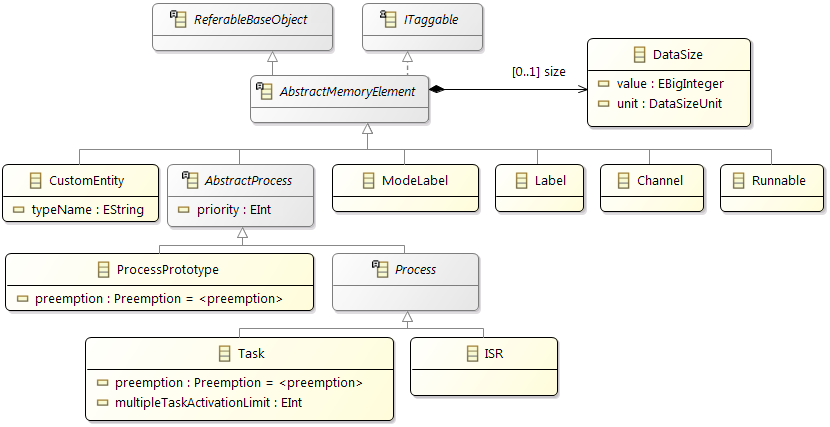

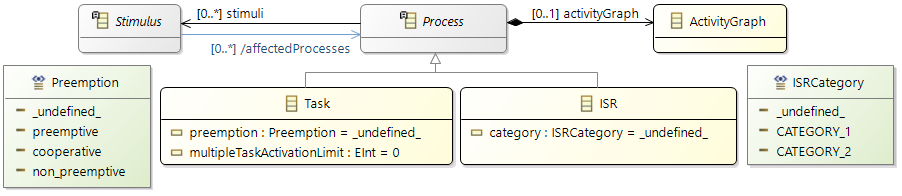

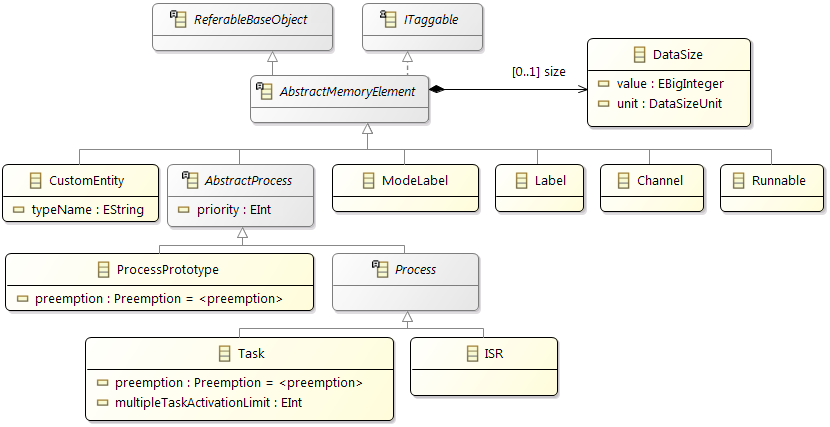

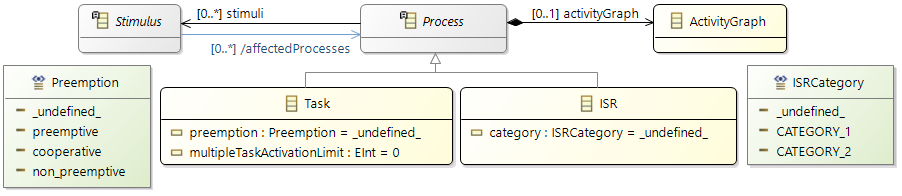

Processes (Tasks or ISRs)

Processes represent executable units that are managed by an operating system scheduler. A process is thus the smallest schedulable unit managed by the operating system. Each process also has its own name space and resources (including memory) protected against use from other processes. In general, two different kinds of processes can be distinguished: task and Interrupt Service Routine (ISR). Latter is a software routine called in case of an interrupt. ISRs have normally higher priority than tasks and can only be suspended by another ISR which presents a higher priority than the one running. In the Gantt chart above, a task called 'TASK_InputProcessing' can be seen. All elements that run within the context of a process are described in the following sections.

Runnables

Runnables are basic software units. In general it can be said that a Runnable is comparable to a function. It runs within the context of a process and is described by a sequence of instructions. Those instructions can again represent different actions that define the dynamic behavior of the software. Following, such possible actions are listed:

- Semaphore Access: request/release of a semaphore

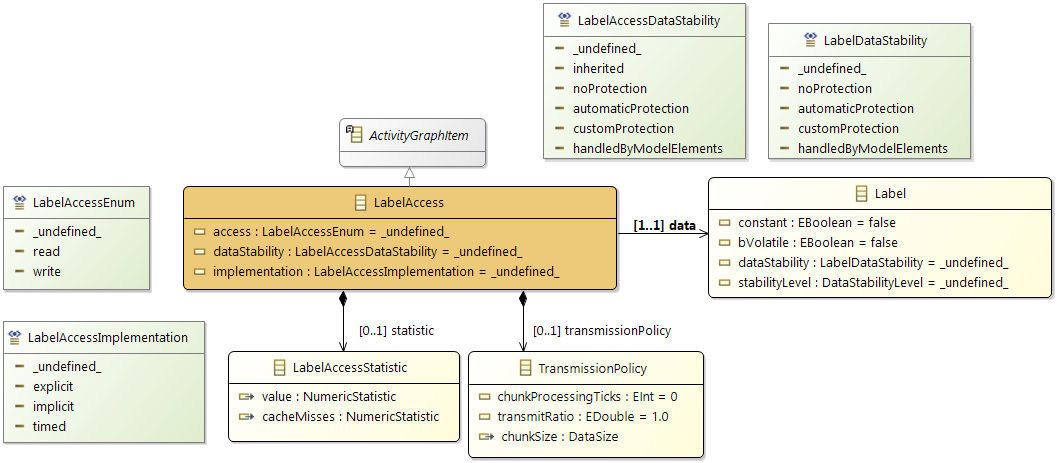

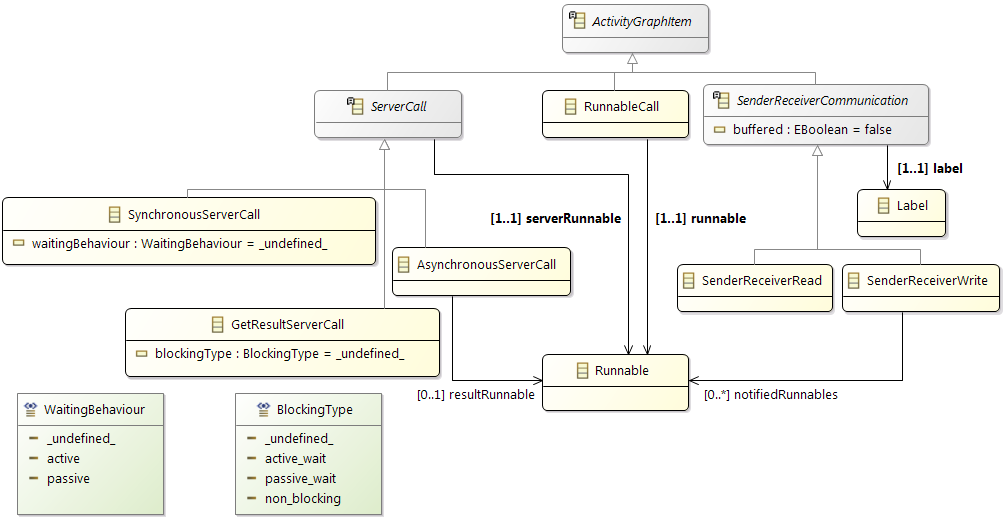

- Label Access: reading/writing a data signal

- Ticks: number of ticks (cycles) to be executed

- ...

In the following sections elements, that can be of concern within a runnable, are described in more detail.

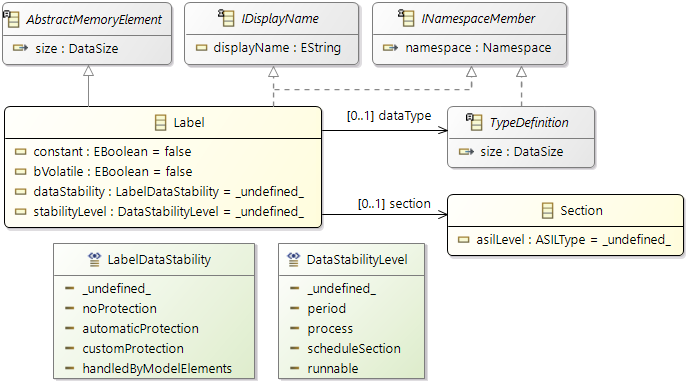

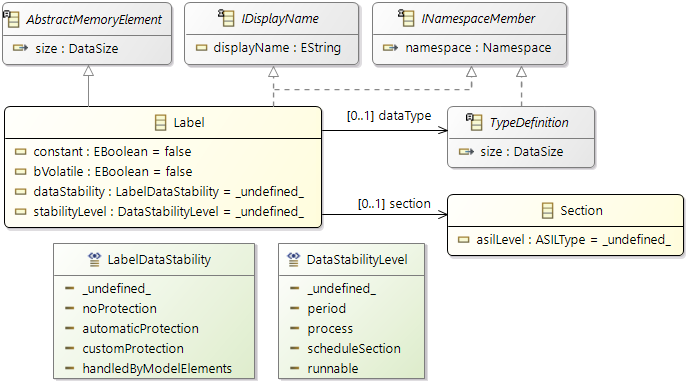

Labels

Labels represent the system's view of data exchange. As a consequence, labels are used to represent communication in a flattened structure, with (at least) one label defined for each data element sent or received by a Runnable instance.

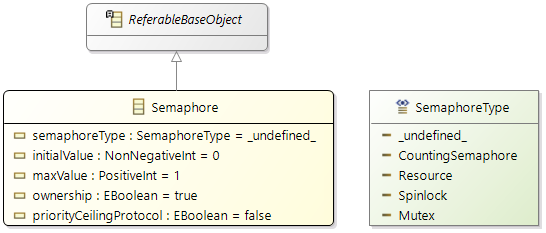

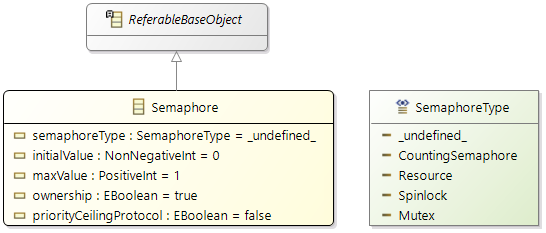

Semaphore

The main functionality of a semaphore is to control simultaneous use of a single resource by several entities, e.g. scheduling of requests, multiple access protection.

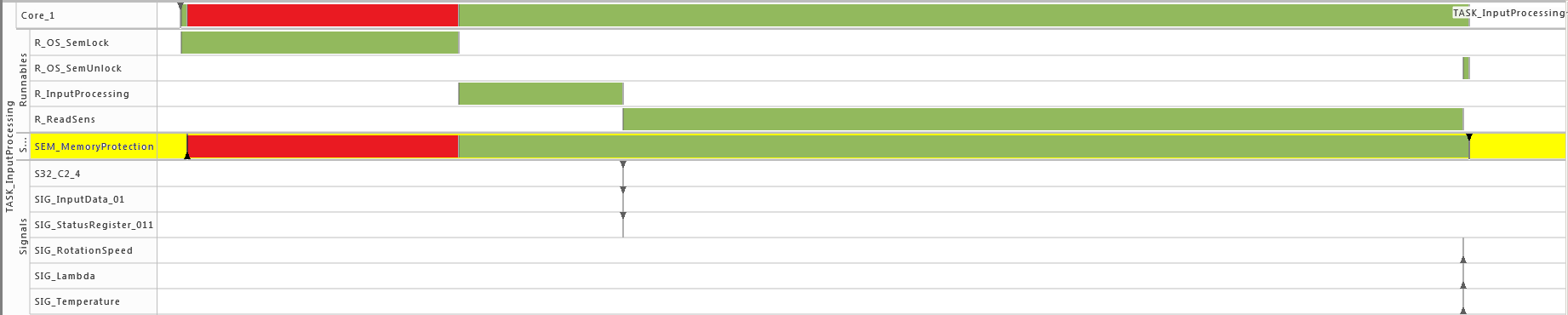

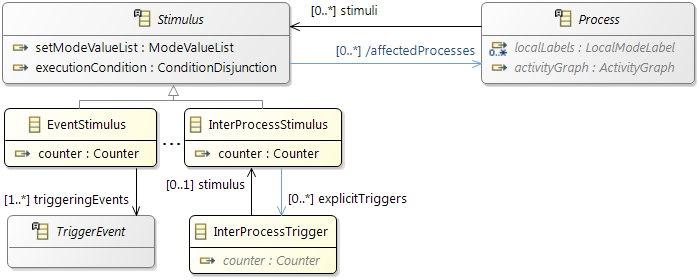

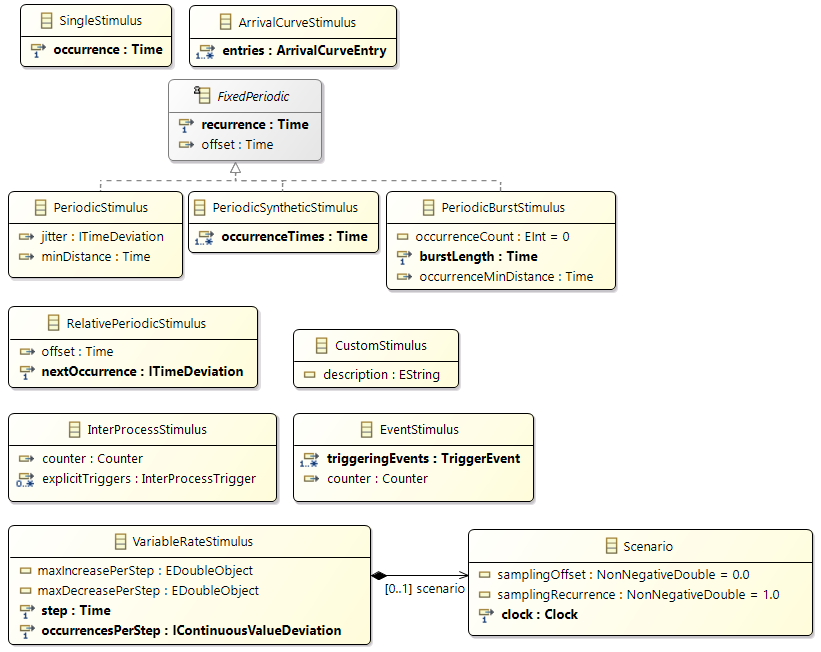

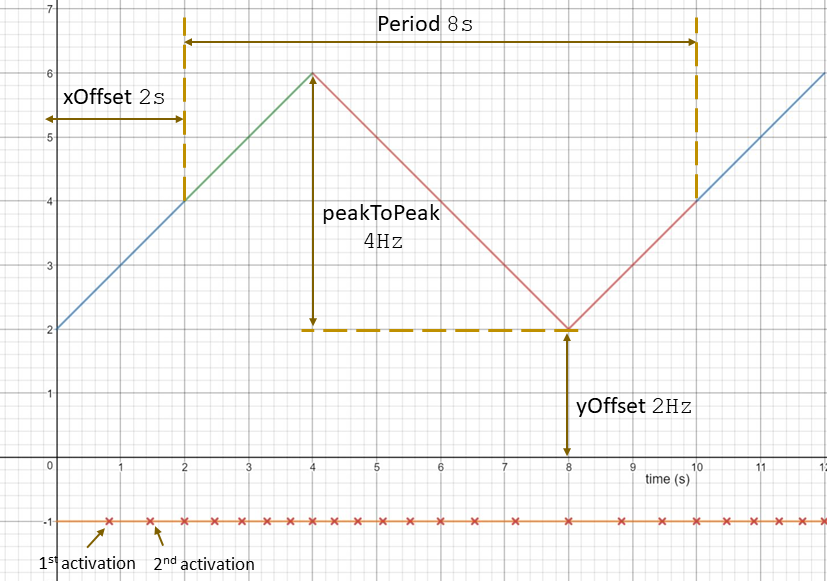

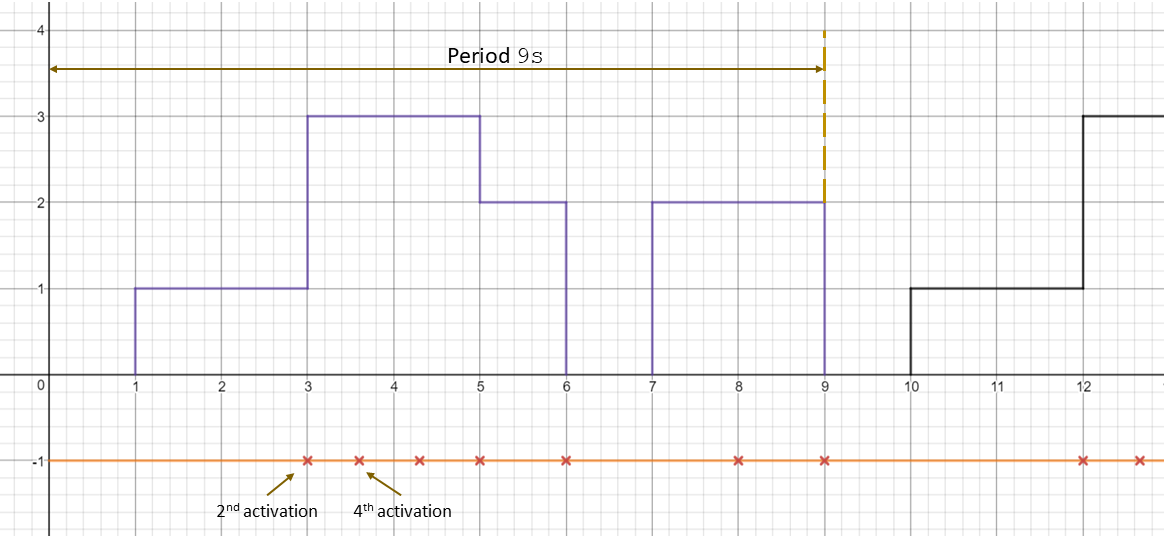

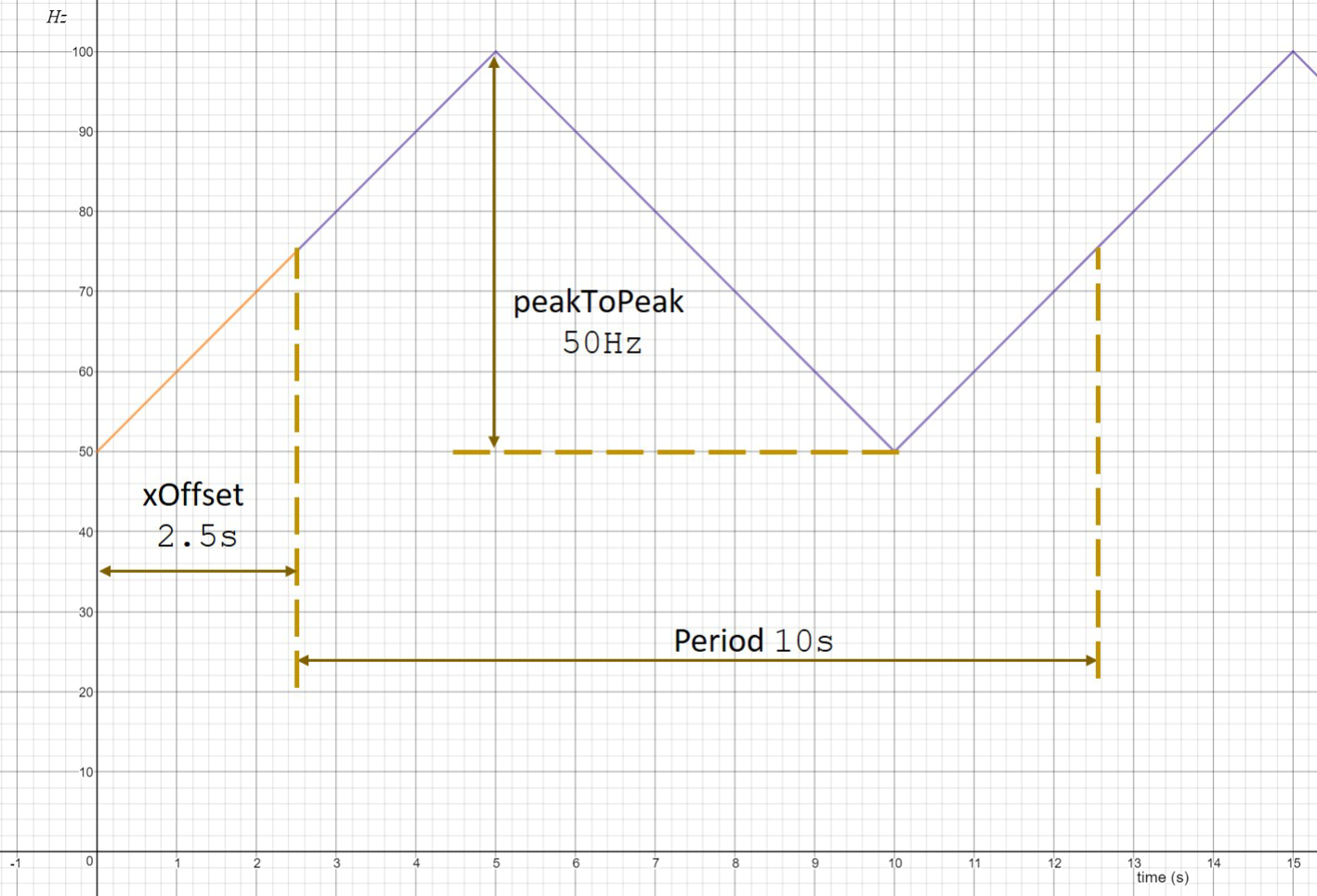

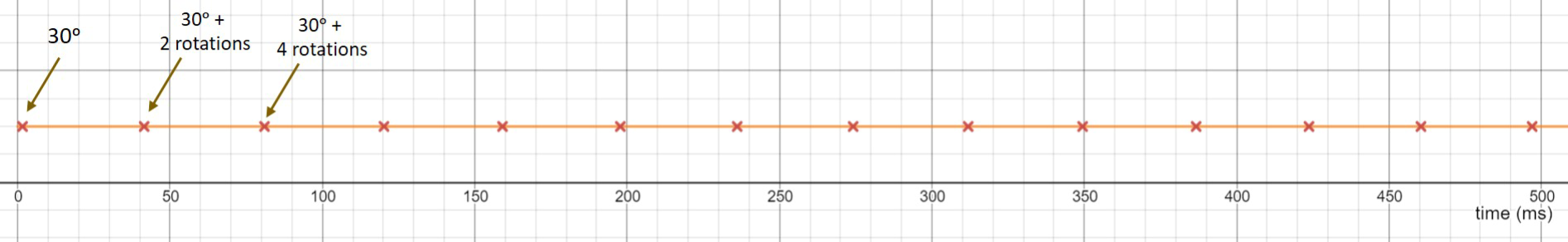

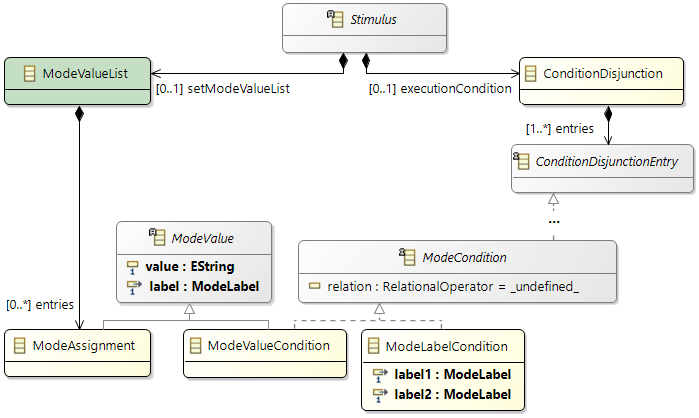

Stimulation

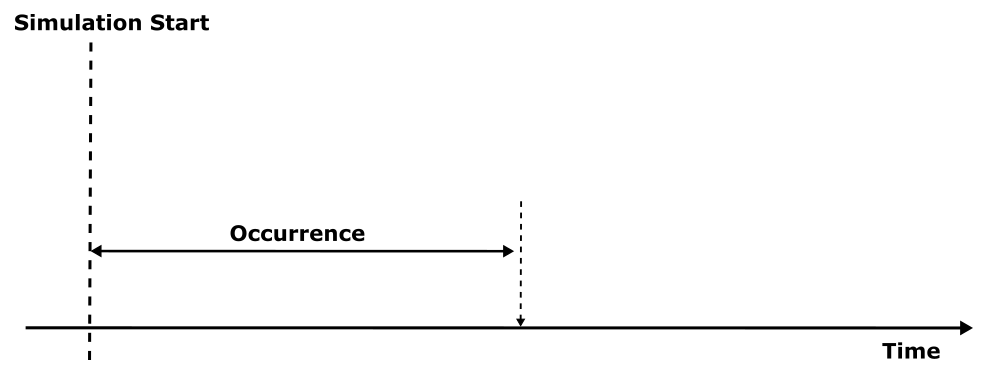

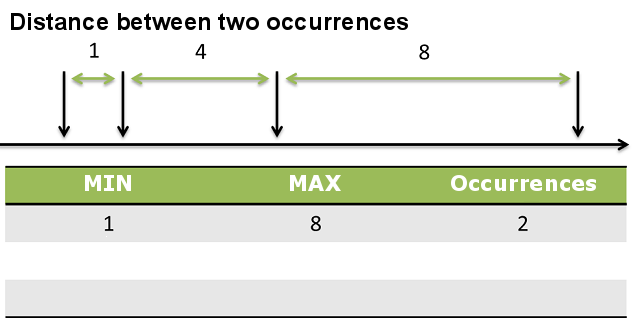

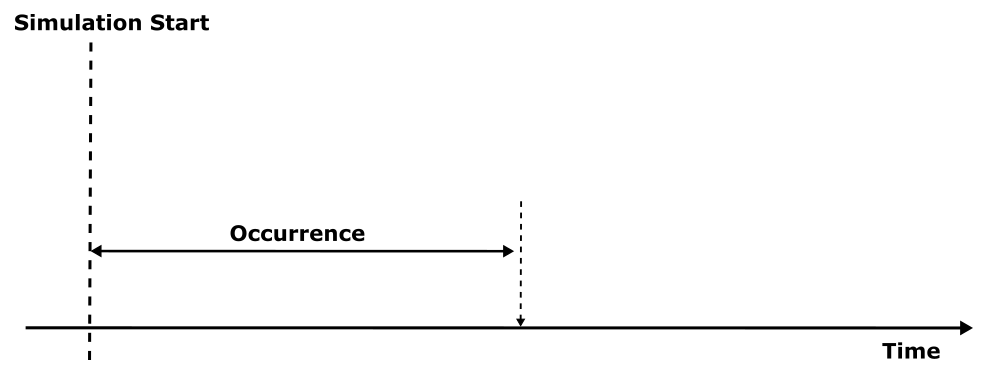

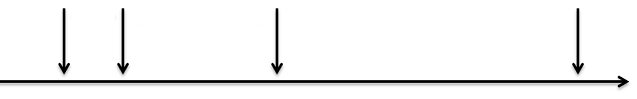

Before, we described the dynamic behavior of a specific process instance. In general however, a process is not only activated once but many times. The action of activating a process is called stimulation. The following stimulation patterns are typically used for specification:

- Single: single activation of a process

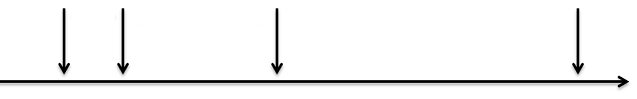

- Periodic: periodic activation of a process with a specific frequency

- VariableRate: periodic activations based on other events, like rotation speed

- Event: activation triggered by a

TriggerEvent

- InterProcess: activations based on an explicit inter-process trigger

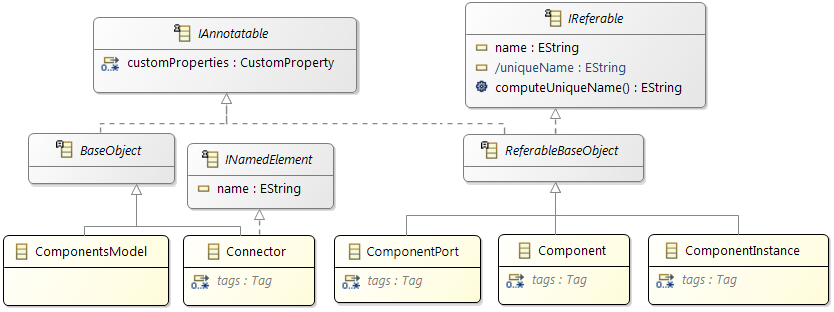

2.2.5 General Concepts

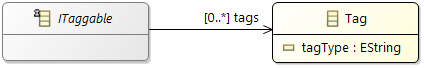

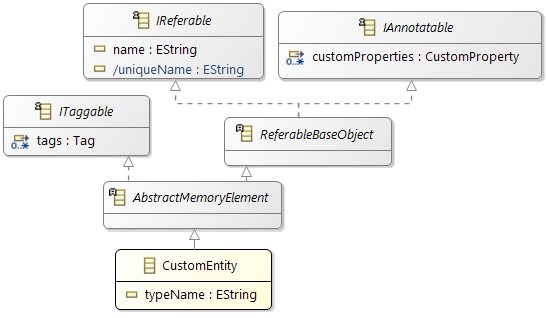

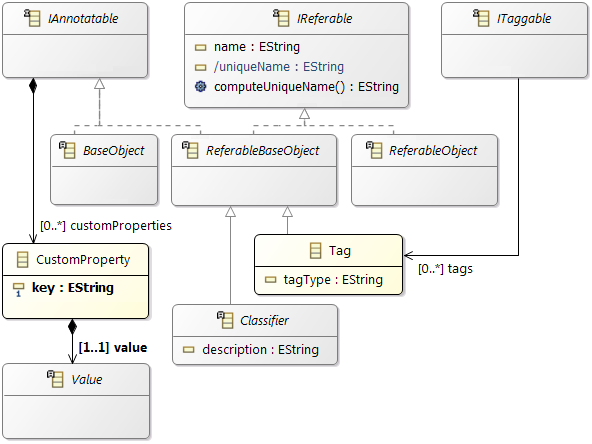

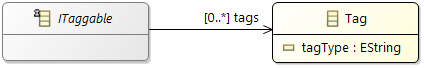

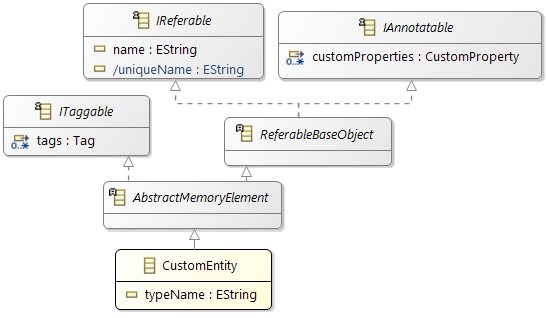

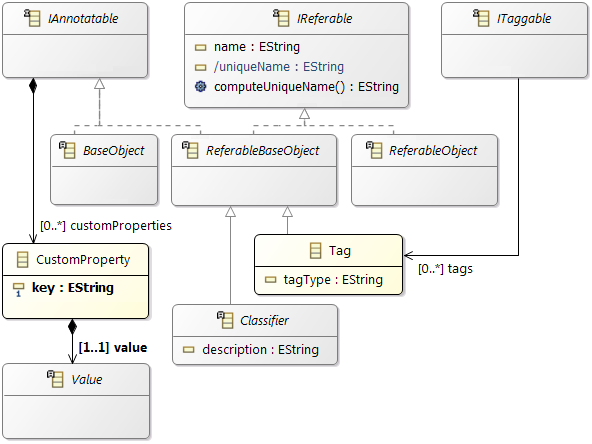

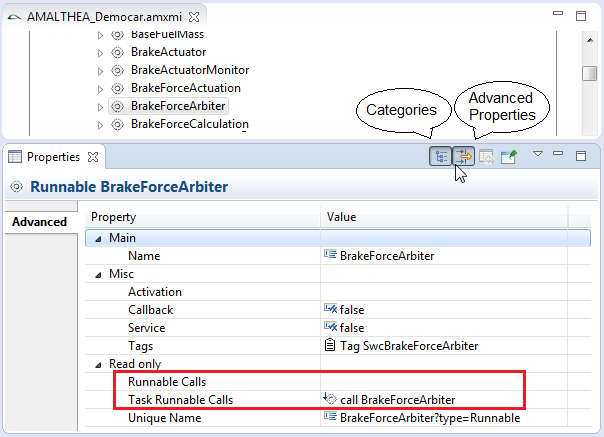

Grouping of elements (Tags, Tag groups)

It is possible to use

Tags for grouping elements of the model.

Custom Properties

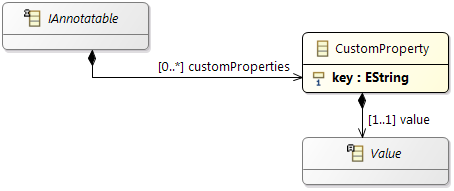

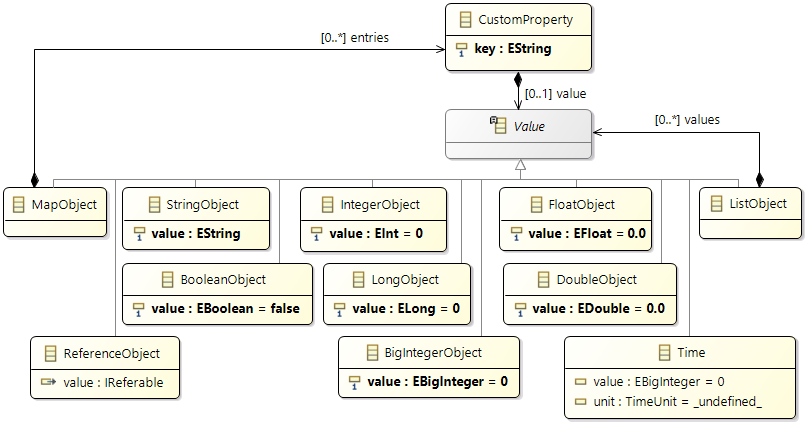

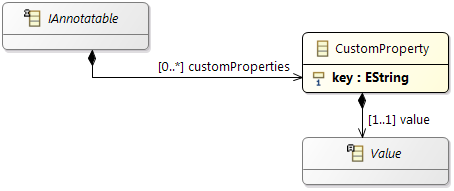

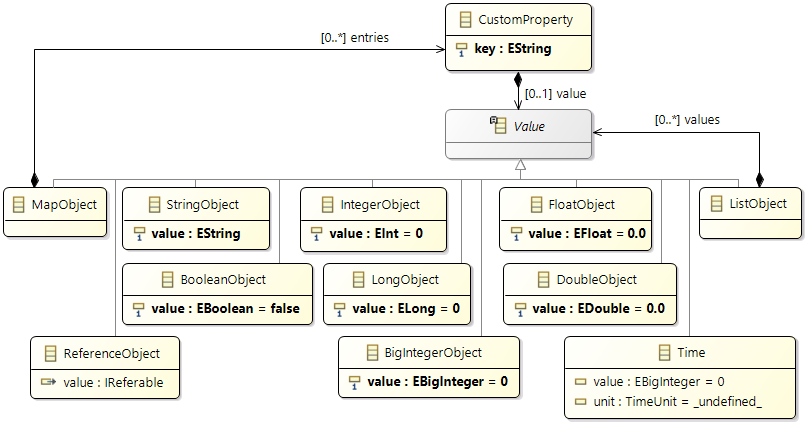

The AMALTHEA model provides

Custom Properties to enhance the model in a generic way. These can be used for different kind of purpose:

- Store attributes, which are relevant for your model, but not yet available at the elements

- Processing information of algorithms can be stored in that way, e.g. to mark an element as already processed

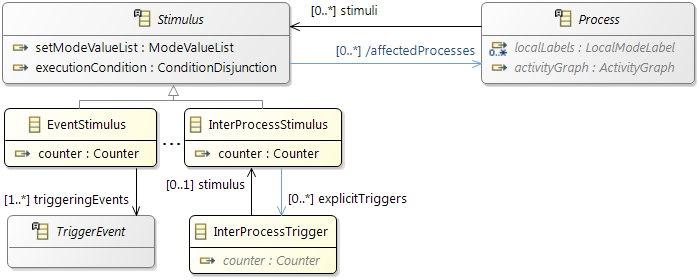

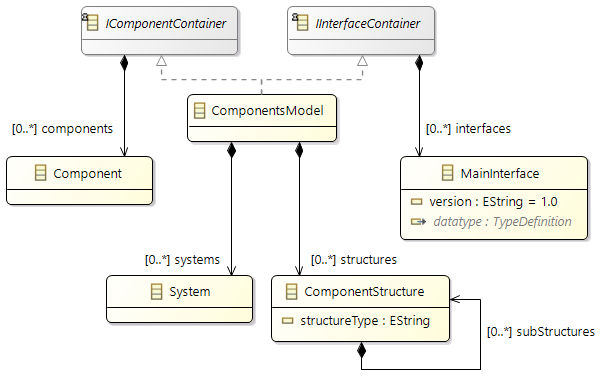

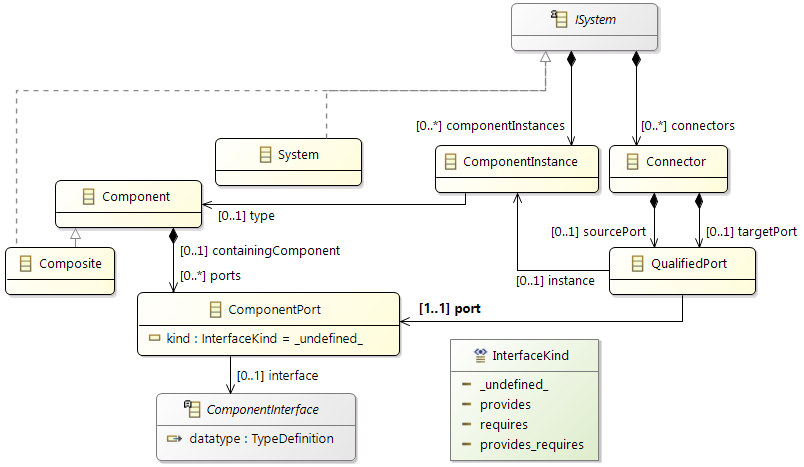

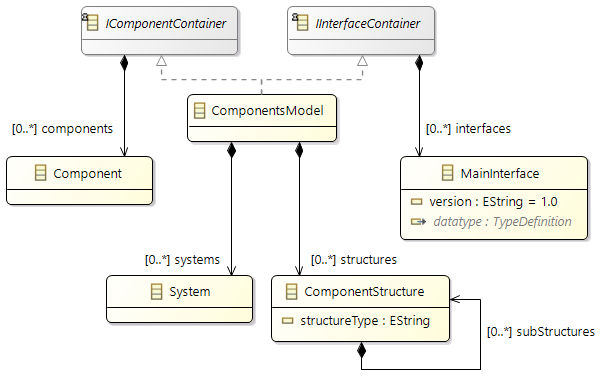

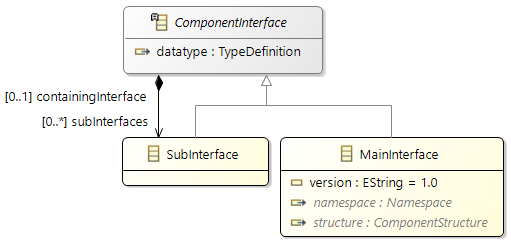

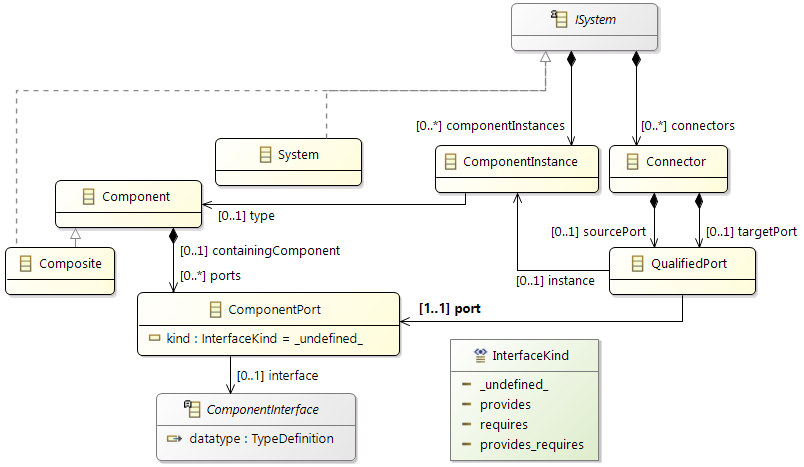

Support of Packages

In Amalthea model

(from APP4MC 0.9.7 version) there is a feature to support grouping of following model elements listed below in a package:

- Component

- Composite

- MainInterface

Above mentioned classes are implementing interface

IComponentStructureMember

ComponentStructure element in Amalthea model represents a package, and this can be referred by above mentioned elements.

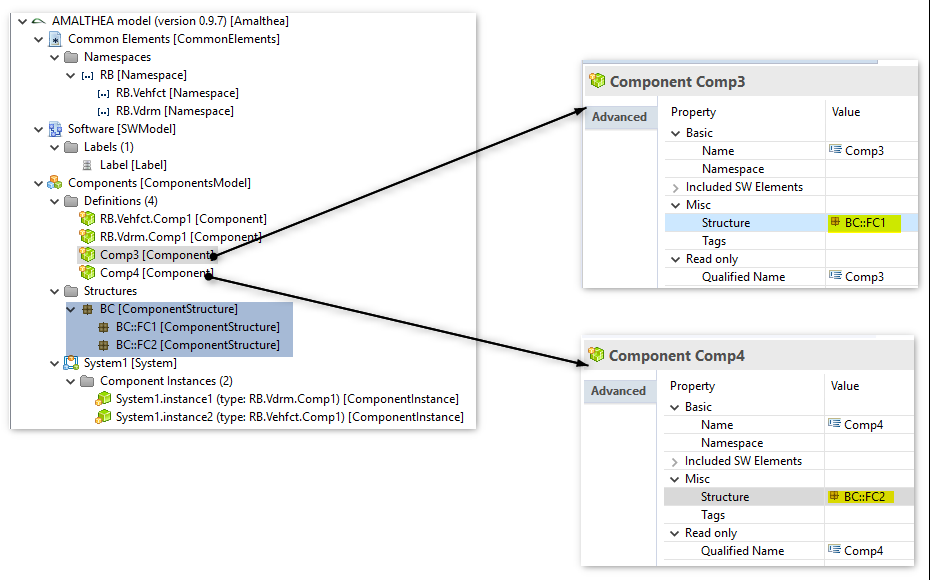

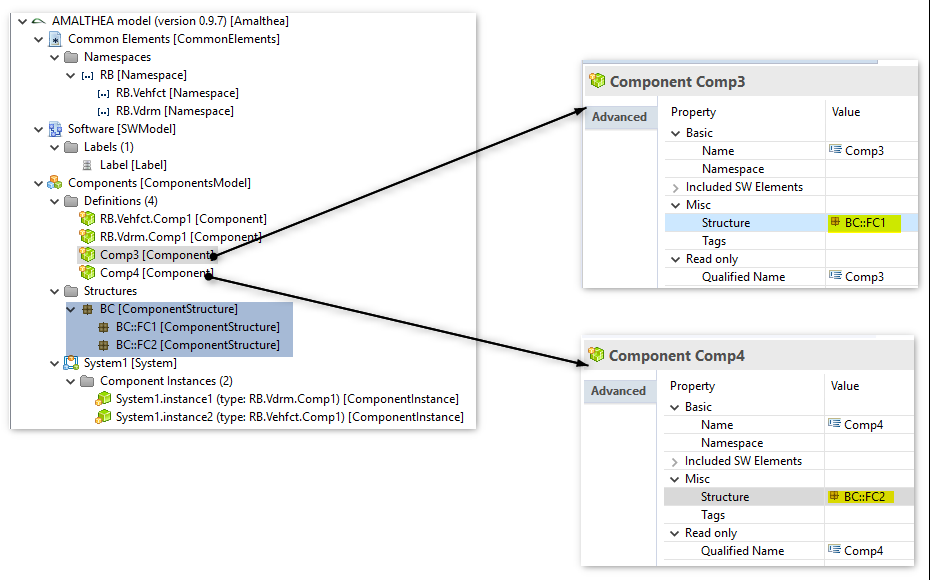

Example of using Package

Amalthea model contains a parent ComponentStructure "BC" and two child ComponentStructure's "FC1", "FC2".

- Component element "Comp3" is associated to ComponentStructure "FC1" and the Component element "Comp4" is associated to ComponentStructure "FC2"

This representation demonstrates the grouping of the elements into different packages.

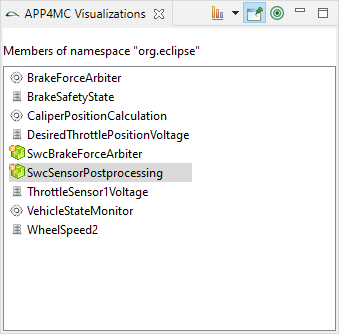

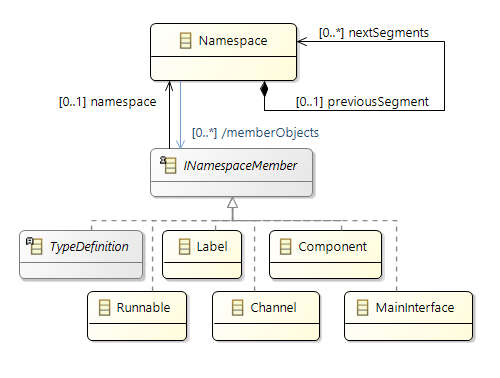

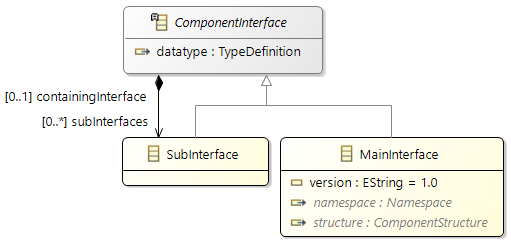

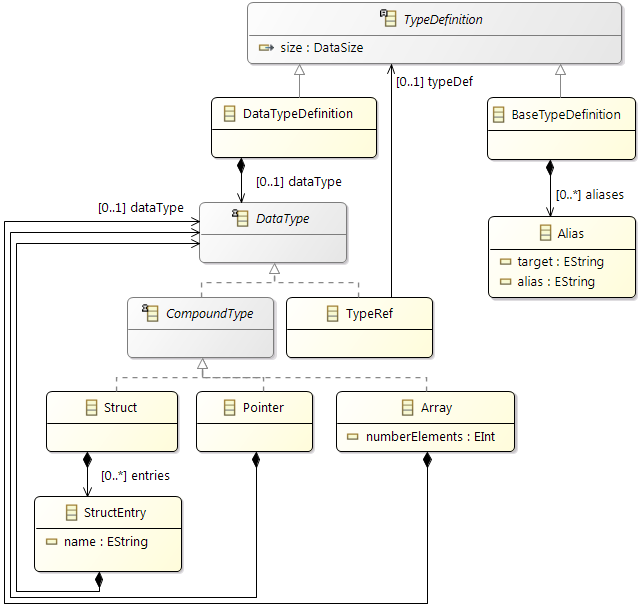

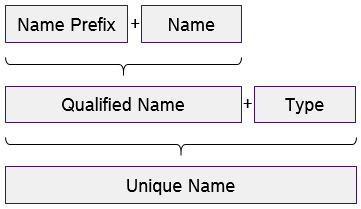

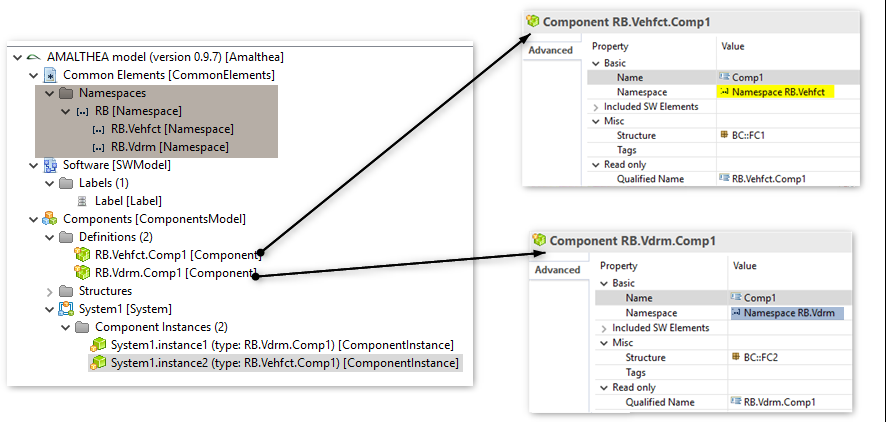

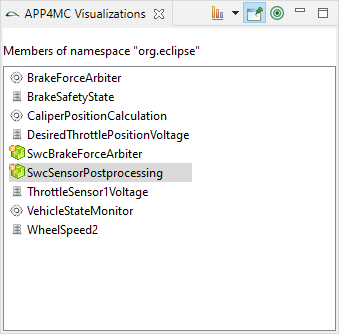

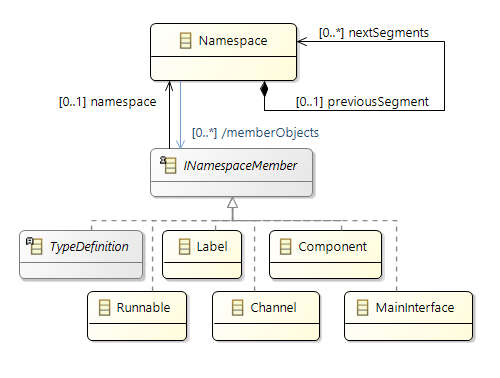

Support of Namespaces

In Amalthea model

(from APP4MC 0.9.7 version) there is a feature to have Namespace for the following model elements (

which are implementing interface

INamespaceMember

):

- BaseTypeDefinition

- Channel

- Component

- Composite

- DataTypeDefinition

- Label

- MainInterface

- Runnable

- TypeDefinition

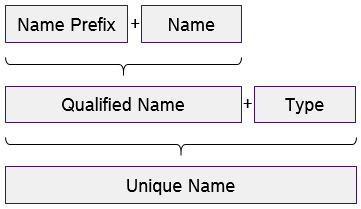

Advantage of having Namespace is, there can be multiple elements defined with the same name but with different Namespace

(this feature will also be helpful to support c++ source code generation ). If Namspace is specified in Amalthea model for a specific model element, then its unique name is built by considering the "Namespace text + name of the element"

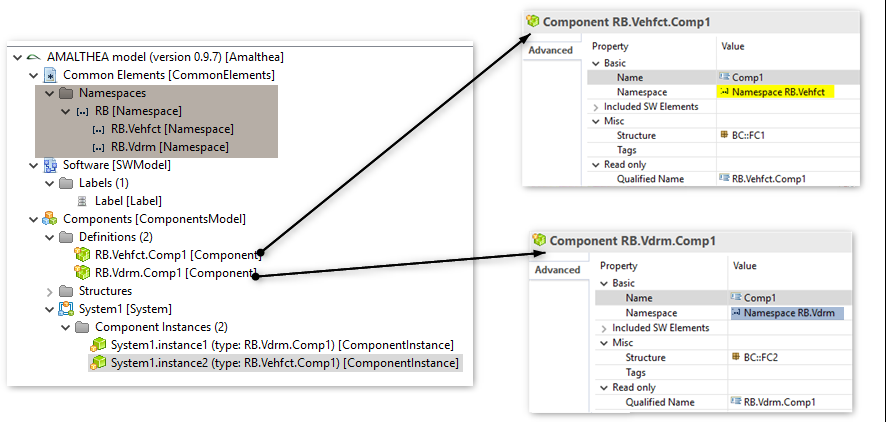

Example of using Namespace

As shown in the below screenshot, there are two Component elements with the name "Comp1" but referring to different Namespace objects, which is making them unique. In this case, display in the UI editor is also updated by showing the Namespace in the prefix for the Amalthea element name.

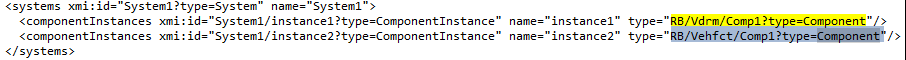

Below is the text representation of the Amalthea model which shows the ComponentInstance elements referring to different Component elements. As mentioned above, highlighted text shows that unique name of the Component element

__ is used all the places where it is referenced __(to make it unique)

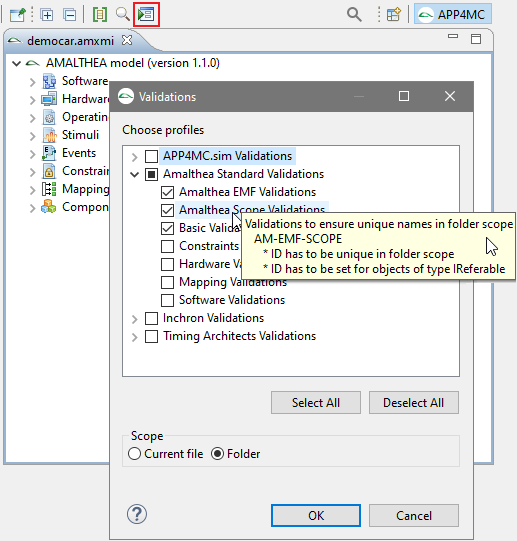

Additional Information:

- As soon as Namespace elements are used in Amalthea model, AMXMI file is updated with additional attribute xmi:id for all elements implementing IReferable interface.

- On Amalthea model element implementing INamed interface, in addition to getName() API, getQualifiedName() API is available which returns <namespace string value>.getName()

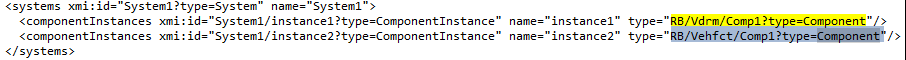

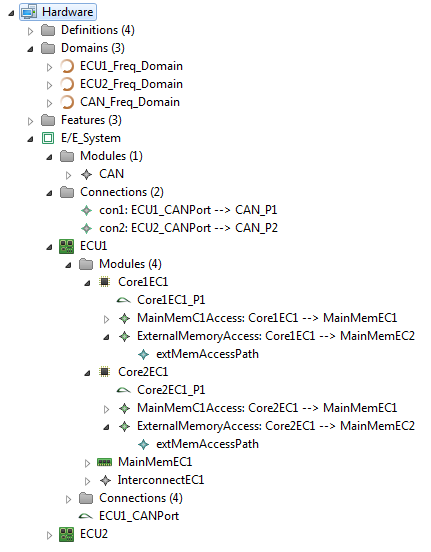

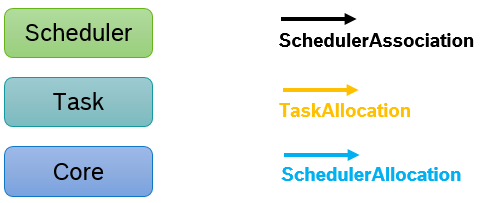

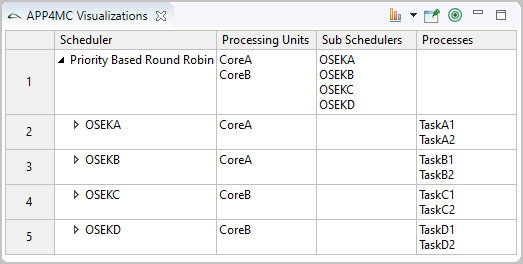

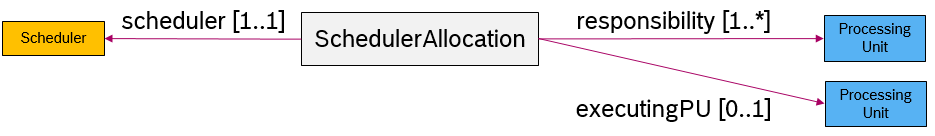

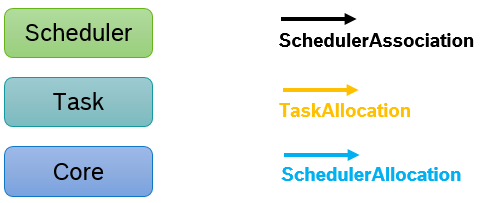

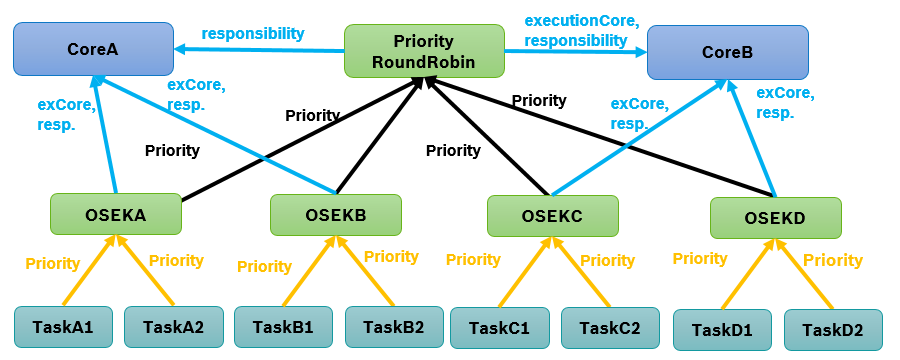

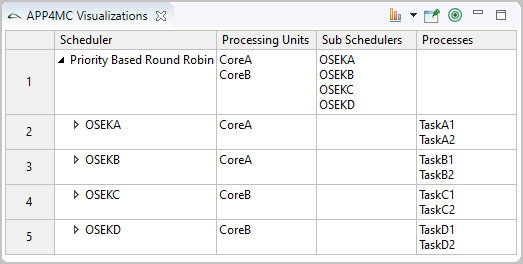

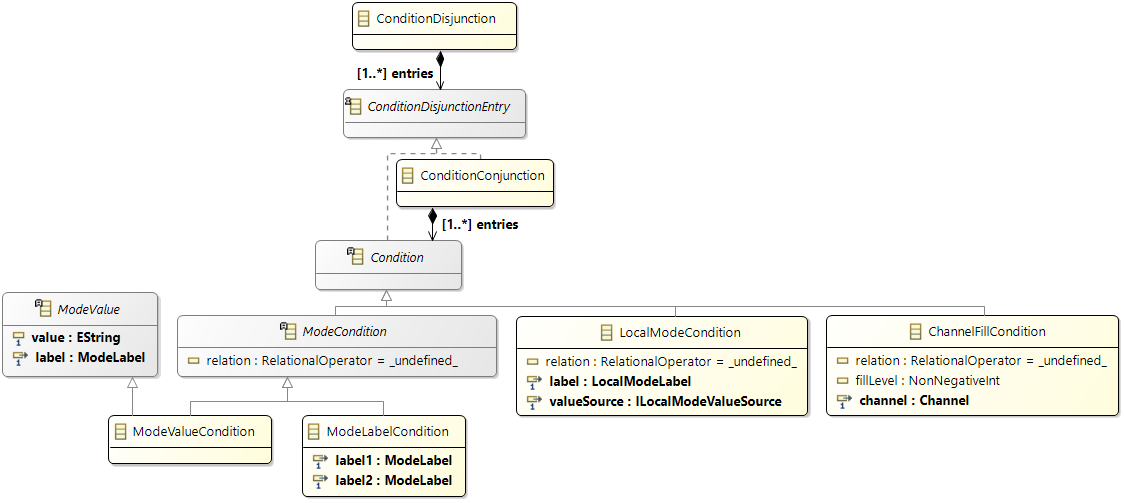

2.2.6 Scheduling

Scheduler to Core assignment

We distinguish between physical mapping and responsibility

-

Executing Core means a scheduler produces algorithmic overhead on a core

-

Responsibility means a scheduler controls the scheduling on core(s)

Task to Scheduler assignment

Tasks have a core affinity and are assigned to a scheduler

-

Core Affinity Specifies the possible cores the task can run on. If only one core is specified, the task runs on this core. If multiple cores are specified, the task can migrate between the cores.

-

Scheduler specifies the unique allocation of the task to a scheduler.

The scheduling parameters are determined by the scheduling algorithm and are only valid for this specific task – scheduler combination. Therefore the parameters are specified in the TaskAllocation object.

Scheduler hierarchies

Schedulers can be arranged in a hierarchy via SchedulerAssociations. If set, the parent scheduler takes the initial decision and delegates to a child-scheduler. If the child-scheduler is a reservation based server, it can only delegate scheduling decisions to its child scheduler. If it is not a server, it can take scheduling decisions.

The scheduling parameters for the parent scheduler are specified in the SchedulerAssociation, just as it would have for task allocations.

If a reservation based server has only one child (this can either be a process or a child scheduler), the scheduling parameters specified in this single child allocation will also be passed to the parent scheduler. The example below shows this for the EDF scheduler on the right hand side.

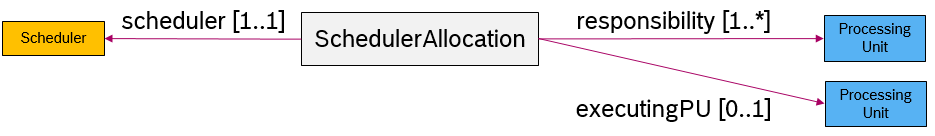

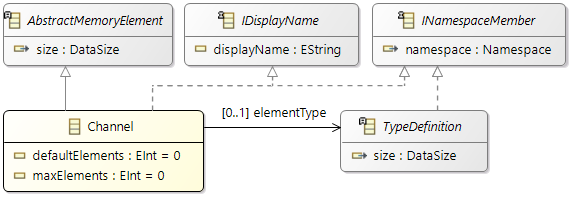

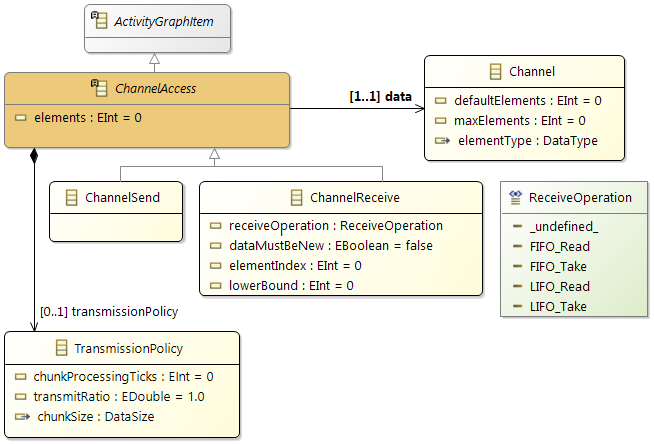

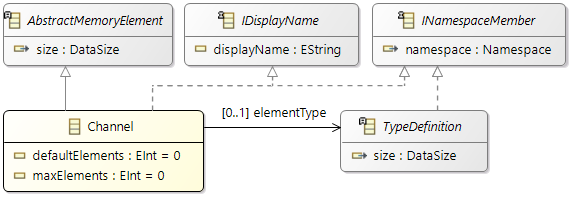

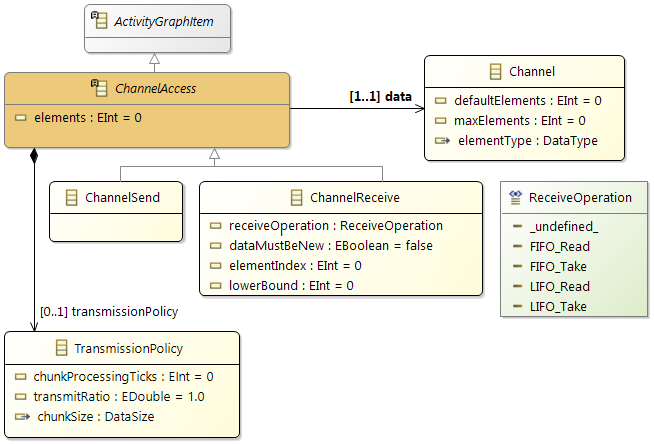

2.2.7 Communication via channels

Channel

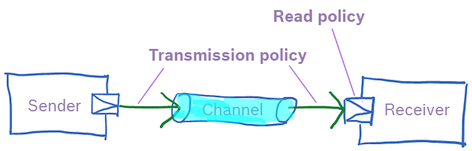

Sender and receiver communicating via a channel by issuing send and receive operations; read policy and transmission policy define communication details.

A channel is specified by three attributes:

-

elementType: the type that is sent to or read from the channel.

-

defaultElements: number of elements initially in the channel (at start-up).

-

maxElements (integer) denoting a buffer limit, that is, the channel depth. In other words, no more than maxElements elements of the given element type may be stored in the channel.

Channel Access

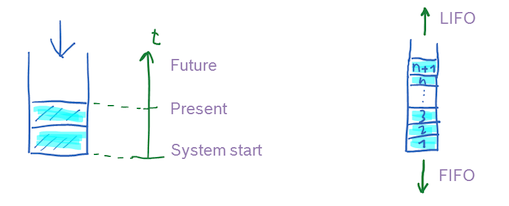

In the basic thinking model, all elements are stored as a sequential collection in the channel.

Sending

A runnable may send elements to a channel by issuing send operations.

The send operation has a single parameter:

-

elements (integer): Number of elements that are written.

Receiving

A runnable may receive elements from a channel by issuing receive operations.

The receive operation is specified with a

receive policy that defines the main behaviour of the operation:

-

LIFO (last-in, first-out) is chosen if processing the last written elements is the primary focus and thereby missing elements is tolerable.

-

FIFO (first-in, first-out) is chosen if every written element needs to be handled, that is, loss of elements is not tolerable.

-

Read will received elements without modifying the channel

-

Take will remove the received elements from the channel

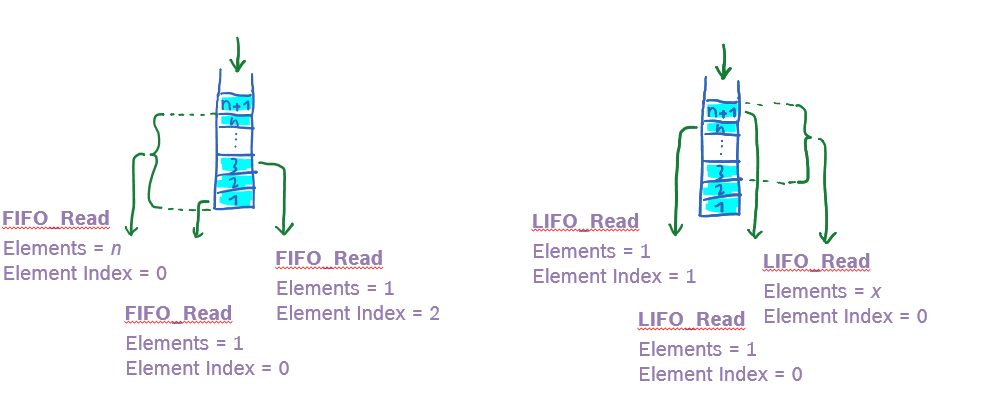

The receive policy defines the direction a receive operation takes effect with LIFO accesses are from top of the sequential collection, while with FIFO accesses are from bottom of the sequential collection-and they define if the receive operation is destructive (take) or non-destructive) read.

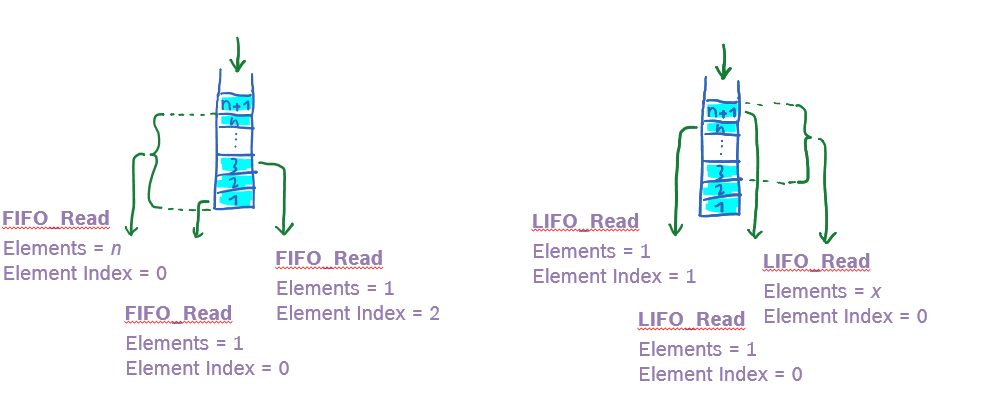

Each operation further has two parameters and two attributes specifying the exact behavior. The two parameters are:

-

elements (integer): Maximum number n of elements that are received.

-

elementIndex (integer): Position (index i) in channel at which the operation is effective. Zero is the default and denotes the oldest (FIFO) or newest element (LIFO) in the channel.

In the following several examples are shown, of how to read or take elements out of a channel with the introduced parameters.

Two attributes further detail the receive operation:

-

lowerBound (integer): Specify the minimum number of elements returned by the operation. The value must be in the range [0,n], with n is the value of the parameter elements. Default value is n.

-

dataMustBeNew (Boolean): Specify if the operation must only return elements that are not previously read by this Runnable. Default value is false.

Transmission Policy

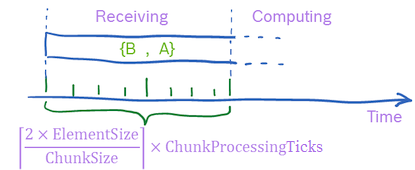

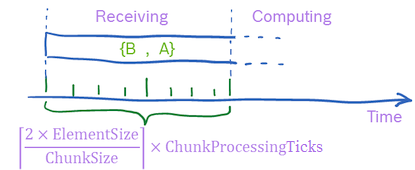

To further specify how elements are accessed by a runnable in terms of computing time, an optional transmission policy may specify details for each receive and send operation. The intention of the transmission policy is to reflect computing demand (time) depending on data.

The transmission policy consists of the following attributes:

-

chunkSize: Size of a part of an element, maximum is the element size.

-

chunkProcessingTicks (integer): Number of ticks that will be executed to process one chunk (algorithmic overhead).

-

transmitRatio (float): Specify the ratio of each element that is actually transmitted by the runnable in percent. Value must be between [0, 1], default value is 1.0.

Example for using transmission policy to detail the receiving phase of a runnable execution. Two elements are received, leading to transmission time as given in the formula. After the receiving phase, the runnable starts with the computing phase.

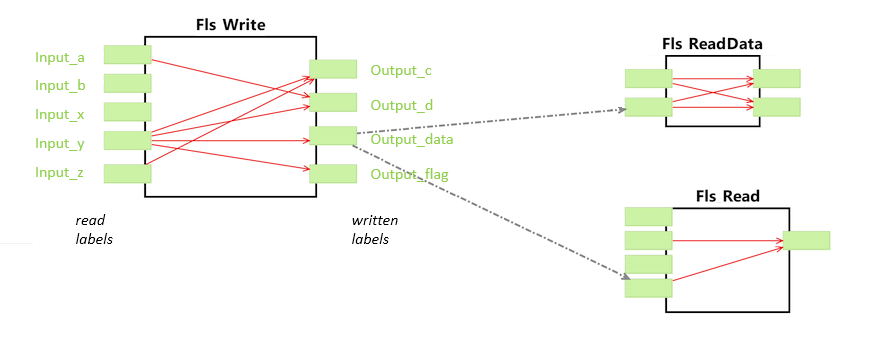

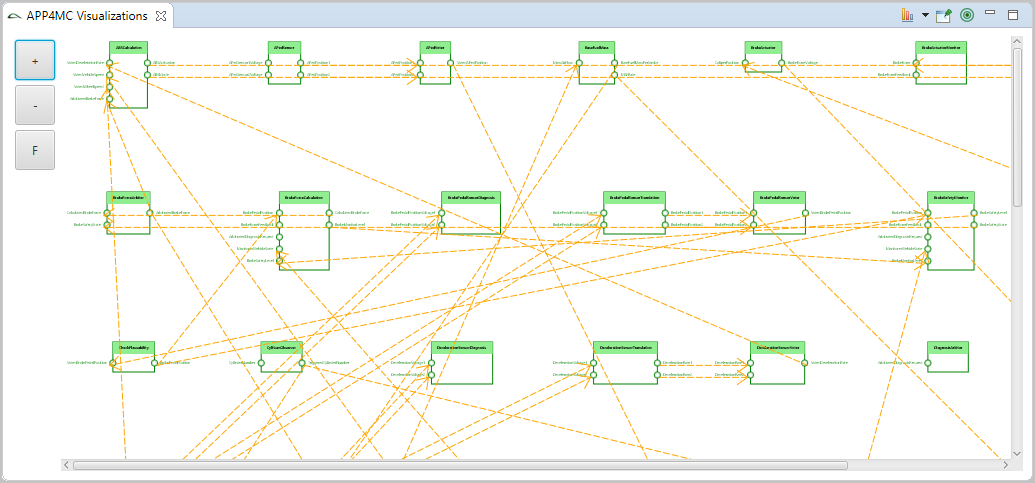

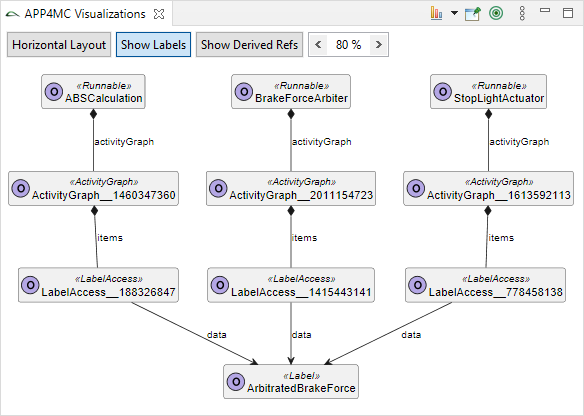

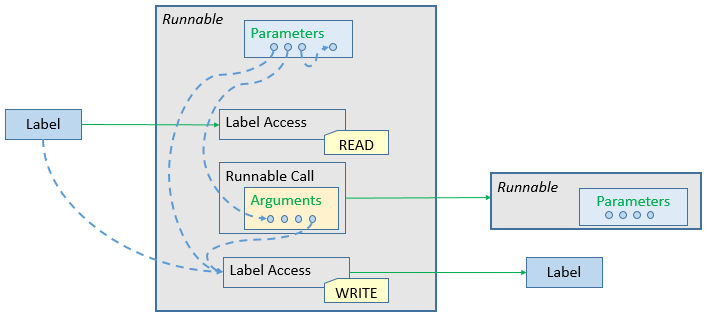

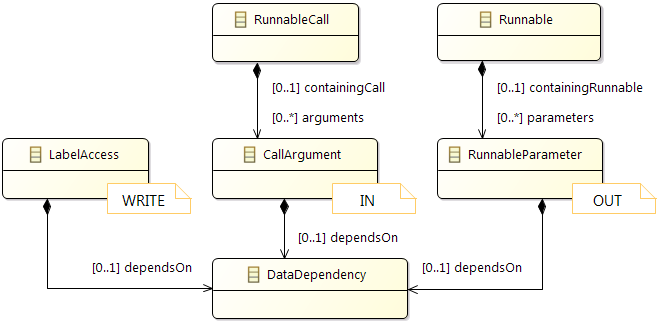

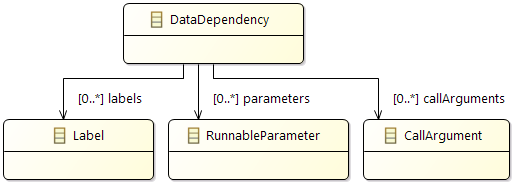

2.2.8 Data Dependencies

Overview

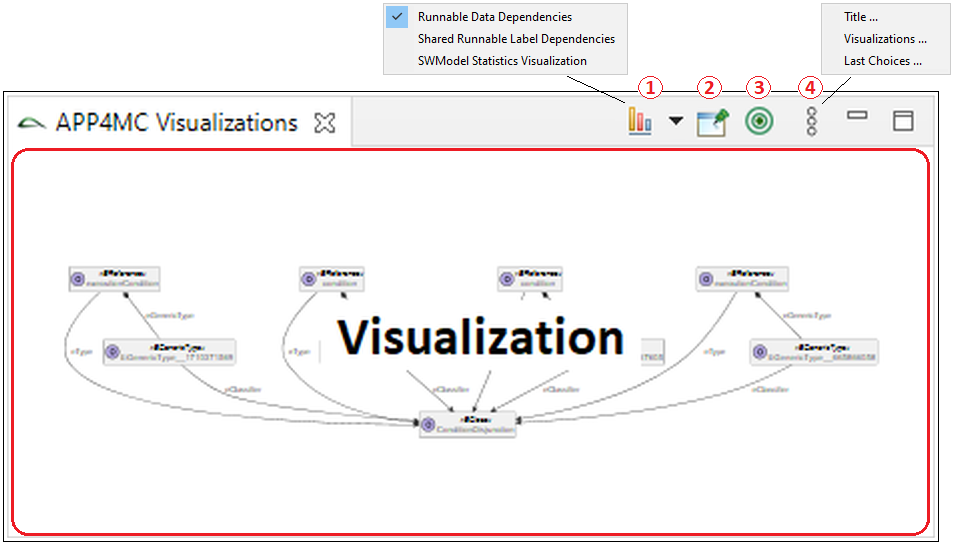

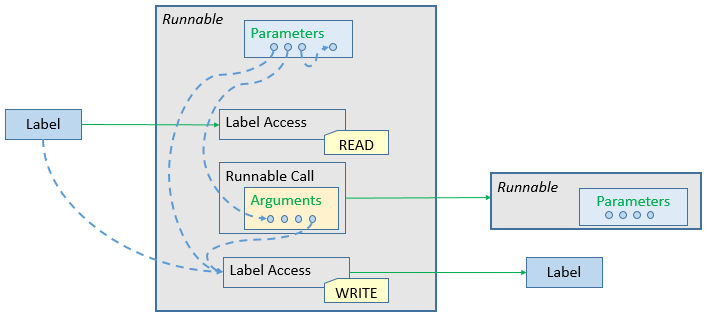

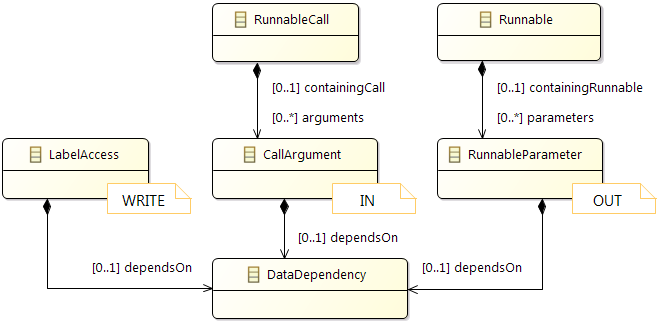

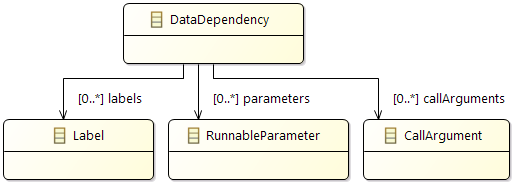

It is possible to specify potential data dependencies for written data. More specifically, it is now possible to annotate at write accesses what other data potentially have influenced the written data. A typical "influence" would be usage of data for computing a written value. As such data often comes from parameters when calling a runnable, it is now also possible to specify runnable parameters in Amalthea and their potential influence on written data.

Semantics of the new attributes in Amalthea is described in detail below. In general, these data dependency extensions are considered as a way to explicitly model details that help for visualization or expert reviews. For use cases such as timing simulation the data dependency extensions are of no importance and should be ignored.

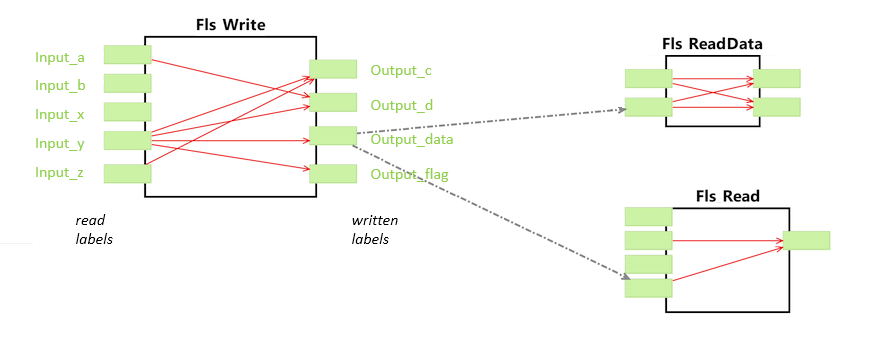

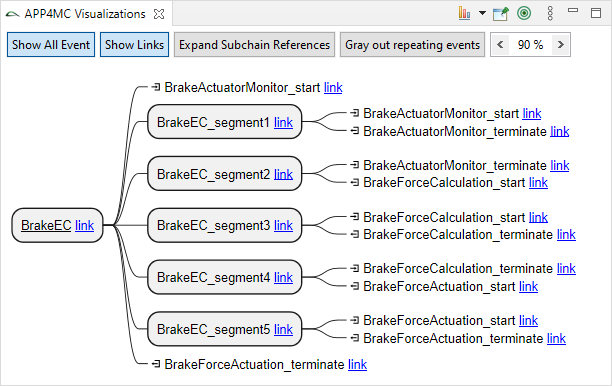

Internal Dataflow

In Embedded Systems, external dataflow is specified with reads and writes to labels, which are visible globally. This is sufficient for describing the inter-runnable communication and other use-cases like memory optimization. Nevertheless, for description of signal flows along an event chain, it is also necessary to specify the internal dataflow so that the connection between the read labels and the written labels in made.

Internal dataflow is specified as dependency of label writes to other labels, parameters of the runnable or event return values of called runnables. With this information, the connection of reads to writes of label can be drawn.

The internal dependencies are typically generated through source code analysis. The analysis parses the code and determines all writes to labels. For each of those positions, a backward slicing is made on the abstract syntax tree to derive all reads that influence this write. This collection is then stored as dependency at the write access.

Based upon this data a developer can now track a signal flow along from the sensor over several other runnables to the actuator. Existing event-chains can be automatically validated to contain a valid flow by checking if the segments containing a read label event and write label event within the same runnable are connected by an internal dependency. Without internal dependencies, this would introduce a huge manual effort. Afterwards the event-chains can be simulated and their end-to-end latencies determined with the usual tools.

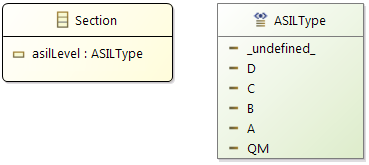

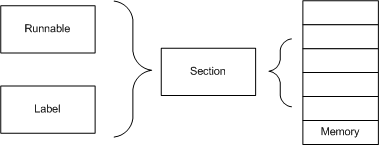

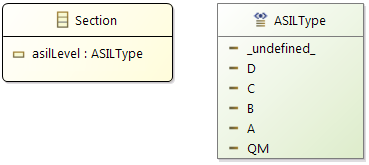

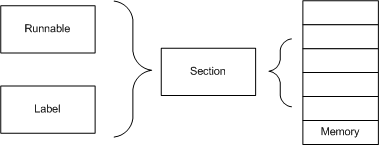

2.2.9 Memory Sections

Purpose

Memory Sections are used for the division of the memory (RAM/ROM) into different blocks and allocate the "software" memory elements (

e.g. Labels), code accordingly inside them.

Each Memory Section has certain specific properties (

e.g. faster access of the elements, storing constant values). By default compiler vendors provide certain Memory Sections (

e.g. .data, .text) and additional Memory Sections can be created based on the project need by enhancing the linker configuration.

Definition

A "Memory Section" is a region in memory (RAM/ROM) and is addressed with a specific name. There can exist multiple "Memory Sections" inside the same Memory (RAM/ROM) but with different names. Memory Section names should be unique across the Memory (RAM/ROM).

Memory Sections can be of two types:

- Virtual Memory Section

- Physical Memory Section

Virtual Memory Section

"Virtual Memory Sections" are defined as a part of data specification and are associated to the corresponding Memory Elements (e.g. Label's) during the development phase of the software. Intention behind associating "Virtual Memory Sections" to Memory elements like Label's is to control their allocation in specific Memory (e.g. Ram1 or Ram2) by linker.

As a part of linker configuration – It is possible to specify if a "Virtual Memory Section"

(e.g. mem.Sec1) can be part of certain Memory

(e.g. Ram1/Ram2/SYSRAM but not Ram3).

Example:

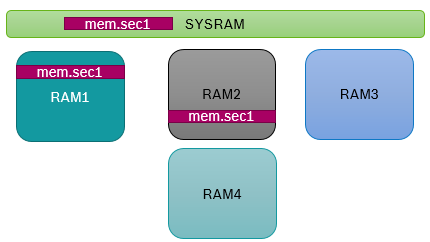

Software should be built for ManyCore ECU – containing 3 Cores

(Core1, Core2, Core3). Following RAMs are associated to the Cores: Ram1 – Core1, Ram2 – Core2, Ram3 – Core3, and also there is SYSRAM.

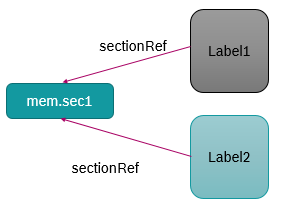

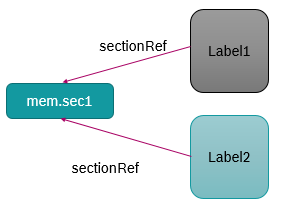

Virtual Memory Section : mem.sec1

(is defined as part of data specification) is associated to Label1 and Label2.

In Linker configuration it is specified that mem.sec1 can be allocated only in Ram1 or Ram2.

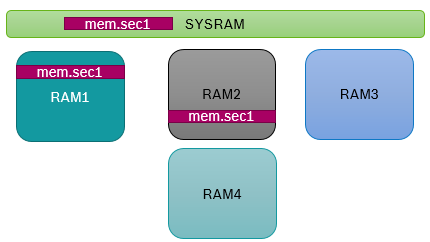

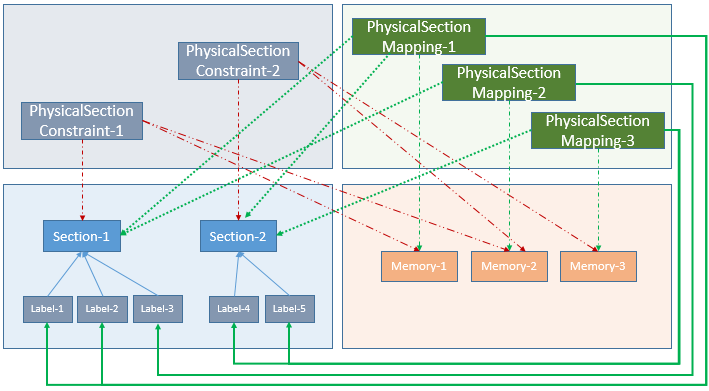

Below diagram represents the

linker configuration content

- w.r.t. possibility for physical allocation of mem.sec1 in various memories .

Based on the above configuration – Linker will allocate Label1, Label2 either in Ram1/Ram2/SYSRAM but not in Ram3/Ram4.

Physical Memory Section

"Physical Memory Sections" are generated by linker. The linker allocates various memory elements (e.g. Label's) inside "Physical Memory Sections".

Each "Physical Memory Section" has following properties:

- Name – It will be unique across each Memory

- Start and End address – This represents the size of "Physical Memory Section"

- Associated Physical Memory

(e.g. Ram1 or Ram2)

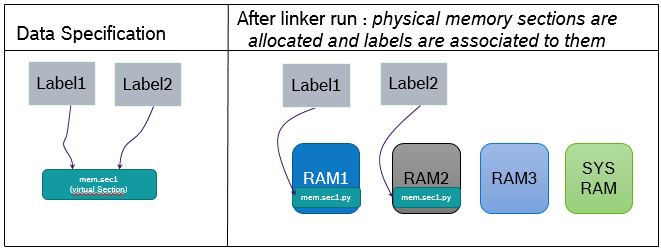

Example:

There can exist mem.sec1.py inside Ram1 and also in Ram2. But these are physically two different elements as they are associated to different memories (Ram1 and Ram2) and also they have different "start and end address".

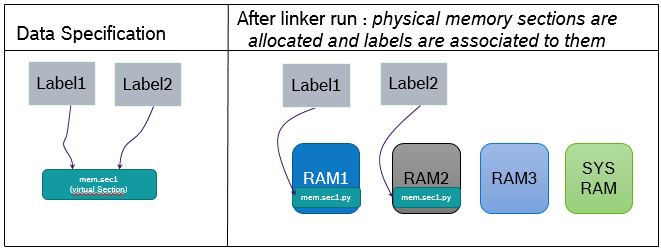

Below diagram represents the information w.r.t. virtual memory sections

(defined in data specification and associated to memory elements) and physical memory sections

(generated after linker run).

Modeling Memory Section information in AMALTHEA

- As described in the above concept section:

- Virtual memory sections are used:

- To specify constraints for creation of Physical memory sections by linker

- To control allocation of data elements (e.g. Labels) in a specific memory

(e.g. Ram1/Ram2/SYSRAM)

- Physical memory sections are containing the data elements after linker run

(representing the software to be flashed into ECU)

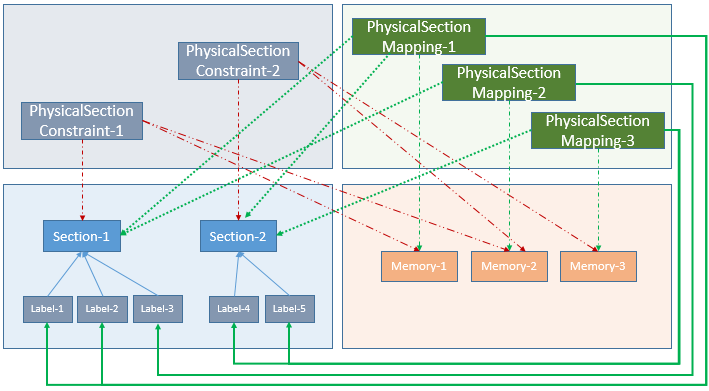

Below figure represents the modeling of "Memory Section" (both virtual and physical) information in AMALTHEA model:

Below are equivalent elements of AMALTHEA model used for modeling the Memory Section information:

-

Section

- This element is equivalent to Virtual Memory Section defined during the SW development phase.

- As a part of data specification defined in the sw-development phase, a Section object

(with specific name) is associated to Label and Runnable elements.

-

PhysicalSectionConstraint

- This element is equivalent to the constraint specified in the linker configuration file, which is used to instruct linker for the allocation of Physical Memory Sections in specified Memories.

- PhysicalSectionContraint is used to specify the combination of Virtual Memory Section and Memories

(which can be considered by linker for generation of Physical Memory Sections).

Example:

PhysicalSectionConstraint-1 is specifying following relation "Section-1" <--> "Memory-1", "Memory-2". This means that the corresponding Physical Memory Section for "Section-1" can be generated by linker in "Memory-1" or in "Memory-2" or in both.

-

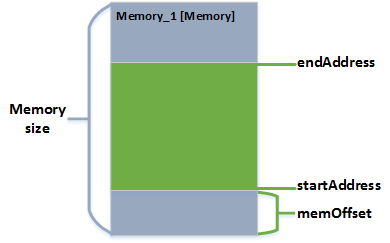

PhysicalSectionMapping

- This element is equivalent to Physical Memory Section generated during the linker run.

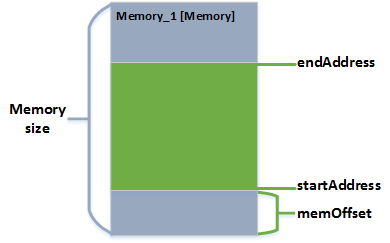

- Each PhysicalSectionMapping element:

- Contains the Virtual Memory Section

(e.g. Section-1) which is the source.

- is associated to a specific Memory and it contains the start and end memory address

(difference of start and end address represents the size of Physical Memory Section).

- contains the data elements

(i.e. Labels, Runnables part of the final software).

Note: There is also a possibility to associate multiple Virtual Memory Section's as linker has a concept of grouping Virtual Memory Sections while generation of Physical Memory Section.

Example:

For the same Virtual Memory Section

(e.g. Section-1), linker can generate multiple Physical Memory Sections in different Memories

(e.g. PhysicalSectionMapping-1, PhysicalSectionMapping-2). Each PhysicalSectionMapping element is an individual entity as it has a separate start and end memory address.

2.3 Examples

2.3.1 Modeling Example 1

General information

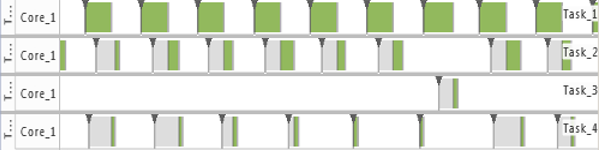

Modeling Example 1 describes a simple system consisting of 4 Tasks, which is running on a dual core processor.

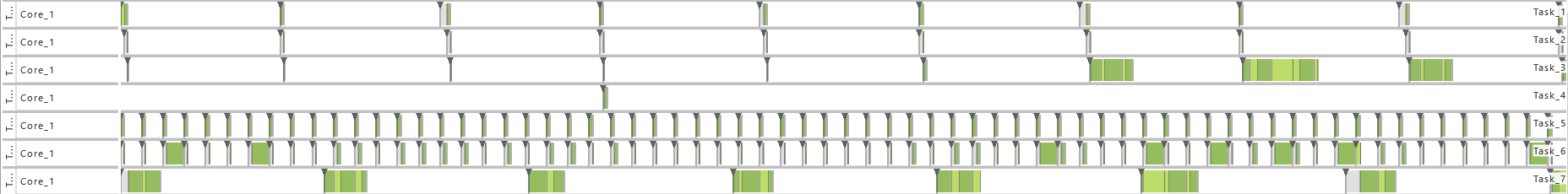

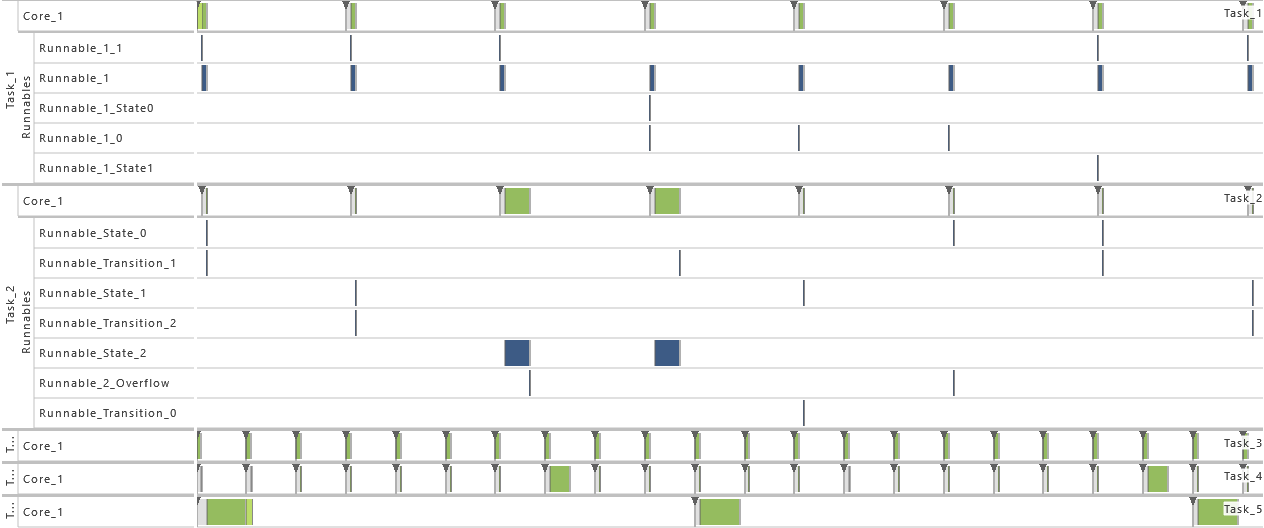

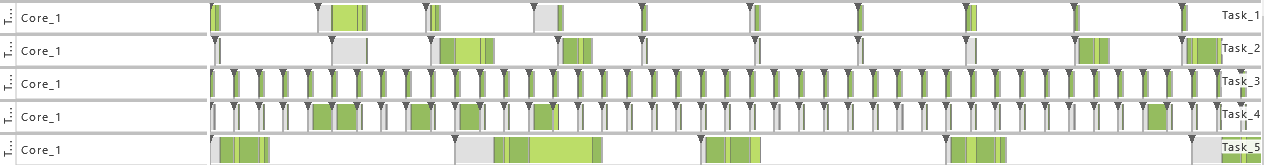

The following figure shows the execution footprint in a Gantt chart:

In the following sections, the individual parts of the AMALTHEA model for Modeling Example 1 are presented followed by a short description of its elements.

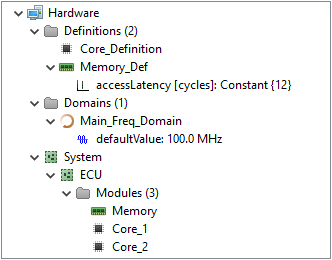

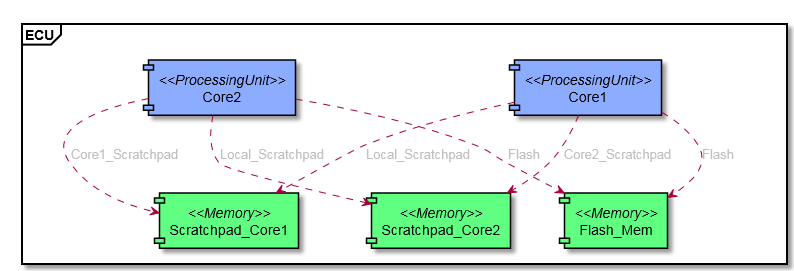

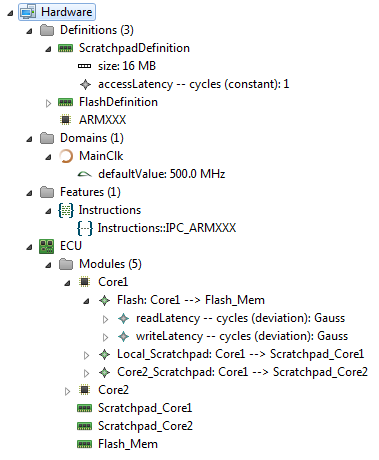

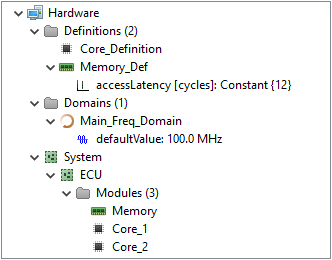

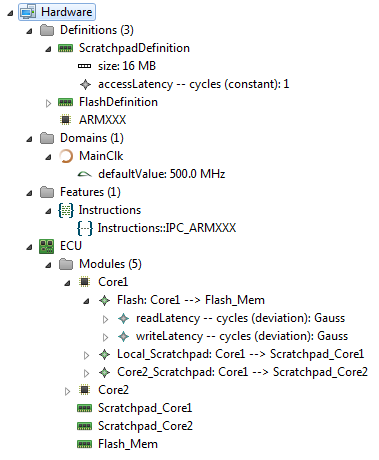

Hardware Model

The hardware model of Modeling Example 1 consists as already mentioned of a dual core processor.

The following gives a structural overview on the modeled elements.

There, the two cores, 'Core_1' and 'Core_2', have a static processing frequency of 100 MHz each, which is specified by the corresponding quartz oscillator 'Quartz'.

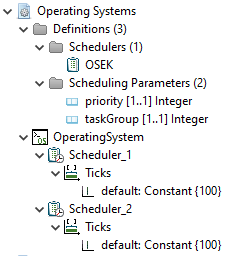

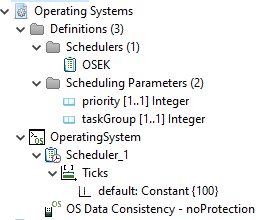

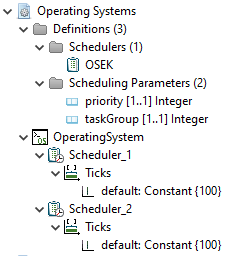

Operating System Model

The operating system (OS) model defines in case of Modeling Example 1 only the needed Scheduler.

Since a dual core processor has to be managed, two schedulers are modeled correspondingly.

In addition to the scheduler definition used by the scheduler, in this case OSEK, a delay of 100 ticks is set, which represents the presumed time the scheduler needs for context switches.

| Scheduler |

Type |

Algorithm |

Delay |

|

Scheduler_1

|

Constant |

OSEK |

100 ticks |

|

Scheduler_2

|

Constant |

OSEK |

100 ticks |

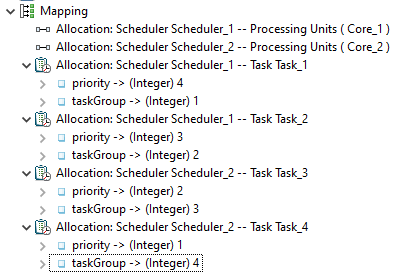

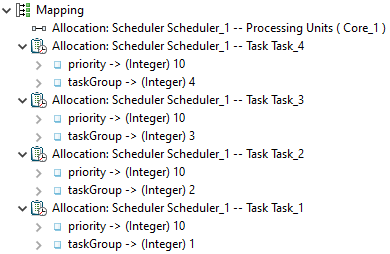

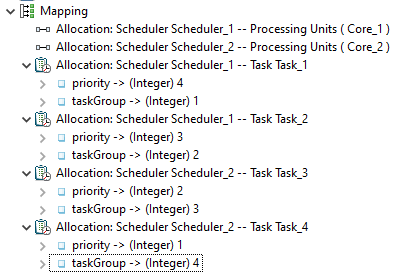

Mapping Model

The mapping model defines allocations between different model parts.

On the one hand, this is the allocation of processes to a scheduler. In case of Example 1, 'Task_1' and 'Task_2' are managed by 'Scheduler_1', while the other tasks are managed by 'Scheduler_2'. Scheduler specific parameters are set here, too. For the OSEK scheduler these are 'priority' and 'taskGroup'. Each task has a priority assigned according its deadline, meaning the one with the shortest deadline, 'Task_1', has the highest priority, and so on.

On the other hand the allocation of cores to a scheduler is set. For Modeling Example 1 two local schedulers were modeled. As a consequence, each scheduler manages one of the processing cores.

A comprehension of the modeled properties can be found in the following tables:

Executable Allocation with Scheduling Parameters

| Scheduler |

Process |

priority |

taskGroup |

| Scheduler_1 |

Task_1 |

4 |

1 |

| Scheduler_1 |

Task_2 |

3 |

2 |

| Scheduler_2 |

Task_3 |

2 |

3 |

| Scheduler_2 |

Task_4 |

1 |

4 |

Core Allocation

| Scheduler |

Core |

| Scheduler_1 |

Core_1 |

| Scheduler_2 |

Core_2 |

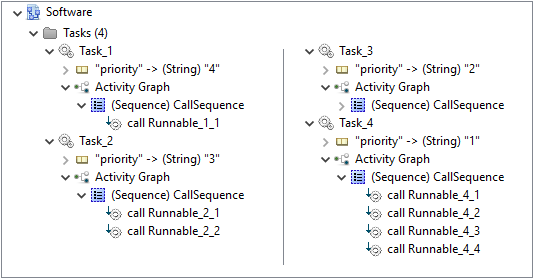

Software Model

Tasks

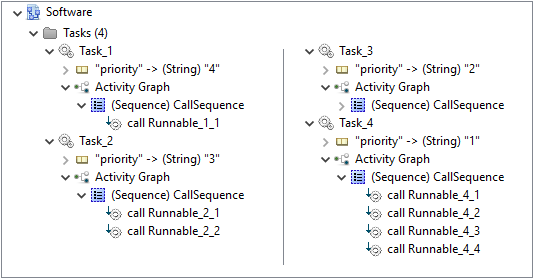

As already mentioned above, the software model of Modeling Example 1 consists exactly of four tasks, named 'Task_1' to 'Task_4'. Each task is preemptive and also calls a definitive number of Runnables in a sequential order.

A comprehension of the modeled properties can be found in the following table:

| Task |

Preemption |

MTA* |

Deadline |

Calls |

|

Task_1

|

Preemptive |

1 |

75 ms |

1) Runnable_1_1 |

|

Task_2

|

Preemptive |

1 |

115 ms |

1) Runnable_2_1 |

| 2) Runnable_2_2 |

|

Task_3

|

Preemptive |

1 |

300 ms |

1) Runnable_3_1 |

| 2) Runnable_3_2 |

| 3) Runnable_3_3 |

|

Task_4

|

Preemptive |

1 |

960 ms |

1) Runnable_4_1 |

| 2) Runnable_4_2 |

| 3) Runnable_4_3 |

| 4) Runnable_4_4 |

*MTA = Multiple Task Activation Limit

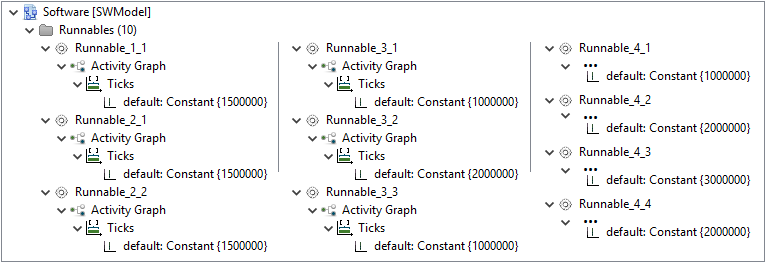

Runnables

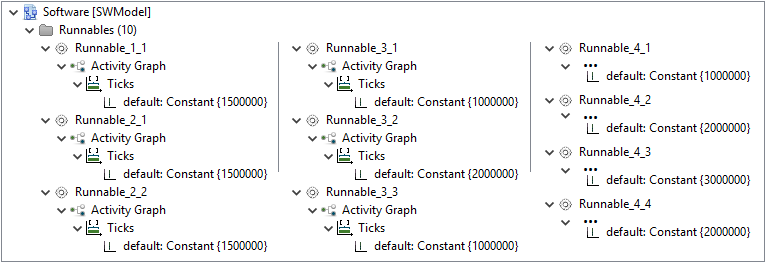

In addition to the task, the software model also contains a definition of Runnables.

For Modeling Example 1, ten individual Runnables are defined.

The only function of those in this example is to consume processing resources.

Therefore, for each Runnable a constant number of instruction cycles is stated.

A comprehension of the modeled properties can be found in the following table:

| Runnable |

InstructionCycles |

| Runnable_1_1 |

1500000 |

| Runnable_2_1 |

1500000 |

| Runnable_2_2 |

1500000 |

| Runnable_3_1 |

1000000 |

| Runnable_3_2 |

2000000 |

| Runnable_3_3 |

1000000 |

| Runnable_4_1 |

1000000 |

| Runnable_4_2 |

2000000 |

| Runnable_4_3 |

3000000 |

| Runnable_4_4 |

2000000 |

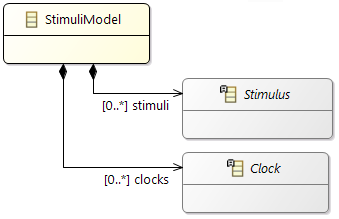

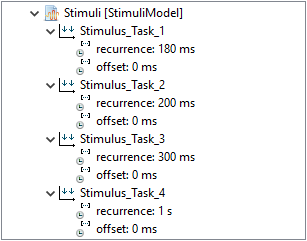

Stimuli Model

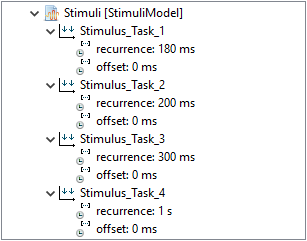

The stimulation model defines the activations of tasks.

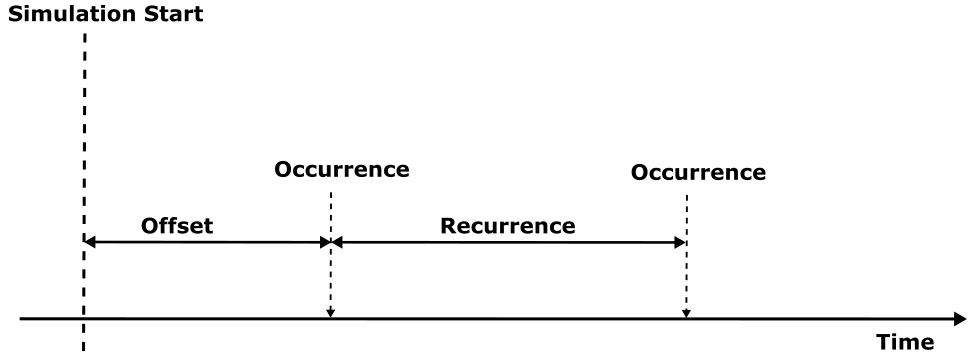

Since the four tasks of Modeling Example 1 are activated periodically, four stimuli according their recurrence are modeled.

A comprehension of the modeled properties can be found in the following table:

| Stimulus |

Type |

Offset |

Recurrence |

| Stimulus_Task_1 |

Periodic |

0 ms |

180 ms |

| Stimulus_Task_2 |

Periodic |

0 ms |

200 ms |

| Stimulus_Task_3 |

Periodic |

0 ms |

300 ms |

| Stimulus_Task_4 |

Periodic |

0 ms |

1 s |

2.3.2 Modeling Example 2

General information

Modeling Example 2 describes a simple system consisting of 4 Tasks, which is running on a single core processor.

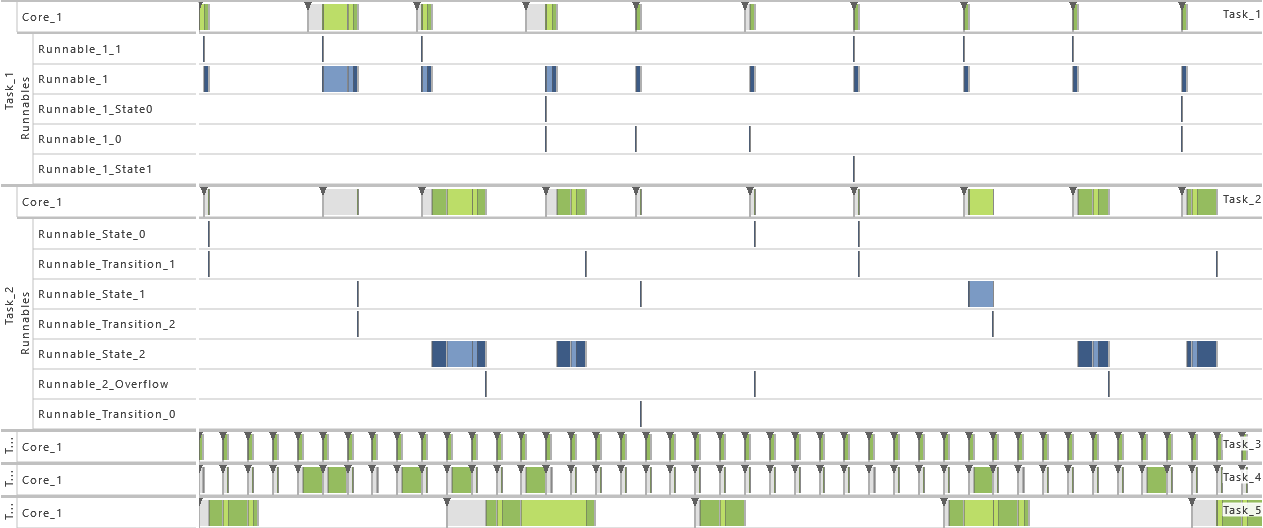

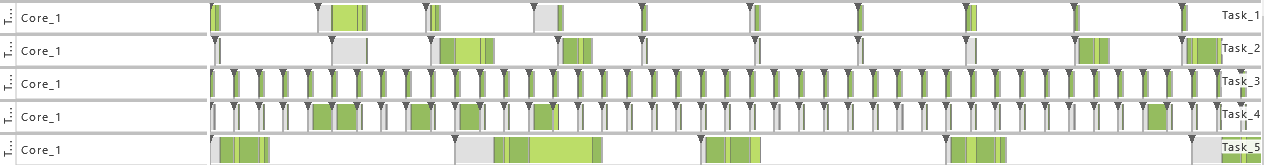

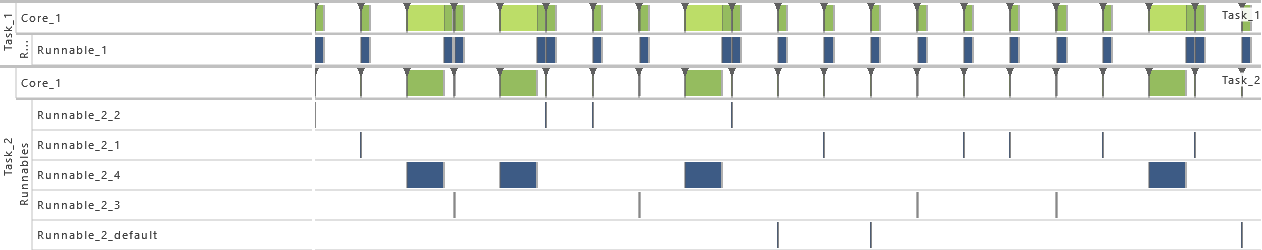

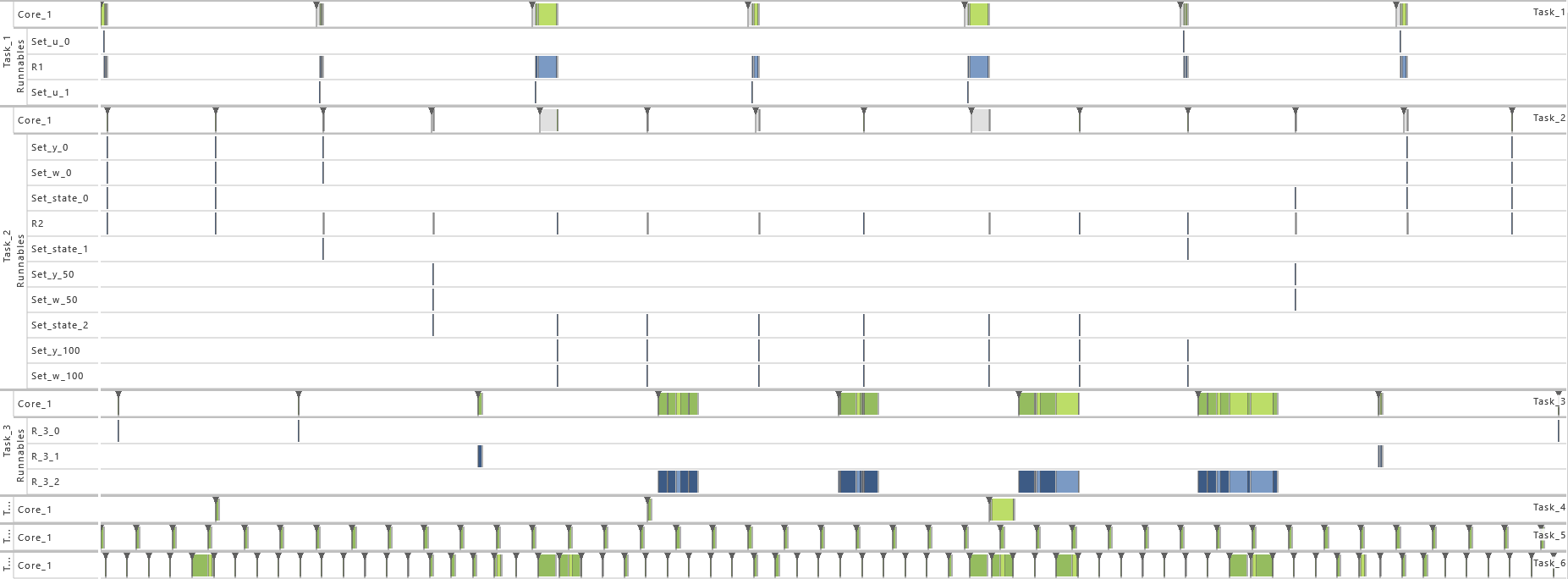

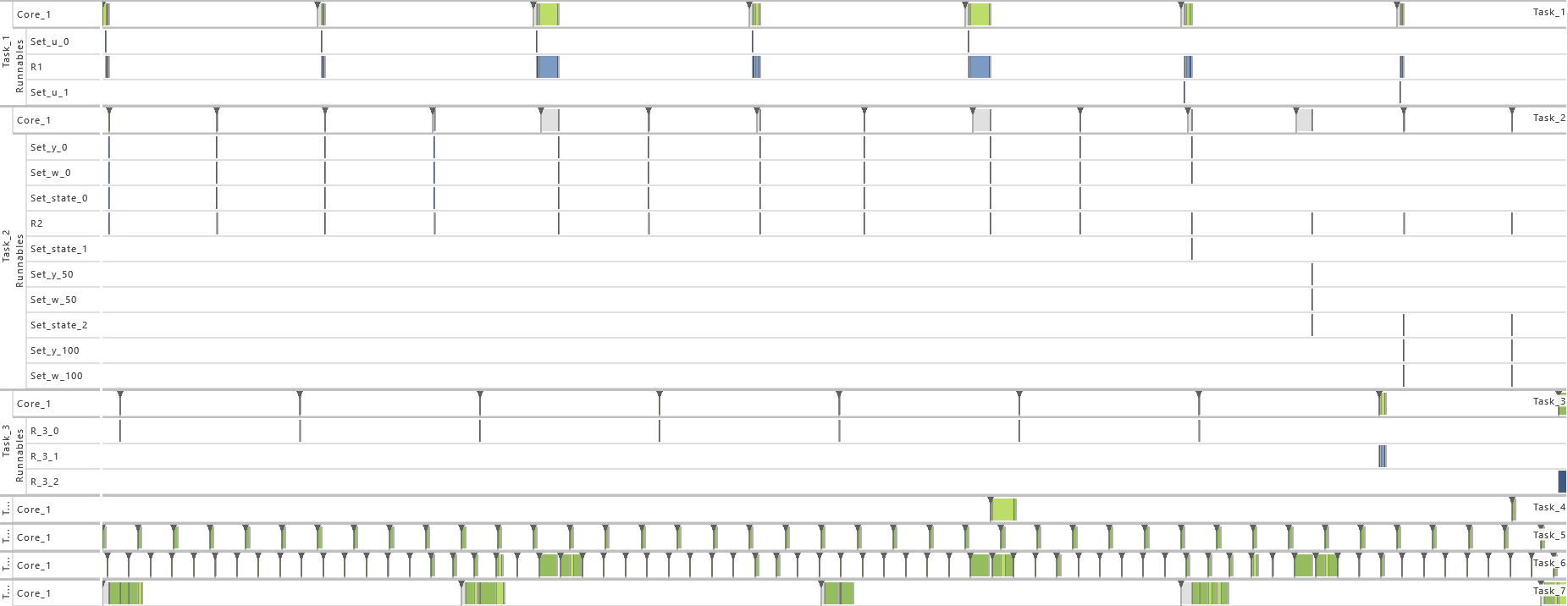

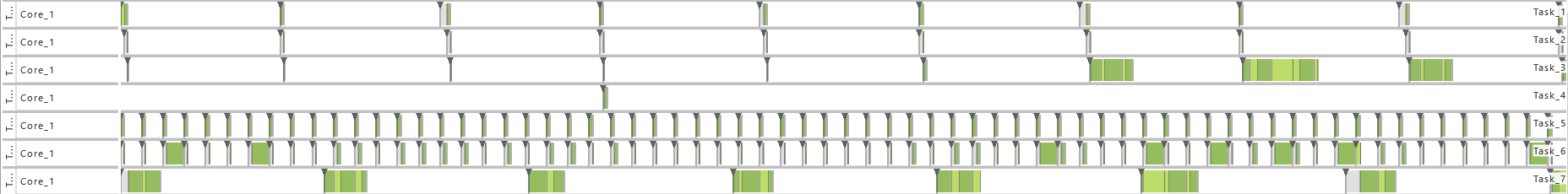

The following figure shows the execution footprint in a Gantt chart:

In the following sections, the individual parts of the AMALTHEA model for Modeling Example 2 are presented followed by a short description of its elements.

Hardware Model

The hardware model of Modeling Example 2 consists as already mentioned of a single core processor.

The following gives a structural overview on the modeled elements.

There, the core, 'Core_1' , has a static processing frequency of 600 MHz each, which is specified by the corresponding quartz oscillator 'Quartz_1'.

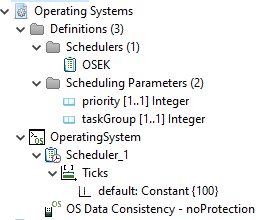

Operating System Model

The operating system (OS) model defines in case of Modeling Example 2 only the needed Scheduler.

Since only a single core has to be managed, a single scheduler is modeled correspondingly.

In addition to the scheduler definition used by the scheduler, in this case OSEK, a delay of 100 ticks is set, which represents the presumed time the scheduler needs for context switches.

| Scheduler |

Type |

Algorithm |

Delay |

|

Scheduler_1

|

Constant |

OSEK |

100 ticks |

Mapping Model

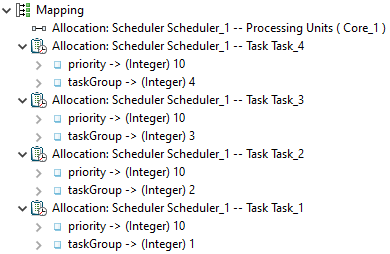

The mapping model defines allocations between different model parts.

On the one hand, this is the allocation of processes to a scheduler. Since there is only one scheduler available in the system, all four tasks are mapped to 'Scheduler_1'. Scheduler specific parameters are set here, too. For the OSEK scheduler these are 'priority' and 'taskGroup'. All tasks have assigned the same priority (10) to get a cooperative scheduling.

On the other hand the allocation of cores to a scheduler is set. As a consequence, the scheduler manages the only available processing core.

A comprehension of the modeled properties can be found in the following tables:

Executable Allocation with Scheduling Parameters

| Scheduler |

Process |

priority |

taskGroup |

| Scheduler_1 |

Task_1 |

10 |

1 |

| Scheduler_1 |

Task_2 |

10 |

2 |

| Scheduler_1 |

Task_3 |

10 |

3 |

| Scheduler_1 |

Task_4 |

10 |

4 |

Core Allocation

| Scheduler |

Core |

| Scheduler_1 |

Core_1 |

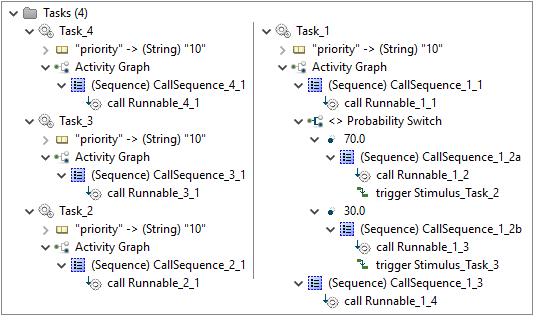

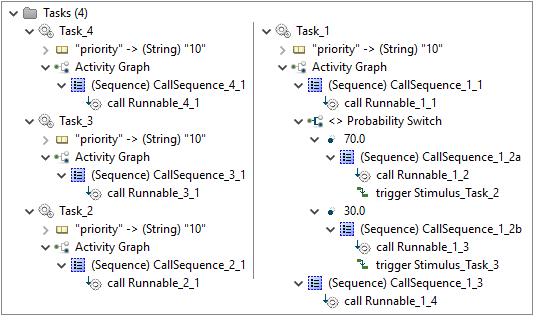

Software Model

Tasks

As already mentioned above, the software model of Modeling Example 2 consists exactly of four tasks, named 'Task_1' to 'Task_4'. 'Task_2' to'Task_4' call a definitive number of Runnables in a sequential order. 'Task_1' instead contains a call graph that models two different possible execution sequences. In 70% of the cases the sequence 'Runnable_1_1', 'Runnable_1_2', 'Task_2', 'Runnable_1_4' is called, while in the remaining 30% the sequence 'Runnable_1_1', 'Runnable_1_3', 'Task_3', 'Runnable_1_4' is called. As it can be seen, the call graph of 'Task_1' contains also interprocess activations, which activate other tasks.

A comprehension of the modeled properties can be found in the following table:

| Task |

Preemption |

MTA* |

Deadline |

Calls |

|

Task_1

|

Preemptive |

3 |

25 ms |

1.1) Runnable_1_1 |

| 1.2) Runnable_1_2 |

| 1.3) Task_2 |

| 1.4) Runnable_1_4 |

| 2.1) Runnable_1_1 |

| 2.2) Runnable_1_3 |

| 2.3) Task_3 |

| 2.4) Runnable_1_4 |

|

Task_2

|

Preemptive |

3 |

25 ms |

1) Runnable_2_1 |

|

Task_3

|

Preemptive |

3 |

25 ms |

1) Runnable_3_1 |

|

Task_4

|

Preemptive |

3 |

25 ms |

1) Runnable_4_1 |

*MTA = Multiple Task Activation Limit

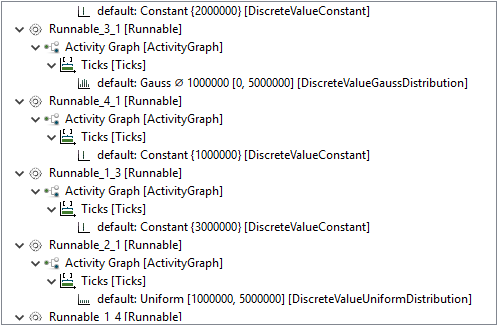

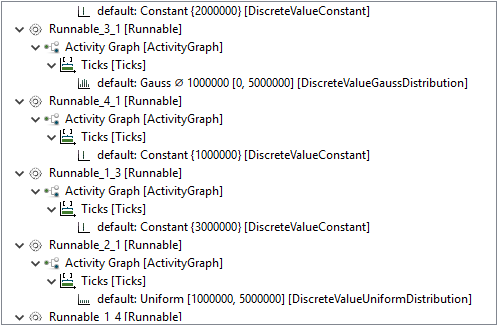

Runnables

In addition to the task, the software model also contains a definition of Runnables.

For Modeling Example 2, seven individual Runnables are defined.

The only function of those in this example is to consume processing resources.

Therefore, for each Runnable a number of instruction cycles is stated.

The number of instruction cycles is thereby either constant or defined by a statistical distribution.

A comprehension of the modeled properties can be found in the following table:

| Runnable |

Type |

Instructions |

|

Runnable_1_1

|

Constant |

1000000 |

|

Runnable_1_2

|

Constant |

2000000 |

|

Runnable_1_3

|

Constant |

3000000 |

|

Runnable_1_4

|

Constant |

4000000 |

|

Runnable_2_1

|

Uniform Distribution |

1000000 |

| 5000000 |

|

Runnable_3_1

|

Gauss Distribution |

mean: 1000000 |

| sd: 50000 |

| upper: 5000000 |

|

Runnable_4_1

|

Constant |

4000000 |

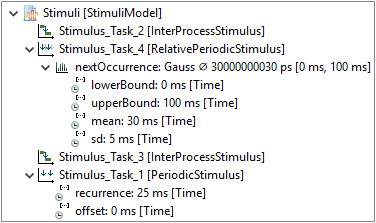

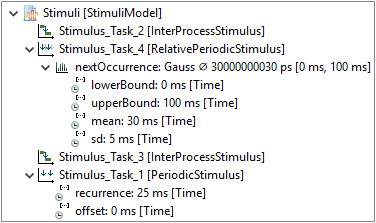

Stimulation Model

The stimulation model defines the activations of tasks.

'Task_1' is activated periodically by 'Stimulus_Task_1'

'Stimulus_Task_2' and 'Stimulus_Task_3' represent the inter-process activations for the corresponding tasks.

'Task_4' finally is activated sporadically following a Gauss distribution.

A comprehension of the modeled properties can be found in the following table:

| Stimulus |

Type |

Parameters |

|

Stimulus_Task_1

|

Periodic |

offset: 0 ms |

| recurrence: 25 ms |

|

Stimulus_Task_2

|

Inter-Process |

|

|

Stimulus_Task_3

|

Inter-Process |

|

|

Stimulus_Task_4

|

Sporadic (Gauss) |

mean: 30 ms |

| sd: 5 ms |

| upper: 100 ms |

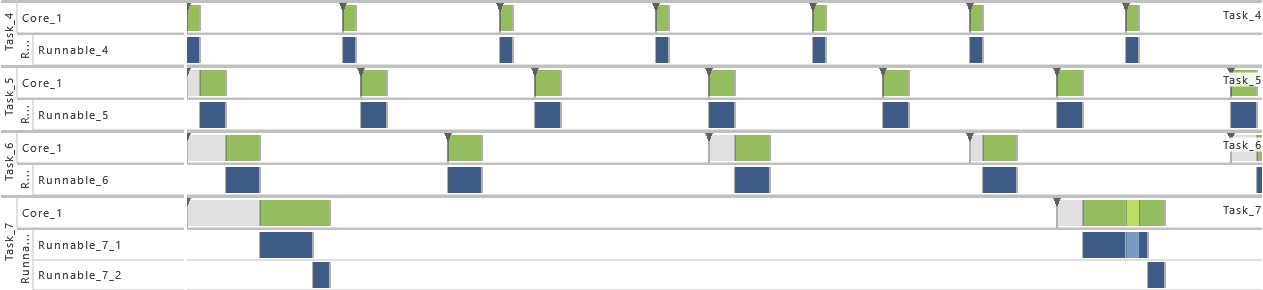

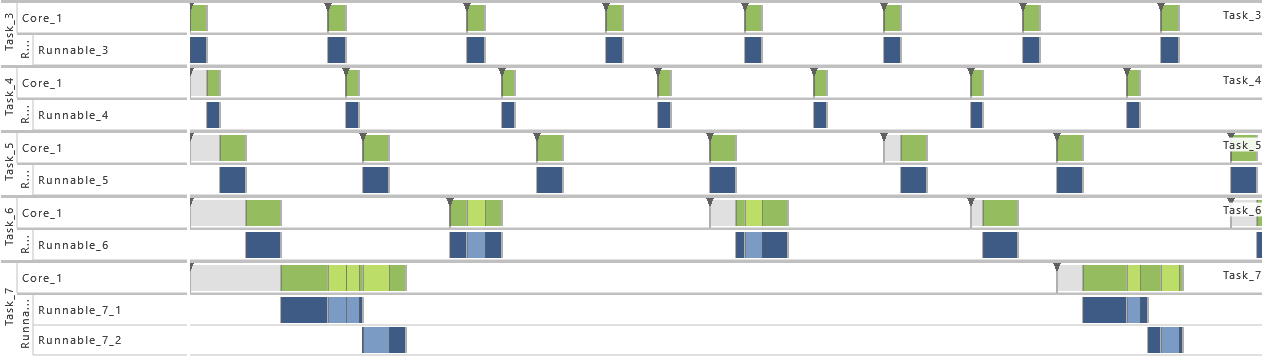

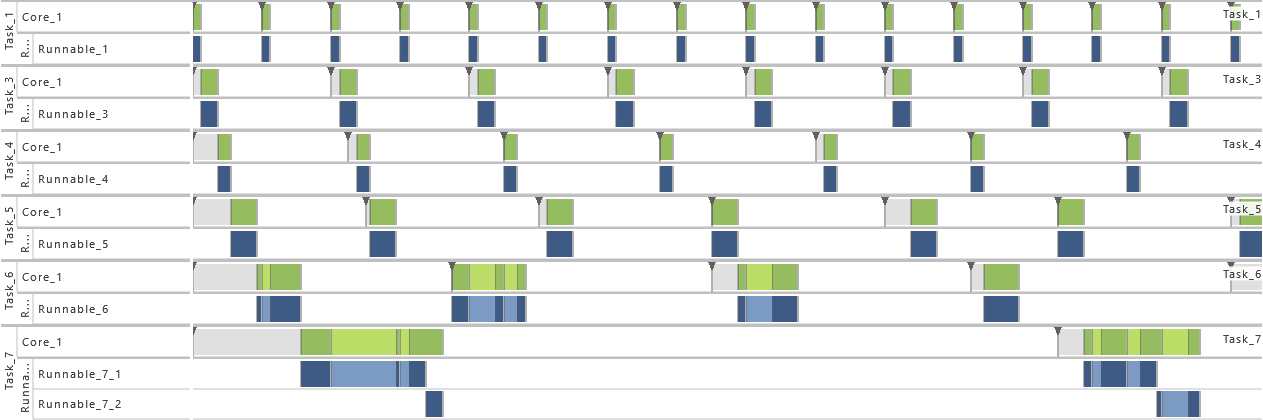

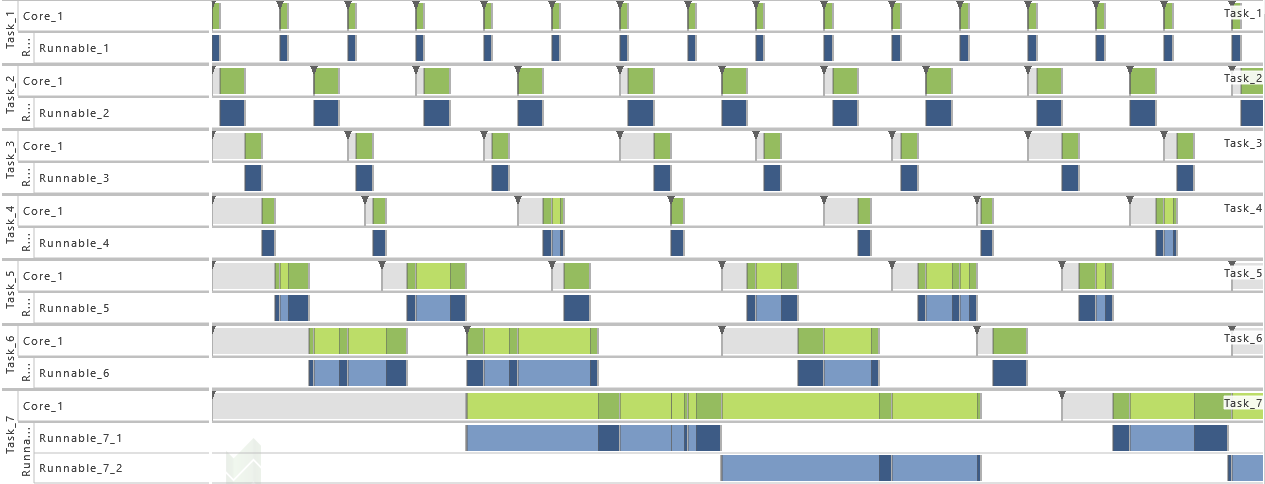

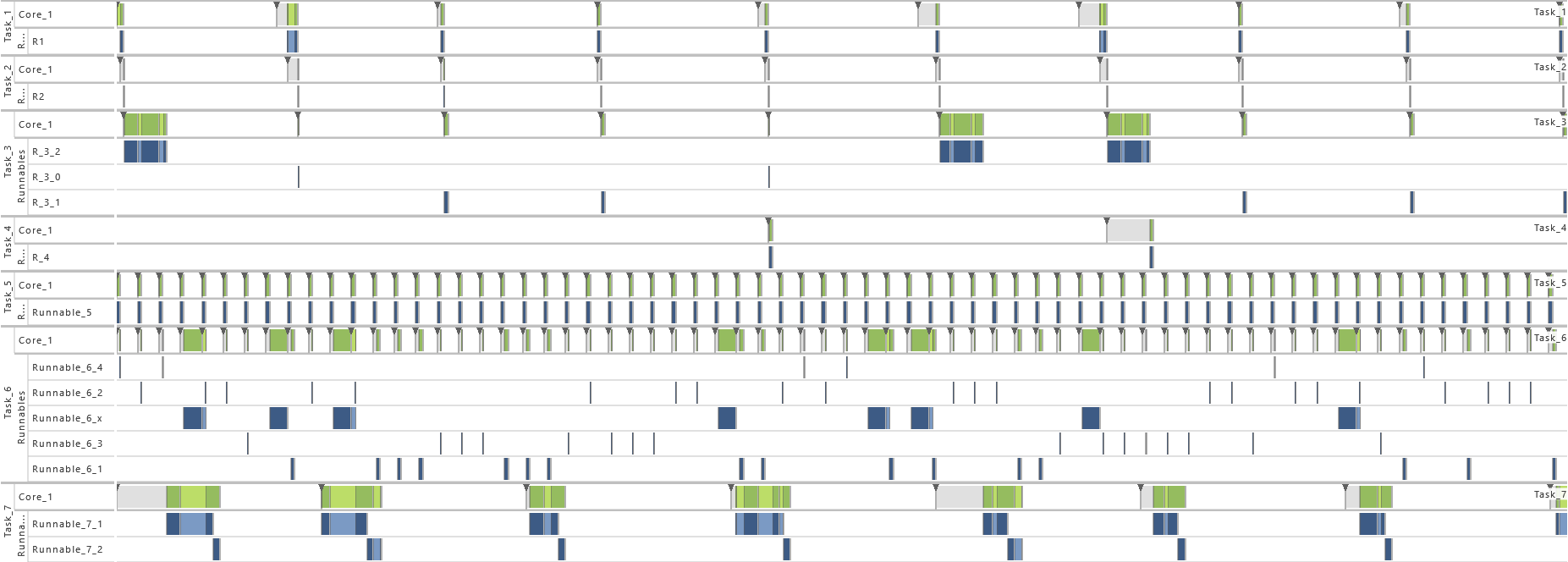

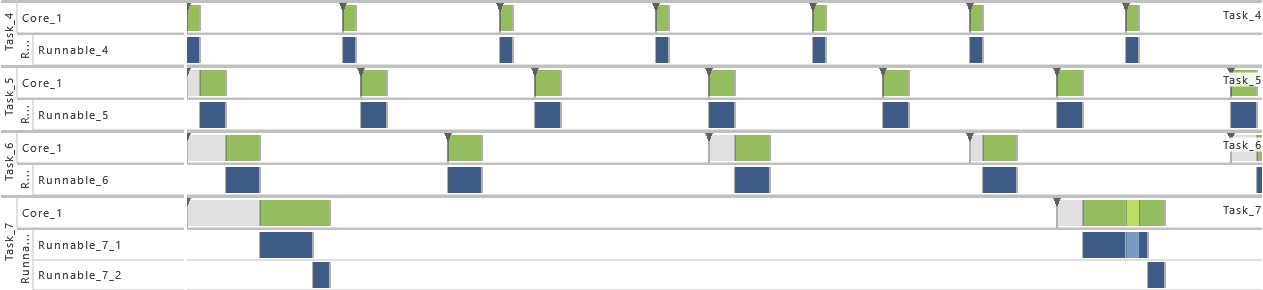

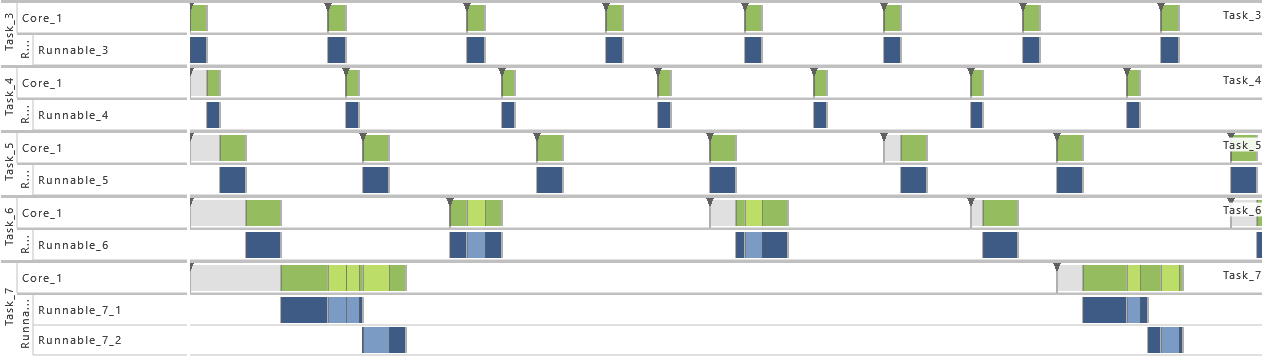

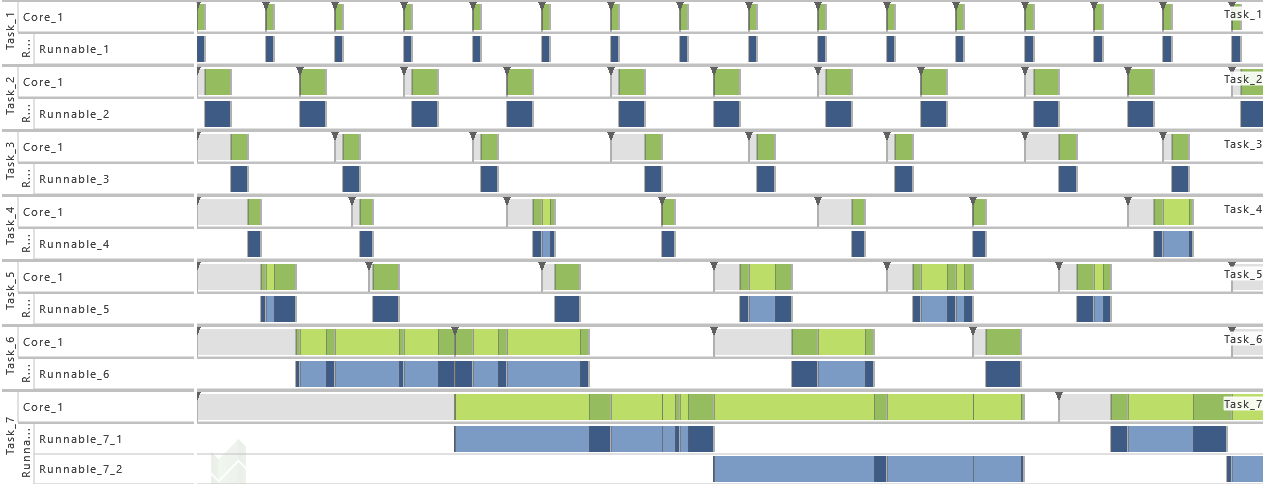

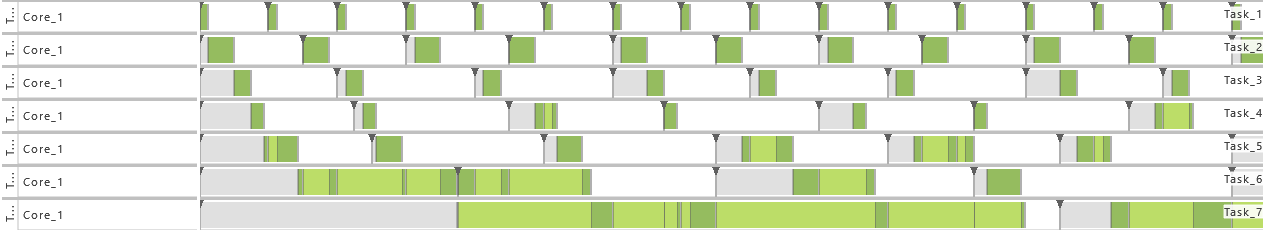

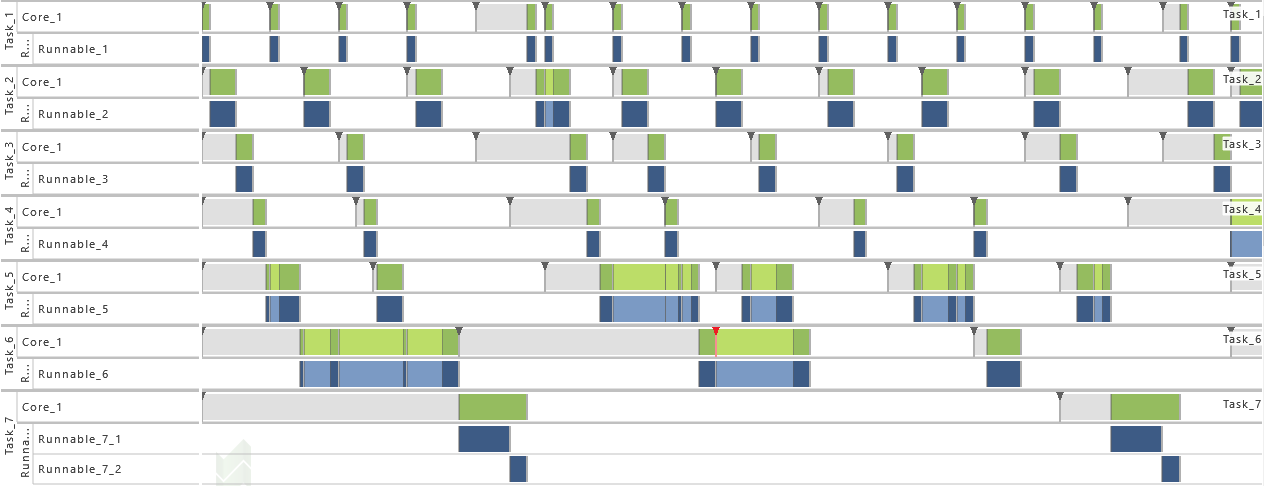

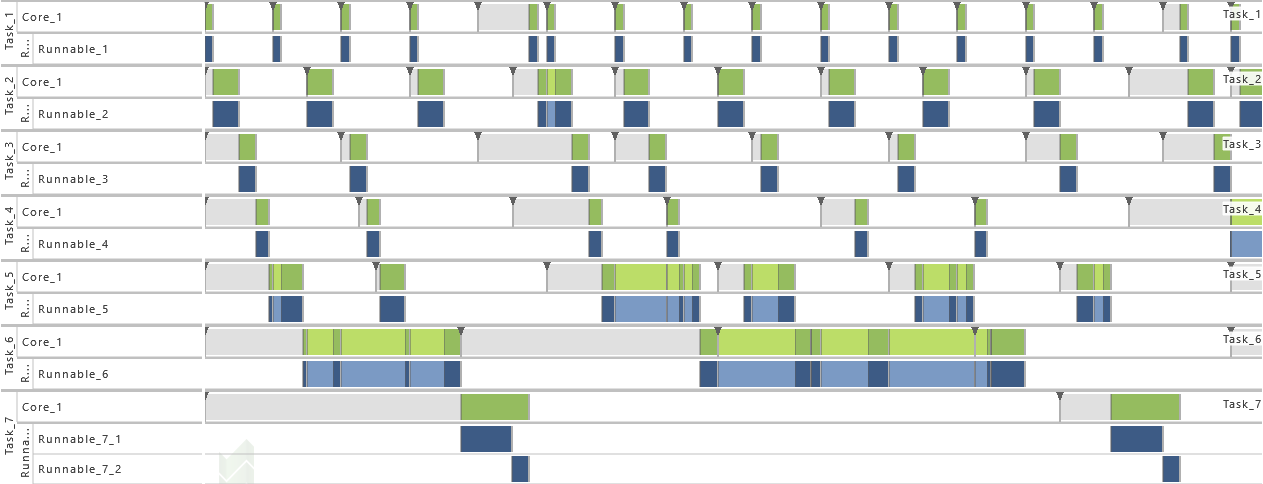

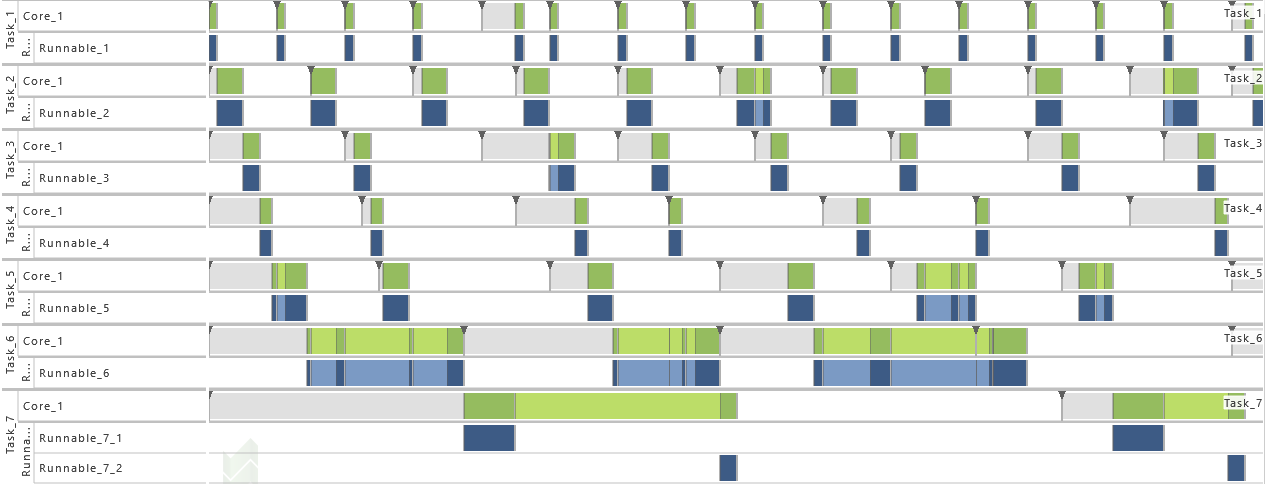

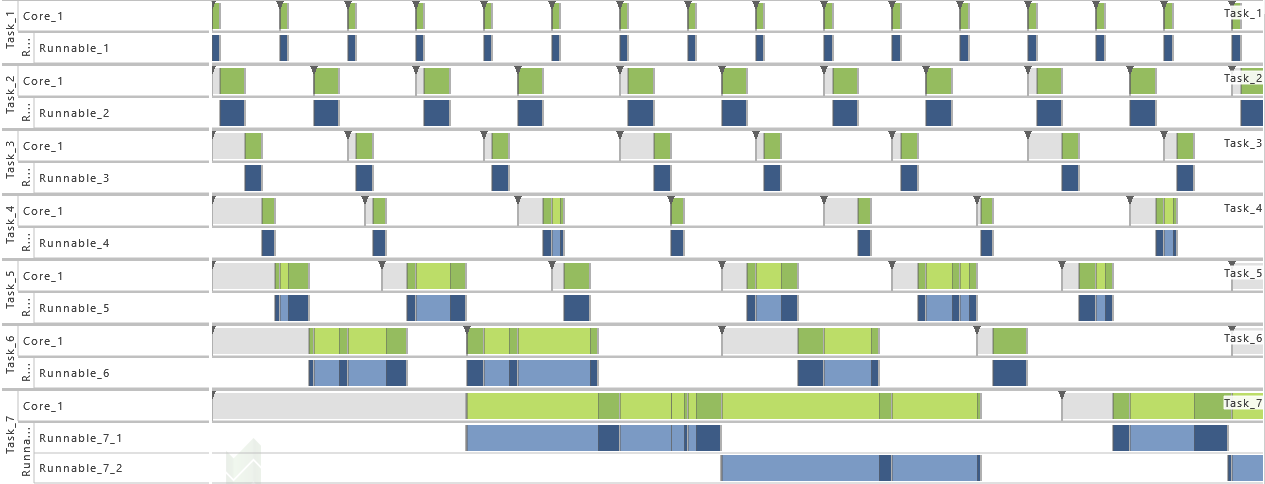

2.3.3 Modeling Example "Purely Periodic without Communication"

This system architecture pattern consists of a task set, where each task is activated periodically and no data accesses are performed. The execution time for each task is determined by the called runnable entities as specified in the table below. All tasks contain just one runnable except of T7, which calls at first R7,1 and after that R7,2.

The table below gives a detailed specification of the tasks and their parameters. The tasks are scheduled according fixed-priority, preemptive scheduling and if not indicated otherwise, all events are active in order to get a detailed insight into the system's behavior.

| Task |

Priority |

Preemption |

Multiple Task Activation Limit |

Activation |

Execution Time |

| T1 |

7 |

FULL |

1 |

Periodic |

R1 |

Uniform |

| Offset = 0 |

Min = 9.95 |

| Recurrence = 80 |

Max = 10 |

| T2 |

6 |

FULL |

1 |

Periodic |

R2 |

Uniform |

| Offset = 0 |

Min = 29.95 |

| Recurrence = 120 |

Max = 30 |

| T3 |

5 |

FULL |

1 |

Periodic |

R3 |

Uniform |

| Offset = 0 |

Min = 19.95 |

| Recurrence = 160 |

Max = 20 |

| T4 |

4 |

FULL |

1 |

Periodic |

R4 |

Uniform |

| Offset = 0 |

Min = 14.95 |

| Recurrence = 180 |

Max = 15 |

| T5 |

3 |

FULL |

1 |

Periodic |

R5 |

Uniform |

| Offset = 0 |

Min = 29.95 |

| Recurrence = 200 |

Max = 30 |

| T6 |

2 |

FULL |

1 |

Periodic |

R6 |

Uniform |

| Offset = 0 |

Min = 39.95 |

| Recurrence = 300 |

Max = 40 |

| T7 |

1 |

FULL |

1 |

|

R7,1 |

Uniform |

| Min = 59.95 |

| Periodic |

Max = 60 |

| Offset = 0 |

R7,2 |

Uniform |

| Recurrence = 1000 |

Min = 19.95 |

|

Max = 20 |

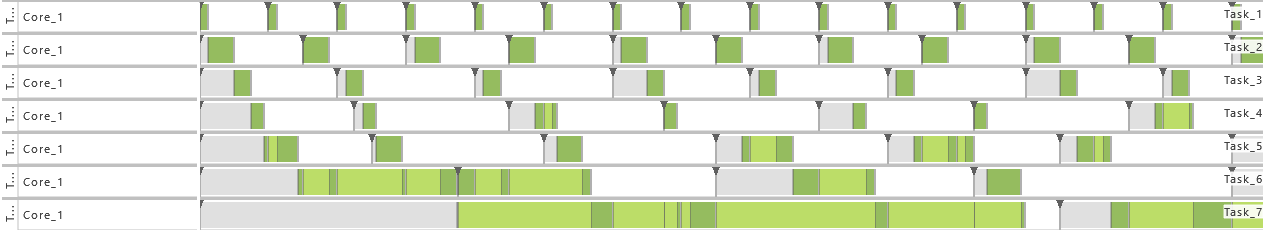

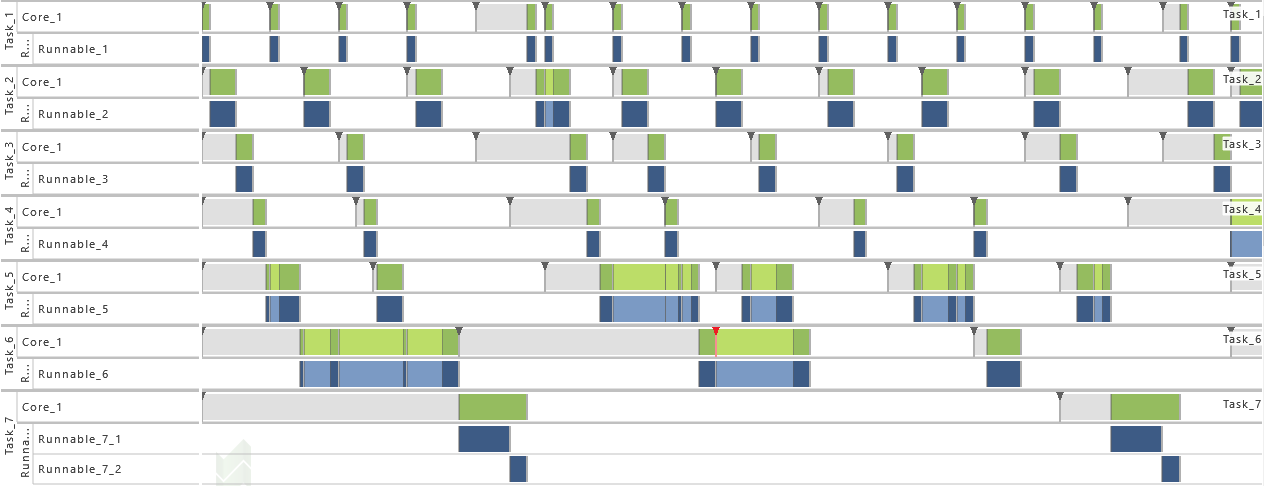

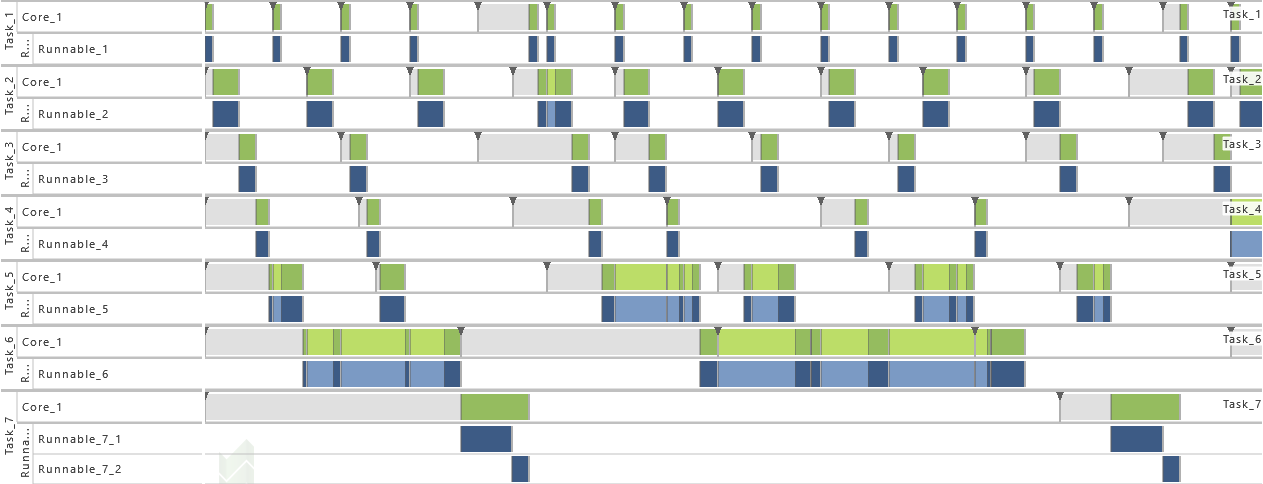

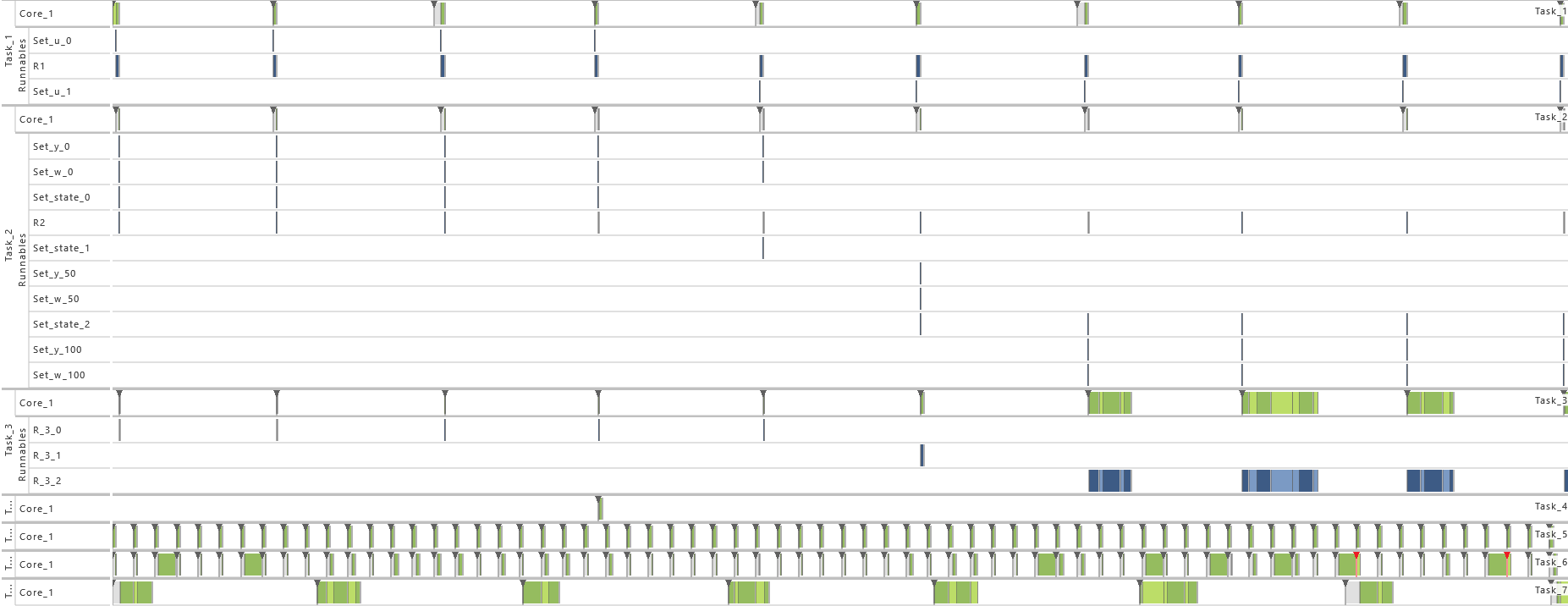

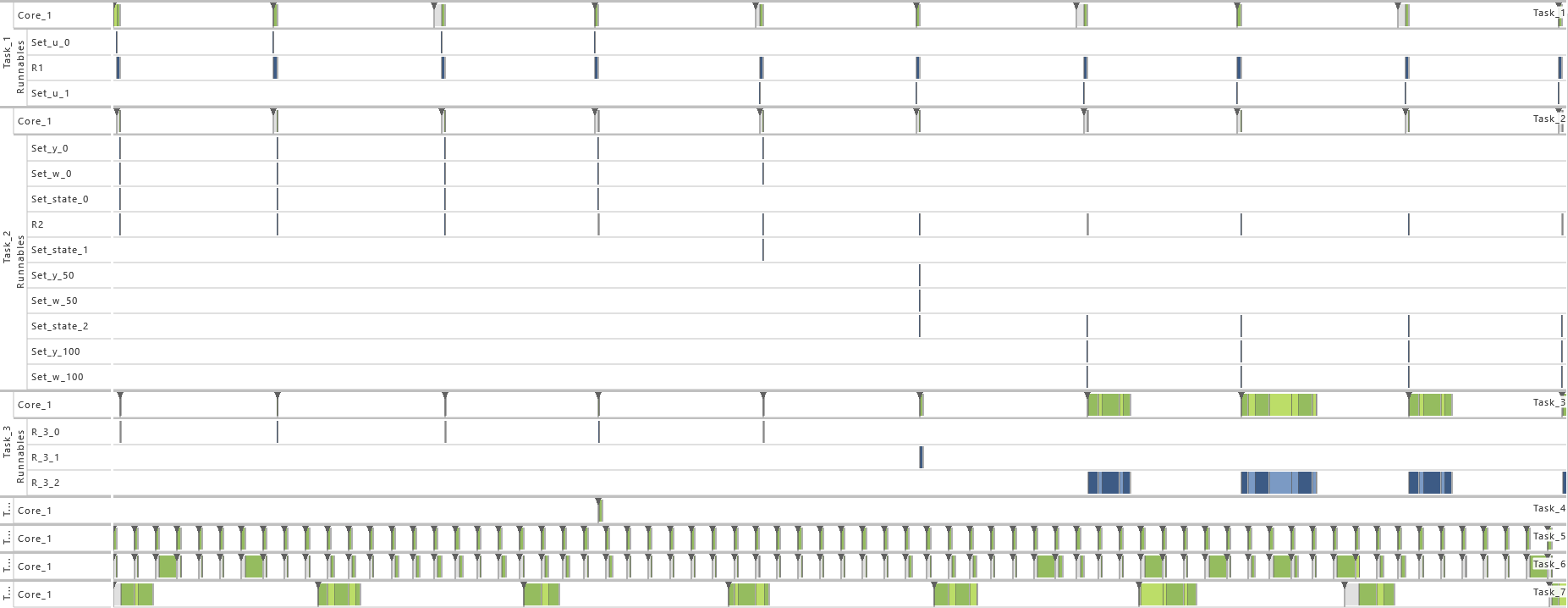

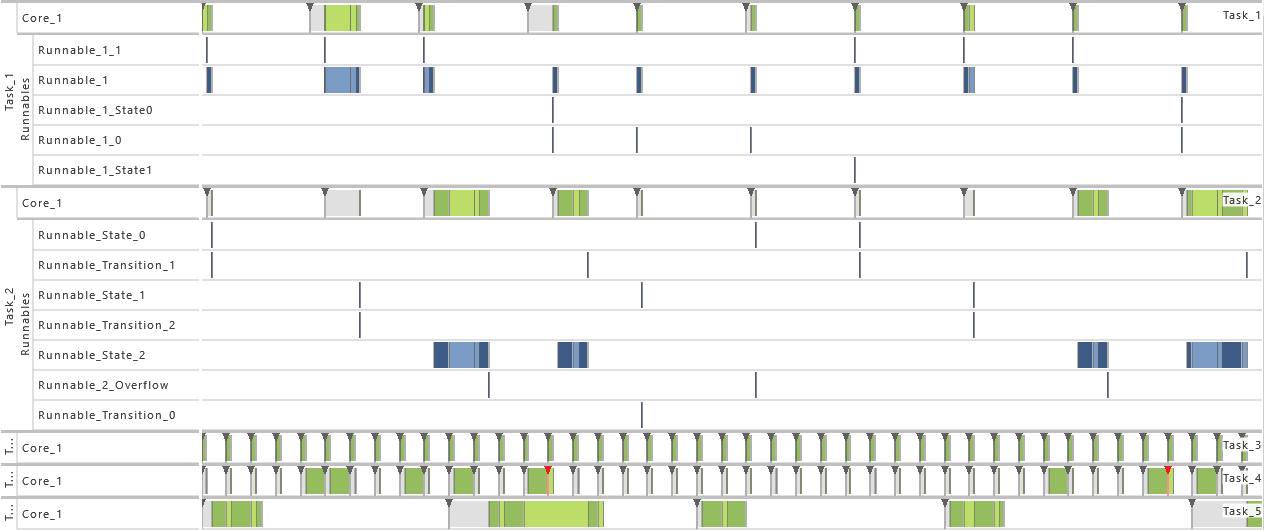

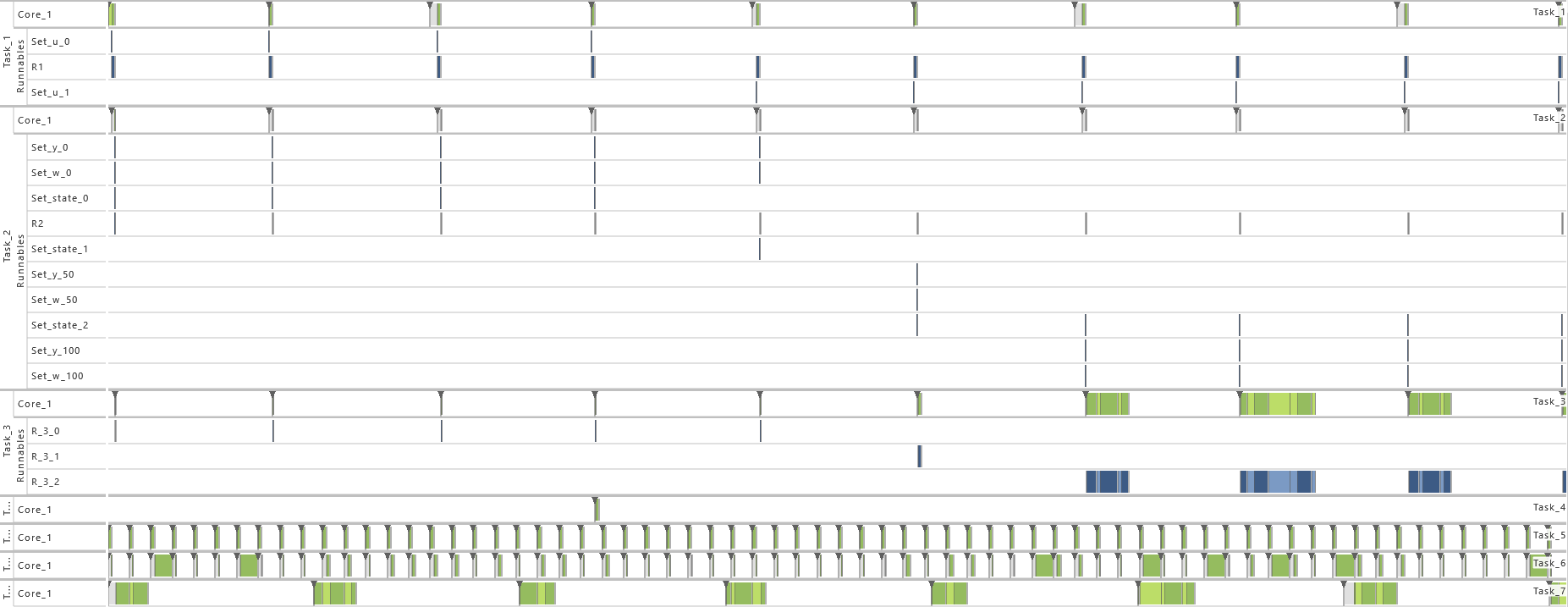

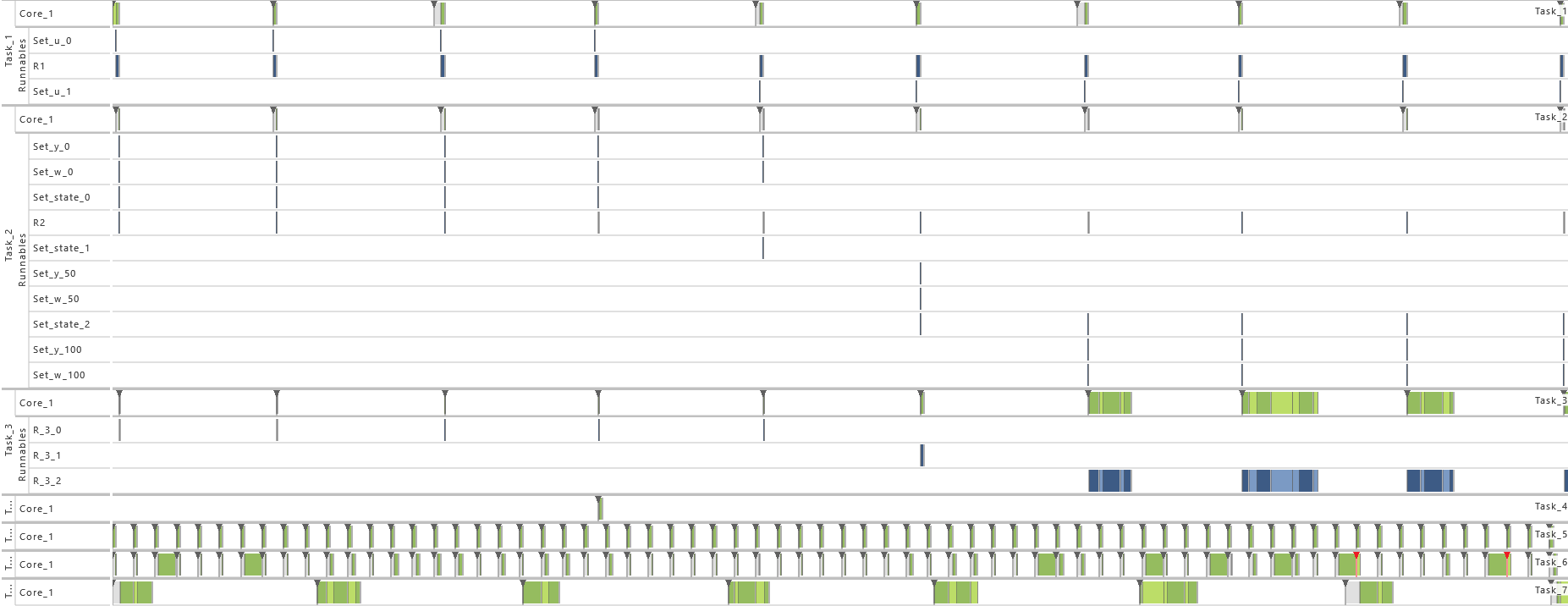

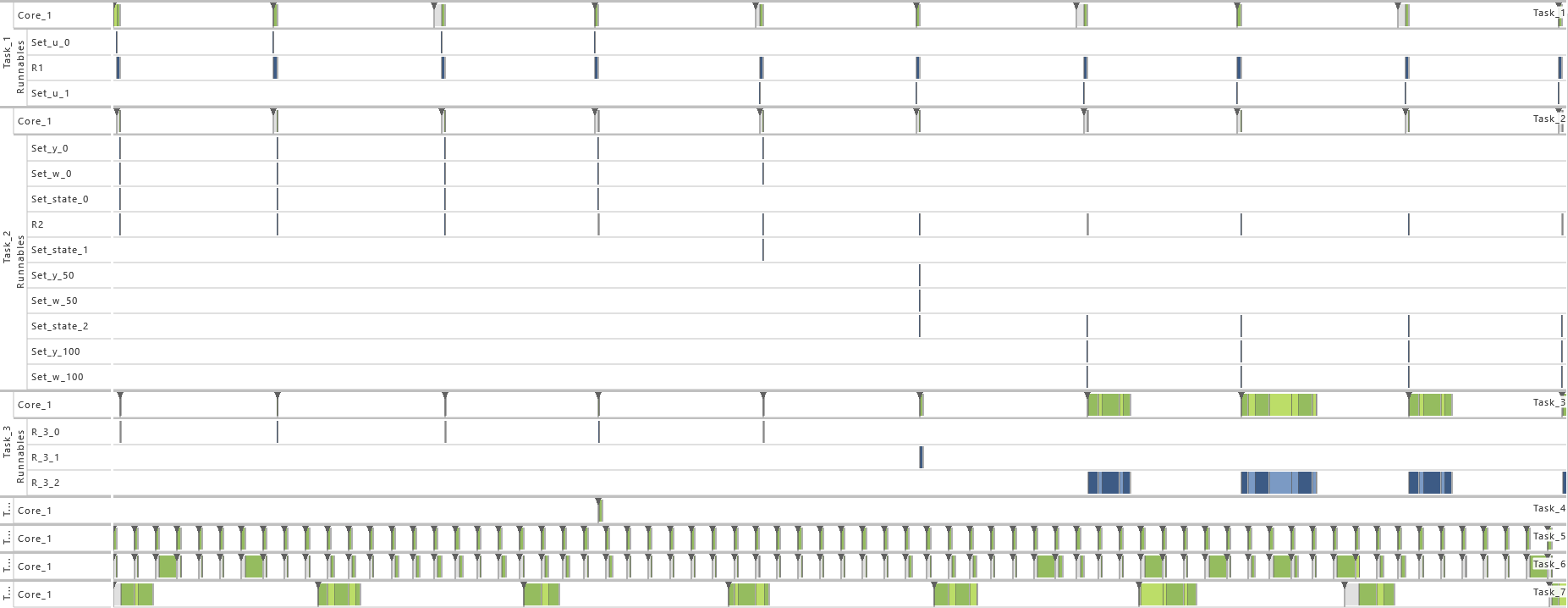

In order to show the impact of changes to the model, the following consecutive variations are made to the model:

-

1) Initial Task Set

- For this variation, the Tasks T4, T5, T6, and T7 of the table above are active.

-

2) Increase of Task Set Size I

- For this variation, the Tasks T3, T4, T5, T6, and T7 are active. That way the utilization of the system is increased.

-

3) Increase of Task Set Size II

- For this variation, the Tasks T1, T3, T4, T5, T6, and T7 are active. That way the utilization of the system is increased.

-

4) Increase of Task Set Size III

- As from this variation on, all tasks (T1 - T7) are active. That way the utilization of the system is increased.

-

5) Accuracy in Logging

- For this variation, just task events are active. That way, only a limited insight into the system's runtime behavior is available.

-

6) Schedule

- As from this variation on, T7 is set to non-preemptive. That way, the timing behavior is changed, which results in extinct activations (see red mark in the figure below).

-

7) Activation

- As from this variation on, the maximum number of queued activation requests for all tasks is set to 2. That way, the problem with extinct activations resulting from the previous variation is solved.

-

8) Schedule Point

- For this variation, a schedule point is added to T7 between the calls of R7,1 and R7,2. That way, the timing behavior is changed in particular.

-

9) Scheduling Algorithm

- For this variation, the scheduling algorithm is set to Earliest Deadline First. That way, the timing behavior is changed completely.

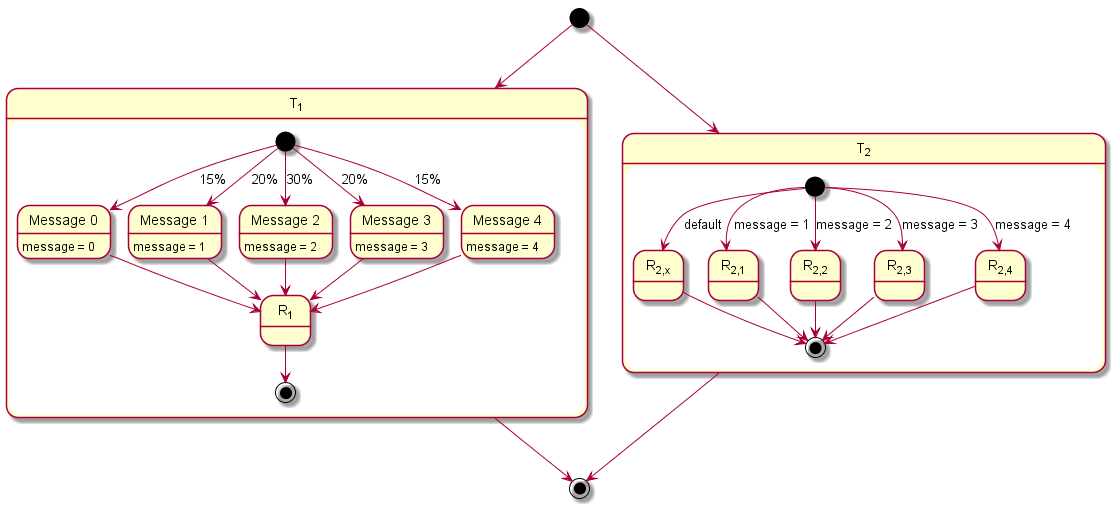

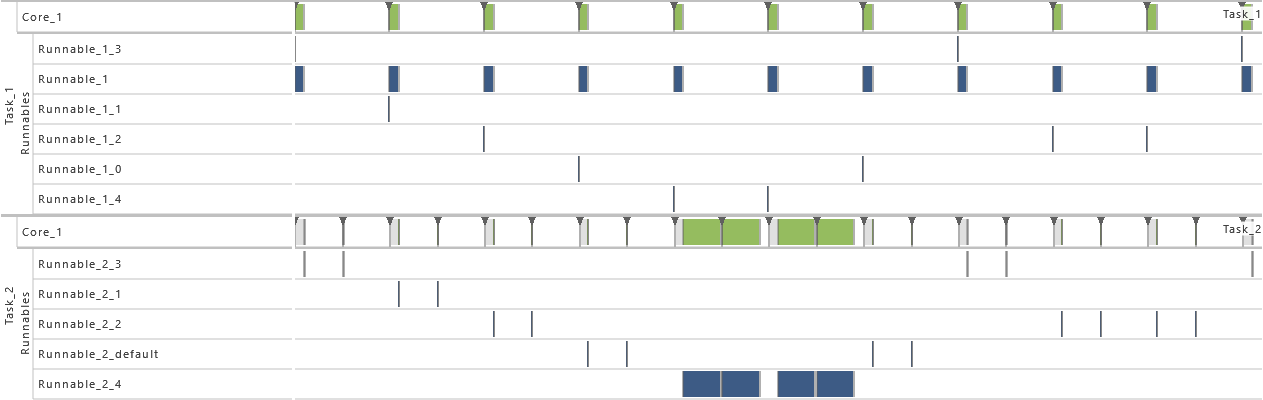

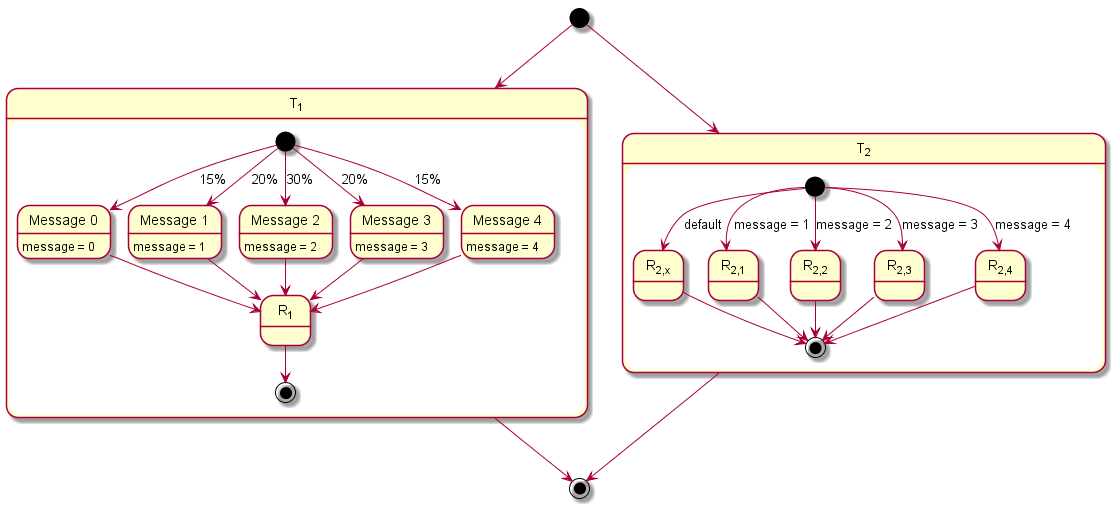

2.3.4 Modeling Example "Client-Server without Reply"

This system architecture pattern extends the modeling example "Purely Periodic without Communication" by adding an one-way communication between tasks. It consists of two tasks T1, and T2. Task T1 sends a message to Task T2 before runnable R1 is called. In 20% of the cases Message 1, in 30% of the cases Message 2, in 20% of the cases Message 3, in 15% of the cases Message 4, and in 15% of the cases any other message than the previously mentioned ones is sent. Task T2 reacts on the contents of the message by calling different runnables. In case of Message 1 runnable R2,1, in case of Message 2 runnable R2,2, in case of Message 3 runnable R2,3, in case of Message 4 runnable R2,4, and in case of any other message than the previous mentioned ones runnable R2,x is called as default.

The table below gives a detailed specification of the tasks and their parameters. The tasks are scheduled according fixed-priority, preemptive scheduling and if not indicated otherwise, all events are active in order to get a detailed insight into the system's behavior.

| Task |

Priority |

Preemption |

Multiple Task Activation Limit |

Activation |

Execution Time |

| T1 |

2 |

FULL |

1 |

Periodic |

R1 |

Uniform |

| Offset = 0 |

Min = 9.9 * 106 |

| Recurrence = 100 * 106 |

Max = 10 * 106 |

| T2 |

1 |

FULL |

1 |

|

R2,x |

Uniform |

| Min = 99 |

| Max = 100 |

| R2,1 |

Uniform |

| Min = 990 |

| Max = 1 * 103 |

| Periodic |

R2,2 |

Uniform |

| Offset = 15 * 106 |

Min = 49.5 * 103 |

| Recurrence = 60 * 106 |

Max = 50 * 103 |

|

R2,3 |

Uniform |

| Min = 990 * 103 |

| Max = 1 * 106 |

| R2,4 |

Uniform |

| Min = 39.6 * 106 |

| Max = 40 * 106 |

In order to show the impact of changes to the model, the following consecutive variations are made to the model:

-

1) Initial Task Set

- As defined by the table above.

-

2) Exclusive Area

- For this variation, all data accesses are protected by an exclusive area. Therefore, the data accesses in T1 as well as all runnables in T2 (R2,x, R2,1, R2,2, R2,3, and R2,4) are protected during their complete time of execution via a mutex and priority ceiling protocol. That way, blocking situations appear.

-

3) Inter-process Activation

- As from this variation on, task T2 gets activated by an inter-process activation from task T1 instead of being activated periodically. The interprocess activation is performed right after the message

message is written in T2 and consequently before the runnable R1 is called. That way, a direct connection between T1 and T2 is established.

-

4) Priority Ordering

- As from this variation on, the priority relation between task T1 and T2 is reversed. As a consequence, the priority of task T1 is set to 1 and the priority of task T2 is set to 2. That way, a switch from asynchronous to synchronous communication is considered.

-

5) Event Frequency Increase

- As from this variation on, the periodicity of T1 is shortened. For this, the value for the period of task T1 is cut in half to 50 * 106 time units. That way, the utilization of the system is increased.

-

6) Execution Time Fluctuation

- As from this variation on, the execution time distribution is widened for both tasks. Therefore, the maximum of every uniform distribution is increased by 1 percent so that they vary now by 2 percent. That way, the utilization of the system is increased, which results in extinct activations.

-

7) Activation

- As from this variation on, the maximum number of queued activation requests for both tasks is set to 2. That way, the problem with extinct activations resulting from the previous variation is solved.

-

8) Accuracy in Logging of Data State I

- For this variation, the data accesses in task T1 and task T2 are omitted. Instead, the runnable entities R2,x, R2,1, R2,2, R2,3, and R2,4, each representing the receipt of a specific message, are executed equally random, meaning each with a probability of 20%. That way, only a limited insight into the system's runtime behavior is available.

-

9) Accuracy in Logging of Data State II

- For this variation, just task events are active. That way, only a limited insight into the system's runtime behavior is available.

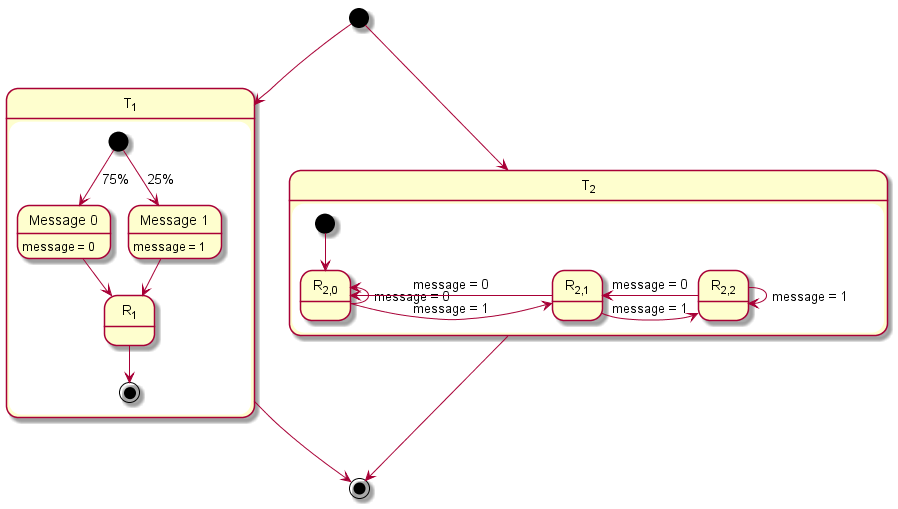

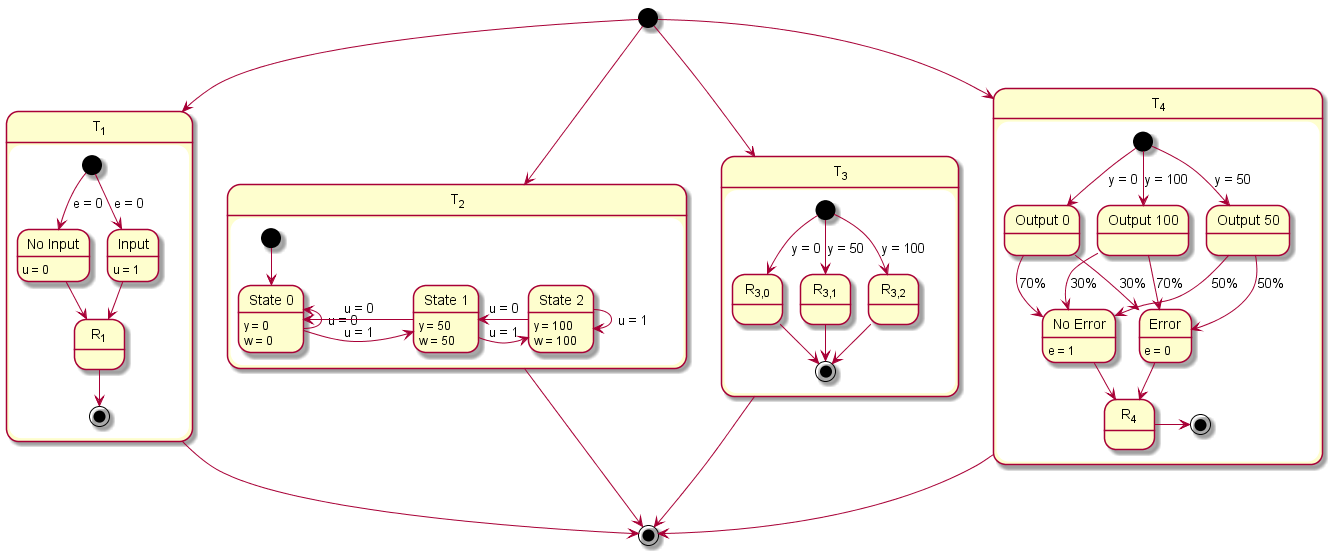

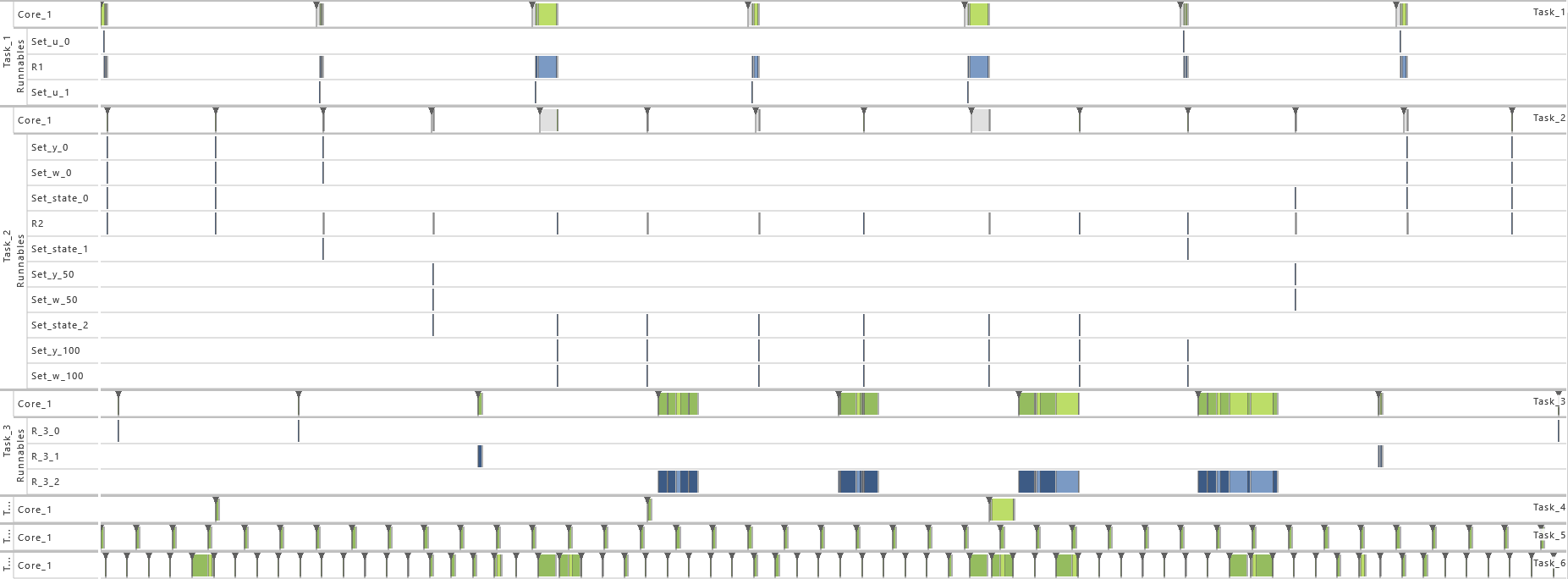

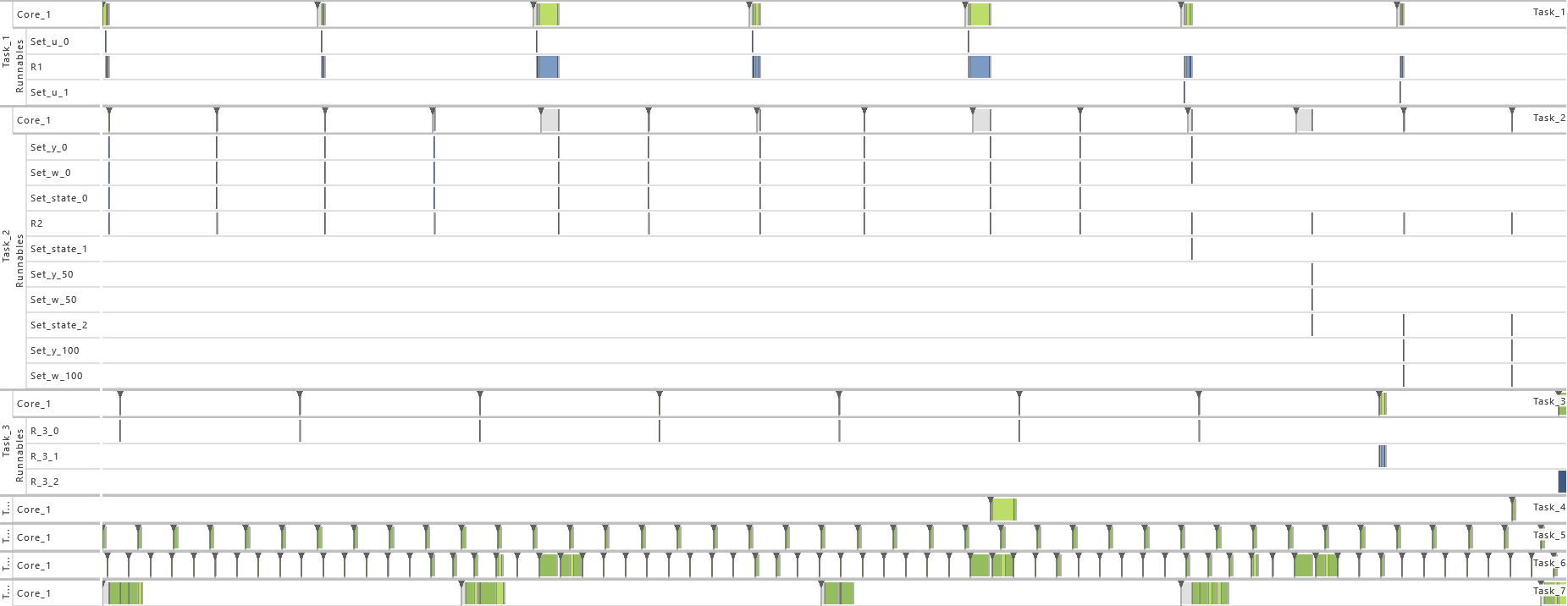

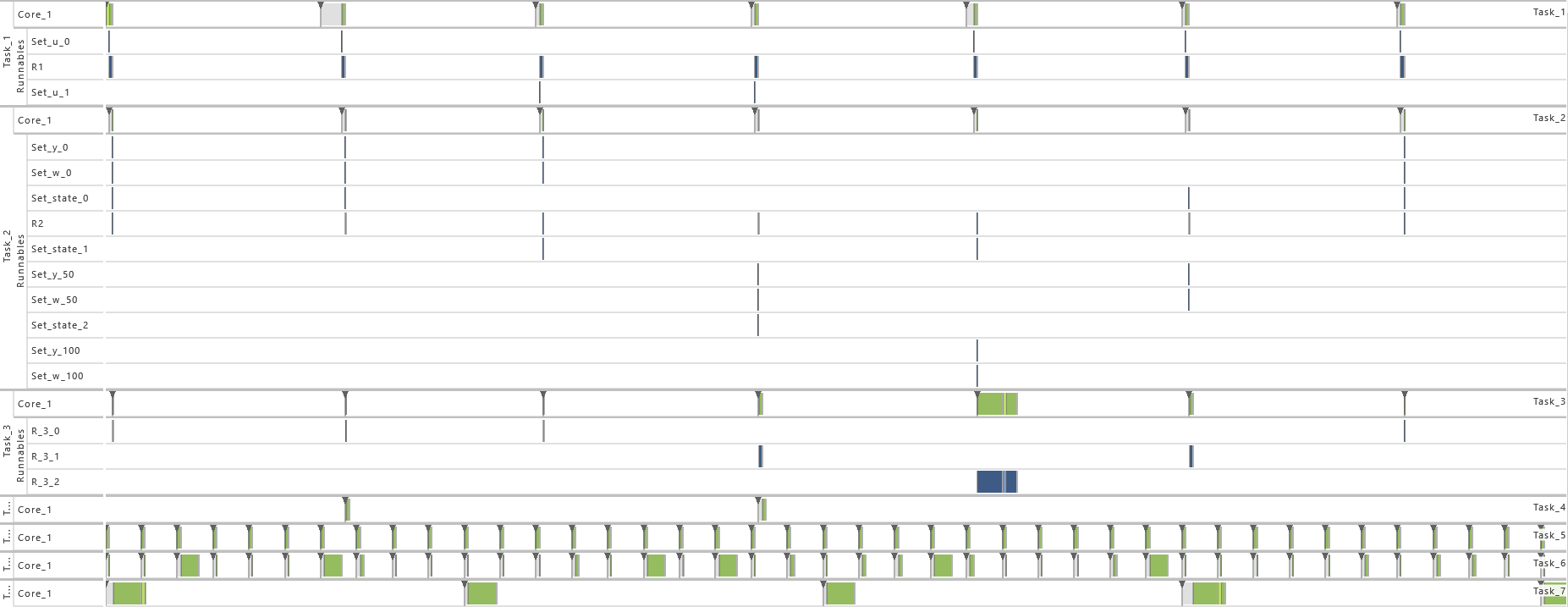

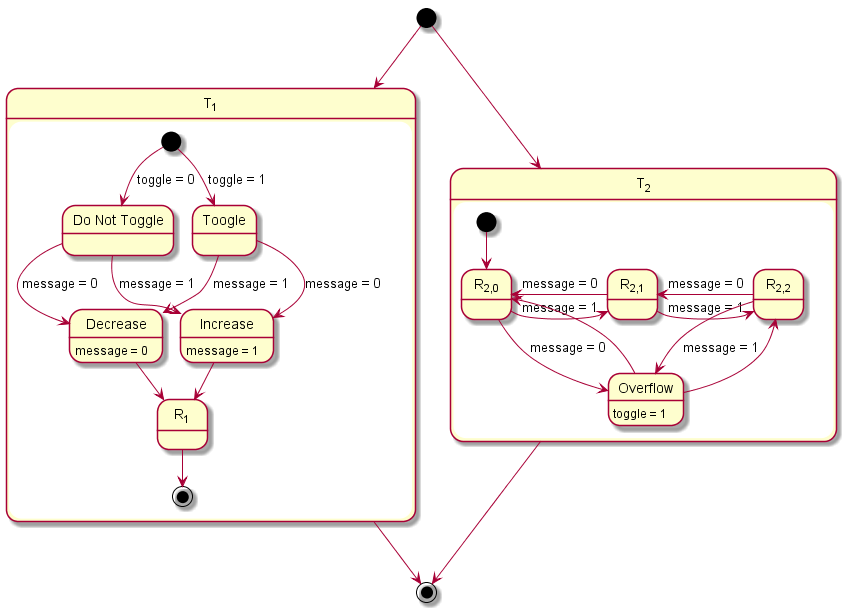

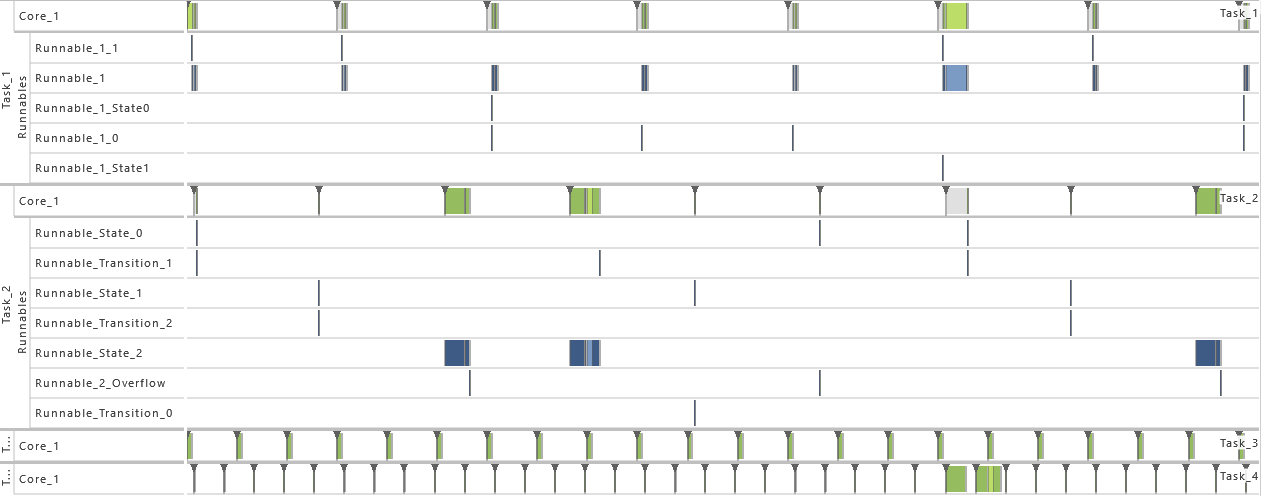

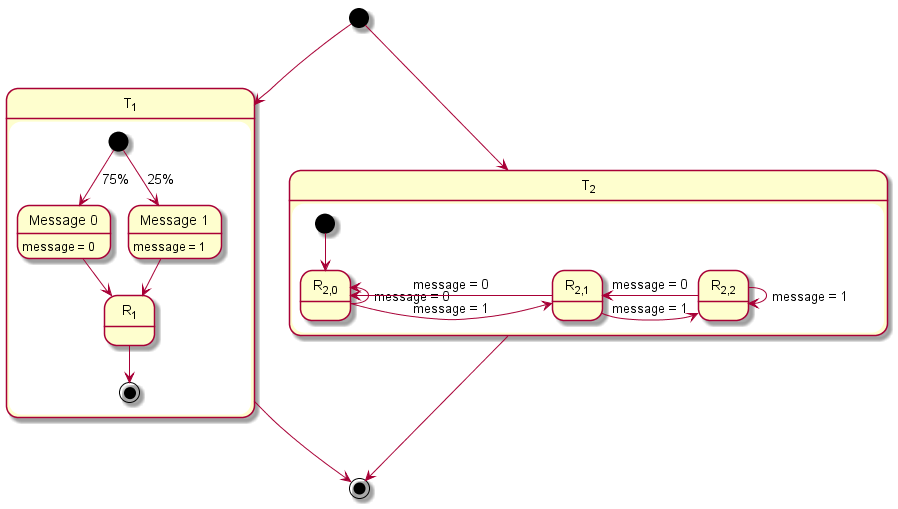

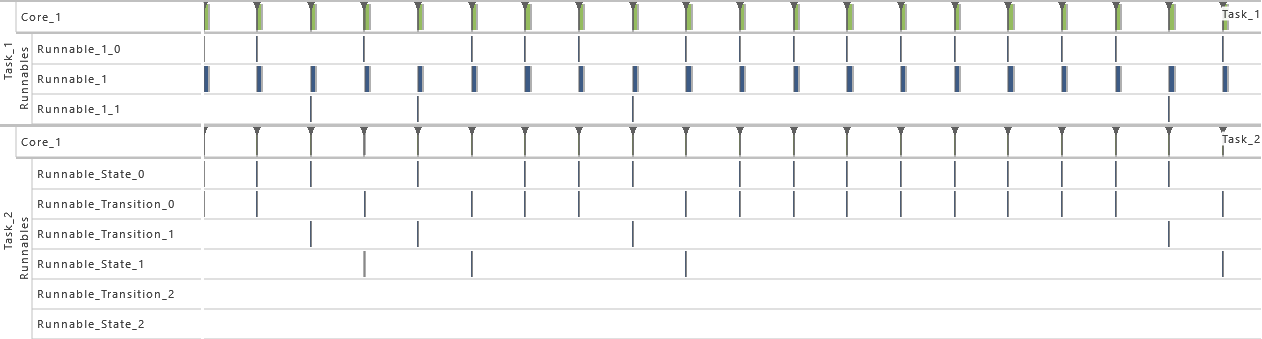

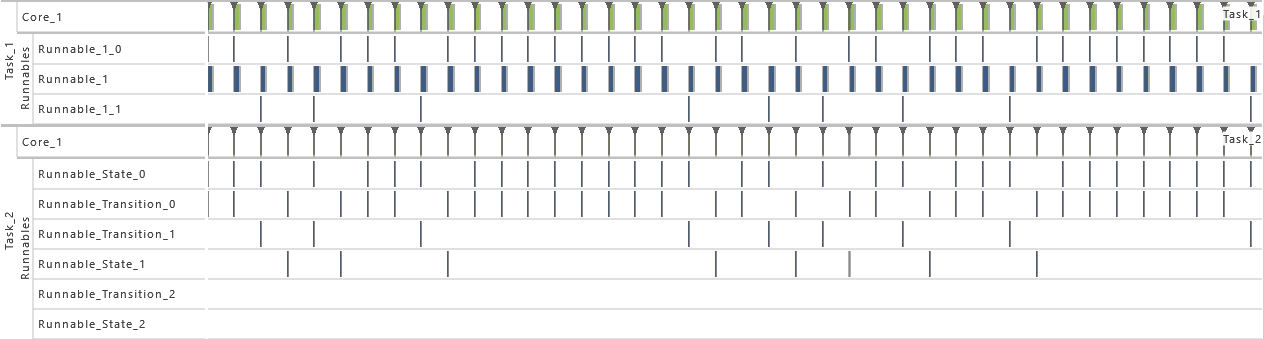

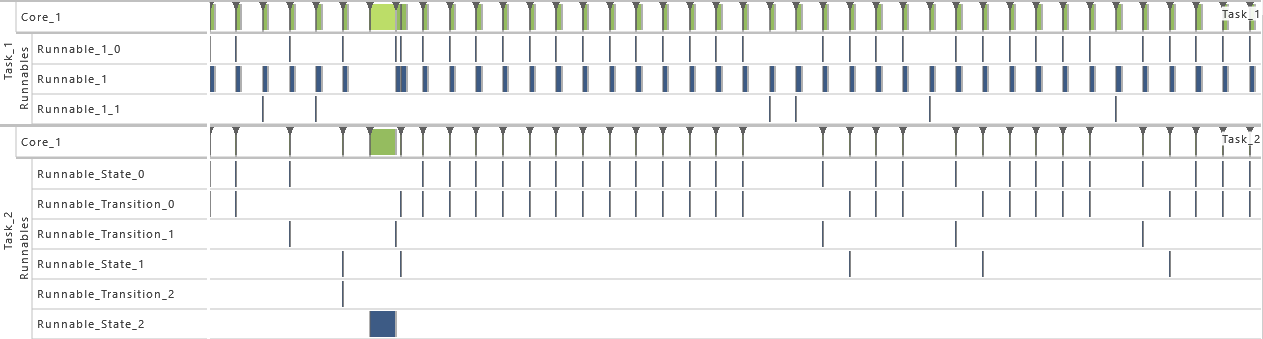

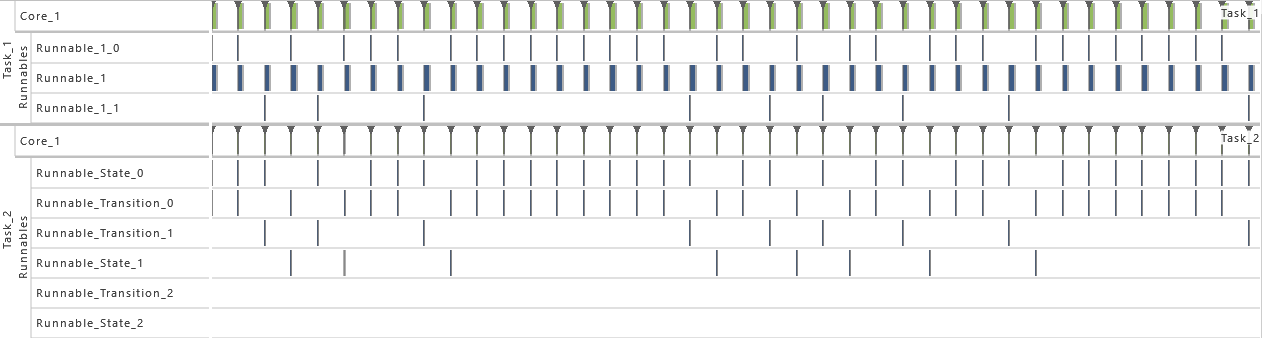

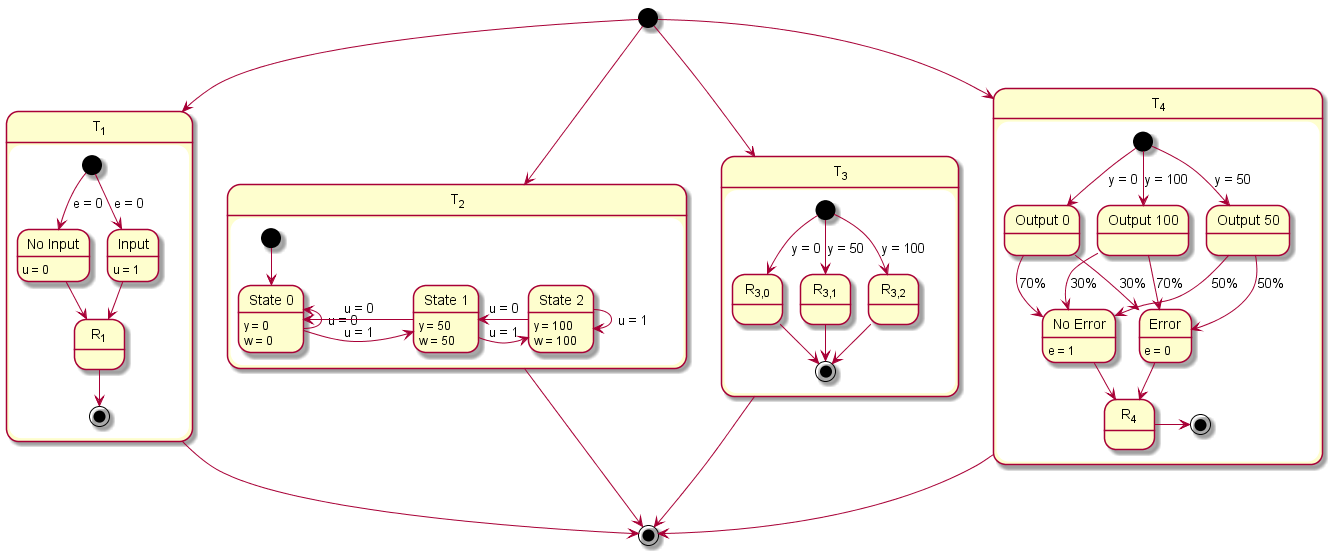

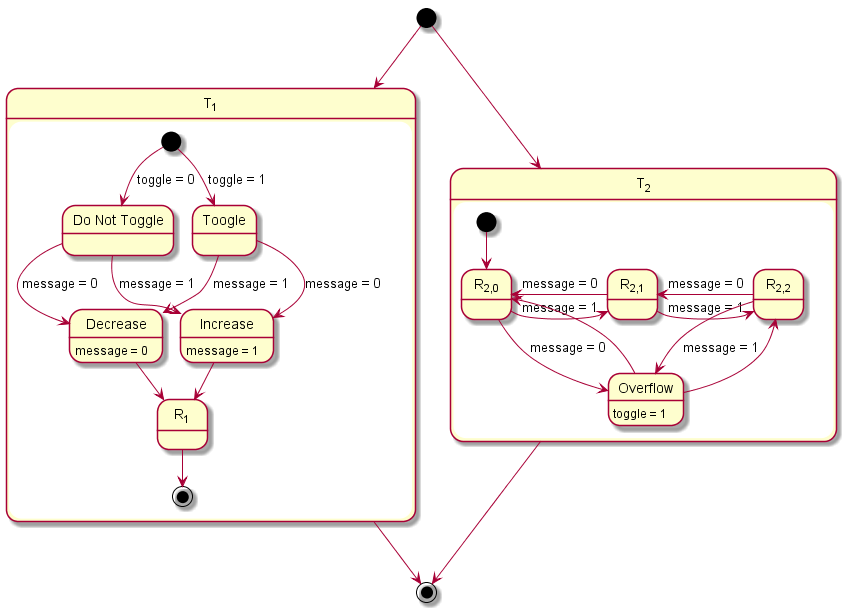

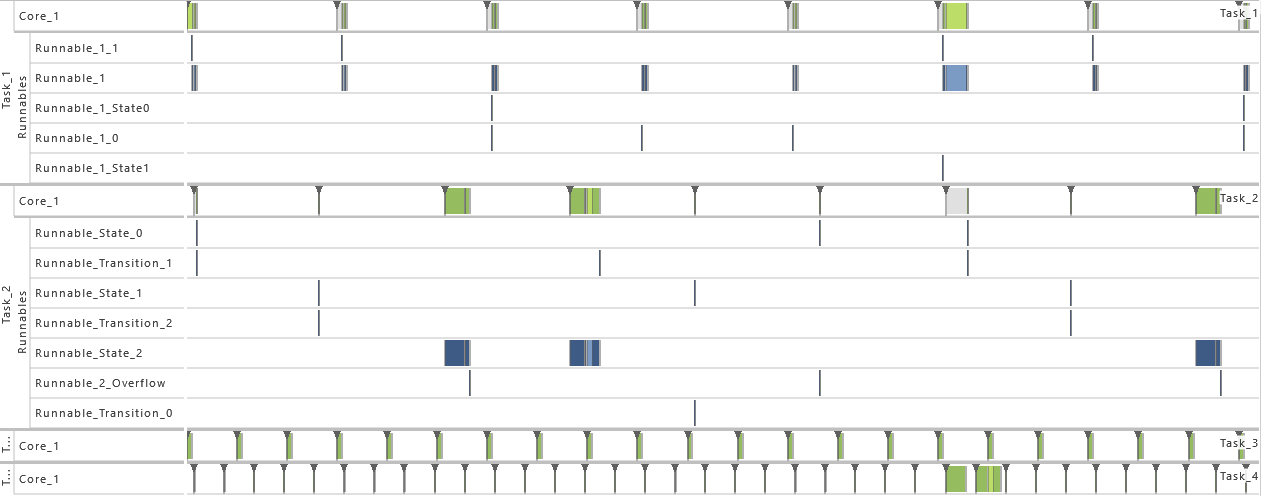

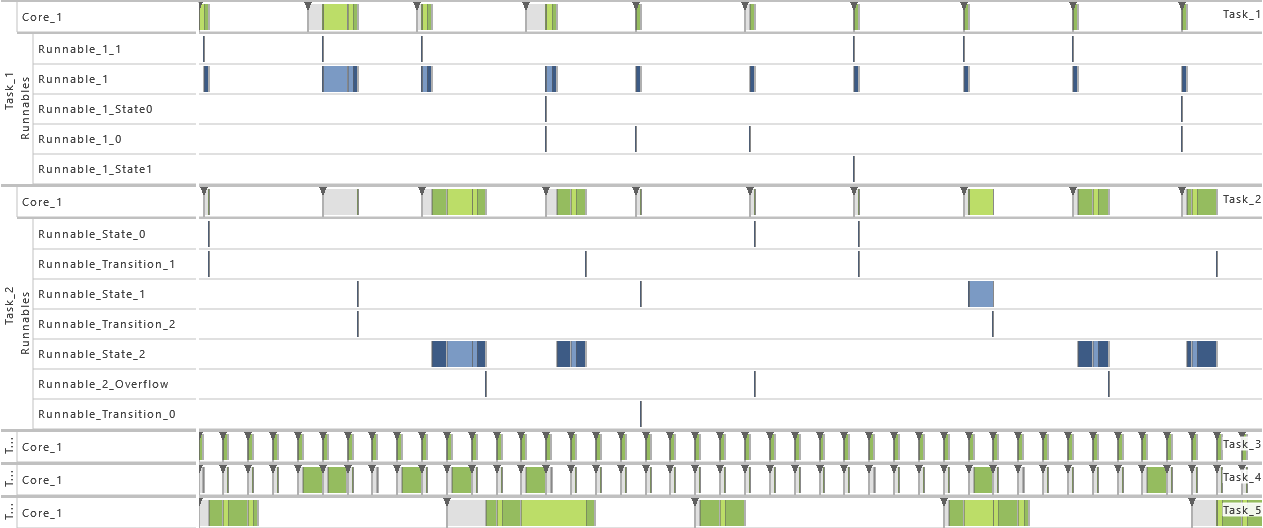

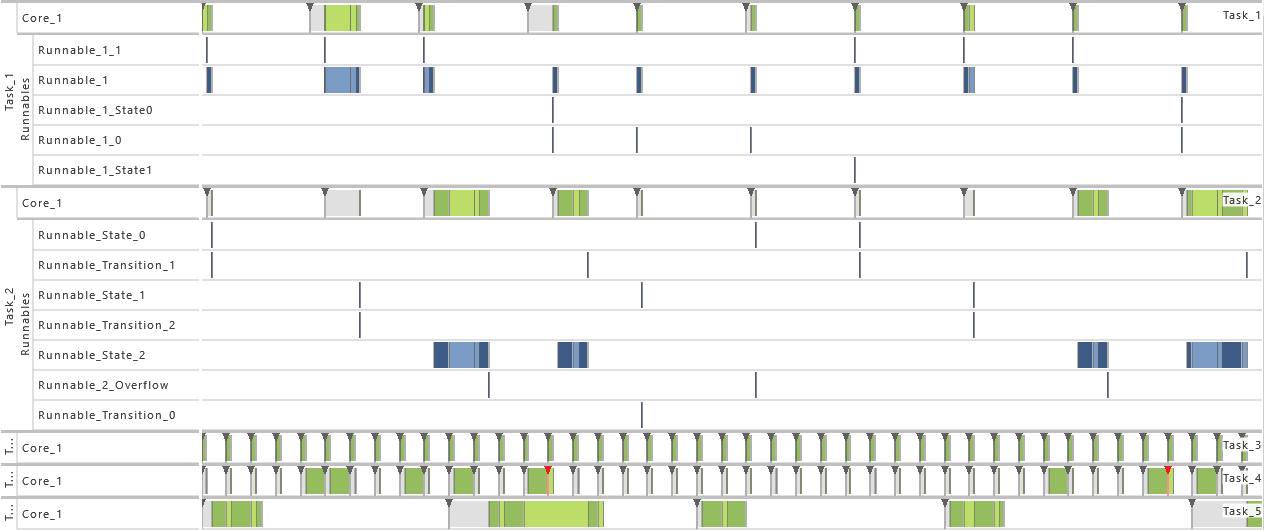

2.3.5 Modeling Example "State Machine"

In this system architecture pattern the modeling example "Client Server without Reply" is extended in such a way that now the task that receives messages (T2) not only varies its dynamic behavior and consequently also its execution time according the transmitted content but also depending on its internal state, meaning the prior transmitted contents. To achieve, this task T1 sends a message to task T2 with either 0 or 1 before runnable R1 is called. The value 0 is used in 75 % of the cases and 1 in the other cases as content of the message. Starting in state 0, T2 decreases or increases the state its currently in depending on the content of the message, 0 or 1 respectively. The runnable R

2,1, R

2,2, and R

2,3 represent then the three different states that the system can be in.

The table below gives a detailed specification of the tasks and their parameters. The tasks are scheduled according fixed-priority, preemptive scheduling and if not indicated otherwise, all events are active in order to get a detailed insight into the system's behavior.

| Task |

Priority |

Preemption |

Multiple Task Activation Limit |

Activation |

Execution Time |

| T1 |

2 |

FULL |

1 |

Periodic |

R1 |

Uniform |

| Offset = 0 |

Min = 9.9 * 106 |

| Recurrence = 100 * 106 |

Max = 10 * 106 |

| T2 |

1 |

FULL |

1 |

|

R

2,1

|

Uniform |

| Min = 99 |

| Max = 100 |

| Periodic |

R

2,2

|

Uniform |

| Offset = 15 * 106 |

Min = 99 * 103 |

| Recurrence = 60 * 106 |

Max = 100 * 103 |

|

R2,3 |

Uniform |

| Min = 49.5 * 106 |

| Max = 50 * 106 |

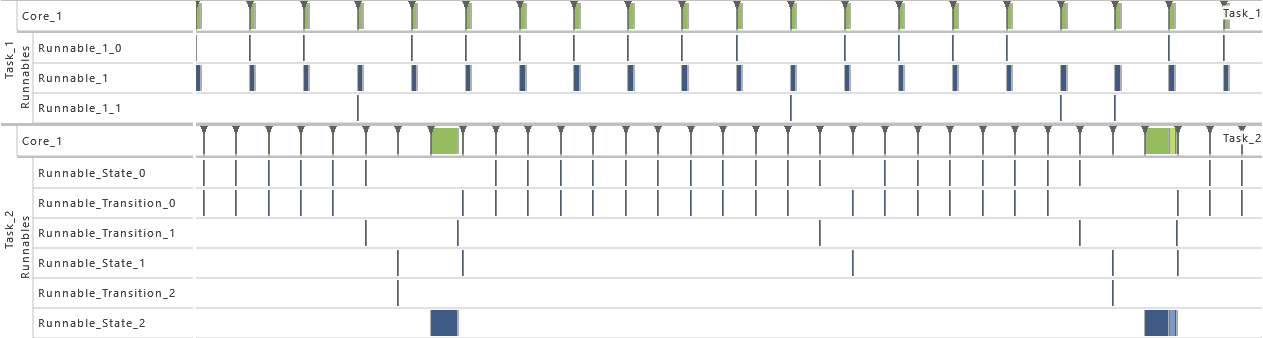

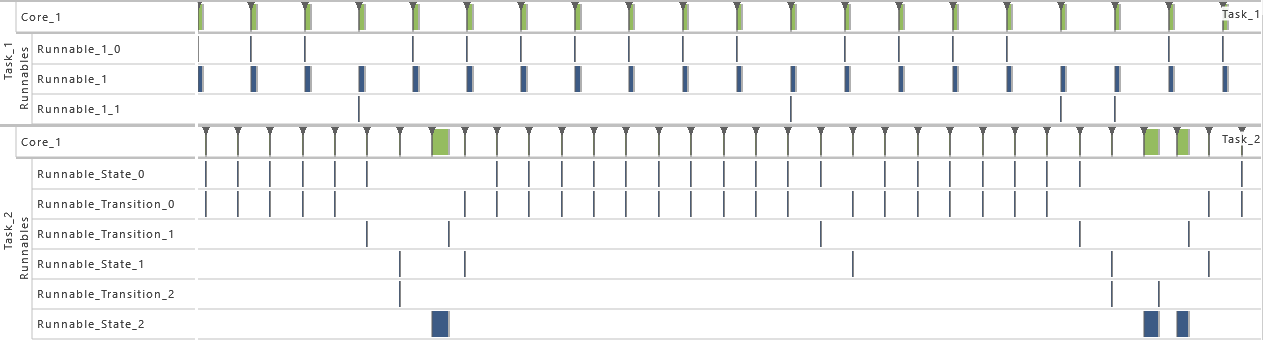

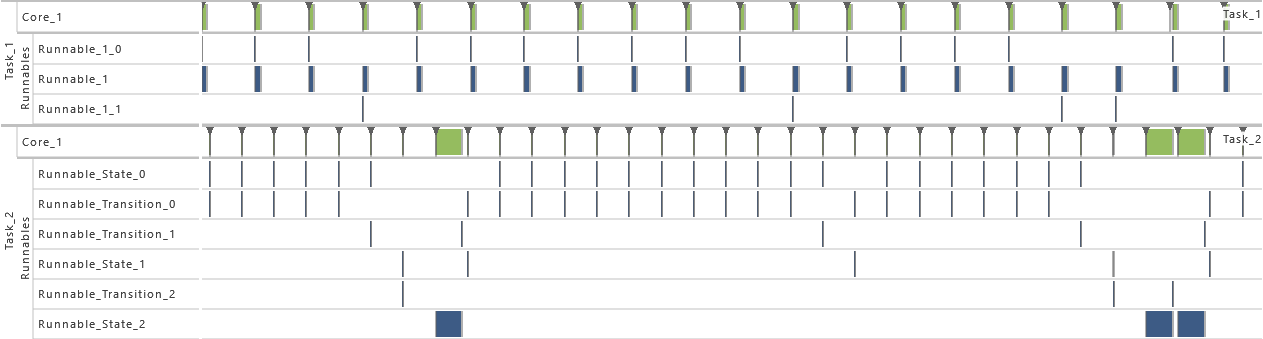

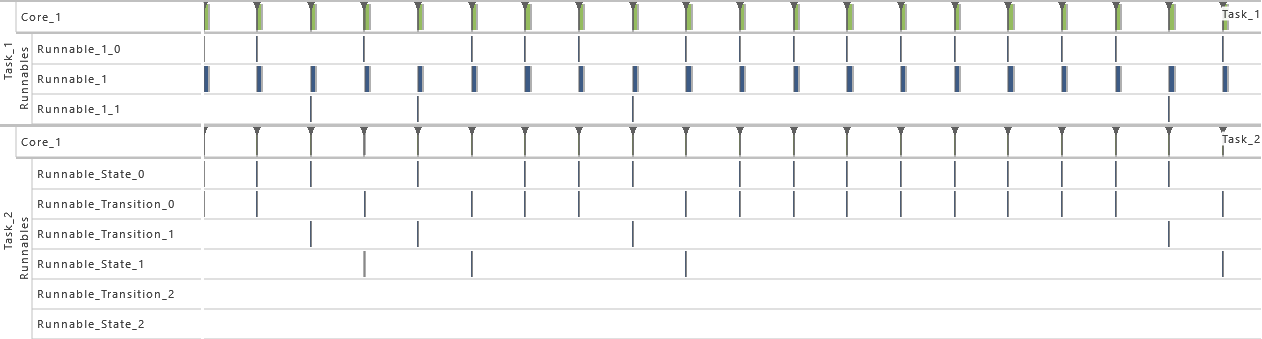

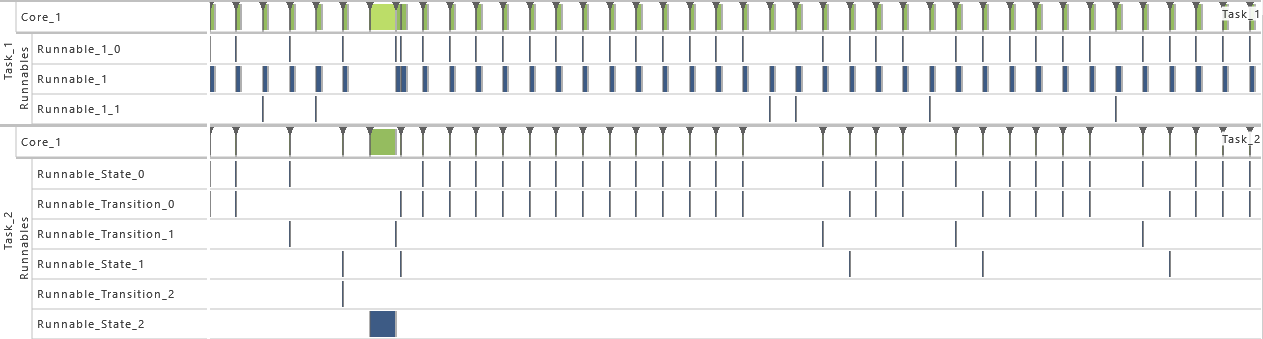

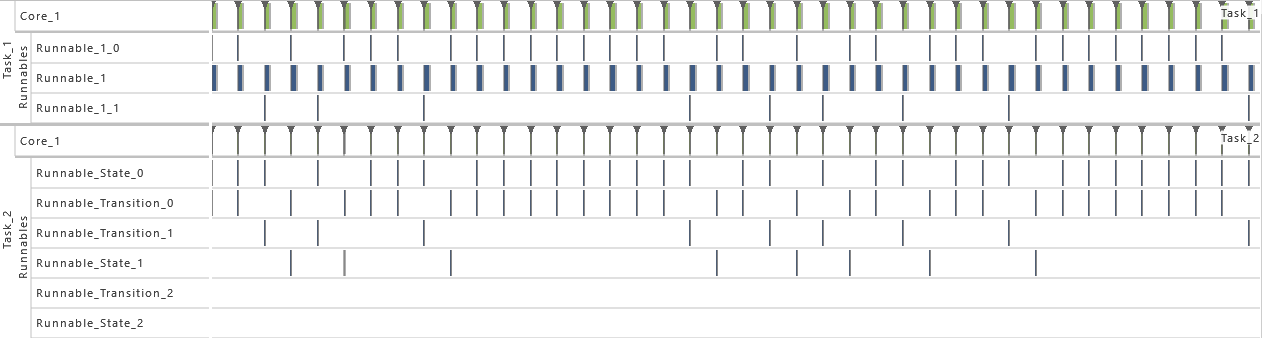

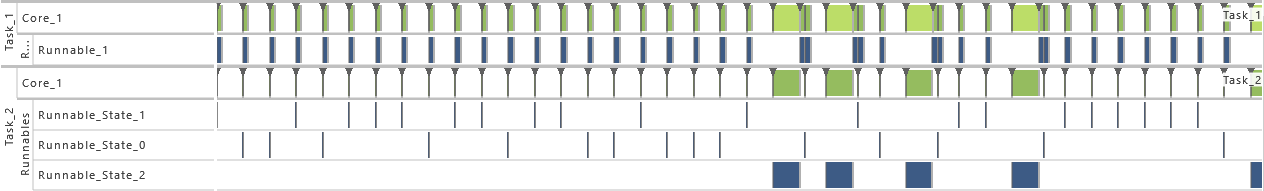

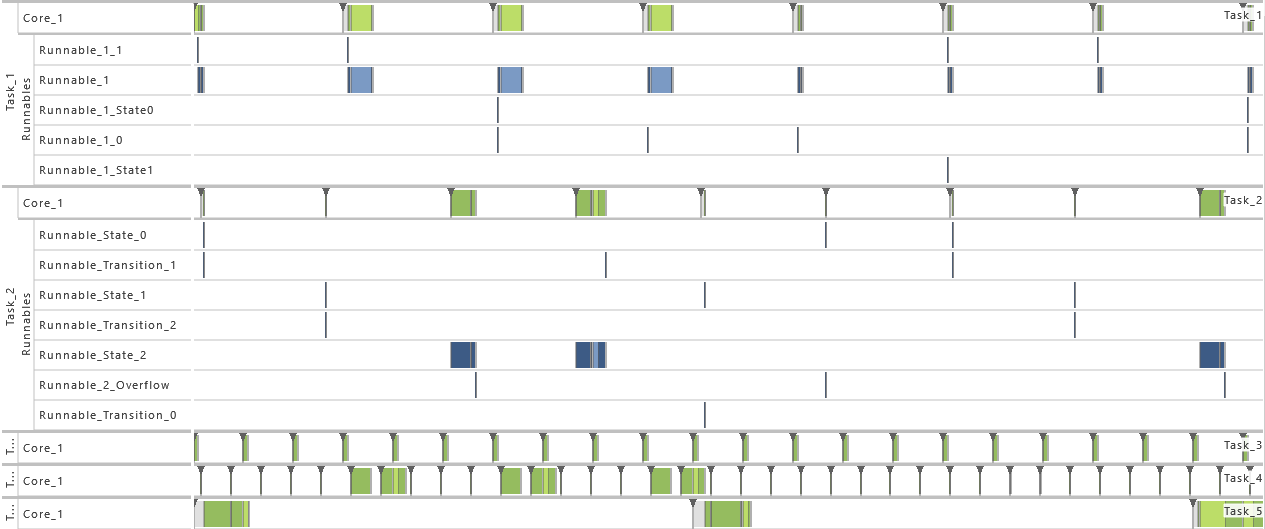

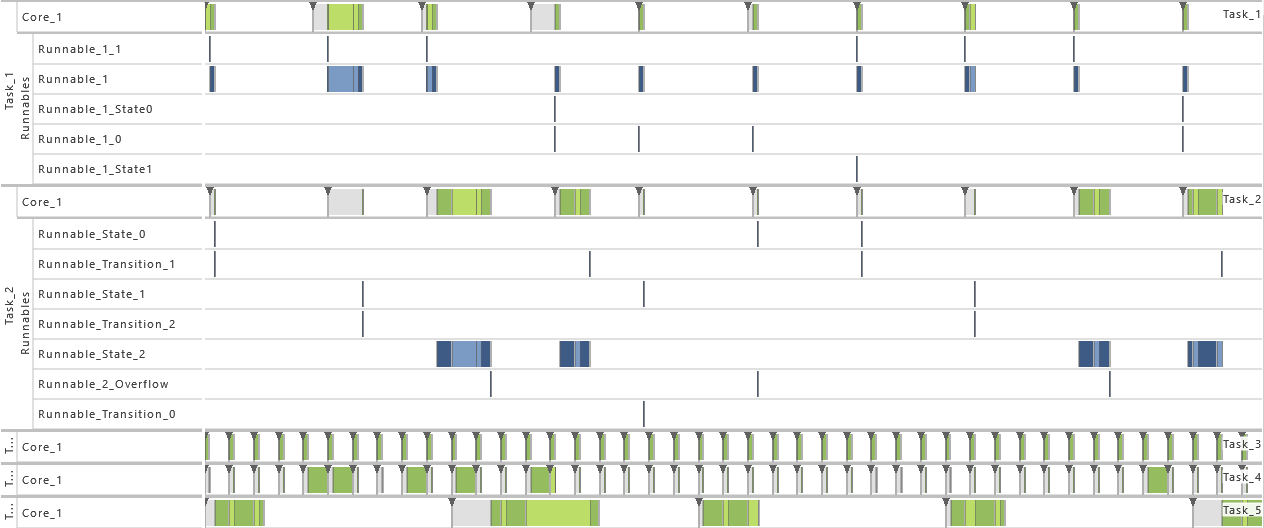

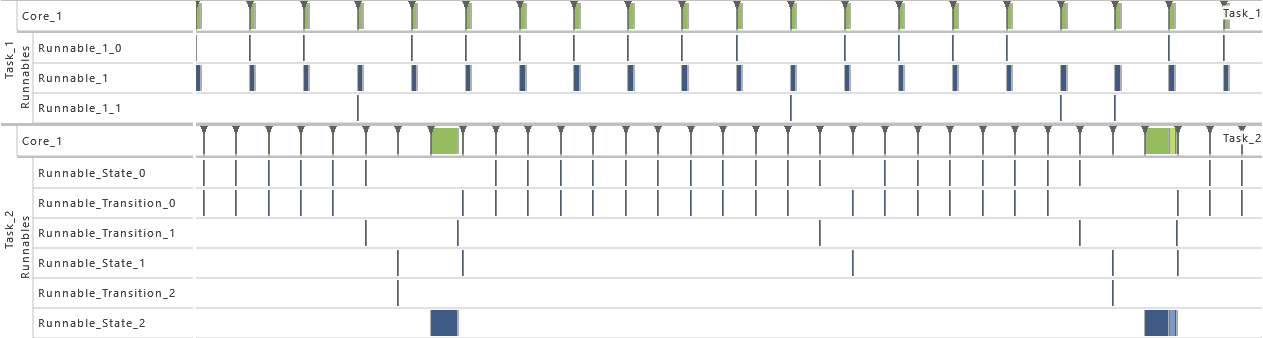

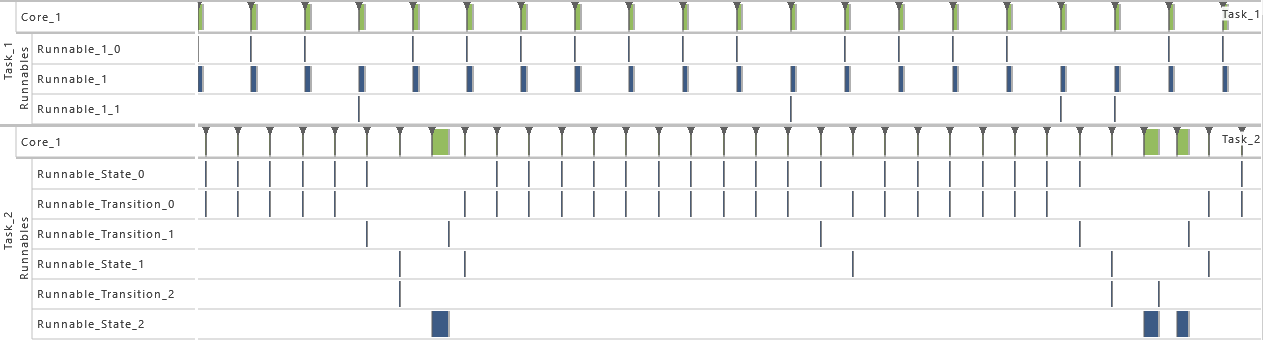

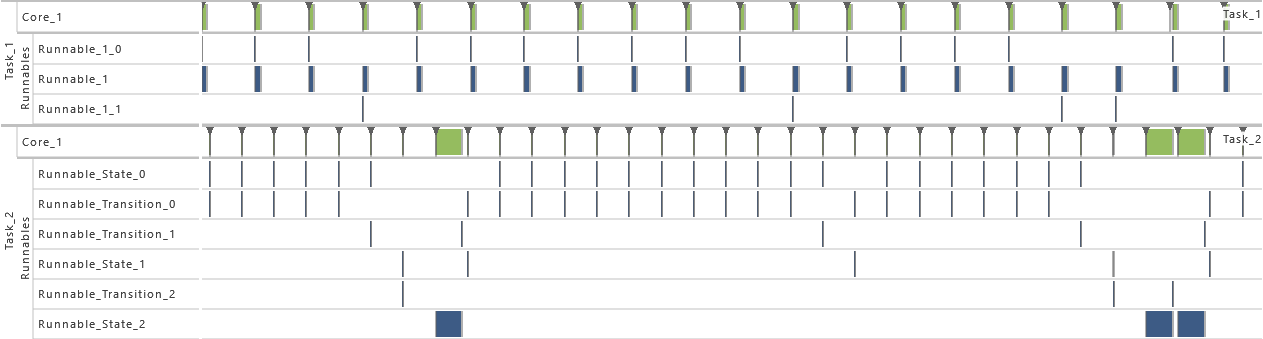

In order to show the impact of changes to the model, the following consecutive variations are made to the model:

-

1) Initial Task Set

- As defined by the table above.

-

2) Exclusive Area

- For this variation, all data accesses are protected by an exclusive area. Therefore, the data accesses in T1 as well as all runnables in T2 (R2,1, R2,2, and R2,3) are protected during their complete time of execution via a mutex and priority ceiling protocol. That way, blocking situations appear.

-

3) Priority Ordering

- As from this variation on, the priority relation between task T1 and T2 is reversed. As a consequence, the priority of task T1 is set to 1 and the priority of task T2 is set to 2. That way, the timing behavior is changed fully.

-

4) Inter-process Activation

- As from this variation on, task T2 gets activated by an inter-process activation from task T1 instead of being activated periodically. The interprocess activation is performed right after the message

message is written in T1 and consequently before the runnable R1 is called. That way, a direct connection between T1 and T2 is established.

-

5) Event Frequency Increase

- As from this variation on, the periodicity of T1 is shortened. For this, the value for the period of task T1 is halved to 50 * 106. That way, the utilization of the system is increased, which results in extinct activations.

-

6) Activation

- As from this variation on, the maximum number of queued activation requests for both tasks is set to 2. That way, the problem with extinct activations resulting from the previous variation is solved.

-

7) Execution Time Fluctuation

- As from this variation on, the execution time distribution is widened for both tasks. Therefore, the maximum of the uniform distribution is increased by 1 percent so that the uniform distribution varies now by 2 percent. That way, the utilization of the system is further increased.

-

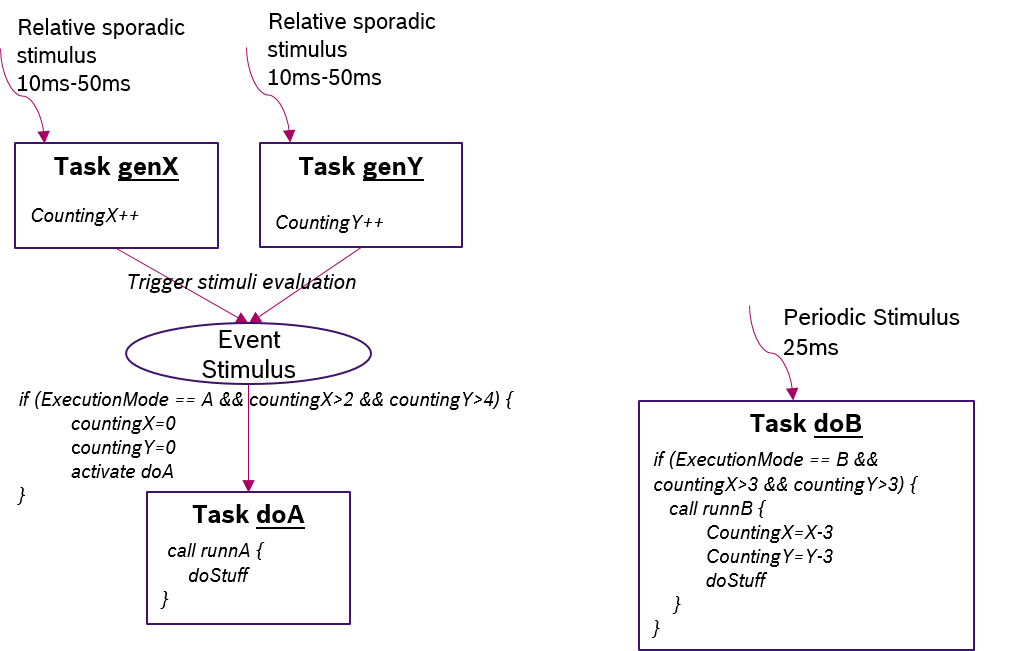

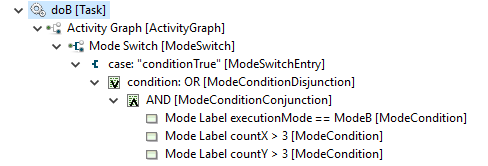

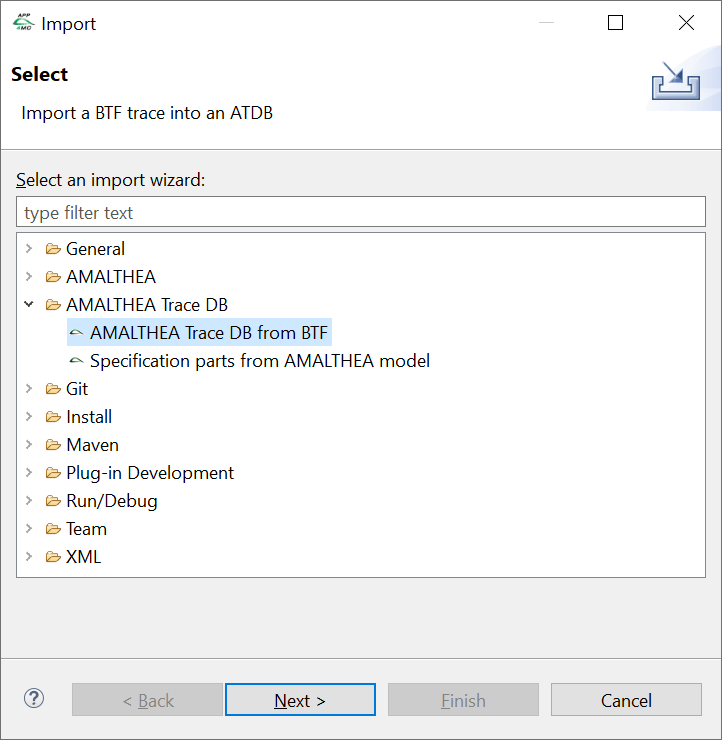

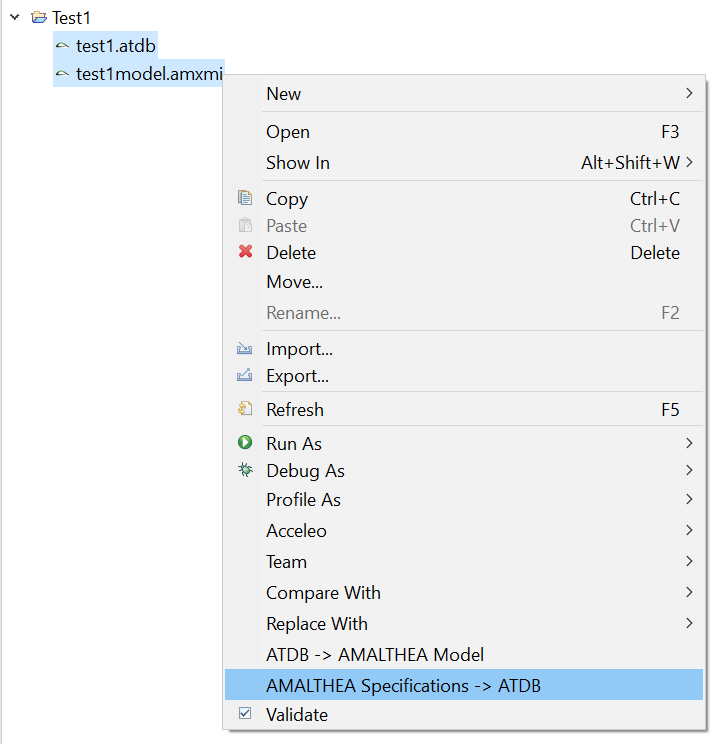

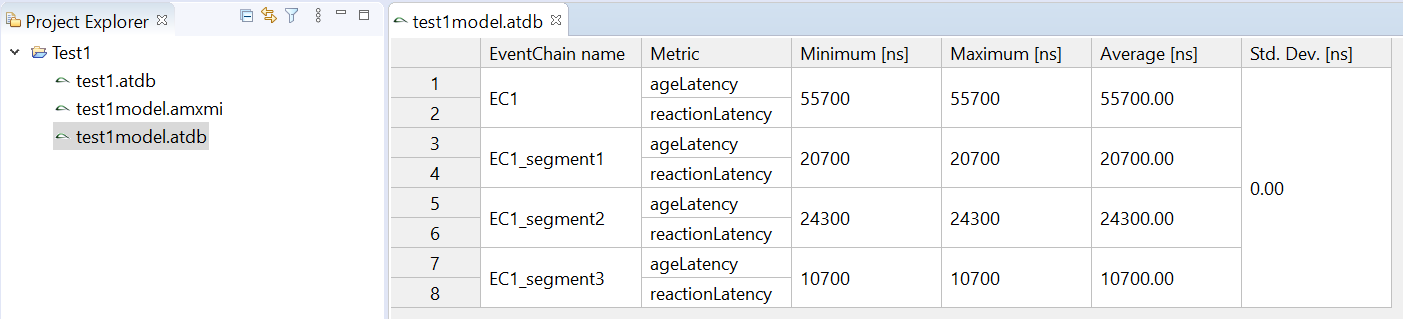

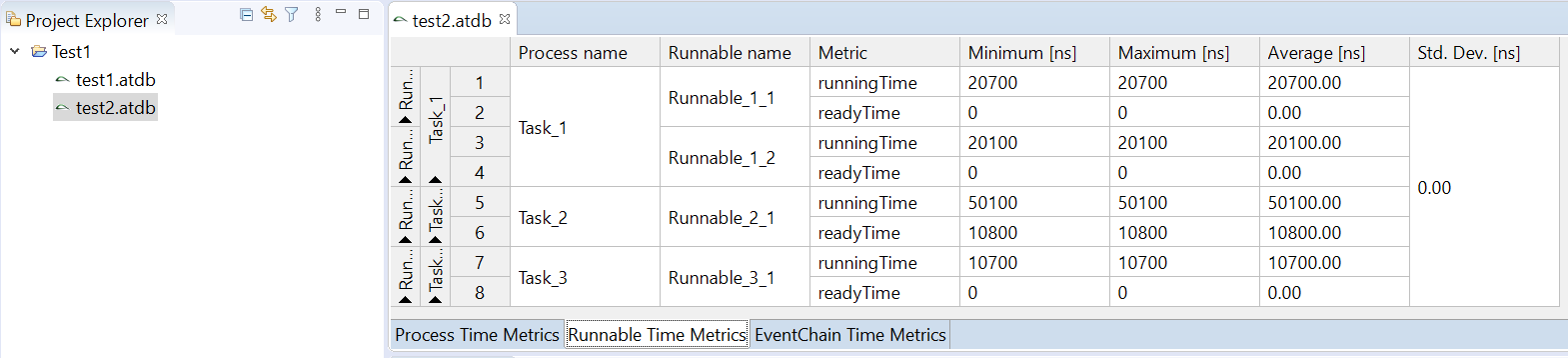

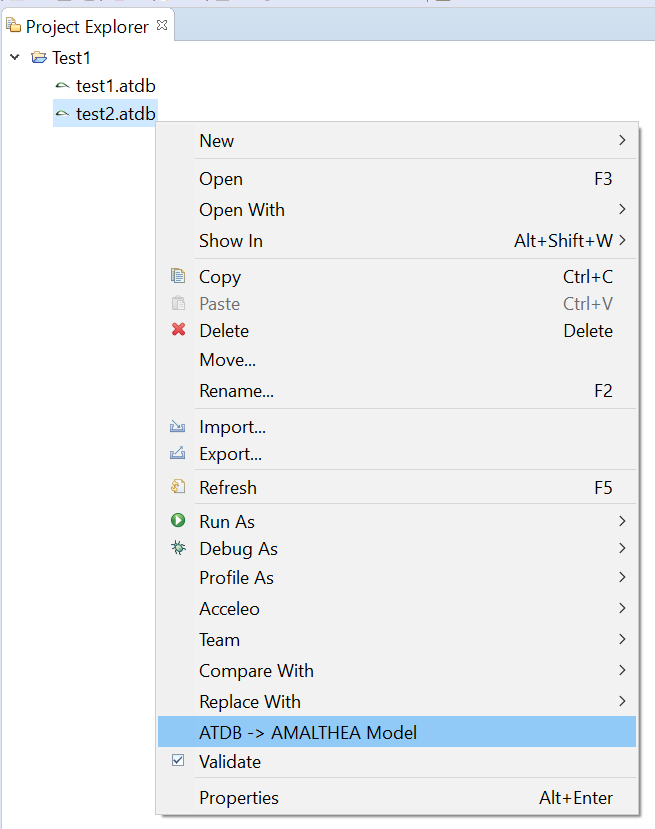

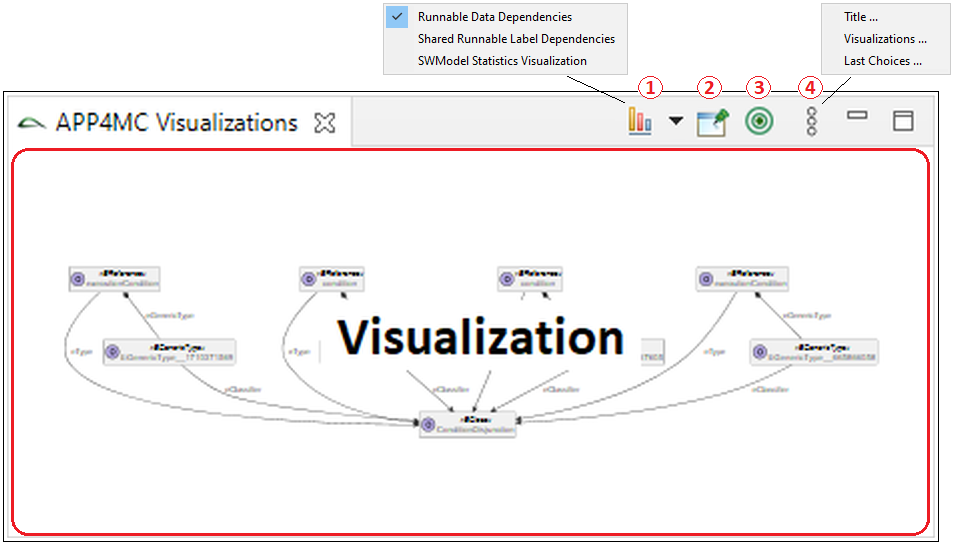

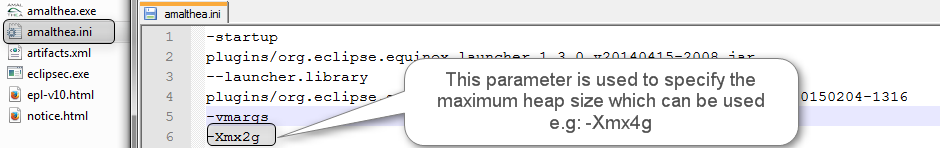

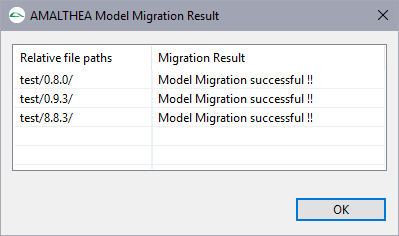

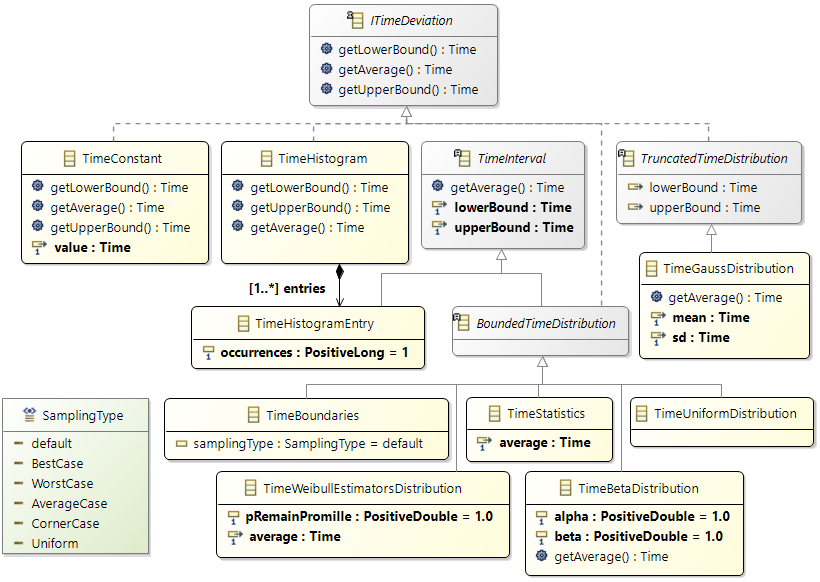

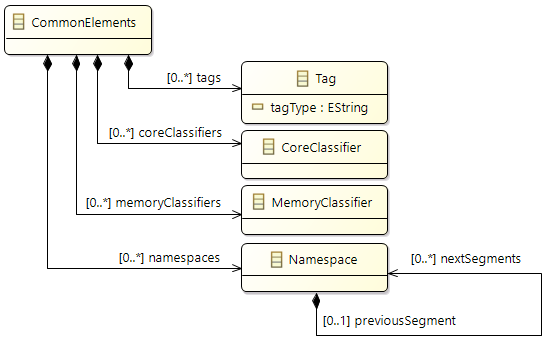

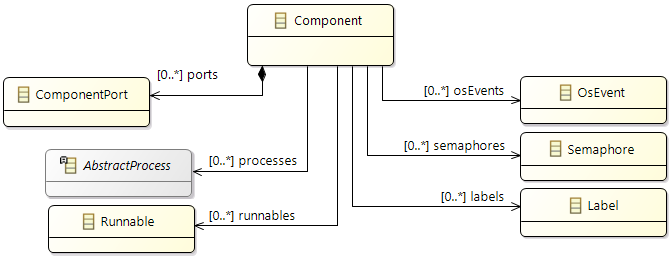

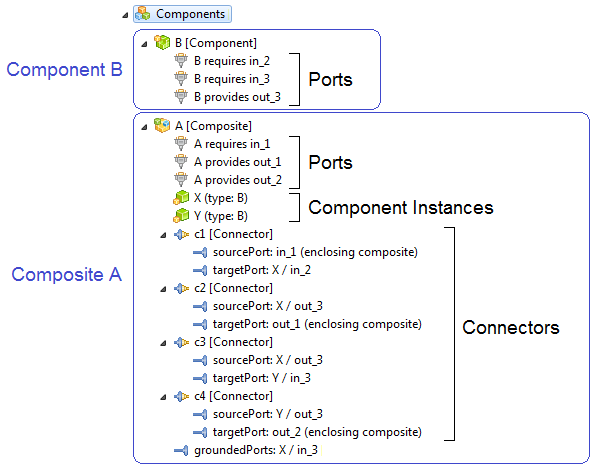

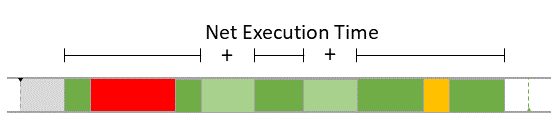

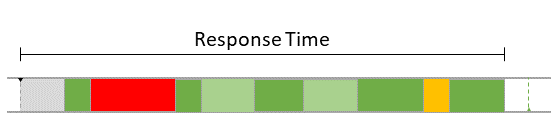

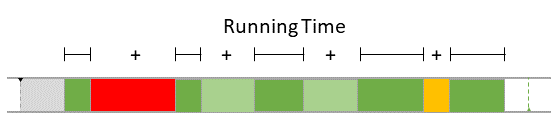

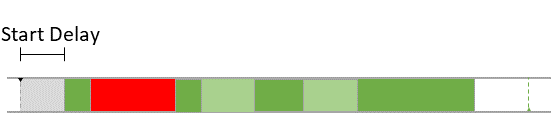

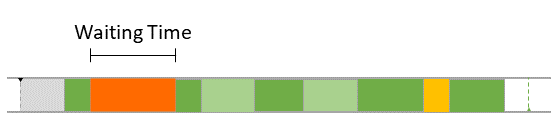

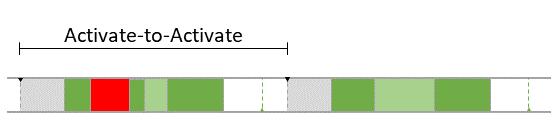

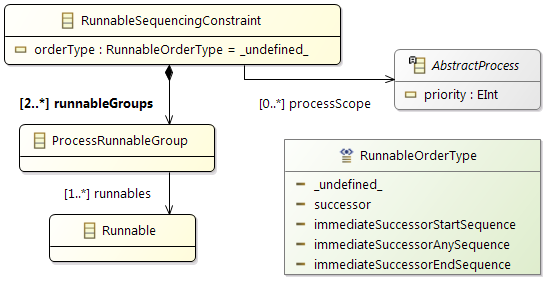

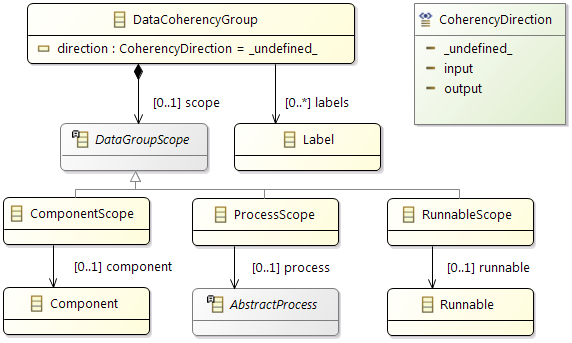

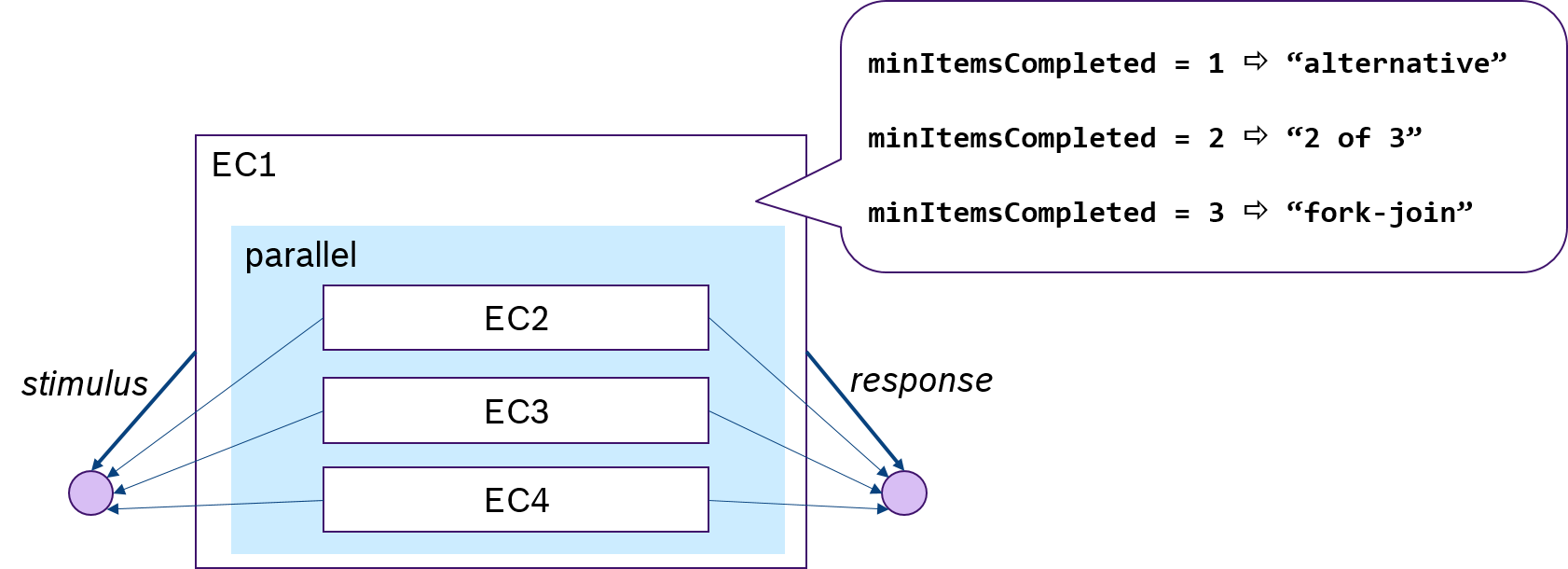

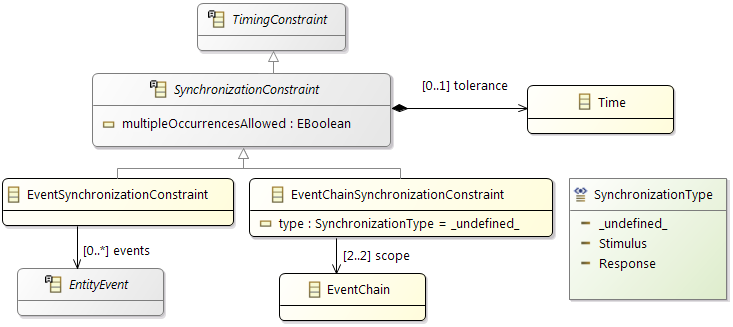

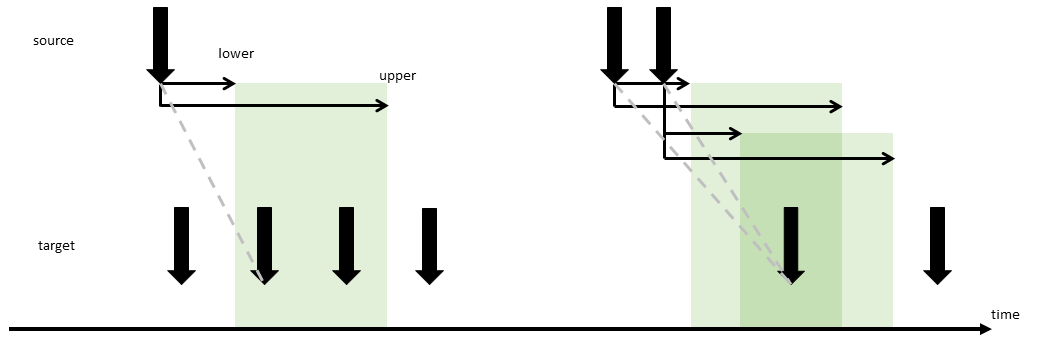

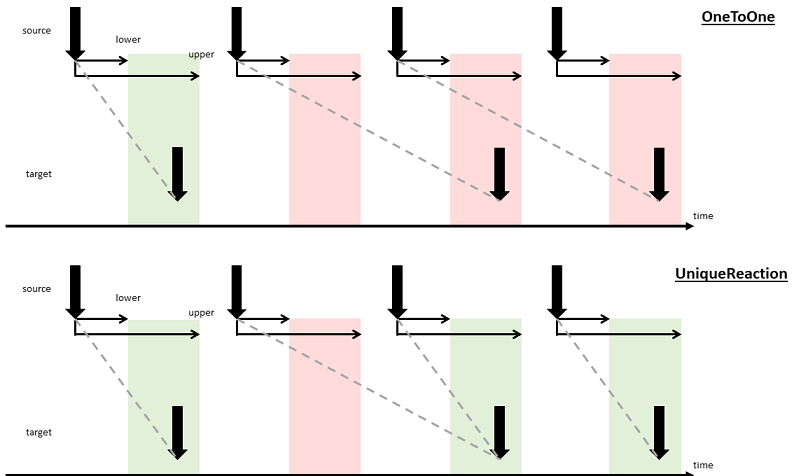

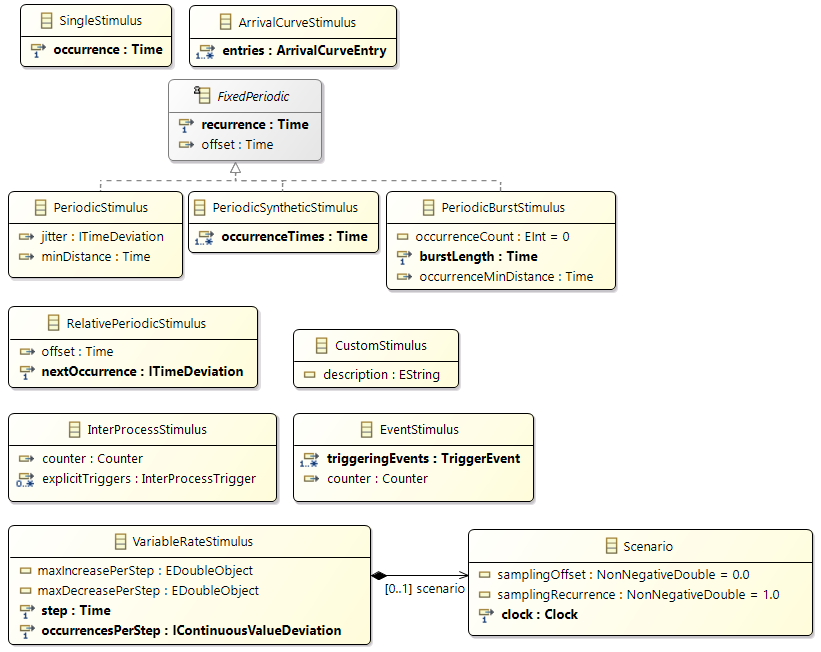

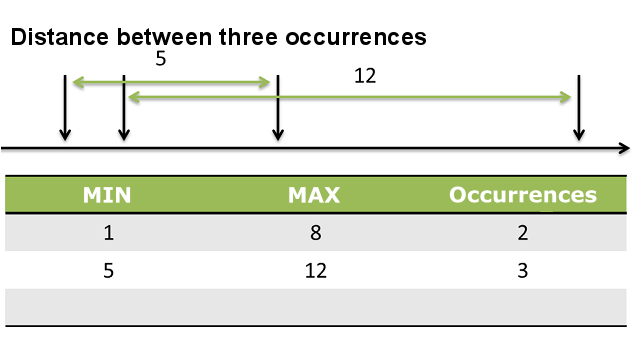

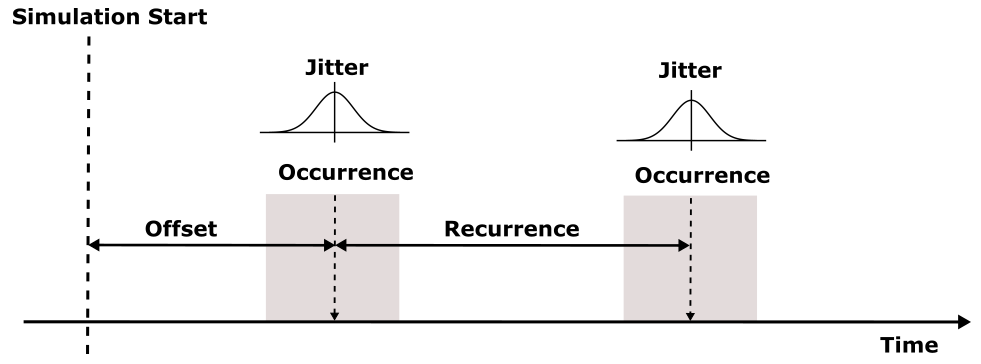

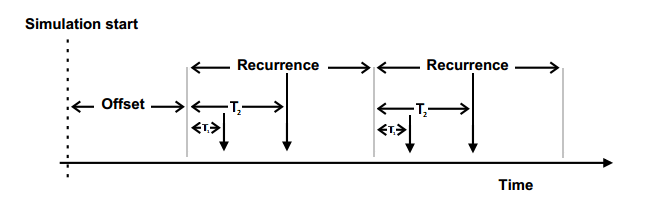

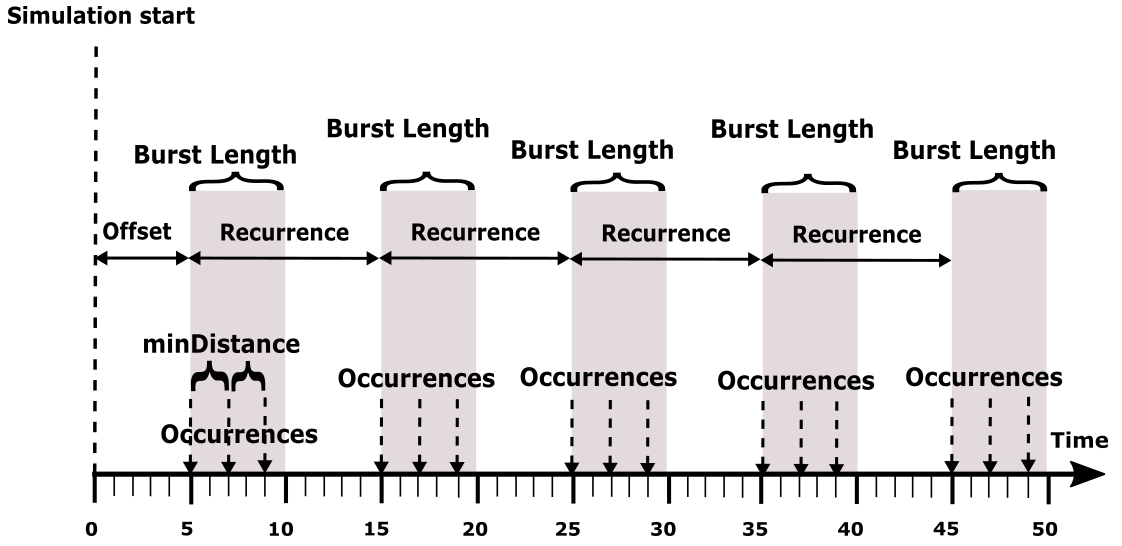

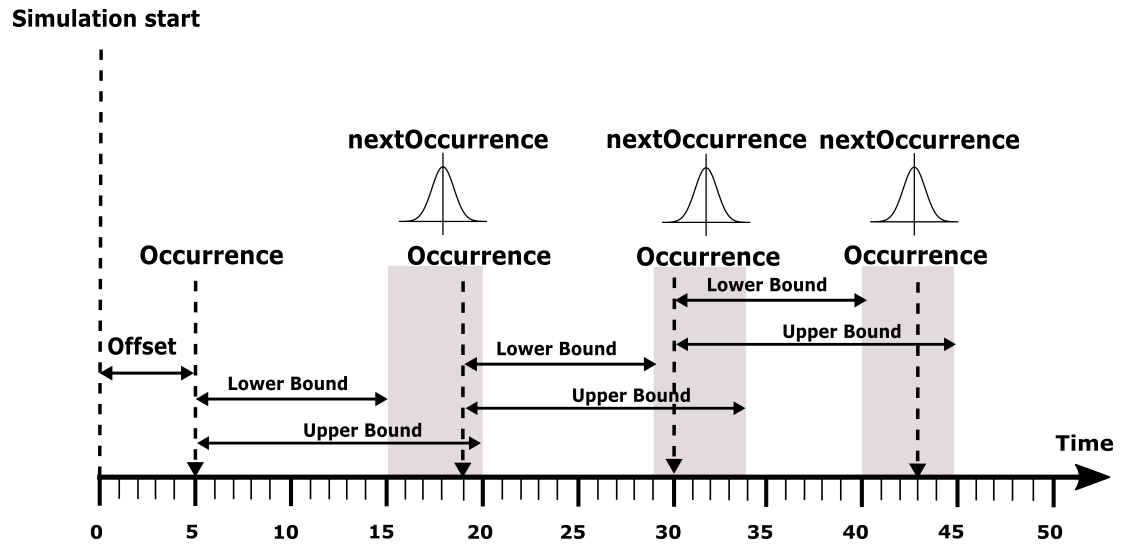

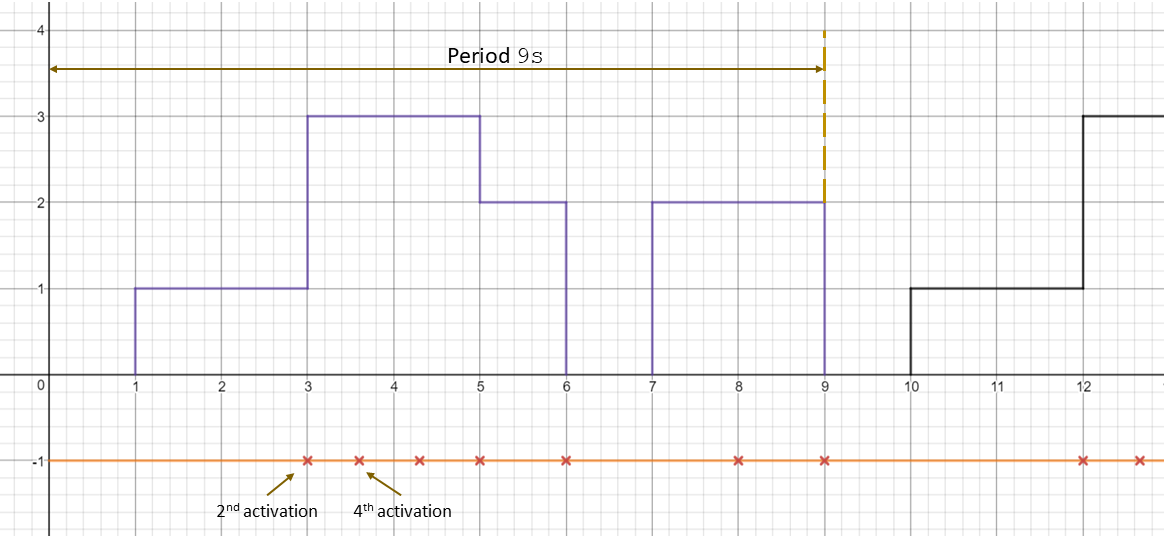

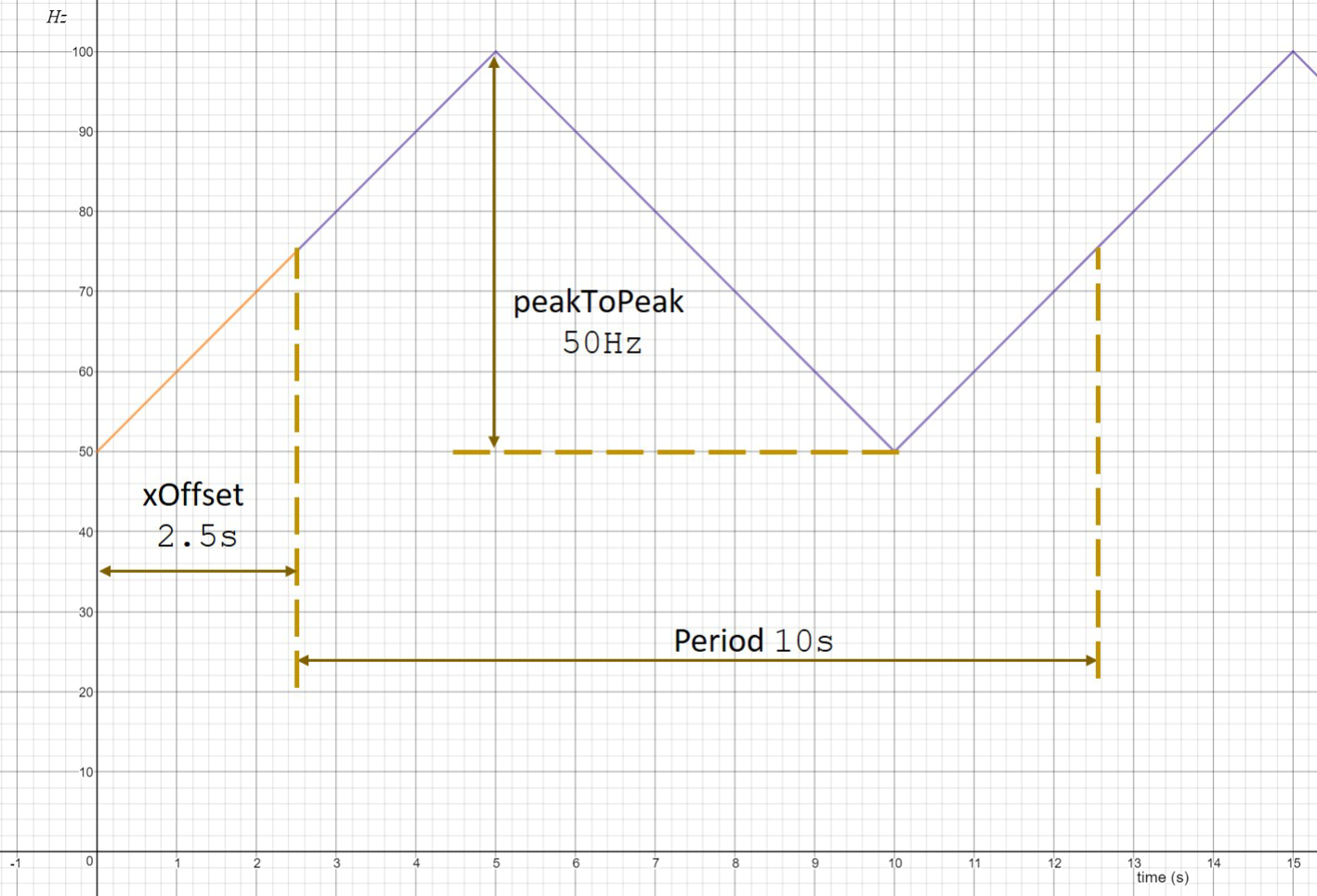

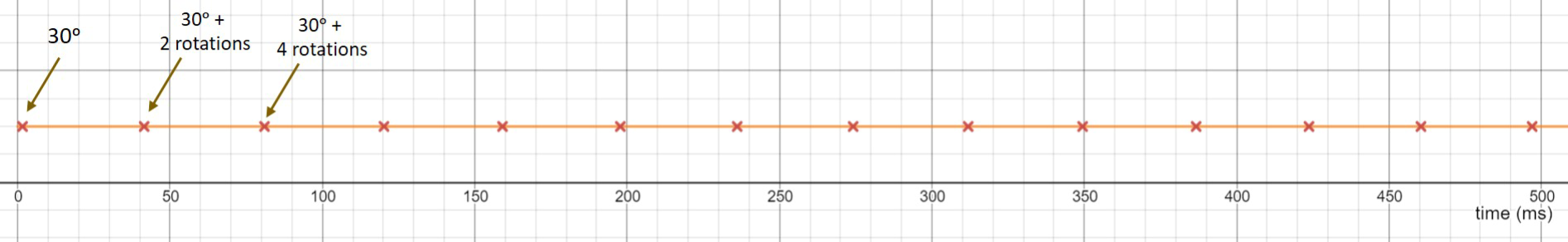

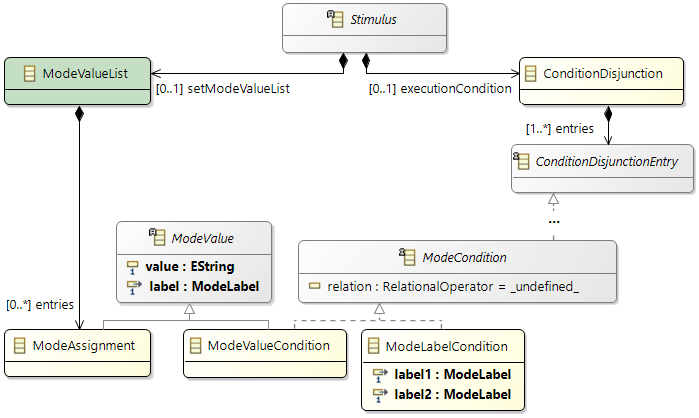

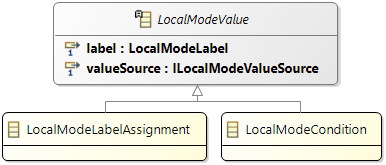

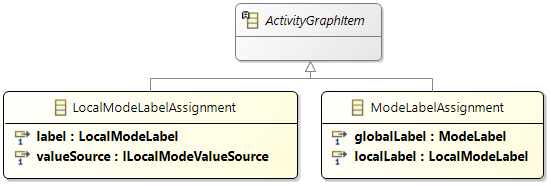

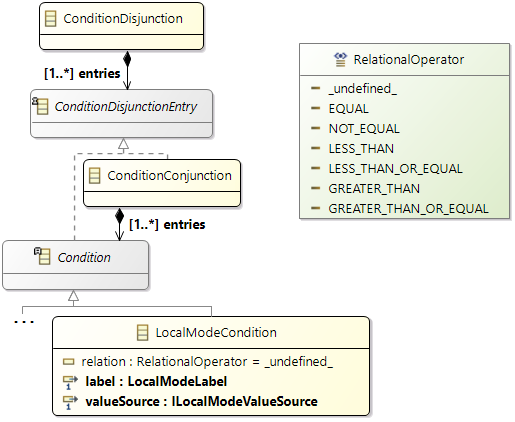

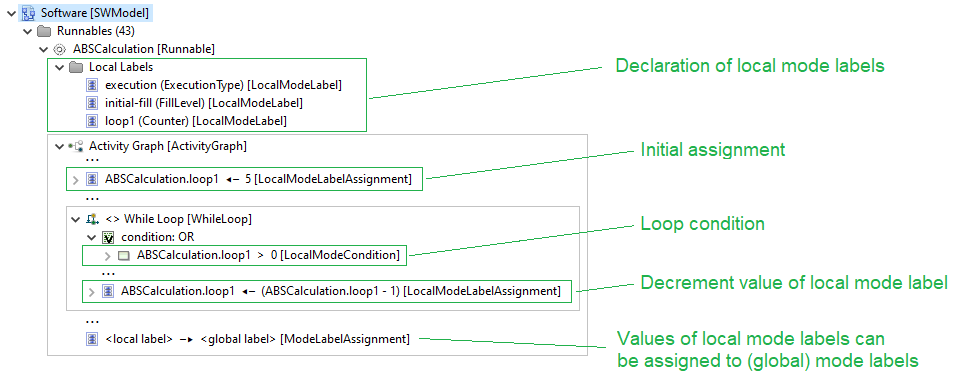

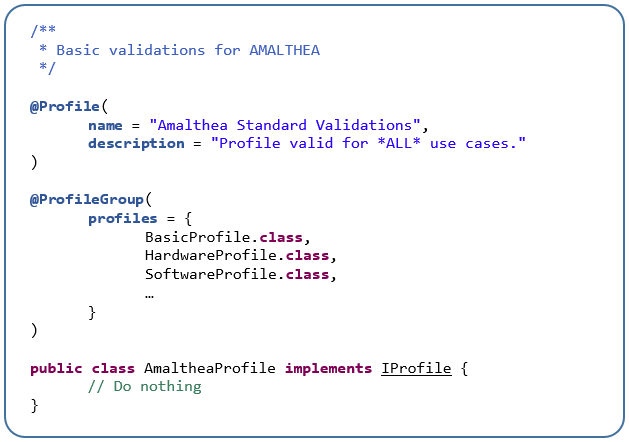

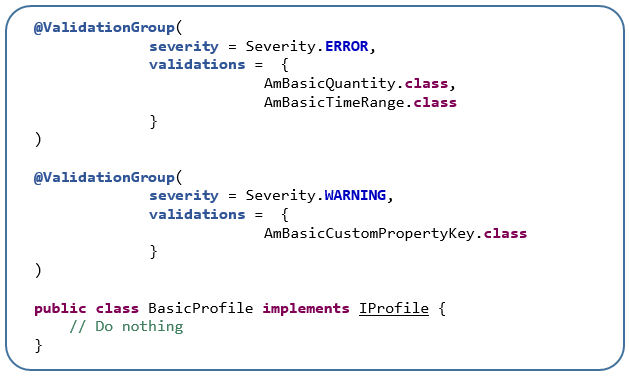

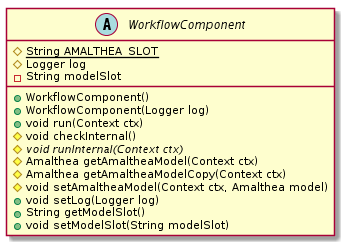

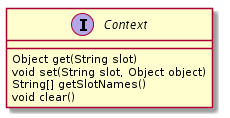

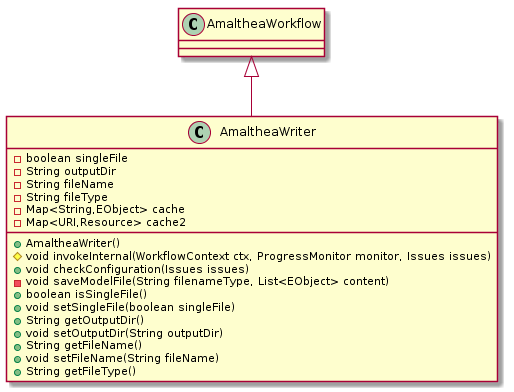

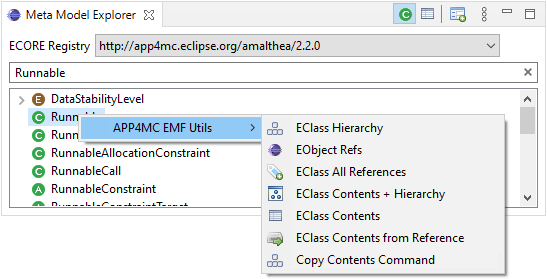

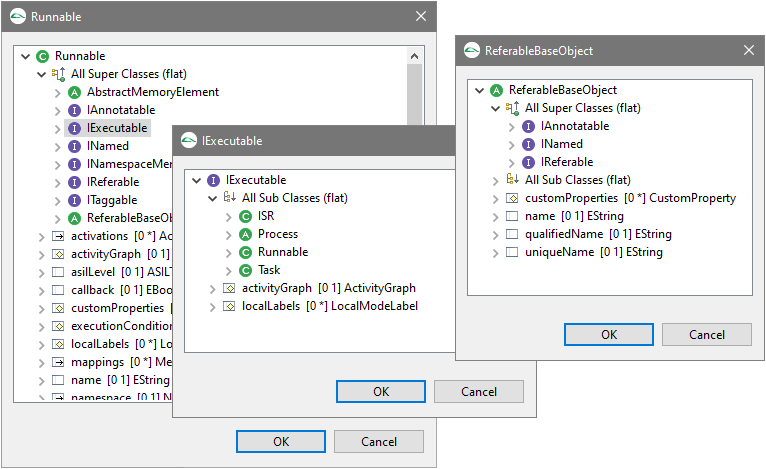

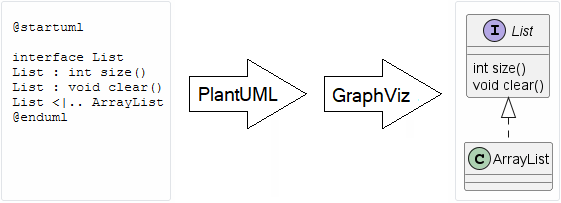

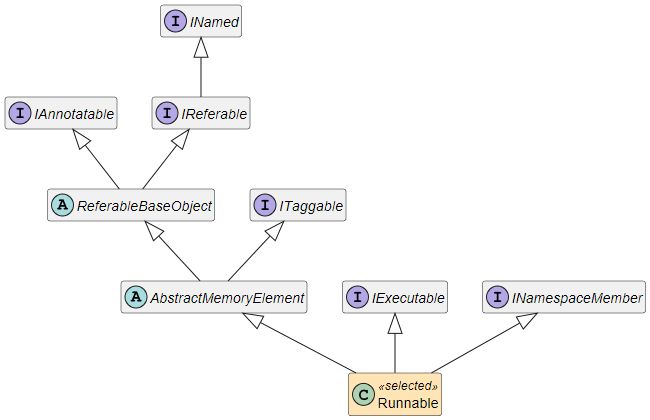

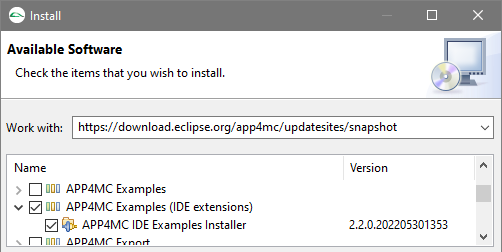

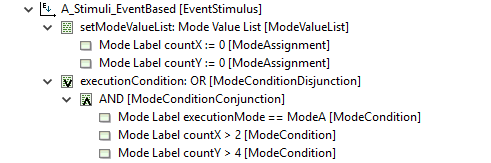

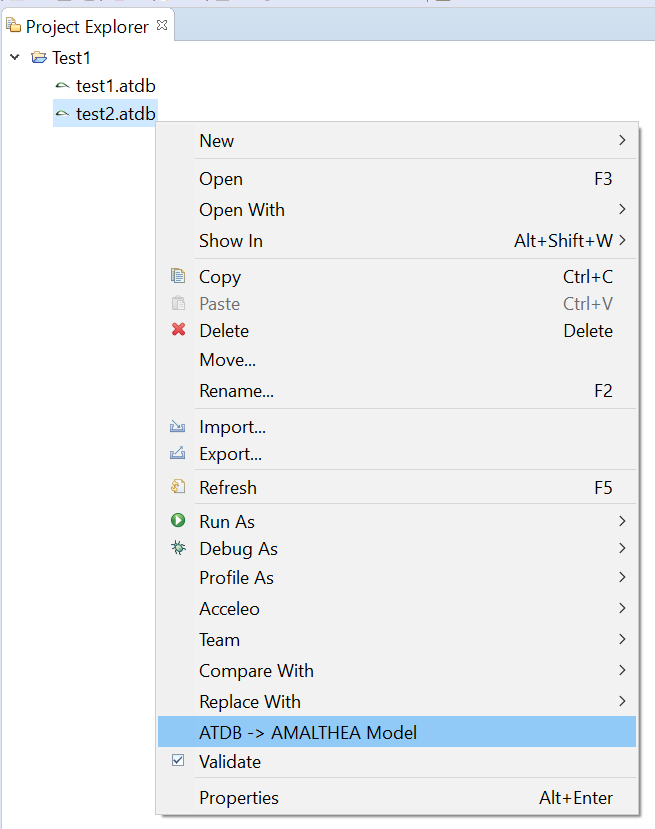

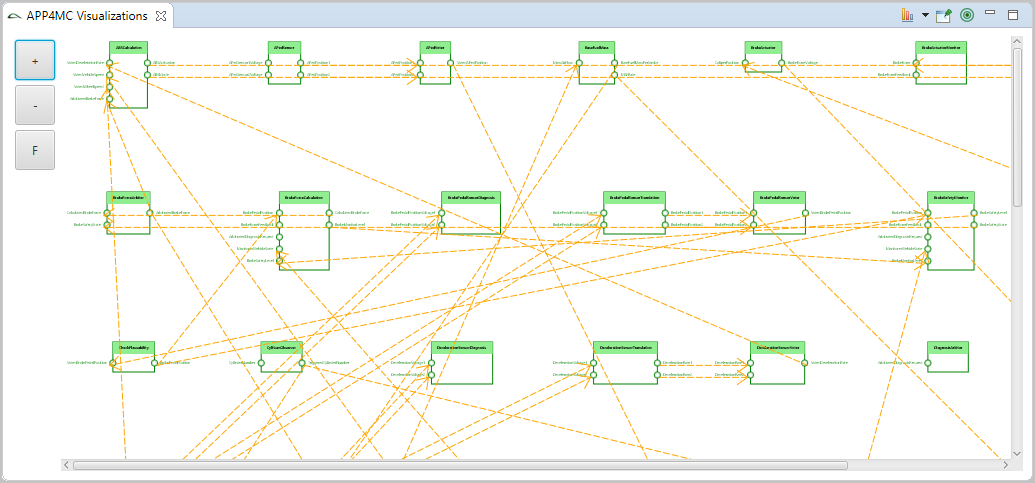

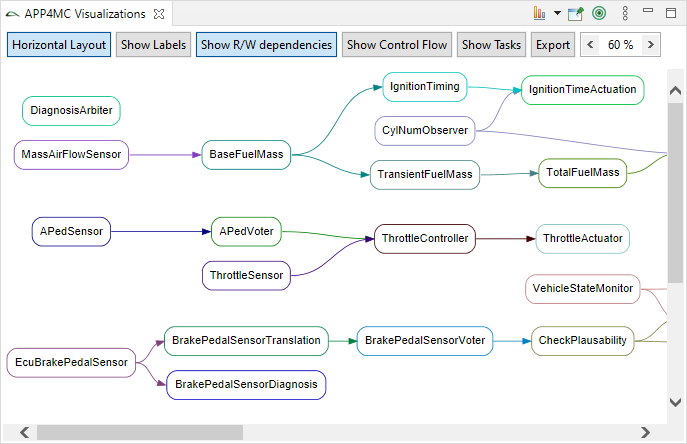

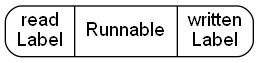

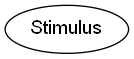

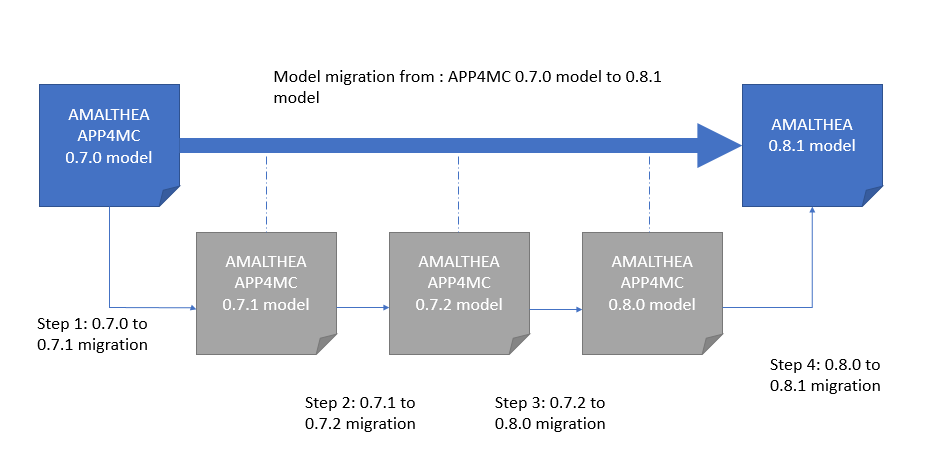

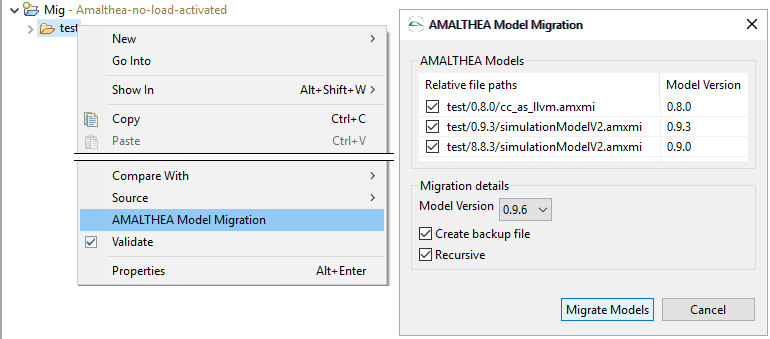

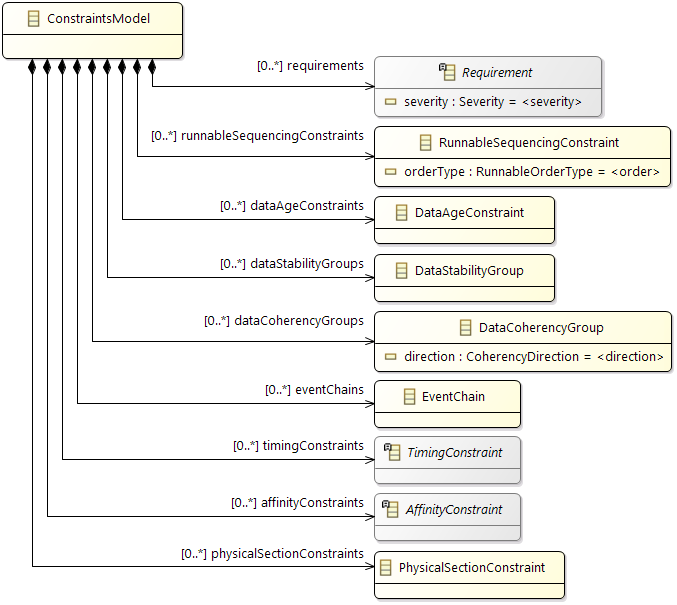

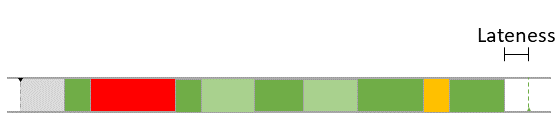

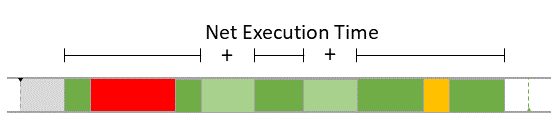

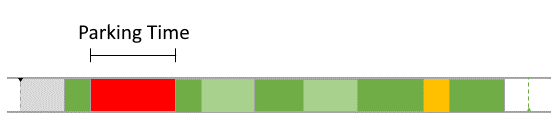

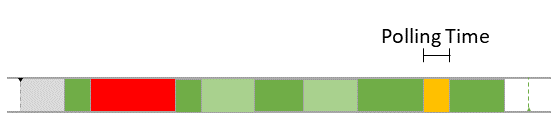

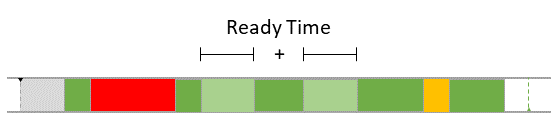

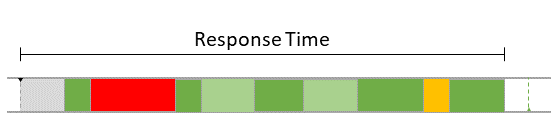

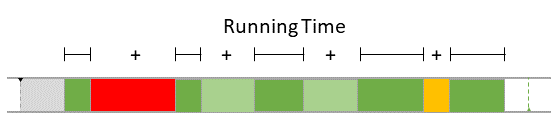

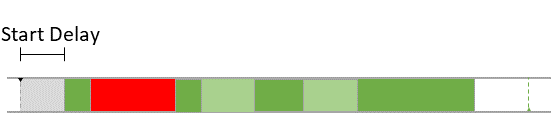

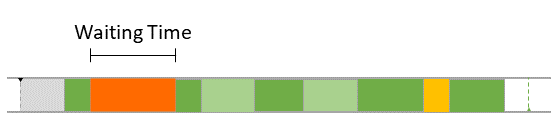

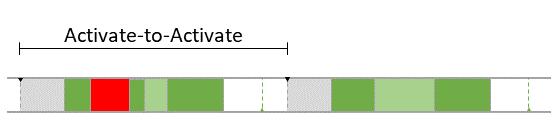

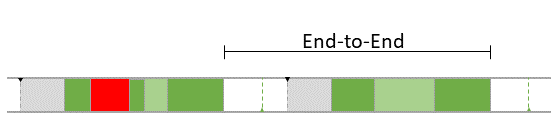

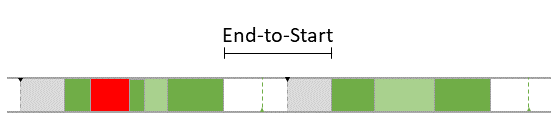

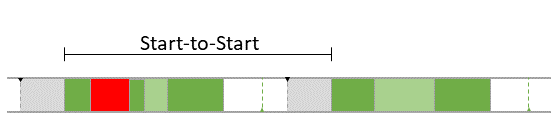

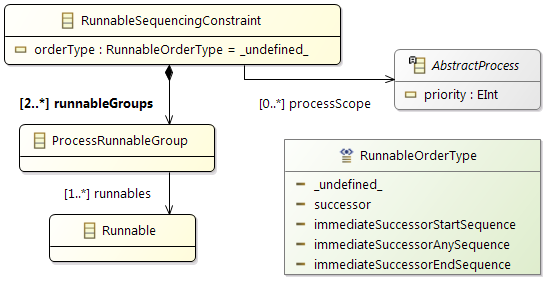

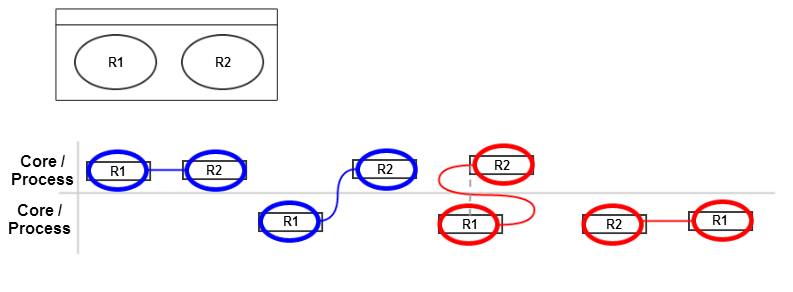

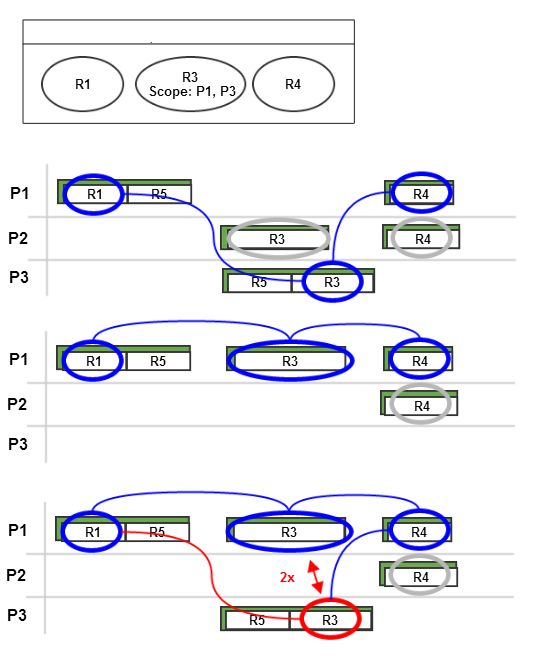

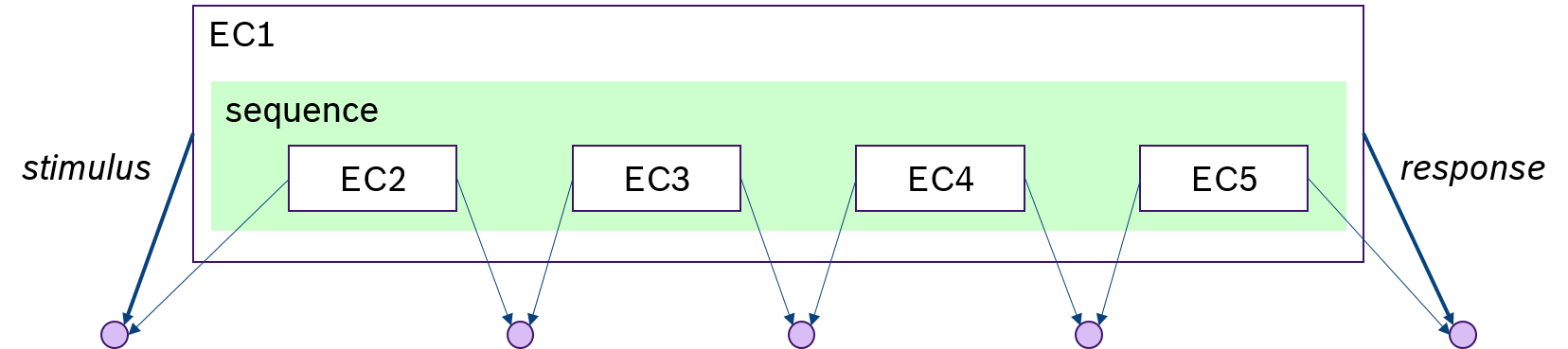

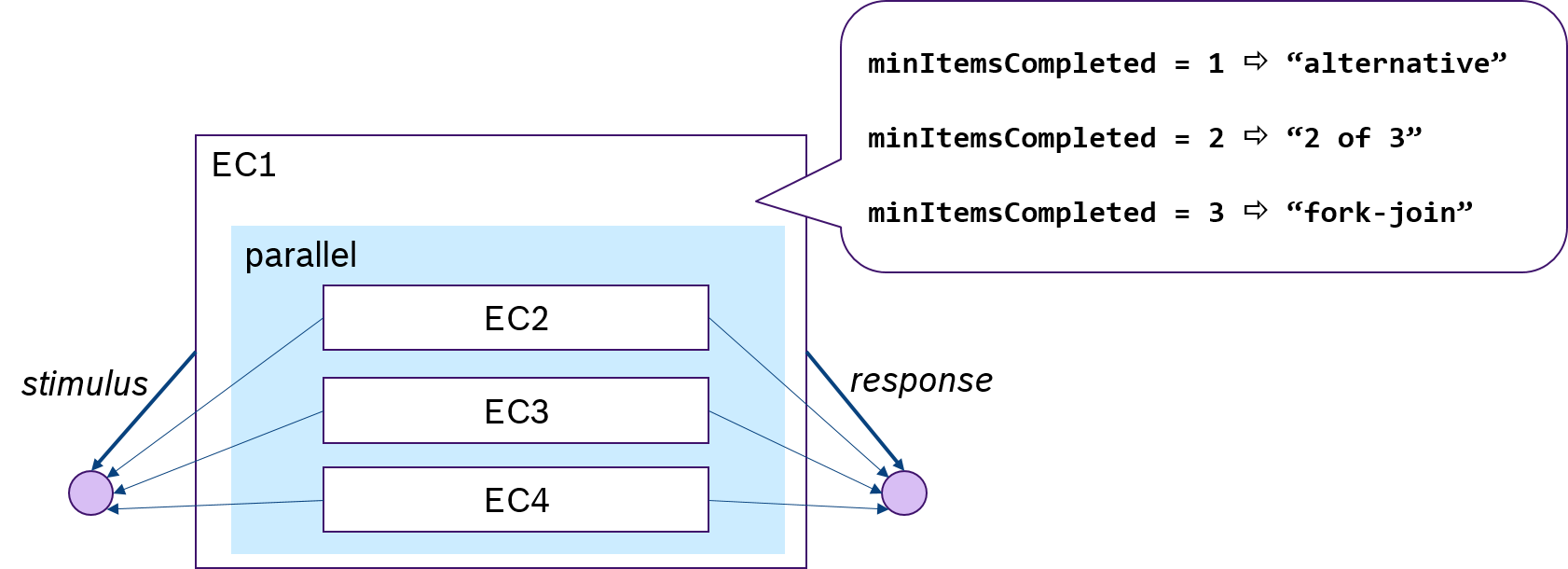

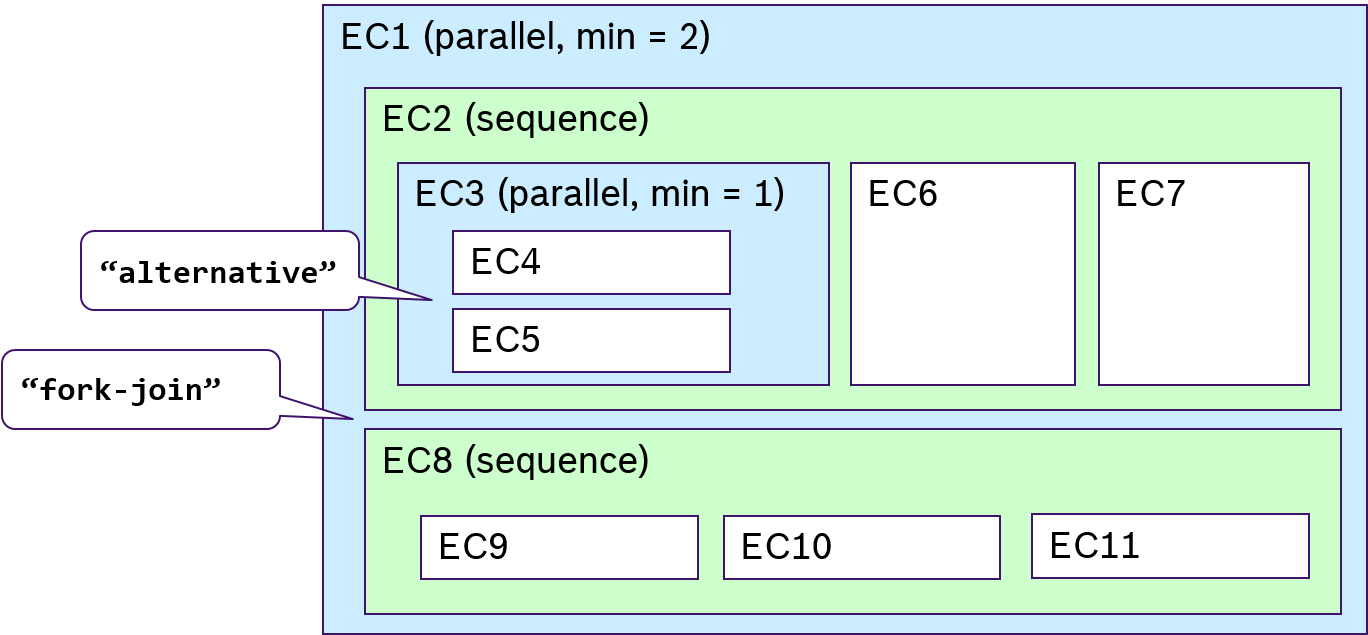

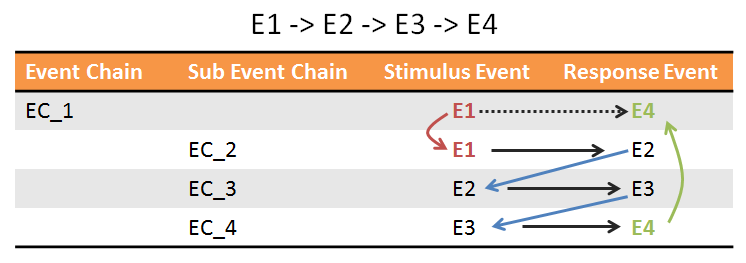

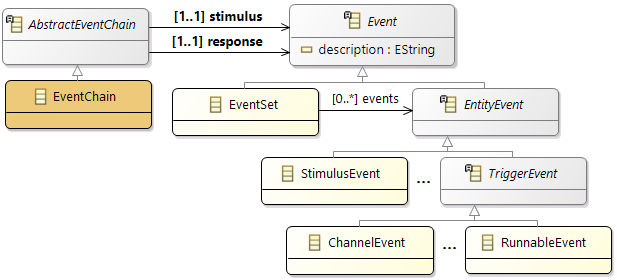

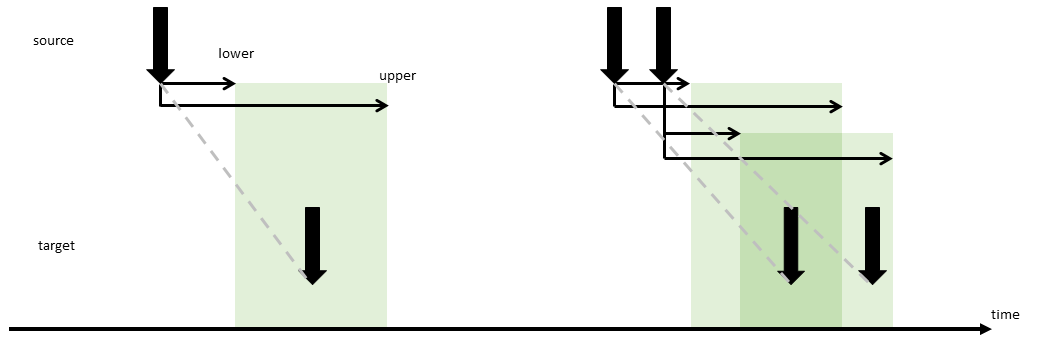

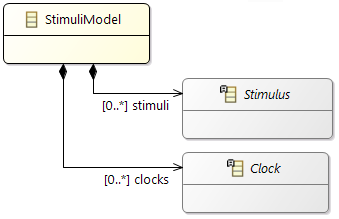

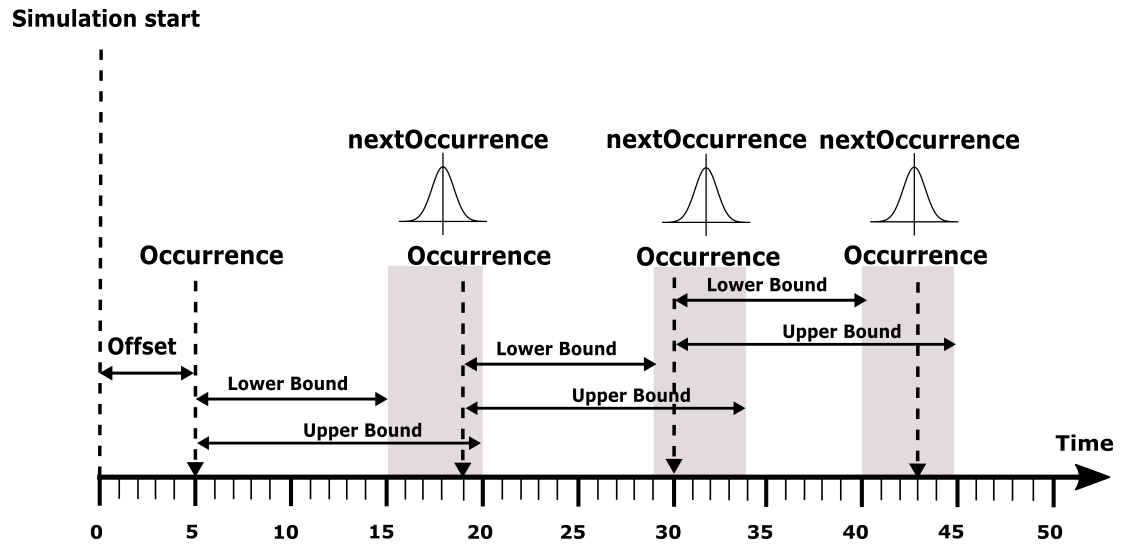

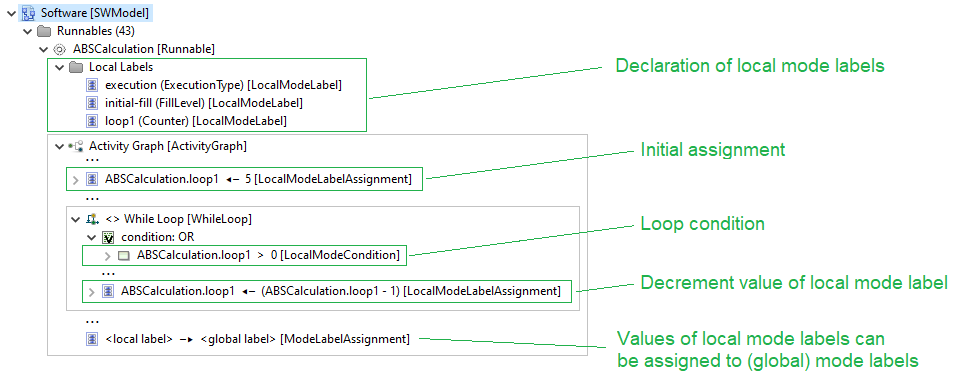

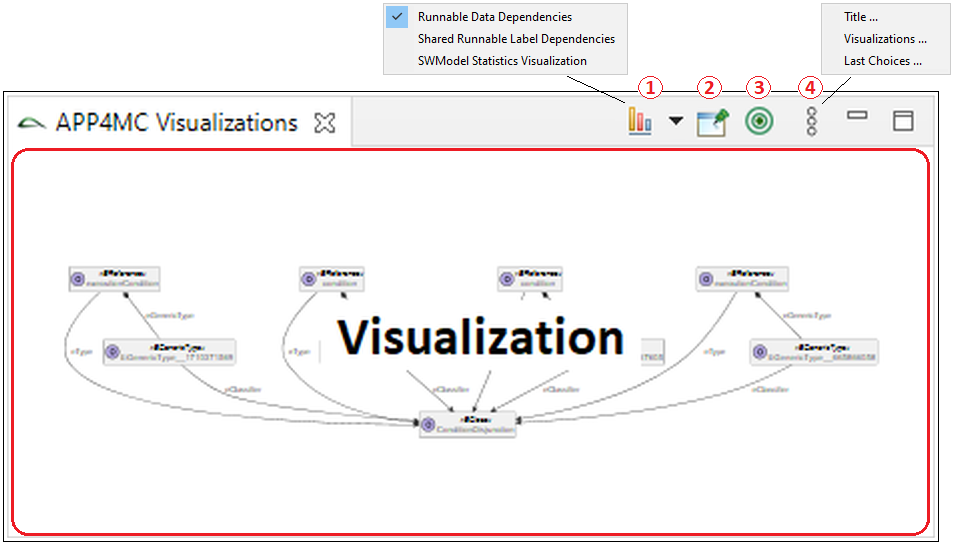

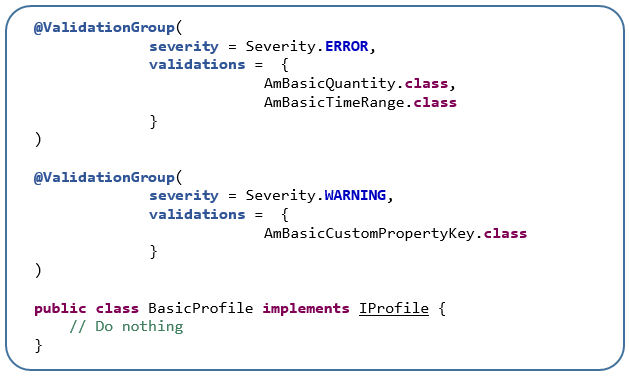

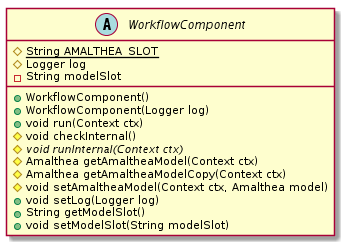

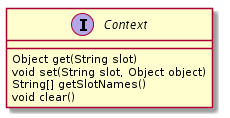

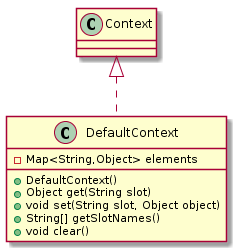

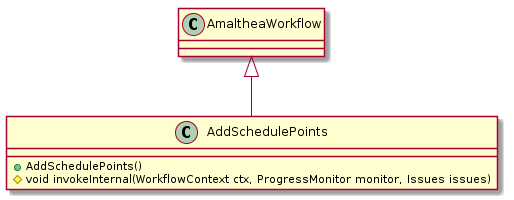

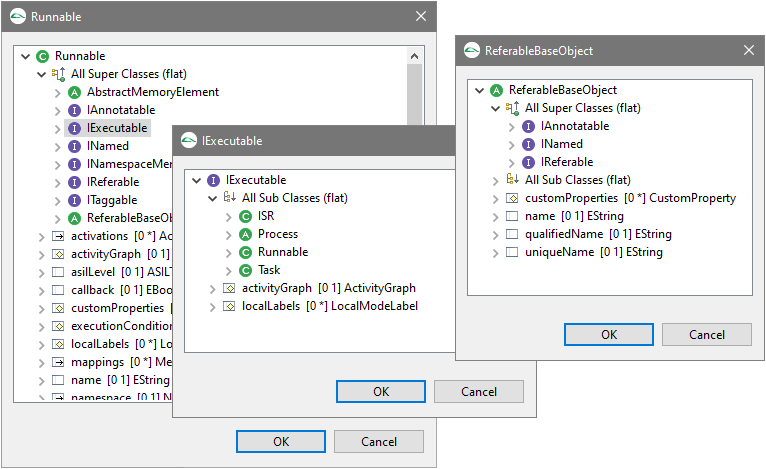

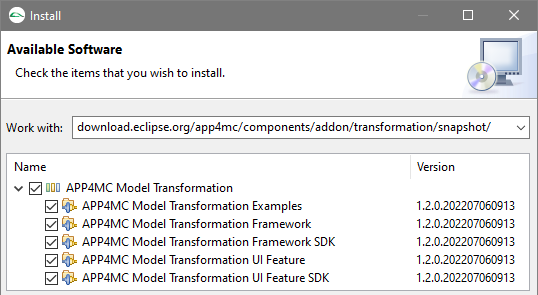

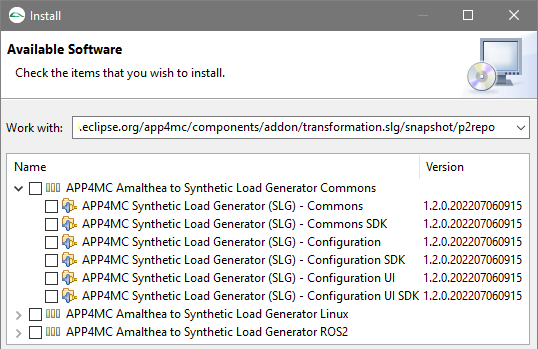

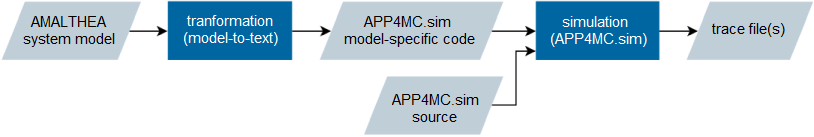

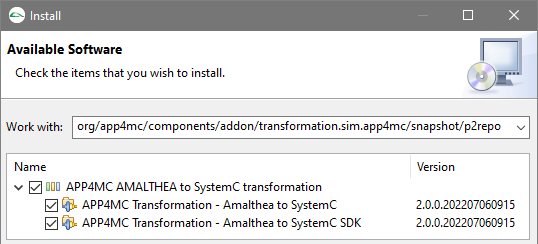

8) Accuracy in Logging of Data State I