Concepts

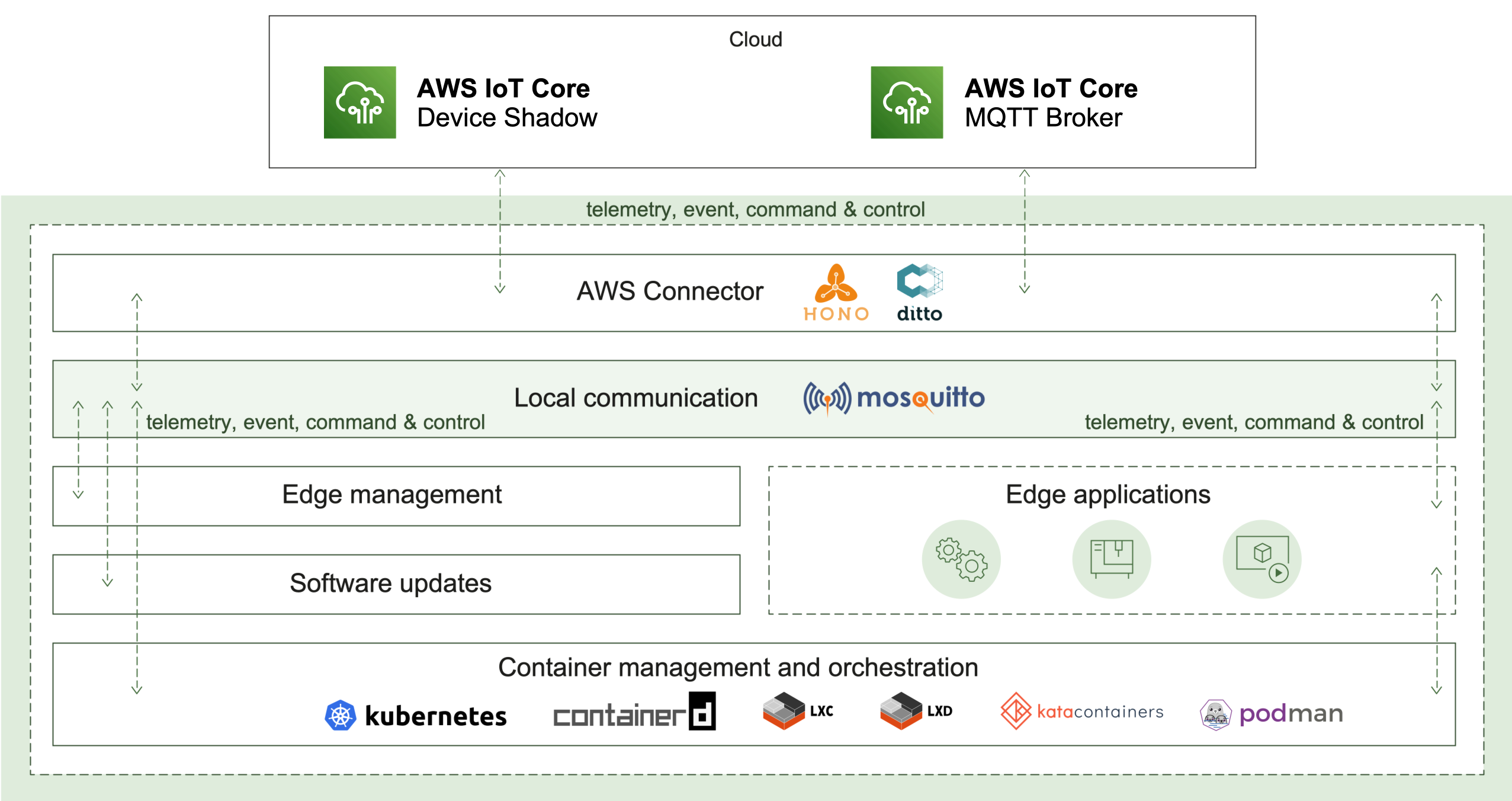

1 - AWS Connector

AWS Connector enables the remote connectivity to an AWS IoT cloud ecosystem. It provides the following use cases:

- Enriched remote connection

  - Optimized - to pass the messages via a single underlying connection

  - Secured - to protect the edge identity and data via TLS with basic and certificate-based authentication

  - Maintained - with a reconnect exponential backoff algorithm

  - Synchronized - on connectivity recovery via message buffering

- Application protection - AWS Connector is the only component with remote connectivity, i.e. all local applications are protected from exposure to the public network

- Offline mode - local applications don’t need to care about the status of the remote connection, they can stay fully operable in offline mode

- Device Shadow - messages sent to the Twin Channel are converted to messages more suitable for AWS Device Shadow service and sent to it.

How it works

The AWS Connector plays a key role in two communication aspects - local and remote.

Cloud connectivity

To initiate its connection, the edge has to be manually or automatically provisioned. The result of this operation is a set of parameters and identifiers. Currently, AWS Connector supports the MQTT transport, which is connection-oriented and requires fewer resources than AMQP. Once established, the connection is used as a channel to pass the edge telemetry and event messages. The IoT cloud can control the edge via commands and responses.

In case of a connection interruption, the AWS Connector switches to offline mode. The message buffer mechanism is activated to ensure that there is no data loss. A reconnect exponential backoff algorithm is started so that no excessive load is generated on the IoT cloud. Local applications are not affected and can continue to operate as normal. Once the remote connection is restored, all buffered messages are sent and the edge is fully restored to online mode.
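
The reconnect behaviour described above can be illustrated with a short sketch of a capped exponential backoff. This is not the connector's actual implementation: the function name `backoff_delays` and the values used (1 second initial delay, factor 2, 60 second cap) are assumptions chosen only for the example.

```python
from typing import Iterator


def backoff_delays(initial: float = 1.0, factor: float = 2.0,
                   cap: float = 60.0) -> Iterator[float]:
    """Yield reconnect delays (in seconds) that grow exponentially up to a cap.

    All names and default values are hypothetical, for illustration only.
    """
    delay = initial
    while True:
        yield min(delay, cap)
        # Grow the delay for the next attempt, never exceeding the cap.
        delay = min(delay * factor, cap)


if __name__ == "__main__":
    delays = backoff_delays()
    # prints [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 60.0, 60.0]
    print([next(delays) for _ in range(8)])
```

Capping the delay keeps the edge from waiting indefinitely long between attempts, while the exponential growth limits the load on the cloud during longer outages.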

Local communication

To keep local applications loosely coupled, Eclipse Hono™ MQTT definitions are used. The event-driven local message exchange is done via an MQTT message broker, Eclipse Mosquitto™. The AWS Connector takes the responsibility to forward these messages to the IoT cloud and vice versa.

Monitoring of the remote connection status is also available locally, along with details like the last known state of the connection, a timestamp and a predefined connect/disconnect reason.

2 - Container management

Container management enables a lightweight standard runtime which is capable of running containerized applications with all advantages of the technology: isolation, portability and efficiency. The deployment and management are available both locally and remotely via an IoT cloud ecosystem of choice. The following use cases are provided:

- Standardized approach - with OCI (Open Container Initiative) compliant container images and runtime

- Lightweight runtime - with a default integration of containerd and a possibility for another container technology of choice like podman, LXC and more

- Isolation - with a default isolation from other containerized applications and the host system

- Portability - with an option to run one and the same containerized application on different platforms

- Pluggable architecture - with extension points on different levels

How it works

A container image packs the application executable along with all its needed dependencies into a single artifact that can be built by a tooling of choice. The built image is made available for usage by being pushed to a container image registry where the runtime can refer to it.

To create a new container instance, the container management uses such an image reference and a configuration for it to produce a fully functional container.

The container lifecycle (start, update, stop, remove) and environment (memory constraints, restart policy, etc.) are also handled by the runtime.

The container management continuously ensures application availability via state awareness and restart policies, and provides monitoring via flexible logging as well as fine-grained resource management.

All of that is achieved on top of an underlying runtime of choice (containerd by default) that takes care of the low-level isolation mechanisms.
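
As an illustration of the restart policies mentioned above, the following sketch shows how a runtime might decide whether an exited container should be started again. The class `RestartPolicy`, the policy names and the retry limit are hypothetical and modeled on common container runtimes; they are not taken from the container management API.

```python
from dataclasses import dataclass


@dataclass
class RestartPolicy:
    # Hypothetical policy kinds: "no", "always" or "on-failure".
    kind: str = "on-failure"
    # Maximum number of restarts; only used by "on-failure" in this sketch.
    max_retries: int = 3


def should_restart(policy: RestartPolicy, exit_code: int, retries: int) -> bool:
    """Decide whether an exited container should be started again."""
    if policy.kind == "always":
        return True
    if policy.kind == "on-failure":
        # Restart only after a non-zero exit and while retries remain.
        return exit_code != 0 and retries < policy.max_retries
    return False


if __name__ == "__main__":
    policy = RestartPolicy(kind="on-failure", max_retries=3)
    print(should_restart(policy, exit_code=1, retries=2))
    print(should_restart(policy, exit_code=1, retries=3))
```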

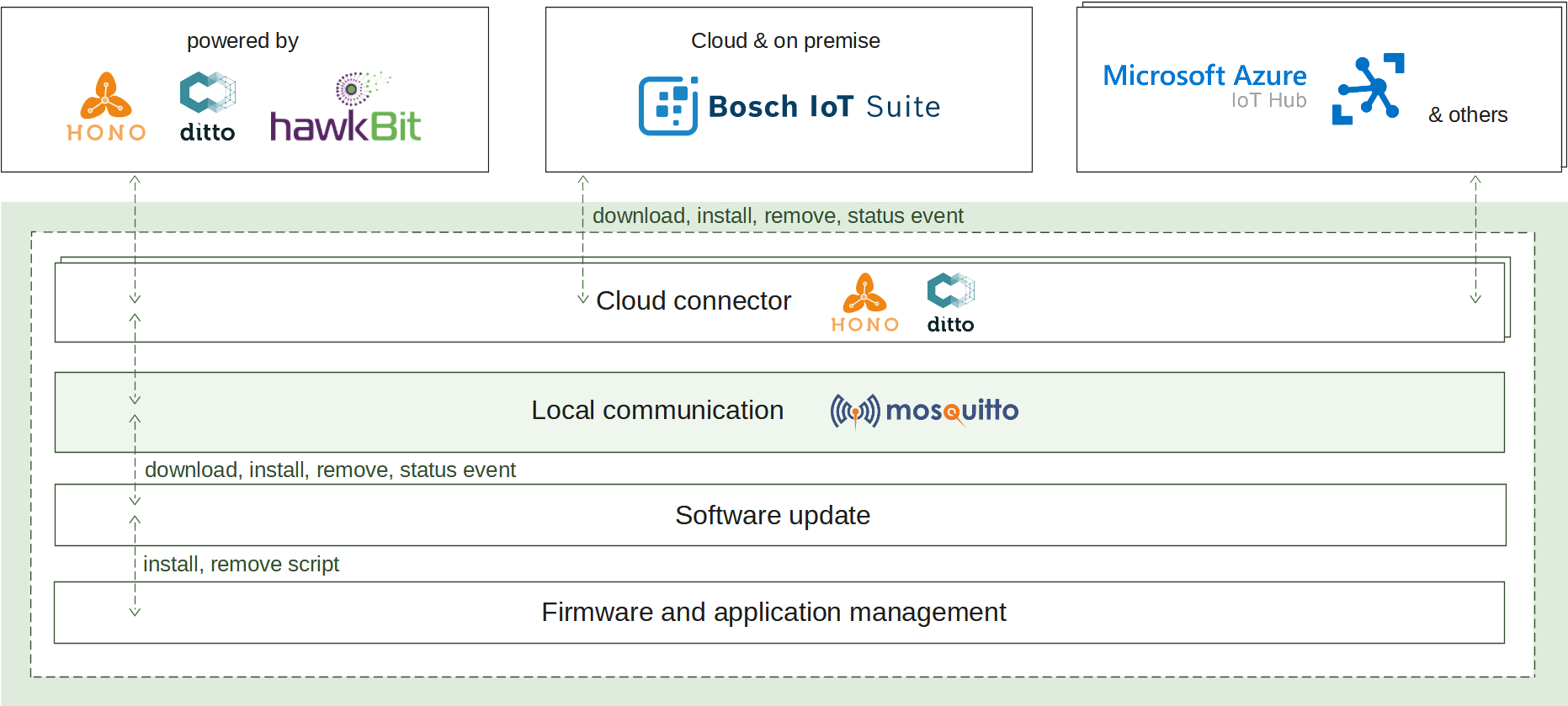

3 - Software update

Software update enables the deployment and management of various software artifacts, both locally and remotely via an IoT cloud ecosystem of choice. It provides the following use cases:

- Robust download - with a retry and resume mechanism when the network connection is interrupted

- Artifact validation - with an integrity validation of every downloaded artifact

- Universal installation - with customizable install scripts to handle any kind of software

- Operation monitoring - with a status reporting of the download and install operations

How it works

When the install operation is received at the edge, the download process is initiated. Retrieving the artifacts continues until they are stored at the edge or their size threshold is reached. If the download is successful, the artifacts are validated for integrity and further processed by the configured script, which is responsible for applying the new software and finishing the operation. A status report is announced on each step of the process, enabling its transparent monitoring.

On start up, if there have been any ongoing operations, they will be automatically resumed as the operation state is persistently stored.
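
The integrity validation step can be sketched as a checksum comparison. The text above does not state which hash algorithm is used, so SHA-256 is an assumption here, and the function name `verify_artifact` is hypothetical.

```python
import hashlib
from pathlib import Path


def verify_artifact(path: Path, expected_sha256: str,
                    chunk_size: int = 65536) -> bool:
    """Return True if the file's SHA-256 digest matches the expected hex value.

    The file is read in chunks so that large artifacts are not loaded
    into memory at once. SHA-256 is an assumed algorithm for this sketch.
    """
    digest = hashlib.sha256()
    with path.open("rb") as artifact:
        for chunk in iter(lambda: artifact.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_sha256.lower()
```

An artifact that fails this check would not be handed over to the install script.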

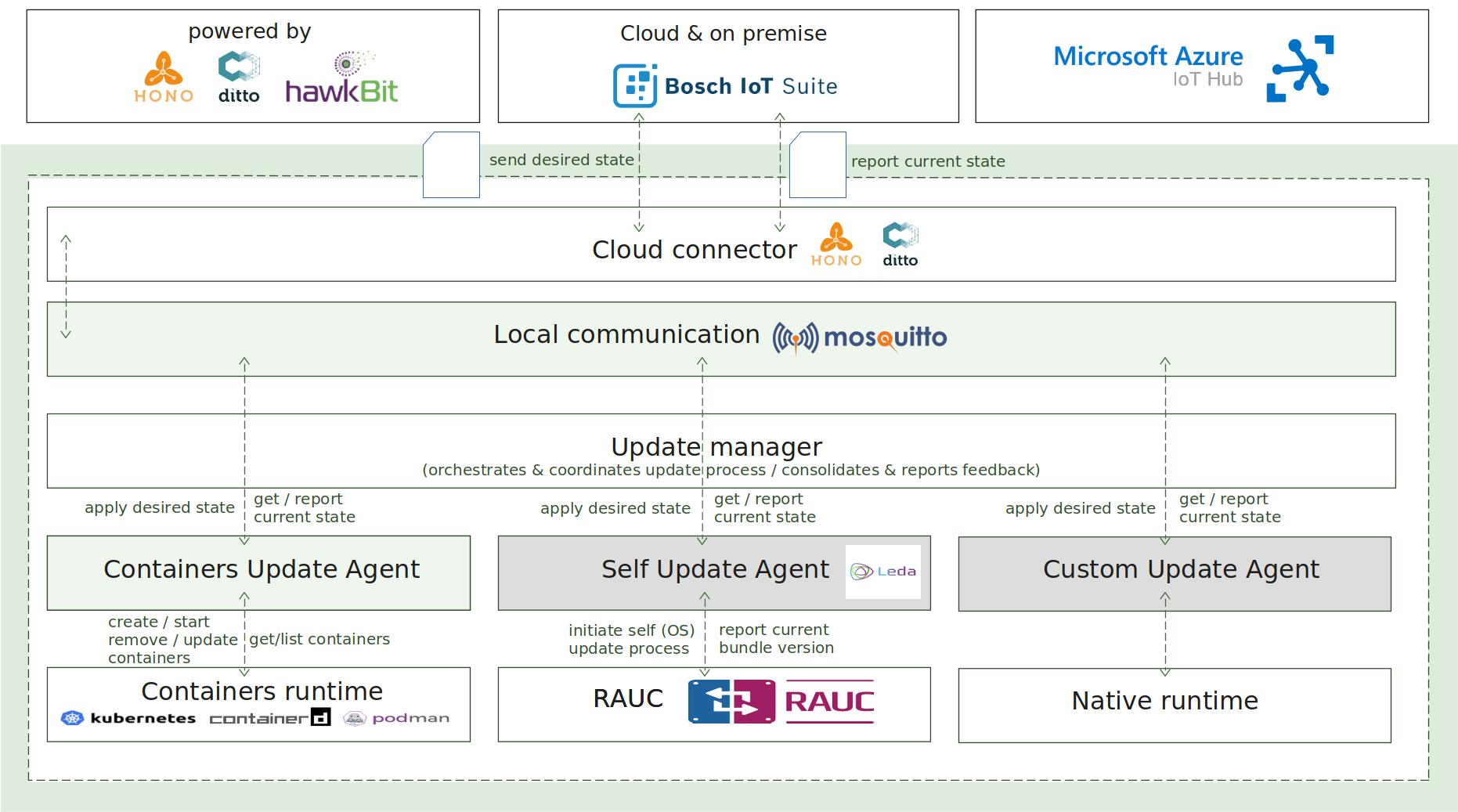

4 - Update manager

Update manager enables a lightweight core component which is capable of easily performing complex OTA update scenarios on a target device. The following capabilities are provided:

- Lightweight - consists of a single core component which orchestrates the update process

- Flexible deployment - supports different deployment models - natively, as an executable or container

- Unified API - all domain agents utilize a unified Update Agent API for interacting with the Update Manager

- MQTT Connectivity - the connectivity and communication between the Update Manager and domain agent is MQTT-based

- Multi-domain integration - easily integrates, scales and performs complex update operations across multiple domains

- Default update agents - integrates with the out-of-the-box domain update agent implementation provided by Kanto for deploying containers into the Kanto container management

- Pluggable architecture - provides an extensible model for plugging in custom orchestration logic

- Asynchronous updates - asynchronous and independent update process across the different domains

- Multi-staged updates - the update process is organized into different stages

- Configurable - offers a variety of configuration options for connectivity, supported domains, message reporting, etc.

How it works

The update process is initiated by sending the desired state specification as an MQTT message towards the device, which is handled by the Update Manager component.

The desired state specification in the scope of the Update Manager is a JSON-based document, which consists of multiple component definitions per domain, representing the desired state to be applied on the target device. A component in the same context means a single, atomic and updatable unit, for example an OCI-compliant container, a software application or a firmware image.

Each domain agent is a separate and independent software component, which implements the Update Agent API for interaction with the Update Manager and manages the update logic for a concrete domain, for example container management.

The Update Manager, operating as a coordinator, is responsible for processing the desired state specification, distributing the split specification across the different domain agents, orchestrating the update process via MQTT-based commands, collecting and consolidating the feedback responses from the domain update agents, and reporting the final result of the update campaign to the backend.
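
The distribution step can be pictured with a small sketch that splits a desired state document into one part per domain. The JSON field names used here (`domains`, `id`, `components`, `version`) and the sample values are assumptions made for illustration and may differ from the actual specification format.

```python
import json
from typing import Any, Dict


def split_by_domain(desired_state: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Return one sub-specification per domain, keyed by the domain id.

    The document layout is hypothetical: a top-level "domains" list whose
    entries carry an "id" and a list of "components".
    """
    return {domain["id"]: domain for domain in desired_state.get("domains", [])}


if __name__ == "__main__":
    spec = json.loads("""
    {
      "domains": [
        {"id": "containers", "components": [{"id": "influxdb", "version": "2.7"}]},
        {"id": "firmware", "components": [{"id": "bootloader", "version": "1.0"}]}
      ]
    }
    """)
    # Each part would be sent to the agent responsible for that domain.
    for domain_id, part in split_by_domain(spec).items():
        print(domain_id, [component["id"] for component in part["components"]])
```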

In addition, the Update Manager can reboot the host after the update process is completed and report the current state of the system to the backend.

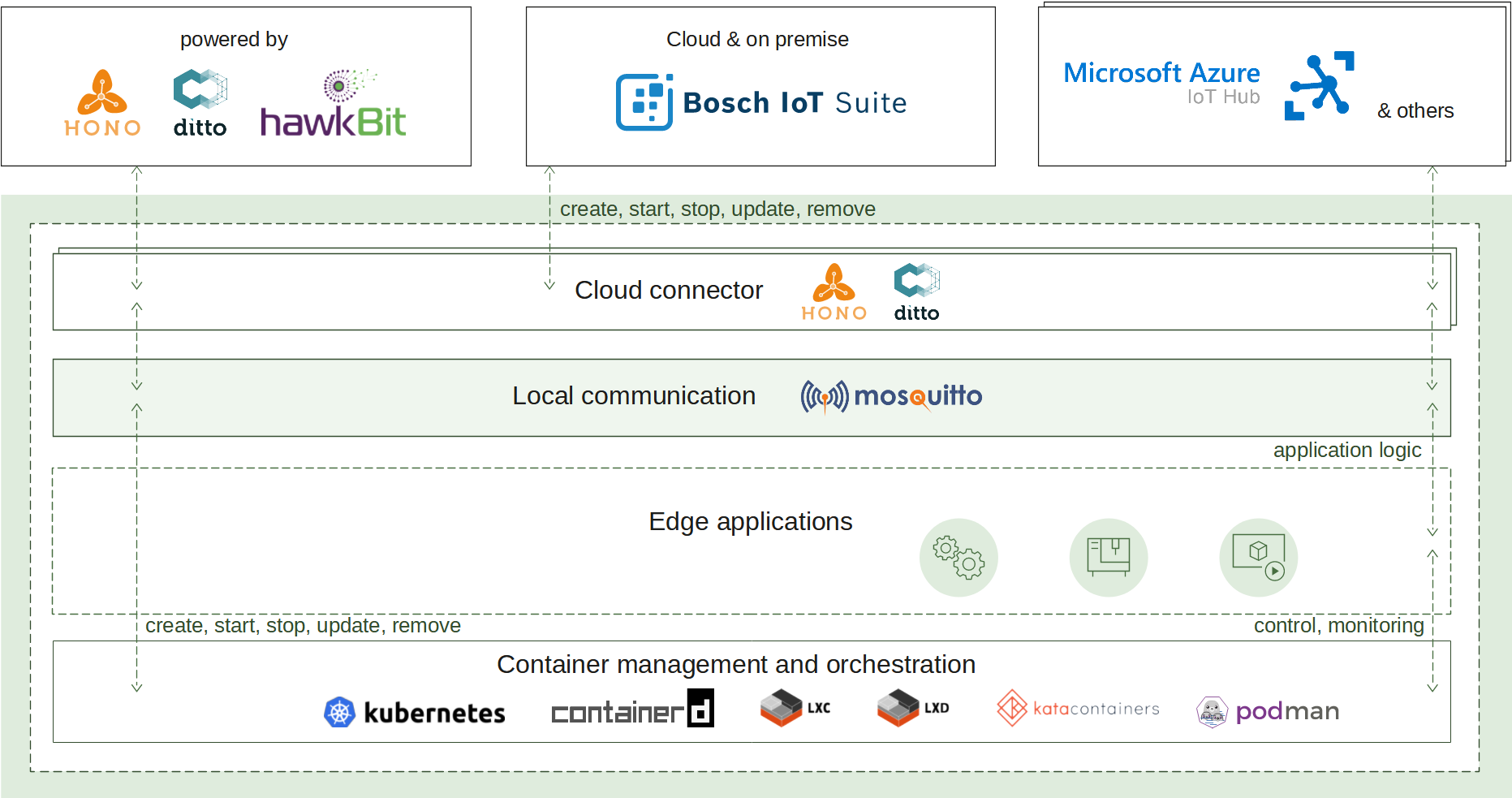

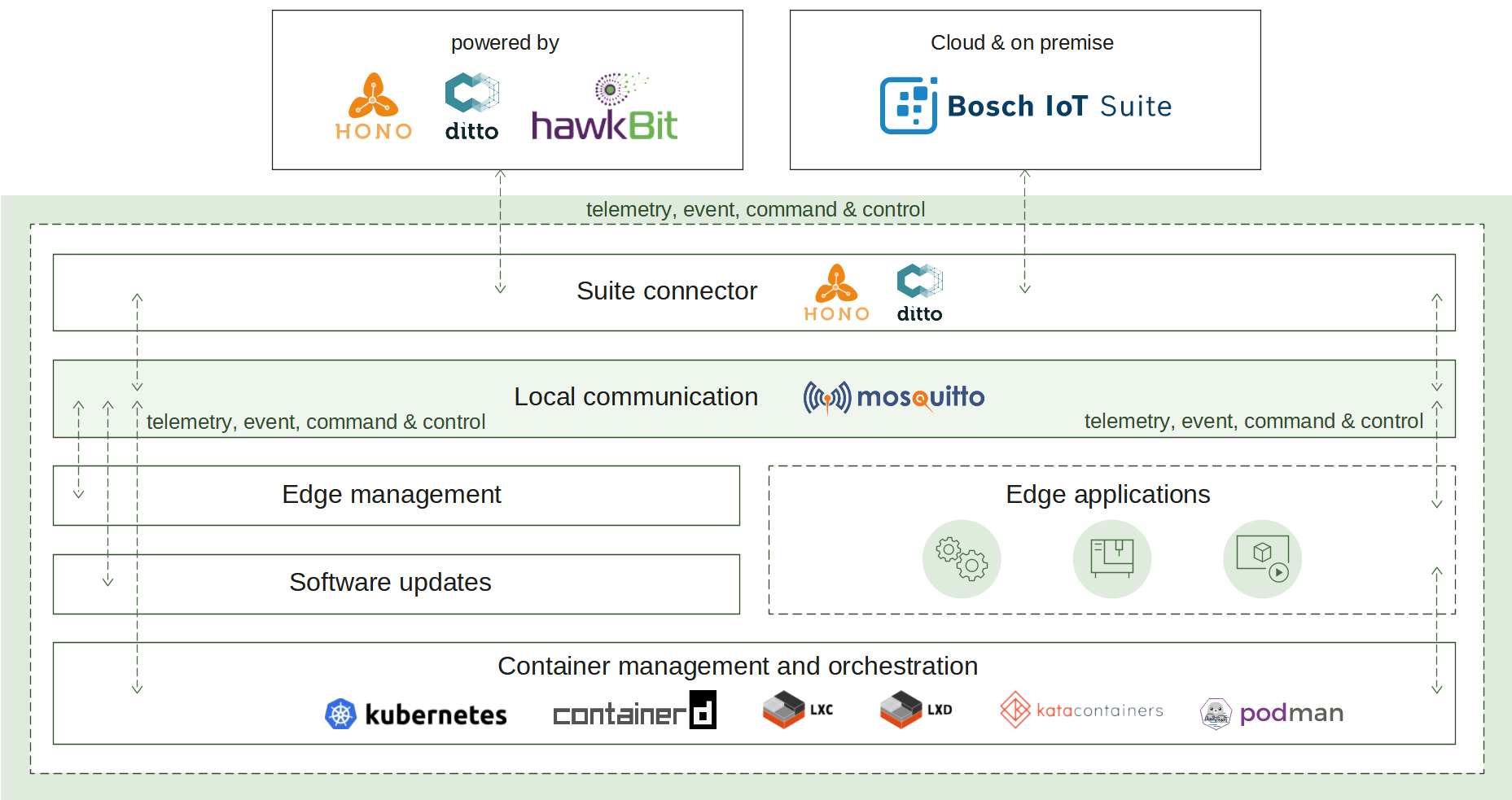

5 - Suite connector

Suite connector enables the remote connectivity to an IoT cloud ecosystem of choice, powered by Eclipse Hono™ (e.g. Eclipse Cloud2Edge and Bosch IoT Suite). It provides the following use cases:

- Enriched remote connection

  - Optimized - to pass the messages via a single underlying connection

  - Secured - to protect the edge identity and data via TLS with basic and certificate-based authentication

  - Maintained - with a reconnect exponential backoff algorithm

  - Synchronized - on connectivity recovery via message buffering

- Application protection - suite connector is the only component with remote connectivity, i.e. all local applications are protected from exposure to the public network

- Offline mode - local applications don’t need to care about the status of the remote connection, they can stay fully operable in offline mode

How it works

The suite connector plays a key role in two communication aspects - local and remote.

Cloud connectivity

To initiate its connection, the edge has to be manually or automatically provisioned. The result of this operation is a set of parameters and identifiers. Currently, suite connector supports the MQTT transport, which is connection-oriented and requires fewer resources than AMQP. Once established, the connection is used as a channel to pass the edge telemetry and event messages. The IoT cloud can control the edge via commands and responses.

In case of a connection interruption, the suite connector switches to offline mode. The message buffer mechanism is activated to ensure that there is no data loss. A reconnect exponential backoff algorithm is started so that no excessive load is generated on the IoT cloud. Local applications are not affected and can continue to operate as normal. Once the remote connection is restored, all buffered messages are sent and the edge is fully restored to online mode.
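
The message buffering described above can be sketched as a forwarder that queues messages while offline and flushes them in order once the connection is back. The class `BufferingForwarder` and its method names are hypothetical, and the in-memory, unbounded queue is a simplification for the example.

```python
from collections import deque
from typing import Callable, Deque


class BufferingForwarder:
    """Forward messages when online, buffer them when offline (hypothetical)."""

    def __init__(self, send: Callable[[str], None]) -> None:
        # `send` stands in for publishing over the remote connection.
        self._send = send
        # Unbounded in-memory buffer; a simplification for this sketch.
        self._buffer: Deque[str] = deque()
        self.online = False

    def publish(self, message: str) -> None:
        if self.online:
            self._send(message)
        else:
            self._buffer.append(message)

    def on_connected(self) -> None:
        # Flush buffered messages in arrival order, then go online.
        while self._buffer:
            self._send(self._buffer.popleft())
        self.online = True

    def on_disconnected(self) -> None:
        self.online = False


if __name__ == "__main__":
    sent = []
    forwarder = BufferingForwarder(sent.append)
    forwarder.publish("telemetry-1")   # buffered, still offline
    forwarder.on_connected()           # flushes the buffer
    forwarder.publish("telemetry-2")   # sent directly
    print(sent)
```

Local applications only call `publish` in this sketch, so they do not need to know whether the remote connection is currently available.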

Local communication

To keep local applications loosely coupled, Eclipse Hono™ MQTT definitions are used. The event-driven local message exchange is done via an MQTT message broker, Eclipse Mosquitto™. The suite connector takes the responsibility to forward these messages to the IoT cloud and vice versa.

The provisioning information used to establish the remote communication is available locally, both on request via a predefined message and on update via an announcement. Applications that would like to extend the edge functionality can further use it in Eclipse Hono™ and Eclipse Ditto™ definitions.

Monitoring of the remote connection status is also available locally, along with details like the last known state of the connection, a timestamp and a predefined connect/disconnect reason.

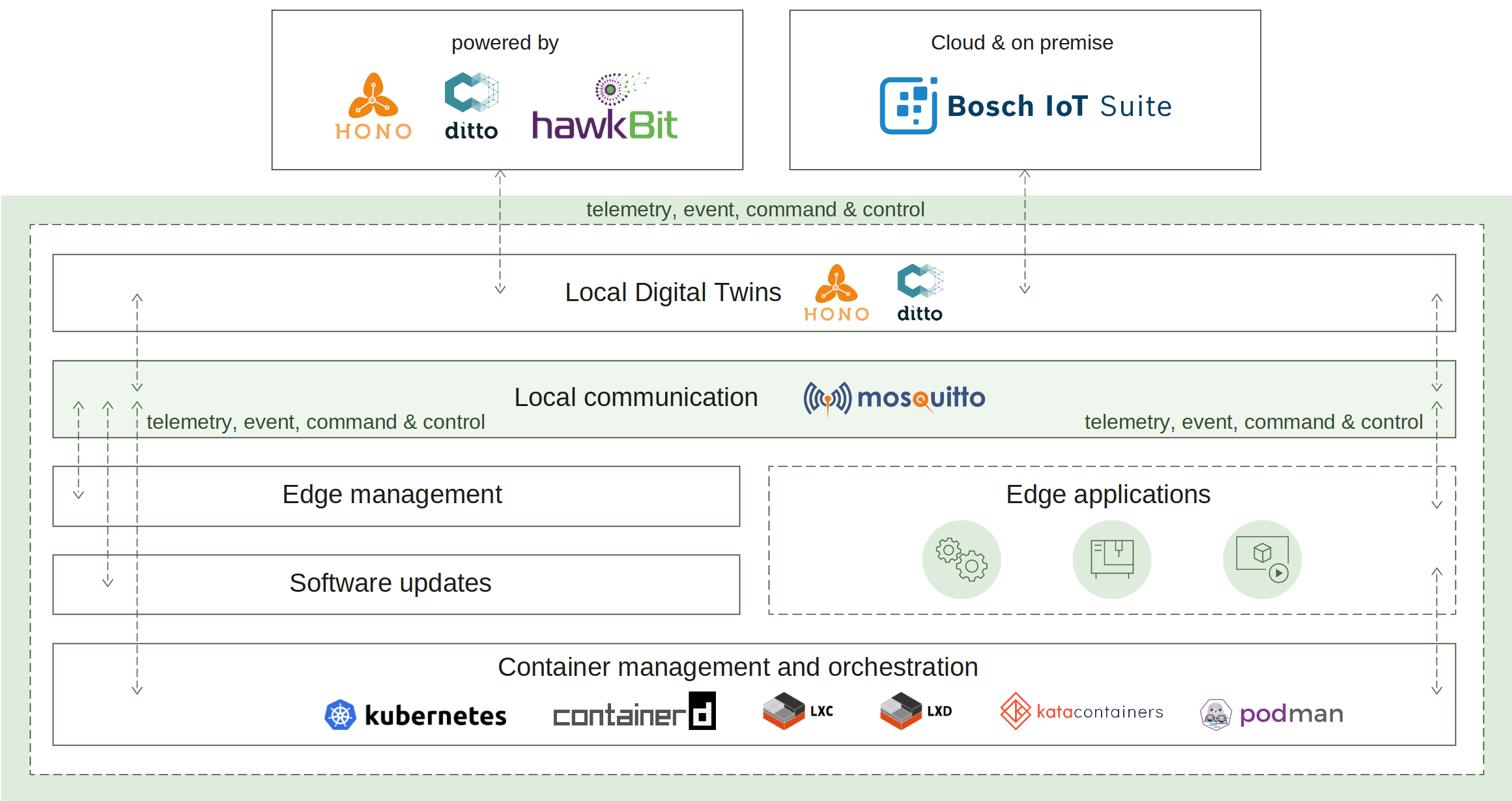

6 - Local digital twins

Local digital twins enables the digital twin state on a local level even in offline scenarios. It provides the following use cases:

- Mirrors the applications

- Persistency - digital twins are stored locally

- Cloud connectivity - provides connectivity to the cloud (similar to Suite connector)

- Offline scenarios - local applications stay fully operable as if the connection with the cloud is not interrupted

- Synchronization - when connection to the cloud is established the last known digital twin state is synchronized

How it works

Similar to the Suite connector service, the local digital twins service needs to establish a connection to the cloud. To do this, the edge has to be manually or automatically provisioned. This connection is then used as a channel to pass the edge telemetry and event messages. Once a connection is established, the device can be operated via commands.

To ensure that the digital twin state is available on a local level even in offline mode (regardless of the connection state), the local digital twins service persists all changes locally. These capabilities support offline scenarios and advanced edge computing involving synchronization with the cloud after disruptions or outages. The synchronization mechanisms are also designed to significantly reduce data traffic and to prevent data loss due to long-lasting disruptions.

Upon reconnection, the local digital twins will notify the cloud of any changes during the offline mode and synchronize the digital twins state.

The synchronization works in both directions:

- Cloud -> Local digital twins - desired properties are updated if such changes are requested from the cloud.

- Local digital twins -> Cloud - local digital twins state is sent to the cloud (e.g. all current features, their reported property values, and any features removed while there was no connection).
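
The two synchronization directions listed above can be sketched with plain dictionaries. The data layout (features holding `desired` and `reported` property maps) and the function names are assumptions for illustration, not the service's actual data model. Creating a feature that does not yet exist locally is also an assumption of this sketch.

```python
from typing import Any, Dict, Set

Features = Dict[str, Dict[str, Dict[str, Any]]]


def apply_from_cloud(local: Features,
                     desired_updates: Dict[str, Dict[str, Any]]) -> None:
    """Cloud -> local: update only the desired properties of each feature."""
    for name, desired in desired_updates.items():
        feature = local.setdefault(name, {})
        feature.setdefault("desired", {}).update(desired)


def sync_to_cloud(local: Features, removed: Set[str]) -> Dict[str, Any]:
    """Local -> cloud: report current features and those removed offline."""
    return {
        "features": {
            name: {"reported": feature.get("reported", {})}
            for name, feature in local.items()
        },
        "removed": sorted(removed),
    }


if __name__ == "__main__":
    twins: Features = {"thermostat": {"reported": {"temperature": 21}}}
    apply_from_cloud(twins, {"thermostat": {"target": 19}})
    print(sync_to_cloud(twins, removed={"old-sensor"}))
```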

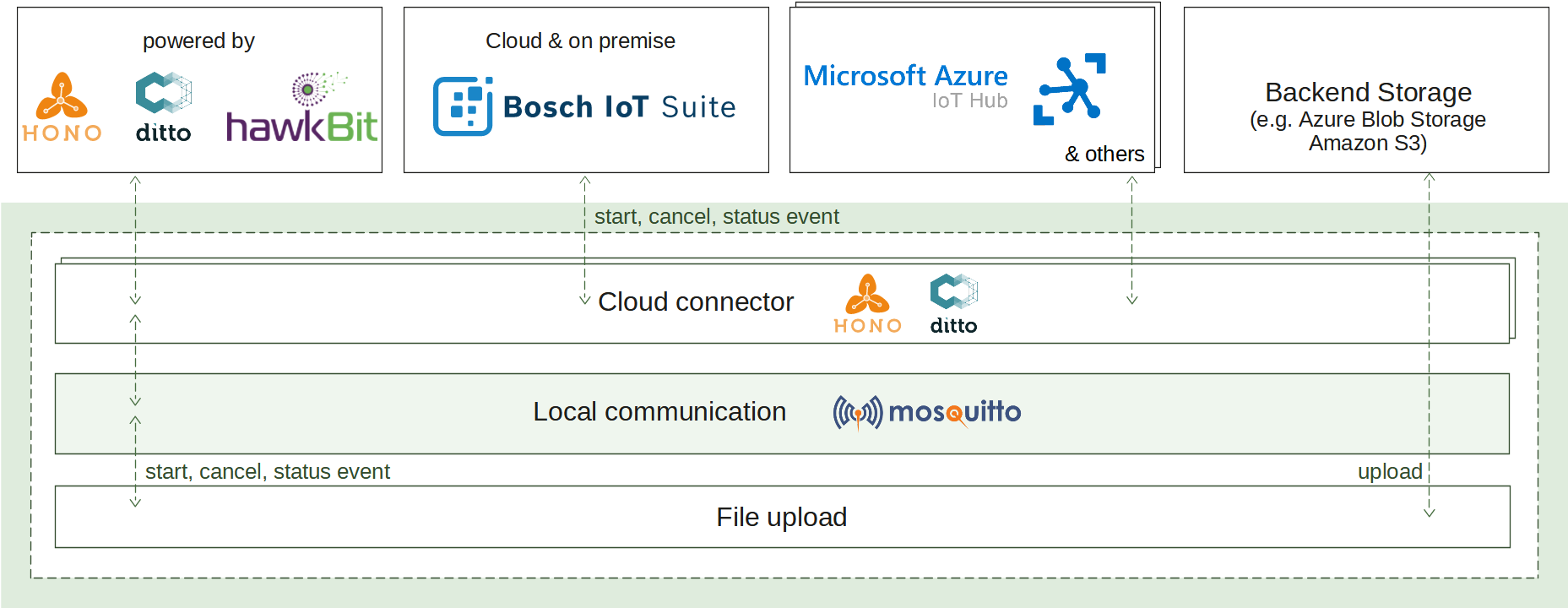

7 - File upload

File upload enables sending of files to a backend storage of choice. It can be used both locally and remotely via a desired IoT cloud ecosystem. The following use cases are provided:

- Storage diversity - with ready to use integrations with Azure Blob Storage, Amazon S3 and standard HTTP upload

- Automatic uploads - with periodically triggered uploads at a specified interval in a given time frame

- Data integrity - with an option to calculate and send the integrity check required information

- Operation monitoring - with a status reporting of the upload operation

How it works

It’s not always possible to inline all the data into exchanged messages. For example, large log files or large diagnostic files cannot be sent as a telemetry message. In such scenarios, file upload can help by enabling large amounts of data to be stored in the backend storage.

The upload operation can be initiated by different triggers: periodic or explicit. Once initiated, the request is sent to the IoT cloud, and a confirmation or cancellation is transferred back to the edge. If the start is confirmed, the files to upload are selected according to the specified configuration, their integrity check information can be calculated, and the transfer of the binary content begins. A status report is announced on each step of the upload process, enabling its transparent monitoring.
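
The flow above can be sketched as a single function that asks for confirmation, selects files, computes their integrity information and reports a status on each step. The function name `run_upload`, the status strings, the glob-based file selection and the use of SHA-256 are all assumptions made for this example.

```python
import hashlib
from pathlib import Path
from typing import Callable


def run_upload(directory: Path, pattern: str,
               confirm: Callable[[], bool],
               upload: Callable[[Path, str], None],
               report: Callable[[str], None]) -> None:
    """Run one upload operation; all status names are hypothetical."""
    report("PENDING")
    # The IoT cloud either confirms or cancels the requested upload.
    if not confirm():
        report("CANCELED")
        return
    # Select the files according to the configured glob pattern.
    files = sorted(p for p in directory.glob(pattern) if p.is_file())
    report("UPLOADING")
    for path in files:
        # Integrity information sent along with the binary content.
        checksum = hashlib.sha256(path.read_bytes()).hexdigest()
        upload(path, checksum)
    report("SUCCESS")
```

The `confirm`, `upload` and `report` callables stand in for the cloud round trip, the storage provider and the status channel, so the sketch can be exercised without any network access.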