Understanding Aidge’s architecture

Modular by design, flexible by nature

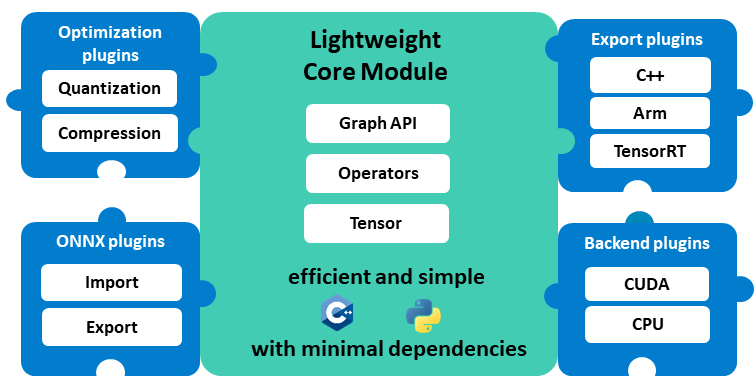

Aidge is built on a modular architecture. This modularity is more than a technical feature: it is what makes Aidge customizable, extensible, and adaptable to the diverse and evolving demands of AI deployment.

Core principles driving our design:

Plugin-driven: a lightweight core module is designed to be extended with easily integrated plug-ins.

Dependency minimization: keeping external dependencies to a minimum simplifies integration and management, and avoids conflicts within your existing workflows.

By breaking down the framework into distinct, interchangeable components, Aidge offers key advantages:

Pick and choose: integrate only the functionalities you need.

Customize freely: replace existing functionalities with your own implementations.

Expand easily: add new capabilities without touching the core system.

Code engagement: quickly understand, explore, and contribute to relevant parts of the codebase.

Discover how easy it is to add an operator to the C++ export by following our tutorial.

Module ecosystem overview

| Category | Modules | Description |
|---|---|---|
| Core foundation | | The essential building blocks for manipulating AI models and data, indispensable for all other modules. |
| Execution backends | | Enable Aidge to run on specific hardware devices like CPUs and NVIDIA GPUs, providing concrete implementations. |
| Export modules | | Generate deployable code for various target environments, seamlessly integrating AI models into diverse systems. |
| Optimization suite | | Offer advanced techniques to optimize AI models, reducing size, improving speed, and minimizing resource consumption. |
| Interoperability layer | | Facilitate smooth interaction between Aidge and other popular AI frameworks (ONNX, PyTorch) for broad compatibility. |
| Development tools | | Visualize and explore AI model architectures and data flow within Aidge for easier understanding and debugging. |