Aidge workflow

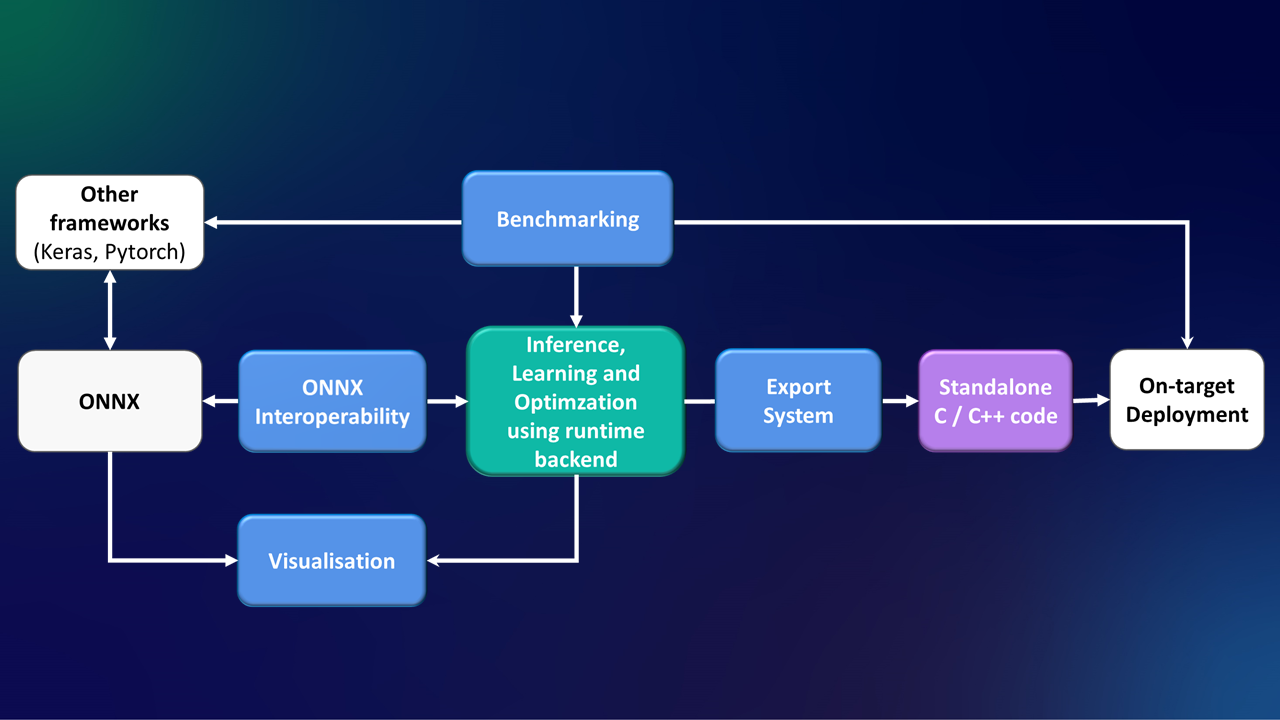

Aidge provides a workflow that streamlines the development and deployment of AI models for edge devices. This page gives an overview of a typical workflow and the key functionalities Aidge offers.

Aidge guides you through preparing, optimizing, and deploying your AI models in the following steps:

Define a model

Start by loading an AI model (optionally pre-trained) from an ONNX file, or define your model with Aidge’s API. Either way, the model remains accessible at any time through Aidge’s graph intermediate representation.
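To make the idea of a graph intermediate representation concrete, here is a minimal pure-Python sketch. It does not use Aidge’s API: the `Node` and `Graph` classes, the `build_example_graph` helper, and the operator names are all hypothetical and only illustrate how a model can be held as a graph of operators that stays available for later steps.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One operator in the graph (hypothetical structure, not Aidge's class)."""
    name: str
    op_type: str
    inputs: list = field(default_factory=list)  # names of producer nodes


@dataclass
class Graph:
    """A tiny directed acyclic graph of operators."""
    nodes: dict = field(default_factory=dict)

    def add(self, name, op_type, inputs=()):
        if name in self.nodes:
            raise ValueError(f"duplicate node name: {name}")
        # Every input must already exist, which keeps the graph acyclic.
        for src in inputs:
            if src not in self.nodes:
                raise KeyError(f"unknown input node: {src}")
        self.nodes[name] = Node(name, op_type, list(inputs))
        return self

    def topological_order(self):
        # Insertion order is topological because add() rejects forward references.
        return list(self.nodes)


def build_example_graph():
    """Build a small illustrative model: Input -> Conv -> ReLU -> FC."""
    g = Graph()
    g.add("input", "Input")
    g.add("conv1", "Conv", ["input"])
    g.add("relu1", "ReLU", ["conv1"])
    g.add("fc1", "FC", ["relu1"])
    return g
```

Whether the graph comes from a file or is built programmatically, later steps (analysis, optimization, export) can work on the same structure.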

Visualize and analyze the model

Use Aidge’s tools to visualize and analyze your model’s architecture, so that you can understand its structure and identify areas for improvement.
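As a rough illustration of what such an analysis can report, the sketch below summarizes a model and renders it as a text diagram. It is not Aidge’s tooling: the `LAYERS` table, its parameter counts, and the `summarize` and `to_mermaid` helpers are assumptions made for this example, and Mermaid is used here only as a convenient text format for graphs.

```python
from collections import Counter

# Hypothetical model description: (node name, operator type, parameter count).
LAYERS = [
    ("conv1", "Conv", 448),
    ("relu1", "ReLU", 0),
    ("conv2", "Conv", 4640),
    ("relu2", "ReLU", 0),
    ("fc1", "FC", 5130),
]


def summarize(layers):
    """Return the number of operators per type and the total parameter count."""
    op_counts = Counter(op for _, op, _ in layers)
    total_params = sum(n for _, _, n in layers)
    return op_counts, total_params


def to_mermaid(layers):
    """Render a sequential chain of layers as Mermaid flowchart text."""
    lines = ["flowchart TB"]
    for (src, _, _), (dst, _, _) in zip(layers, layers[1:]):
        lines.append(f"    {src} --> {dst}")
    return "\n".join(lines)


if __name__ == "__main__":
    counts, total = summarize(LAYERS)
    print(dict(counts), total)
    print(to_mermaid(LAYERS))
```

A summary like this makes it easy to see which layers hold most of the parameters and are therefore the first candidates for optimization.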

Optimize the model (optional)

Train your model or apply optimization techniques such as quantization, pruning, and simplification to reduce model size, improve energy efficiency, and meet the specific constraints of your embedded hardware.
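To show why quantization reduces model size, here is a minimal sketch of symmetric per-tensor quantization to 8-bit integers. It is a generic textbook scheme, not necessarily the method Aidge implements, and the `quantize_int8` and `dequantize` function names are hypothetical. Storing a weight as an 8-bit integer instead of a 32-bit float takes a quarter of the memory, at the cost of a bounded rounding error.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    # Each restored value differs from the original by at most scale / 2.
    print(q, scale, restored)
```

The rounding error per weight is at most half the scale, so tensors with a few large outliers lose more precision. That is one reason practical schemes often use per-channel scales or calibration data.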

Save the model

Save your optimized model to an ONNX file at any point in the workflow.

Export the model

Generate the source code of your optimized model tailored for your embedded hardware platform, making it ready for deployment.
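The sketch below illustrates the general idea of source-code generation by emitting a C array declaration from a list of quantized weights. It is not Aidge’s export module: the `emit_c_array` helper, the array name, and the output layout are assumptions chosen for illustration only.

```python
def emit_c_array(name, values, ctype="int8_t"):
    """Return C source text that declares `values` as a constant array."""
    body = ", ".join(str(int(v)) for v in values)
    return (
        "#include <stdint.h>\n\n"
        f"static const {ctype} {name}[{len(values)}] = {{ {body} }};\n"
    )


if __name__ == "__main__":
    # Hypothetical quantized weights for a layer named fc1.
    print(emit_c_array("fc1_weights", [64, -127, 32, 0]))
```

Generated code of this kind can be compiled directly into firmware, so the target device needs no model parser or runtime interpreter.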

Benchmark the model

Evaluate the optimized model’s runtime performance to ensure it meets your performance targets on the target hardware.
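A latency measurement of this kind can be sketched in a few lines of Python. The `benchmark` helper and the `dummy_inference` workload below are hypothetical stand-ins rather than Aidge’s benchmarking tools. On a real target, the measured call would be the deployed model’s inference function.

```python
import statistics
import time


def benchmark(fn, warmup=3, runs=20):
    """Time repeated calls to fn and return latency statistics in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up calls are executed but not measured
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1e3)
    return {
        "runs": runs,
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }


def dummy_inference():
    # Stand-in workload; replace with a call into the deployed model.
    return sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(benchmark(dummy_inference))
```

Reporting the median and maximum alongside the mean helps reveal occasional slow runs, which matter when the target has a hard latency budget.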

Each of these functionalities is encapsulated within Aidge’s modular structure.