Export Code Structure

This guide provides an overview of the code structure of Aidge export modules.

Introduction

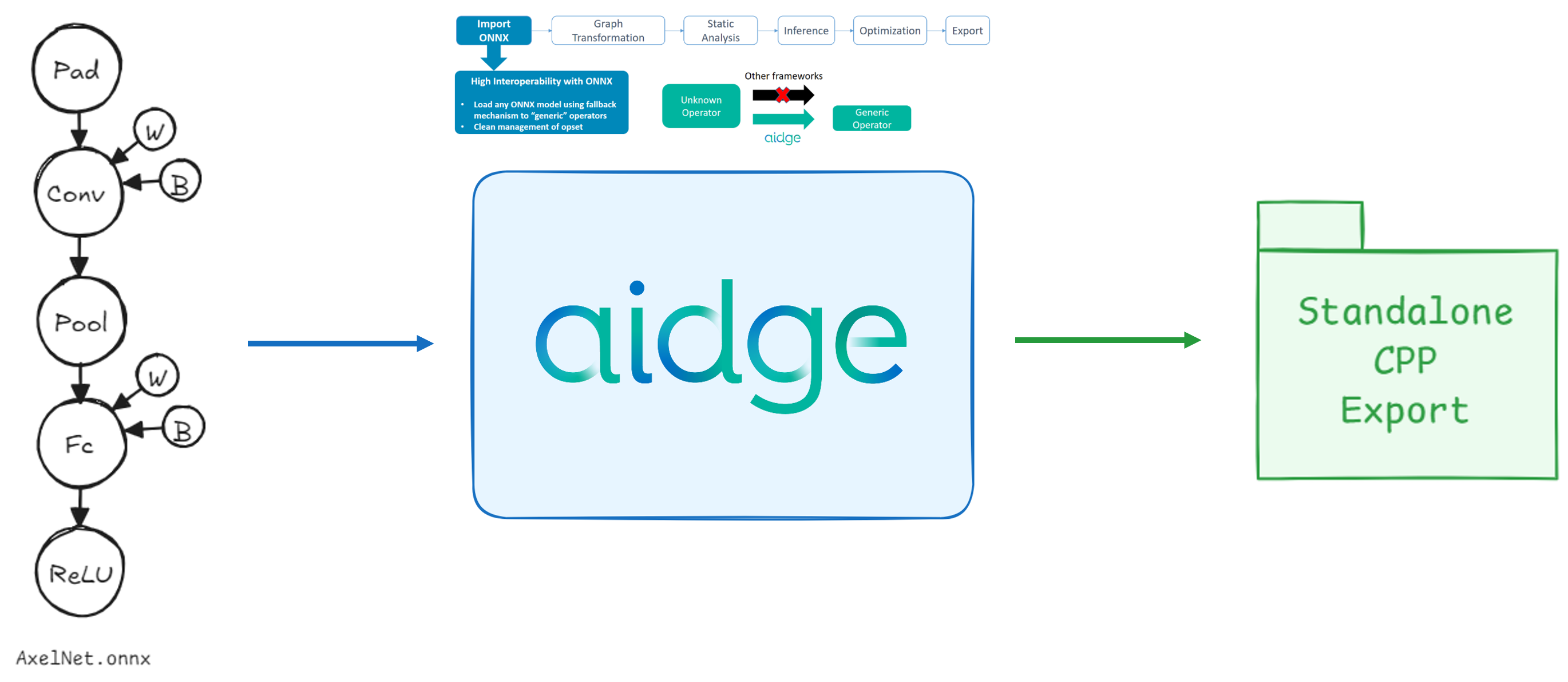

In Aidge, an export module refers to any module that generates a standalone program capable of running a neural network on specific hardware targets.

Several export modules are already available in the framework, such as:

Throughout this guide, we will refer primarily to the C++ Export, since all export modules share a similar structure. We will walk through the complete export process using a small model as an example, explaining each step and mechanism involved.

Graph Preparation

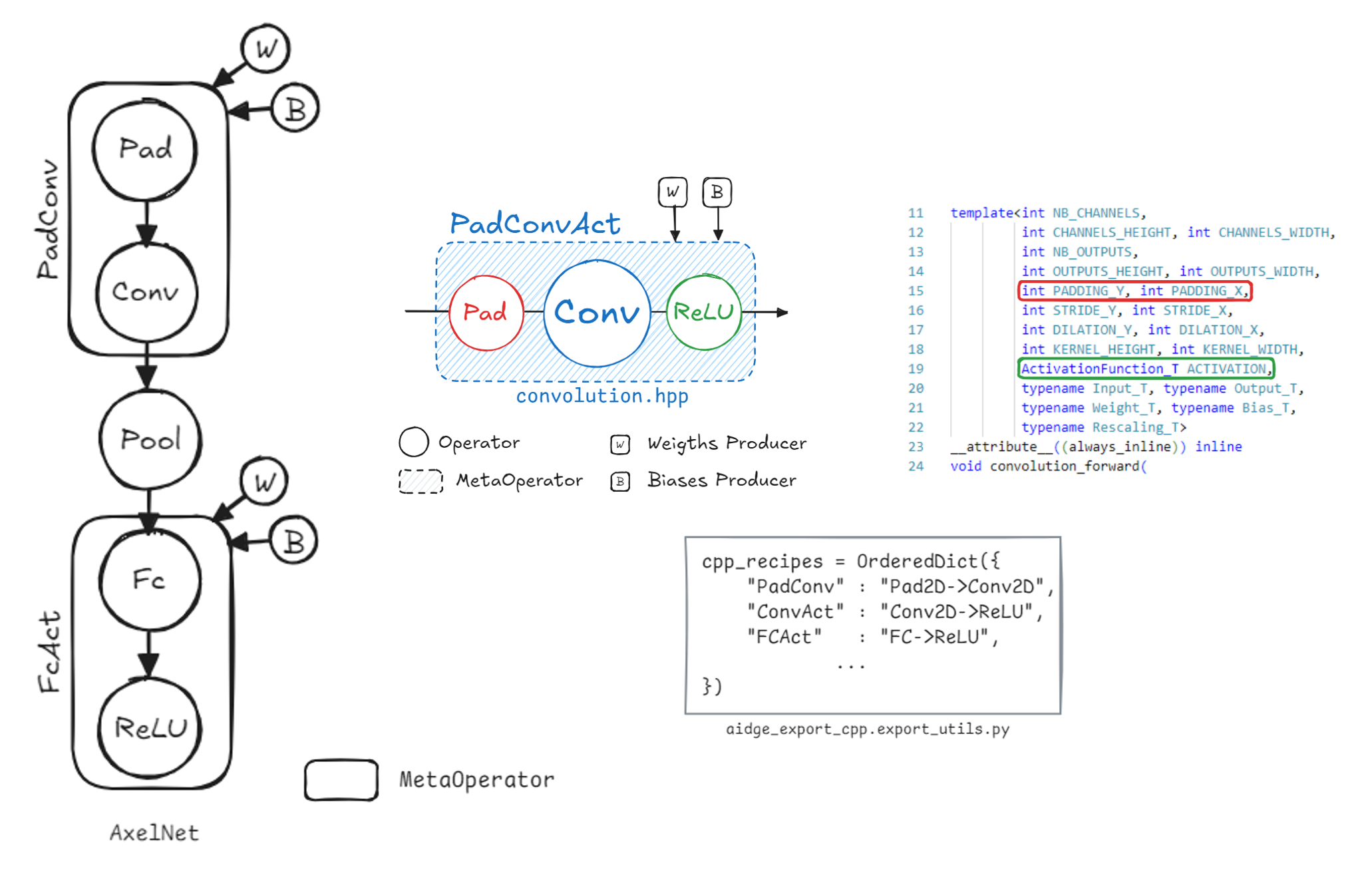

Before generating the standalone export, the Aidge graph undergoes several transformations to fit the targeted export backend.

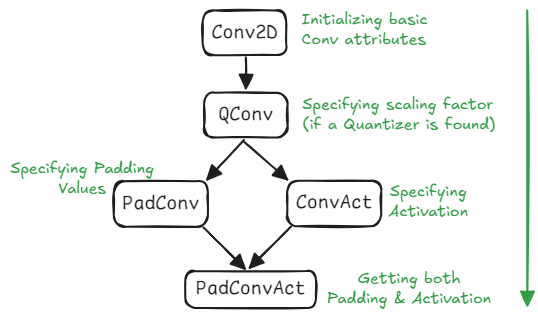

One key step in this process is fusing operators into MetaOperators: groups of operators that match specific kernel implementations.

For example, as shown above, the convolution kernel supports both padding and activation (e.g., ReLU), so these operations are fused together into a single convolution operation.
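The fusion idea can be illustrated with a toy pass that represents a linear chain of operators as a string and folds every optional Pad, Conv, optional ReLU run into one fused name using a regular expression. This is a minimal sketch under simplifying assumptions, not Aidge's recipe API: the function fuse_conv_chain, the pattern, and the PadConv/ConvAct/PadConvAct naming are hypothetical, and real recipes match on the graph structure rather than on a joined string.

```python
import re

# Toy fusion pass (hypothetical): the graph is modeled as a linear chain of
# operator-type strings joined with "->". Aidge's own recipes work on the
# real graph and use their own pattern syntax.
CONV_PATTERN = re.compile(r"\b(?:Pad->)?Conv(?:->ReLU)?\b")


def fuse_conv_chain(ops):
    """Replace each [Pad->]Conv[->ReLU] run with a single fused operator name."""
    chain = "->".join(ops)

    def to_meta(match):
        parts = match.group(0).split("->")
        name = "Conv"
        if parts[0] == "Pad":
            name = "Pad" + name   # padding absorbed into the convolution
        if parts[-1] == "ReLU":
            name = name + "Act"   # activation absorbed into the convolution
        return name

    return CONV_PATTERN.sub(to_meta, chain).split("->")


print(fuse_conv_chain(["Pad", "Conv", "ReLU", "MaxPool"]))
```

For example, fuse_conv_chain(["Pad", "Conv", "ReLU", "MaxPool"]) returns ["PadConvAct", "MaxPool"]: three nodes become one operator that a single kernel can execute.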

This transformation is achieved by applying a set of regular expression-based recipes to the graph. Each export module provides its own recipe set. For more details, refer to:

Additional transformations, such as setting data formats or data types, are applied within the export() function defined in export.py.

Most helper functions used in this process are implemented in export_utils.py.

For a detailed walkthrough, see the “Quantized LeNet C++ Export” tutorial.

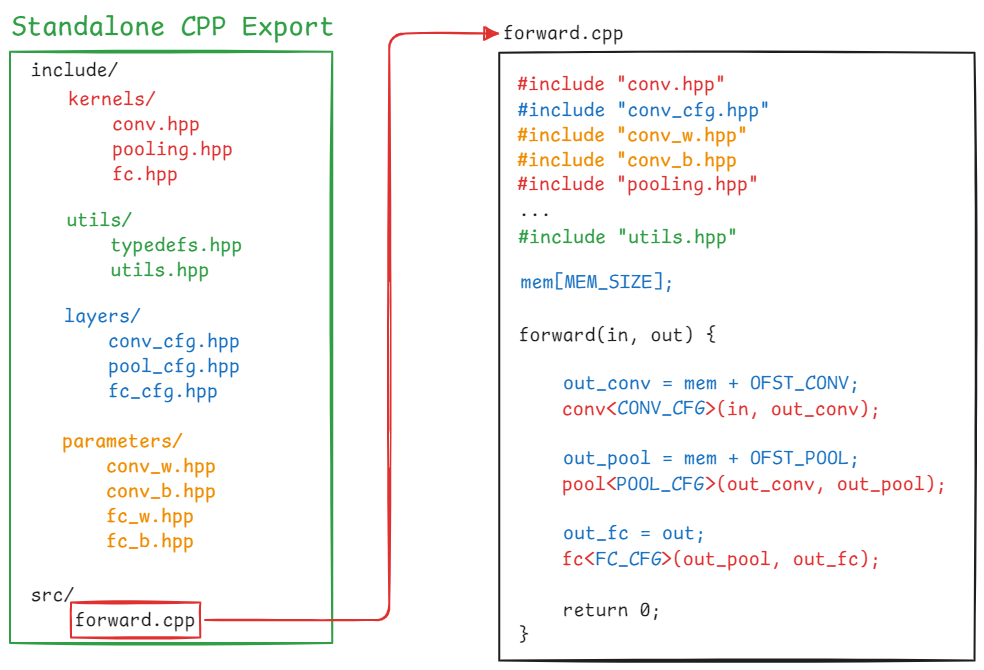

Standalone Export Structure

The Standalone Export refers to the generated code that runs the exported model independently.

Before exploring the internal structure of the export module itself, let’s first review the structure of the generated export:

In the generated export:

forward.cpp contains the function responsible for running inference.

The generated folders include:

kernels/ (red): Implementation code for each supported kernel.

utils/ (green): Utility source files.

layers/ (blue): Configuration files for each model layer. These define parameters such as kernel size, dilation, and memory offsets for outputs.

parameters/ (yellow): Serialized model parameters, such as weights and biases.
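To illustrate how forward.cpp ties these folders together, the sketch below assembles a forward function from an ordered list of layers, chaining each layer's output buffer into the next kernel call. Everything in it is hypothetical: render_forward, the model_forward signature, the kernel names, and the assumption that each `<layer>_output` buffer is declared in that layer's configuration header. The generated export instead relies on the memory offsets defined in layers/.

```python
# Hypothetical sketch: build the text of forward.cpp from per-layer kernel calls.
# Assumes each intermediate "<name>_output" buffer is declared in the layer's
# configuration header, which is why those headers are included.

def render_forward(layers, includes):
    lines = [f'#include "{inc}"' for inc in includes]
    lines.append("")
    lines.append("void model_forward(const float* input, float* output) {")
    prev = "input"
    for i, layer in enumerate(layers):
        # The last layer writes straight into the caller's output buffer.
        out = "output" if i == len(layers) - 1 else f"{layer['name']}_output"
        lines.append(f"    {layer['kernel']}({prev}, {out});  // {layer['name']}")
        prev = out
    lines.append("}")
    return "\n".join(lines) + "\n"


print(render_forward(
    [{"name": "conv1", "kernel": "convolution_forward"},
     {"name": "fc1", "kernel": "fullyconnected_forward"}],
    ["layers/conv1.h", "layers/fc1.h"],
))
```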

(Note: newer exports may support fallback mechanisms that allow partial reuse of existing export kernels.)

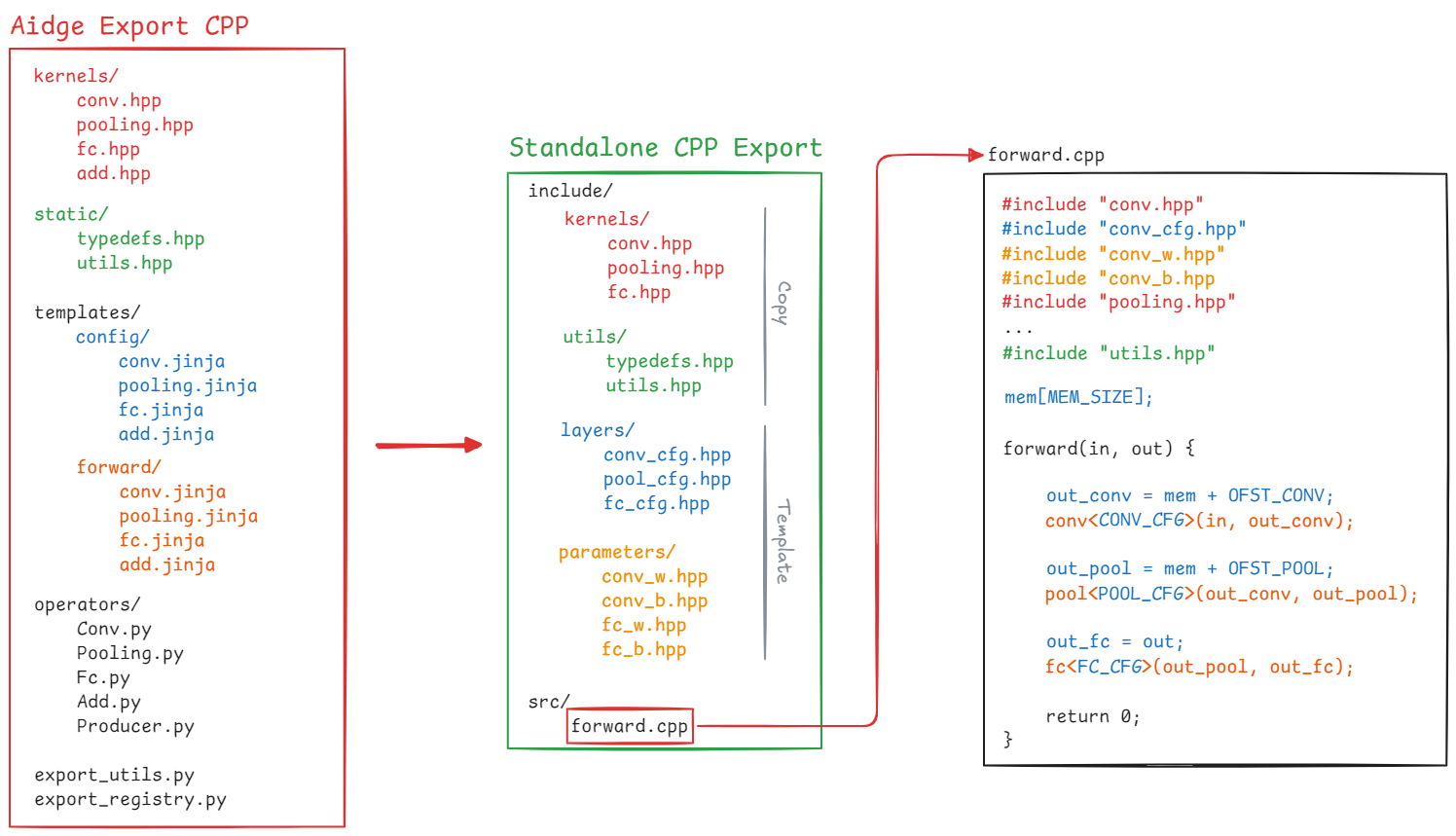

Export Module Structure

The Export Module is the Aidge component that generates the Standalone Export.

Copied and Generated Files

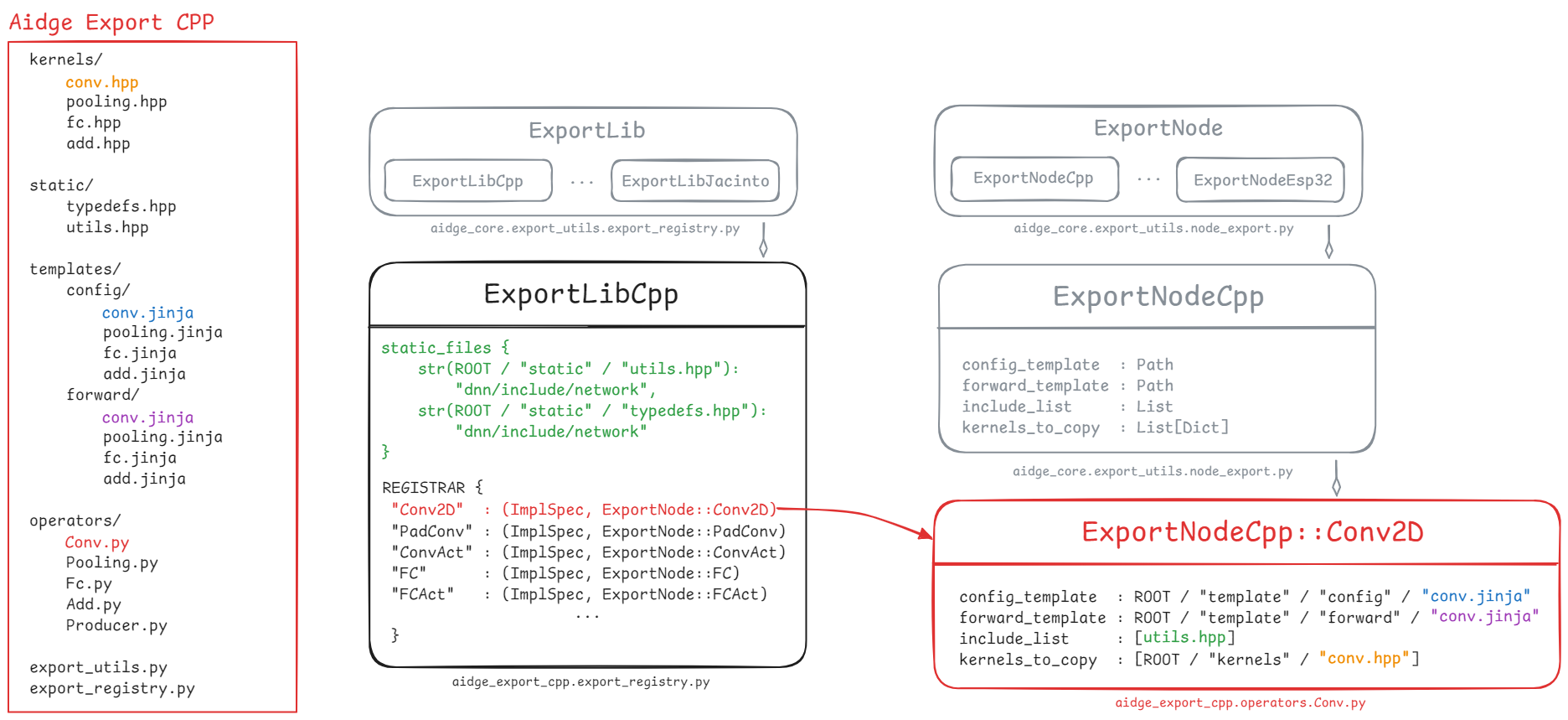

In the diagram above, the export module structure includes two main categories of files:

Copied files: files in the kernels and static folders are copied directly into the generated export.

Generated files: configuration and parameter files are generated dynamically based on the model’s layers.
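The split between copied and generated files can be sketched in a few lines: static folders are copied verbatim, while one configuration header per layer is written from already-rendered text. The function build_export, the header naming (layers/<name>.h), and copying each folder under its own name are assumptions made for this example; the real export module may place static files elsewhere.

```python
import shutil
from pathlib import Path


def build_export(lib_root, export_dir, layer_configs):
    """Copy static module files and write generated layer configs (hypothetical layout).

    lib_root is assumed to contain 'kernels/' and 'static/' folders;
    layer_configs maps a layer name to its already-rendered header text.
    """
    lib_root, export_dir = Path(lib_root), Path(export_dir)

    # Copied files: taken verbatim from the export module.
    for folder in ("kernels", "static"):
        src = lib_root / folder
        if src.is_dir():
            shutil.copytree(src, export_dir / folder, dirs_exist_ok=True)

    # Generated files: one configuration header per layer.
    layers_dir = export_dir / "layers"
    layers_dir.mkdir(parents=True, exist_ok=True)
    for name, text in layer_configs.items():
        (layers_dir / f"{name}.h").write_text(text)
    return export_dir
```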

Each kernel type has two template files:

A configuration template (in layers/)

A forward template (used for function calls in forward.cpp)

Parameters are generated using a third template, parameters.jinja.
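The sketch below shows, in plain Python rather than Jinja, the kind of output a parameters template produces: a flat weight tensor serialized as a C array. The render_parameter helper, the `static const float` storage, and the naming are hypothetical; the actual parameters.jinja template and the types it emits may differ, for instance for quantized models.

```python
# Hypothetical stand-in for a parameters template: emit a flat list of
# weights as a C array initializer, a few values per line.

def render_parameter(name, values, per_line=8):
    body_lines = []
    for start in range(0, len(values), per_line):
        chunk = values[start:start + per_line]
        body_lines.append("    " + ", ".join(repr(float(v)) for v in chunk) + ",")
    return (
        f"static const float {name}[{len(values)}] = {{\n"
        + "\n".join(body_lines)
        + "\n};\n"
    )


print(render_parameter("conv1_weights", [0.5, -1, 2]))
```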

Operators

The operators/ folder is a crucial part of the export module. It contains Python scripts that connect Aidge’s intermediate representation (IR) to the actual implementation used by the export.

The main classes defining export behavior are:

Each export module defines its own ExportLib (in export_registry.py), which maintains a registry mapping Aidge operators to their:

ImplSpec (implementation specification)

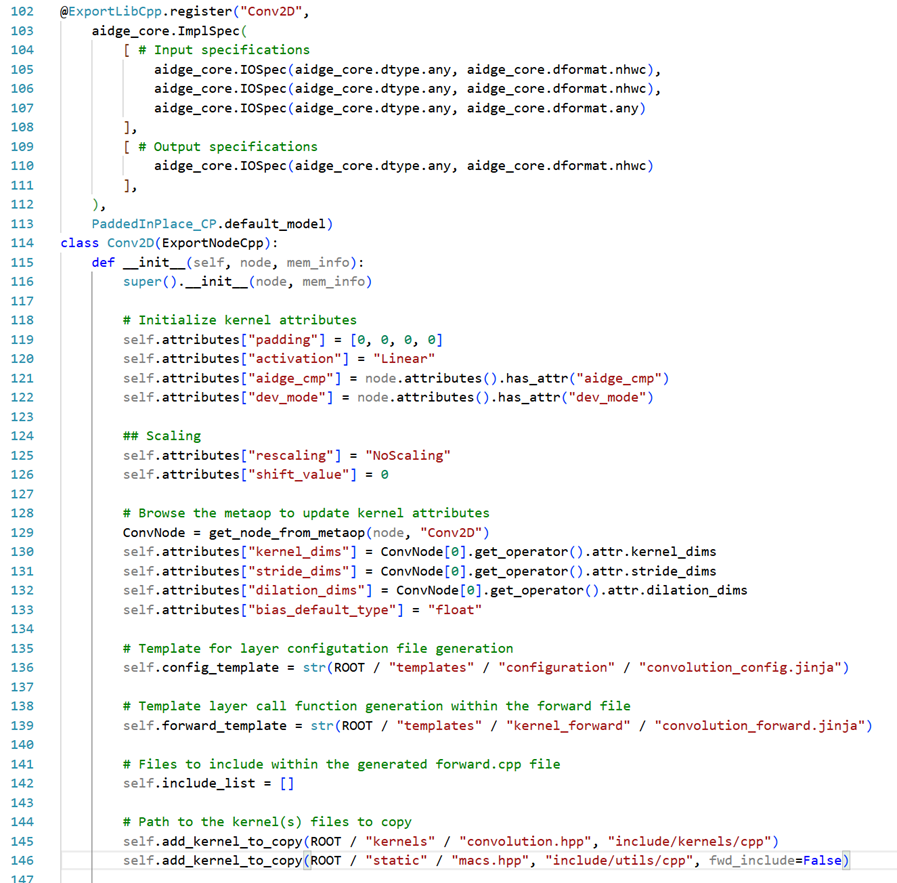

ImplSpec defines the constraints and supported configurations of each kernel; for example, the C++ convolution kernel supports only the NHWC data format for inputs and weights.
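A registry of this kind can be sketched with a decorator that records an export-node class per operator type and implementation specification, plus a lookup that returns the class whose specification matches what the graph requires. ToyExportLib, ToyImplSpec, and the exact-match rule are simplifications invented for this example; Aidge's ExportLib and ImplSpec classes have their own, richer interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyImplSpec:
    """Hypothetical, simplified implementation spec: data formats only."""
    input_format: str
    weight_format: str
    output_format: str


class ToyExportLib:
    """Hypothetical registry: operator type -> list of (spec, export node class)."""

    def __init__(self):
        self._registry = {}

    def register(self, op_type, spec):
        """Decorator that records an export-node class for (op_type, spec)."""
        def wrap(cls):
            self._registry.setdefault(op_type, []).append((spec, cls))
            return cls
        return wrap

    def lookup(self, op_type, required):
        """Return the first class whose spec equals the required spec, else None."""
        for spec, cls in self._registry.get(op_type, []):
            if spec == required:
                return cls
        return None


CppLib = ToyExportLib()
NHWC = ToyImplSpec("nhwc", "nhwc", "nhwc")


@CppLib.register("Conv2D", NHWC)
class ConvNode:
    pass
```

In this toy model, a None result from lookup means the node cannot be exported as-is with the registered kernels.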

ExportNode holds all information required to generate files for a specific operator, including:

config_template: path to the layer configuration template

forward_template: path to the template of the kernel call in forward.cpp

include_list: includes required by forward.cpp

kernels_to_copy: list of kernel implementation files to include (one or more)
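These four attributes can be pictured as fields of a small data class. ToyExportNode, its generated_files helper, and every path below are hypothetical; in the real module these attributes are set in the operator's ExportNode subclass under operators/.

```python
from dataclasses import dataclass, field


@dataclass
class ToyExportNode:
    """Hypothetical, simplified stand-in for an export node."""
    name: str
    config_template: str       # template rendered into layers/
    forward_template: str      # template rendered into forward.cpp
    include_list: list = field(default_factory=list)
    kernels_to_copy: list = field(default_factory=list)

    def generated_files(self):
        """Files this node contributes to the standalone export."""
        return [f"layers/{self.name}.h"] + list(self.kernels_to_copy)


conv1 = ToyExportNode(
    name="conv1",
    config_template="templates/configuration/convolution_config.jinja",
    forward_template="templates/kernel_forward/convolution_forward.jinja",
    include_list=["kernels/convolution.hpp"],
    kernels_to_copy=["kernels/convolution.hpp"],
)
print(conv1.generated_files())
```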

Each operator’s ExportNode is defined in the corresponding file under operators/ (e.g., operators/Conv.py).

In the example above, the Conv2D operator’s ImplSpec specifies that only NHWC input, weight, and output formats are supported.

The attributes dictionary defines the variables available to Jinja templates when generating configuration and forward files.
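To show how one attributes dictionary feeds both templates, the sketch below renders a configuration header and a forward call from the same dictionary. It uses Python's string.Template as a stand-in for Jinja so that it runs with the standard library only; the macro names, the convolution_forward call, and the attribute keys are hypothetical.

```python
from string import Template

# string.Template stands in for Jinja; the template text is illustrative only.
CONFIG_TEMPLATE = Template(
    "#define ${NAME}_KERNEL_HEIGHT ${kernel_h}\n"
    "#define ${NAME}_KERNEL_WIDTH ${kernel_w}\n"
    "#define ${NAME}_NB_OUTPUTS ${out_channels}\n"
)
FORWARD_TEMPLATE = Template("convolution_forward(${input}, ${output}, ${name}_weights);\n")

# Variables made available to both templates (hypothetical keys).
attributes = {
    "name": "conv1",
    "NAME": "CONV1",
    "kernel_h": 3,
    "kernel_w": 3,
    "out_channels": 16,
    "input": "input",
    "output": "conv1_output",
}

config_text = CONFIG_TEMPLATE.substitute(attributes)    # goes to layers/conv1.h
forward_text = FORWARD_TEMPLATE.substitute(attributes)  # goes to forward.cpp
print(config_text + forward_text)
```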

Because the convolution implementation supports multiple combinations (e.g., padding + activation), several variants such as Conv2D, PadConv, and ConvAct are registered as subclasses of the same base operator.

For instance, PadConvAct inherits from previous convolution variants, extending or overriding parameters as needed.

Base classes initialize defaults (e.g., padding = 0, activation = Linear), and derived classes modify them.
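The defaults-then-override pattern can be sketched with plain Python classes: the base class sets padding to zero and the activation to Linear, and each variant overrides only what its fused pattern adds. The class names and attribute keys are hypothetical and only mirror the Conv, PadConv, ConvAct, and PadConvAct variants described above.

```python
# Hypothetical hierarchy mirroring the convolution variants.

class ConvExport:
    def __init__(self, kernel=(3, 3)):
        self.attributes = {
            "kernel_dims": kernel,
            "padding": (0, 0, 0, 0),  # default: no padding
            "activation": "Linear",   # default: no activation
        }


class PadConvExport(ConvExport):
    def __init__(self, padding, **kwargs):
        super().__init__(**kwargs)
        self.attributes["padding"] = padding


class ConvActExport(ConvExport):
    def __init__(self, activation="ReLU", **kwargs):
        super().__init__(**kwargs)
        self.attributes["activation"] = activation


class PadConvActExport(PadConvExport, ConvActExport):
    """Inherits both overrides; no extra code needed."""
    pass


print(PadConvActExport(padding=(1, 1, 1, 1)).attributes)
```

Because each `__init__` forwards its remaining keyword arguments through `super()`, the combined class applies both overrides in Python's method resolution order (PadConvActExport, PadConvExport, ConvActExport, ConvExport).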

Finally, the Producer.py file manages parameter exports (corresponding to producers in the Aidge graph).

Adding a New Kernel

Now that the export structure has been described, let’s go through the process of adding a new kernel to the export. The procedure is straightforward: follow these steps.

Create the kernel implementation and place the file in the kernels folder.

Create the configuration template file, defining all the parameters required to execute the kernel function.

Add the forward template file, which generates the kernel call inside the forward.cpp file.

Register the kernel in the ExportLib by creating a dedicated Python file within the operators folder.

(Optional) If your kernel combines multiple operators, you can create a recipe to fuse them into a MetaOperator and register it as well. These recipes are typically defined in the export_utils.py file.
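The registration steps can be condensed into a toy example for a hypothetical element-wise Softplus kernel: the class lists the kernel file to copy, holds a configuration template and a forward template, and registers itself through a decorator. REGISTRY, register, SoftplusExport, and the template text are all invented for illustration, with string.Template standing in for Jinja; the tutorial referenced below describes the actual files and APIs.

```python
from string import Template

# Toy registry standing in for the export module's ExportLib (hypothetical).
REGISTRY = {}


def register(op_type):
    """Step 4: map an operator type to its export class."""
    def wrap(cls):
        REGISTRY[op_type] = cls
        return cls
    return wrap


@register("Softplus")
class SoftplusExport:
    # Step 1: kernel implementation file to copy into kernels/.
    kernels_to_copy = ["kernels/softplus.hpp"]
    # Step 2: configuration template (one header per layer in layers/).
    config_template = Template("#define ${NAME}_NB_ELTS ${nb_elts}\n")
    # Step 3: forward template (the call inserted into forward.cpp).
    forward_template = Template("softplus_forward<${NAME}_NB_ELTS>(${input}, ${output});\n")

    def __init__(self, name, nb_elts, input_name, output_name):
        self.attributes = {"NAME": name.upper(), "nb_elts": nb_elts,
                           "input": input_name, "output": output_name}

    def export(self):
        """Return (configuration header text, forward call text)."""
        return (self.config_template.substitute(self.attributes),
                self.forward_template.substitute(self.attributes))


config, call = REGISTRY["Softplus"]("sp1", 128, "conv1_output", "sp1_output").export()
print(config + call)
```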

That’s it: your new operator is now supported by the export system!

For more detailed instructions on adding a kernel, refer to the tutorial: “Add a Custom Operator to the CPP Export”.