Add a custom operator to the CPP Export#

This notebook details the main steps to detect unsupported operators and add them to the aidge_export_cpp module. In this example, we replace the ReLU nodes with Swish nodes, which are not natively supported in Aidge. To do so, we go through the following steps:

Import the ONNX model

Replace ReLU Operators with Swish Operators

Schedule the graph

Add Swish to the CPP Export

Export & Test

[ ]:

%pip install aidge-core \
    aidge-backend-cpu \
    aidge-export-cpp \
    aidge-onnx \
    aidge-model-explorer

1. Import the ONNX model#

Import the required modules#

aidge_core: Holds the core features of Aidge

aidge_onnx: Imports models from ONNX to Aidge

aidge_backend_cpu: CPU kernel implementations (Aidge inferences)

aidge_export_cpp: CPP export module

aidge_model_explorer: Model visualizer tool

[ ]:

# Utils

import matplotlib.pyplot as plt

import numpy as np

# Aidge Modules

import aidge_core

import aidge_onnx

import aidge_backend_cpu

import aidge_export_cpp

import aidge_model_explorer

[ ]:

aidge_core.Log.set_console_level(aidge_core.Level.Error)

Load the model#

Use the aidge_onnx module to load the ONNX LeNet file.

[ ]:

# Download the model

file_url = "https://huggingface.co/EclipseAidge/LeNet/resolve/main/lenet_mnist.onnx?download=true"

file_path = "lenet_mnist.onnx"

aidge_core.utils.download_file(file_path, file_url)

# Load the model

model = aidge_onnx.load_onnx(file_path)

Aidge offers a powerful visualization tool derived from Google's Model Explorer, which lets you check the current state of the graph.

[ ]:

aidge_model_explorer.visualize(model, "Imported LeNet")

Modify the graph#

[ ]:

aidge_core.remove_flatten(model)

aidge_model_explorer.visualize(model, "Removed Flatten")

2. Replace ReLU operators with Swish operators#

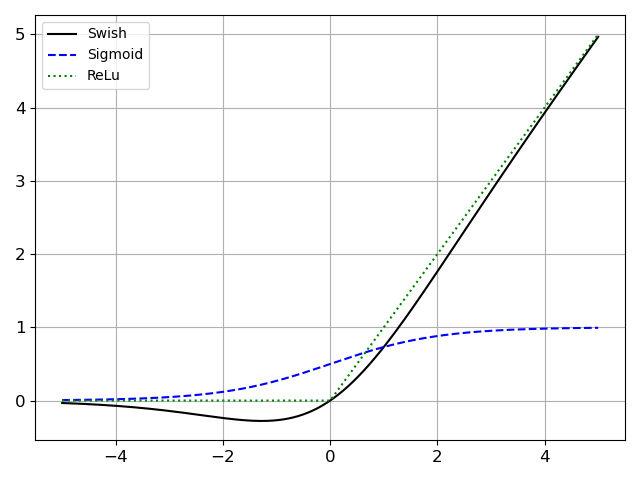

ReLU vs Swish activation functions :

The Swish activation function is defined as:

$$\mathrm{Swish}(x) = x \cdot \sigma(\beta x) = \frac{x}{1 + e^{-\beta x}}$$

The size of the beta vector used in the Swish formula depends on the second dimension of the operator's input. The first step is therefore to forward the dimensions through the model, so that each node knows the dimensions of its inputs.
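To make the definition concrete, here is a minimal pure-Python sketch of Swish with one beta per channel. The helper names (`swish`, `swish_per_channel`) and the toy `dims` value are illustrative assumptions, not part of Aidge. The sizing of `betas` mirrors the `[1.0] * dims[1]` expression used later in this notebook.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish activation: x * sigmoid(beta * x)."""
    return x / (1.0 + math.exp(-beta * x))


def swish_per_channel(channels, betas):
    """Apply Swish to a list of channels, using one beta per channel."""
    if len(channels) != len(betas):
        raise ValueError("expected one beta per channel")
    return [[swish(v, b) for v in values] for values, b in zip(channels, betas)]


# Hypothetical input dimensions in (batch, channels, height, width) order.
dims = [1, 6, 24, 24]
betas = [1.0] * dims[1]  # one beta per entry of the second dimension

# Toy data: two channels with three values each.
sample = [[-2.0, 0.0, 2.0], [-1.0, 0.5, 3.0]]
result = swish_per_channel(sample, betas[:2])
print(result)
```

With a large beta, Swish approaches ReLU; with beta = 0, it reduces to x / 2.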

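The input sample is read from the digit.npy file. If the file is missing from your checkout, it can be fetched with Git LFS:

sudo apt-get install git-lfs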

git lfs install

git lfs pull

[ ]:

# Get the input

digit = np.load("digit.npy", allow_pickle=True)

input_tensor = aidge_core.Tensor(digit)

# Print the input

img = digit.squeeze()

plt.imshow(img, cmap="gray")

plt.axis("off")

plt.show()

[ ]:

# Forward the dimensions in the graph

model.forward_dims([input_tensor.dims])

We now find the ReLU operators within the graph and replace them with Swish operators. Since the Swish operator does not exist within Aidge, we create a GenericOperator (essentially an empty Operator) by providing a type name, the number of edges (inputs and outputs), and a name for the node.

[ ]:

# Get the ReLU nodes within the graph

matches = aidge_core.SinglePassGraphMatching(model).match("ReLU")

print("Number of match : ", len(matches))

for switch_id, match in enumerate(matches):
    # Get the ReLU node
    node_ReLU = match.graph.root_node()

    # Instantiate Swish as a generic operator
    node_swish = aidge_core.GenericOperator(
        "Swish", nb_data=1, nb_param=0, nb_out=1, name=f"swish_{switch_id}"
    )
    # One beta per entry of the second dimension of the ReLU input
    node_swish.get_operator().attr.betas = [1.0] * node_ReLU.get_operator().get_input(0).dims[1]

    # Replace the ReLU node
    # Note: ignore new outputs to avoid adding the MaxPooling optional output to the graph outputs
    aidge_core.GraphView.replace(
        set([node_ReLU]), set([node_swish]), ignore_new_outputs=True
    )

    print(
        f"Replaced {node_ReLU.name()} ({node_ReLU.type()}) with {node_swish.name()} ({node_swish.type()})"
    )

[ ]:

aidge_model_explorer.visualize(model, "myModel", embed=True)

The ReLU operators have successfully been replaced with Swish generic operators. GenericOperators are displayed in red in the model explorer.

3. Schedule the graph#

In order to later export our model, we need to generate a scheduler, which is an ordered version of our model.

To create the scheduler, each operator needs:

An implementation (even an empty one) for the selected backend;

A forward_dims() function, describing how the operator transforms the dimensions.

As we just created a GenericOperator for the Swish node, we need to specify both:

[ ]:

class GenericImpl(aidge_core.OperatorImpl):
    # No need to implement forward() in Python, as we do not intend to run a scheduler on the model
    def forward(self):
        pass


for node in model.get_nodes():
    if node.type() == "Swish":
        # Propagate the dimensions through the node unchanged
        node.get_operator().set_forward_dims(lambda x: x)
        # Set the (empty) implementation
        node.get_operator().set_impl(GenericImpl(node.get_operator()))
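The `lambda x: x` passed to `set_forward_dims()` above suggests that the callback receives the list of input dimensions and returns the list of output dimensions. Under that assumption, the sketch below spells out the identity rule for an element-wise operator and, for contrast, a hypothetical rule that changes the shape. Both function names are illustrative and not part of Aidge.

```python
def elementwise_forward_dims(input_dims):
    """Element-wise operators (such as Swish) keep the shape of their input."""
    return [list(d) for d in input_dims]


def flatten_forward_dims(input_dims):
    """Hypothetical shape rule: collapse every axis after the batch axis."""
    (dims,) = input_dims  # exactly one input expected
    flat = 1
    for size in dims[1:]:
        flat *= size
    return [[dims[0], flat]]


in_dims = [[1, 6, 24, 24]]  # toy shape, chosen for illustration
print(elementwise_forward_dims(in_dims))
print(flatten_forward_dims(in_dims))
```

An operator that changes the shape would need a rule of the second kind instead of the identity.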

Before generating the scheduler, three last steps remain:

Set the backend for each node (here we choose the “cpu” backend from the aidge_backend_cpu module);

Set the datatype to float32;

Forward the dimensions through the model.

These three steps can be done at once using the compile() function:

model.compile("cpu", aidge_core.dtype.float32, dims=[[1, 1, 28, 28]])

[ ]:

model.set_backend("cpu")

model.set_datatype(aidge_core.dtype.float32)

model.forward_dims(dims=[[1, 1, 28, 28]])

Finally, we can generate the scheduler.

[ ]:

scheduler = aidge_core.SequentialScheduler(model)

scheduler.generate_scheduling()
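As an intuition for what a sequential schedule is, the sketch below orders a toy graph with the standard library's `graphlib.TopologicalSorter` (Python 3.9+), so that every node comes after its producers. This is only an illustration: the node names are hypothetical, and Aidge's `SequentialScheduler` may use a different algorithm.

```python
from graphlib import TopologicalSorter

# Toy dependency graph: each node maps to the set of nodes it consumes from.
# Node names are hypothetical and only loosely mimic a LeNet-like chain.
predecessors = {
    "conv1": set(),
    "swish_0": {"conv1"},
    "pool1": {"swish_0"},
    "fc1": {"pool1"},
    "swish_1": {"fc1"},
    "fc2": {"swish_1"},
}

# static_order() yields a valid execution order: producers before consumers.
schedule = list(TopologicalSorter(predecessors).static_order())
print(schedule)
```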

4. Add Swish to the CPP Export#

In order for an operator to be exported, we need to create 3 files:

swish_kernel.hpp: Holds the implementation of the kernel;

swish_config.jinja: Template to generate the configuration file of the kernel, holding input sizes, memory offsets, etc.;

swish_forward.jinja: Template to generate the kernel call within the forward.cpp file.

You will find these 3 files within the swish_export_files/ folder of the current workspace.
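To give an idea of what a configuration template does, the sketch below renders a small C++ header from node attributes. It uses `string.Template` from the standard library as a stand-in for Jinja, and the macro names and attribute values are hypothetical. The actual `swish_config.jinja` shipped in `swish_export_files/` may look quite different.

```python
from string import Template

# Hypothetical config template; the real export relies on Jinja templates.
CONFIG_TEMPLATE = Template(
    "#ifndef ${guard}\n"
    "#define ${guard}\n"
    "#define ${prefix}_NB_ELTS ${nb_elts}\n"
    "static const float ${prefix}_BETAS[${nb_betas}] = {${betas}};\n"
    "#endif  // ${guard}\n"
)


def render_config(name, dims, betas):
    """Fill the template with the sizes and attributes of one node."""
    nb_elts = 1
    for size in dims:
        nb_elts *= size
    prefix = name.upper()
    return CONFIG_TEMPLATE.substitute(
        guard=f"{prefix}_CONFIG_H",
        prefix=prefix,
        nb_elts=nb_elts,
        nb_betas=len(betas),
        betas=", ".join(f"{b}f" for b in betas),
    )


# Toy node: name, dimensions and betas are made up for the example.
header = render_config("swish_0", [1, 2, 4, 4], [1.0, 1.0])
print(header)
```

Generating one such header per node lets the C++ kernel read sizes and attributes as compile-time constants.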

“Supporting a kernel” in the context of an export basically means adding these files and storing their paths within a dedicated ExportNode, as shown in the cell below:

[ ]:

@aidge_export_cpp.ExportLibCpp.register_generic(
    "Swish", aidge_core.ImplSpec(aidge_core.IOSpec(aidge_core.dtype.float32))
)
class SwishCPP(aidge_core.export_utils.ExportNodeCpp):
    def __init__(self, node, mem_info):
        super().__init__(node, mem_info)
        # Templates used to generate the kernel configuration and the kernel call
        self.config_template = "swish_export_files/swish_config.jinja"
        self.forward_template = "swish_export_files/swish_forward.jinja"
        self.include_list = []
        # Kernel sources copied into the export folder
        self.kernels_to_copy = [
            "swish_export_files/swish_kernel.hpp",
        ]

Note that we used the register_generic() function here, to register within the ExportLib a generic operator. We could also register simple Operators or MetaOperators using respectively the register() and register_metaop() functions.
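The registration decorator can be thought of as filling a lookup table from operator type to export class. The following sketch shows that general pattern with a hypothetical `ToyExportLib`. It does not reproduce the internals of `ExportLibCpp`, which also receives an `ImplSpec` at registration time.

```python
class ToyExportLib:
    """Hypothetical registry mapping an operator type to its export class."""

    _registry = {}

    @classmethod
    def register(cls, op_type):
        """Return a class decorator that records the class under op_type."""
        def decorator(export_cls):
            cls._registry[op_type] = export_cls
            return export_cls
        return decorator

    @classmethod
    def lookup(cls, op_type):
        """Return the export class for op_type, or raise if unsupported."""
        try:
            return cls._registry[op_type]
        except KeyError:
            raise NotImplementedError(f"no export registered for {op_type!r}") from None


@ToyExportLib.register("Swish")
class ToySwishExport:
    kernels_to_copy = ["swish_export_files/swish_kernel.hpp"]


export_cls = ToyExportLib.lookup("Swish")
print(export_cls.__name__)
```

In this toy version, an operator type with no registered class raises an error at lookup time.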

5. Export and Test#

Generate the export using the export() helper function.

[ ]:

# Creating the label

label = np.array(7)

label = aidge_core.Tensor(label)

label.set_datatype(aidge_core.dtype.int8)

# Exporting the model

export_folder_name = "my_export"

aidge_export_cpp.export(

export_folder_name=export_folder_name,

model=model,

scheduler=scheduler,

labels=label,

)

Compile and run the export.

[ ]:

from subprocess import CalledProcessError

print("\n### Compiling the export ###")
try:
    # Build the generated sources with the Makefile of the export folder
    for std_line in aidge_core.utils.run_command(["make"], cwd=export_folder_name):
        print(std_line, end="")
except CalledProcessError as e:
    raise RuntimeError(0, "An error occurred, failed to build export.") from e

print("\n### Running the export ###")
try:
    # Run the compiled binary and stream its output
    for std_line in aidge_core.utils.run_command(
        ["./bin/run_export"], cwd=export_folder_name
    ):
        print(std_line, end="")
except CalledProcessError as e:
    raise RuntimeError(0, "An error occurred, failed to run export.") from e
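For readers curious about the pattern used above, here is a minimal sketch of a helper that runs a command, yields its output line by line, and raises `CalledProcessError` on a non-zero exit code. The `stream_command` function is a hypothetical stand-in written with the standard `subprocess` module. The behavior of `aidge_core.utils.run_command` is assumed here, not copied.

```python
import subprocess
import sys


def stream_command(command, cwd=None):
    """Run a command and yield its merged stdout/stderr line by line."""
    process = subprocess.Popen(
        command,
        cwd=cwd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
    )
    for line in process.stdout:
        yield line
    process.stdout.close()
    return_code = process.wait()
    if return_code != 0:
        # Signal the failure once all output has been consumed
        raise subprocess.CalledProcessError(return_code, command)


# Demo: use the current Python interpreter as a portable command.
lines = list(stream_command([sys.executable, "-c", "print('build ok')"]))
print("".join(lines), end="")
```

Because the helper is a generator, the build log appears while the command is still running rather than once it has finished.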