Information Handling

To process information that is seen or heard, the input has to be handled further by cognitive structures. Within SCM, this functionality is called information handling. This chapter describes how the (mostly visual) information is handled by the driver and how this process is linked to the driver’s allocation of their field of view. The cognitive buffer, the so-called mental model, is introduced to explain what information is stored in it and how. Subchapters on Extrapolation, Reliability and Tau Theory provide further insight into how visual information is primarily dealt with.

Overview: Information Handling

The simulation framework provides ground truth information about the world and all agents in it. It is initially handled by the SensorDriver, which receives an update of the complete external information at the beginning of every time step. All this information is then primarily filtered by the Sensory Perception of the model. As the next step, visual perception models, thresholds and mechanisms are applied to the remaining ground truth information. Finally, it is stored as described in Types of Information, so the driver can update their mental environment representation based on the perceived information. This part of the model therefore serves as a perception filter, which transforms the ground truth data of the world into the mental representation that SCM uses. The application of visual perception models depends heavily on the current allocation of the driver’s field of view, which is determined by the Gaze Control. The gaze behaviour algorithm of Gaze Control is executed directly before any further information processing in the same time step.

Detailed description of the submodule’s features

The (mostly) visual perception of ground truth information is divided into separate types of information:

own vehicle data concerns all information about the vehicle of the driver (e.g. speed, acceleration, …)

surrounding objects data concerns all information about surrounding objects and agents on a microscopic traffic level (e.g. time headway, time to collision, …)

mesoscopic information concerns all information about the traffic environment on a mesoscopic traffic level (e.g. traffic signs, distances to end of lanes, …)

infrastructure information concerns all information about the static environment (e.g. traffic signs, lane markings, …)

The different perception models for these types of information are explained in more detail below.

Perception of information from the driver’s own vehicle

The perception of the driver’s own vehicle information currently involves no perception model at all. Because the vehicle state consists mostly of non-visual values, it is simply transferred to the data store. The same holds true for optical HMI signals, which are currently not modelled. SCM transfers a range of information about the driver’s own vehicle in every time step, regardless of any perception states of the driver’s sensory system. This includes, for example, the vehicle’s absolute velocity, its longitudinal acceleration, velocity and position, and its lateral velocity and position. Furthermore, the vehicle’s heading angle, information on current lane crossing movements, whether the vehicle has collided with any object, the current lane ID and the lateral distances to the lane markings are processed. For a complete list, please see the developer guide.

The model currently contains a placeholder for the perception of blind spot warnings in the vehicle’s left or right mirror. It is integrated into the assignment of the visual perception models for microscopic traffic information from surrounding vehicles. This is simply because this mechanism is currently the only one that incorporates the current allocation of the driver’s field of view. The mechanism takes effect if the driver fixates the AOI LEFT_REAR or RIGHT_REAR (see Gaze Control for further explanations).

Visual perception of microscopic information from surrounding objects and agents

The visual perception of microscopic information from surrounding objects and agents is performed individually for every relevant AOI (see Gaze Control for further explanations) within ego’s field of view. With the exception of the AOIs LEFT_SIDE, RIGHT_SIDE, LEFTLEFT_SIDE and RIGHTRIGHT_SIDE, only one object, i.e. the one closest to the ego vehicle, is saved to the driver’s mental model. For the side AOIs, the process is repeated once for every object present in the ground truth information. If no object exists inside the observed AOI, no data is assigned in the data store. Existing objects update the stored data with several values from the ground truth. These include, amongst others: acceleration, brake light activation, collision data, the time headway to the surrounding object, its ID and maximum height, the state of the indicators, lane crossing information, relative lateral and longitudinal distances to the object, and TTC. For a complete list, please refer to the developer guide.
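The selection rule above can be sketched as follows. This is a minimal illustration, not SCM's implementation: the `PerceivedObject` type, its `distance` field and the function name are hypothetical, and only the AOI names are taken from the text.

```python
from dataclasses import dataclass

# Side AOIs keep every object; all other AOIs keep only the closest one.
SIDE_AOIS = {"LEFT_SIDE", "RIGHT_SIDE", "LEFTLEFT_SIDE", "RIGHTRIGHT_SIDE"}


@dataclass
class PerceivedObject:
    object_id: int
    aoi: str
    distance: float  # hypothetical field: distance to the ego vehicle in m


def select_objects_for_mental_model(objects, observed_aois):
    """Return {aoi: [objects]} for every observed AOI that contains objects."""
    result = {}
    for aoi in observed_aois:
        candidates = [o for o in objects if o.aoi == aoi]
        if not candidates:
            continue  # empty AOI: no data is assigned
        if aoi in SIDE_AOIS:
            result[aoi] = candidates
        else:
            result[aoi] = [min(candidates, key=lambda o: o.distance)]
    return result
```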

Note that data is only processed further if it lies within the driver’s field of view. The perception models are based on this principle, which is called focus area specific processing. The information relevant for focus area specific processing comprises the acceleration and acceleration change, the absolute as well as lateral and longitudinal velocities, the lane boundary distances and the change of longitudinal velocity.

Currently, only fovea and UFOV (see Gaze Control for further explanations) cause the focus area specific data to be processed. Information from both is perceived at equal quality.

The time related values concerning gap information, gap change rate, TTC, and TTC change rate are also calculated in this step.
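The time related values can be illustrated with their textbook definitions. The sketch below assumes a gap measured bumper to bumper and constant velocities; the function names and the finite-difference change rate are assumptions, not SCM's actual code.

```python
import math


def time_headway(gap, ego_velocity):
    """Gap in m divided by ego velocity in m/s; infinite at standstill."""
    return gap / ego_velocity if ego_velocity > 0.0 else math.inf


def time_to_collision(gap, ego_velocity, lead_velocity):
    """Gap divided by the closing speed; infinite if the gap is not closing."""
    closing_speed = ego_velocity - lead_velocity
    return gap / closing_speed if closing_speed > 0.0 else math.inf


def change_rate(current, previous, time_step):
    """Finite-difference change rate between two consecutive time steps."""
    return (current - previous) / time_step
```

For example, a gap of 30 m at an ego velocity of 20 m/s gives a time headway of 1.5 s; with a leading vehicle at 15 m/s the TTC is 6 s.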

Visual perception of mesoscopic information from the traffic environment

The visual perception of mesoscopic information from the traffic environment currently involves no models of visual perception. The relevant information is simply transferred to the data store, which allows it to easily be complemented by any visual perception models in the future. The mesoscopic information currently used by SCM consists of the mean velocity values for three lanes: the lane the ego vehicle is currently in and the ones directly to the left and right of it.

Visual perception of infrastructure information from the traffic environment

The visual perception of infrastructure information from the traffic environment currently also involves no models of visual perception. In general, three types of information are simply transferred into the driver’s mental model:

The traffic signs for all known lanes are perceived and the current knowledge of traffic signs is updated.

The longitudinal lane markings for all known lanes are perceived and updated.

There is a check to discover if the number of existing lanes has changed and, if necessary, the new number of lanes is set.

Additionally, further information is provided to SCM. It describes the current maximum visibility distance specified by the world, the lane ID and the distances to the next exit and entry. Furthermore, several data items about the surrounding lanes (e.g. left lane, left-left lane) are transferred. The lane data provides information on the existence of the lanes, curvature, widths, distance measures and curvature at specific points of interest for the agent. A complete list of variables can be found here.

Calculation of kinematic values

The driver controls their longitudinal motion using acceleration demands. For the calculation of the acceleration, several kinematic values are taken into account, e.g. the kinematic delta values distance, velocity and acceleration with respect to the leading vehicle. Each of these has an influence on the overall acceleration needed to fulfill the following Wiedemann mechanism.

Model by Wiedemann (1974): The original model states that the driver swings between an upper and lower boundary. Instead, the SCM driver tries to hold an equilibrium following distance when there is a leading vehicle in front of them and there is no critical situation. Since they are not perfect in compensating what the leading vehicle is doing, they are constantly over or under the equilibrium distance and therefore need to increase or decrease their velocity. For a closer look, please see the original work: Wiedemann, R. (1974). Simulation des Straßenverkehrsflusses (Dissertation). Germany: University of Karlsruhe, Institut für Verkehrswesen.

Calculation of time headways

The driver attempts to follow a leading vehicle by a certain time headway, which is the distance between the front of the driver’s vehicle and the back of the leading vehicle with reference to the driver’s current velocity. There is a total of three time headways marking different limits.

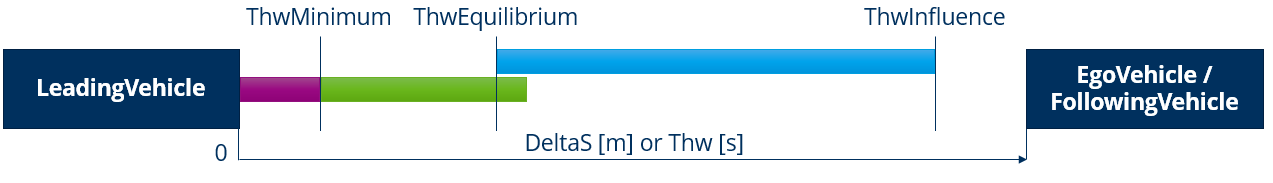

Fig. 14 Time Headways (DeltaS - relative longitudinal distance; Thw - time headway; ThwMinimum/ThwEquilibrium/ThwInfluence - minimum/equilibrium/influencing time headway)

Depending on whether the driver is above or below these limits, the actions considered may vary. The calculation of the minimum and equilibrium time headway is based on a time-to-brake (TTB) approach. It is assumed that the leading vehicle might brake and that the driver will need a certain amount of time to react and change their pedal position until they are able to brake as well. The time headways are calculated in a manner that gives the driver the chance to avoid a collision with the leading vehicle. The current velocity and acceleration of both agents are taken into account, as well as an uncertainty factor due to outdated information. For the minimum time headway, it is assumed that both agents will brake with the maximum deceleration possible until standstill. The remaining distance between the driver’s front and the leading vehicle’s back shall be the queueing distance. For the equilibrium time headway, it is assumed that both agents will brake with their comfort deceleration, but not until standstill. Instead, a certain braking period is assumed for the leading vehicle.
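A simplified reading of the minimum time headway can be written down with constant decelerations. The sketch below is an assumption-laden illustration: it ignores the current accelerations and the uncertainty factor mentioned above, and the function and parameter names are hypothetical rather than taken from SCM.

```python
def minimum_time_headway(v_ego, v_lead, reaction_time,
                         max_decel_ego, max_decel_lead, queueing_distance):
    """Time headway in s that leaves the queueing distance at standstill.

    Velocities in m/s, decelerations as positive values in m/s^2,
    distances in m. Both vehicles brake until standstill; the ego
    driver starts braking only after reaction_time.
    """
    if v_ego <= 0.0:
        return 0.0
    ego_stopping_distance = v_ego * reaction_time + v_ego ** 2 / (2.0 * max_decel_ego)
    lead_stopping_distance = v_lead ** 2 / (2.0 * max_decel_lead)
    required_gap = max(ego_stopping_distance - lead_stopping_distance, 0.0) + queueing_distance
    return required_gap / v_ego
```

With both vehicles at 20 m/s, a reaction time of 1 s, a maximum deceleration of 8 m/s² for both and a queueing distance of 2 m, the required gap is 22 m, which corresponds to a time headway of 1.1 s.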

Influencing time headway marks the point at which a driver starts to consider the leading vehicle’s behaviour. Its distance is set so that the driver only needs to release the gas pedal and decelerate with the engine’s drag in order to equalize the differential velocity to the leading vehicle at equilibrium distance.

Equilibrium time headway marks the following distance the driver tries to maintain during normal driving. As mentioned in the model by Wiedemann, the driver does this by accelerating or braking with an intensity up to their comfort acceleration or comfort deceleration.

Minimum time headway marks the lowest following distance comfortable to the driver. There is a potentially dangerous situation when the gap is less than the minimum time headway. To minimize that risk, the driver tries to increase the distance to the leading vehicle by decelerating with the maximum brake performance.
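The three limits partition the current time headway into regimes. The following sketch only shows that partition; the regime labels and the function name are hypothetical, and the handling of values exactly on a limit is an assumption.

```python
def following_regime(thw, thw_minimum, thw_equilibrium, thw_influence):
    """Classify the current time headway against the three limits (all in s)."""
    if thw < thw_minimum:
        return "below_minimum"      # potentially dangerous: maximum braking
    if thw < thw_equilibrium:
        return "below_equilibrium"  # too close: fall back with comfort deceleration
    if thw <= thw_influence:
        return "influenced"         # leading vehicle is considered
    return "free"                   # leading vehicle is not yet considered
```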

Calculation of the legal velocity

The legal velocity is perceived separately for every lane and saved together with the infrastructure information of each lane. It is derived from traffic signs related to speed limitations. When no speed limit has been detected yet, the driver assumes that there is no speed limit. When a new speed regulation sign enters the driver’s perception and is detected by the driver’s gaze, the (former) current speed limit is stored in the model as the previous speed limit and replaced by the new value.

This legal velocity may be exceeded by each agent depending on the driver’s individual characteristics (TODO reference to explanation of relevant driver parameter).

Depending on the driver’s individual anticipation parameter and the velocity difference between the previous and the freshly detected speed limit, the influencing distance for the speed limit is calculated.

This determines the point at which the driver starts following the current instead of the previous speed limit sign.

This feature is implemented to achieve a more realistic behaviour: some drivers speed up early and decelerate late when approaching a new, stricter speed limit, whereas more conservative drivers only start accelerating once they have passed the speed limit sign and start decelerating early so that they pass a new, lower speed limit sign with (at most) the new speed limit.
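The bookkeeping of previous and current speed limit can be sketched as below. The class and method names are hypothetical, and the influencing distance is treated as a given input because its formula is not stated here. The distance to the sign is assumed to be positive while the sign is ahead and negative once it has been passed.

```python
import math


class SpeedLimitMemory:
    """Holds the previous and current speed limit of one lane (in m/s)."""

    def __init__(self):
        # No sign detected yet: the driver assumes there is no speed limit.
        self.previous = math.inf
        self.current = math.inf

    def detect_sign(self, new_limit):
        """Store the former current limit as previous and adopt the new one."""
        self.previous = self.current
        self.current = new_limit

    def effective_limit(self, distance_to_sign, influencing_distance):
        """Limit the driver follows at the given distance to the newest sign."""
        if distance_to_sign <= influencing_distance:
            return self.current
        return self.previous
```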

Calculation of the target velocity

The target velocity is the velocity that the driver reaches during free driving, i.e. when the driver is not following a leading vehicle. If they are following a leading vehicle, the agent’s target velocity is determined using the time headways described in the previous section. The target velocity is limited by the following influences:

the desired velocity of the driver

speed limitations and a certain degree to exceed this limit (see legal velocity)

maximum speed the vehicle can technically achieve

environmental and traffic conditions

curvature of the road

physical sight distance

lane width

right overtaking prohibition (see Application of right overtaking prohibition)

passing slow platoons

The target velocity is then set by the lowest of all these influences.
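Taking the lowest of all influences amounts to a minimum over the currently active limits. In this sketch the dictionary keys are hypothetical labels for the influences listed above, and `None` marks an influence that does not apply in the current situation.

```python
import math


def target_velocity(limits):
    """Return the lowest active velocity limit in m/s.

    limits maps an influence name to its velocity limit, or to None
    when that influence currently imposes no limit.
    """
    active = [value for value in limits.values() if value is not None]
    return min(active) if active else math.inf


example_limits = {
    "desired_velocity": 38.0,
    "legal_velocity_with_exceedance": 36.0 + 3.0,  # speed limit plus allowed exceedance
    "vehicle_maximum": 55.0,
    "curvature": 33.0,
    "sight_distance": None,
}
```

With `example_limits`, the curvature limit of 33 m/s is the binding one.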

Transition of mental information due to position changes of own vehicle or surrounding vehicles

Transition is a central mechanism of SCM’s mental model. It ensures that the various data held in the driver’s mind is moved under certain circumstances. Generally, transition of mental information is mainly caused by two different conditions: movement of the agent themselves and movement of other agents between AOIs.

Transition of data due to lane change of the ego agent

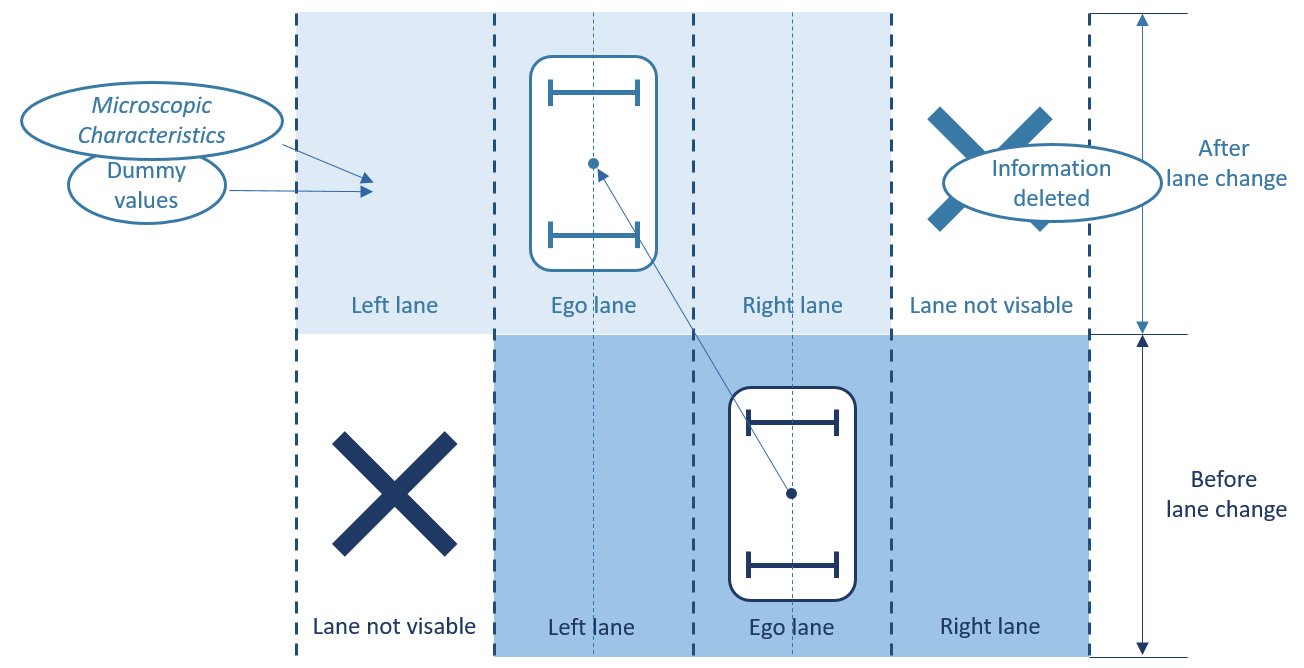

The greatest effect is caused by a lane change of the agent themselves. In the following, the transition of mental information is described exemplarily for a lane change to the left (shown in Fig. 15). For a lane change to the right, the transition is correspondingly inverse.

The previous ego lane becomes the right lane.

The previous left lane becomes the ego lane.

The previous right lane is no longer visible, therefore the information will be deleted.

The information of the new left lane is temporarily filled with dummy values until the driver actively takes up the information about environmental objects in this lane again. All other information is currently taken directly from the ground truth and is not linked to the gaze movement.

Fig. 15 Transition of mental information due to an ego lane change
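The lane shift described above can be expressed as a simple reassignment. The dictionary layout with the keys `left`, `ego` and `right` is a hypothetical simplification of the mental model, and `dummy` stands for whatever placeholder values are used for the newly visible lane.

```python
def transition_lanes_left(lanes, dummy=None):
    """Shift lane data after an ego lane change to the left.

    The old right lane is dropped, the new left lane gets dummy values.
    """
    return {"left": dummy, "ego": lanes["left"], "right": lanes["ego"]}


def transition_lanes_right(lanes, dummy=None):
    """Mirrored shift for an ego lane change to the right."""
    return {"left": lanes["ego"], "ego": lanes["right"], "right": dummy}
```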

Transition of surrounding object data

The transition of the surrounding objects is performed between the extrapolation of the known data and the update of the AOIs in vision. This guarantees that the data of an agent, who moves out of the current field of view, is not lost in the information update.

First, the direction of the transition is determined in the longitudinal and lateral direction according to the following criteria:

| Direction | Criteria |
|---|---|
| Forward | Rear-area and overlapping in longitudinal direction |
| Forward | Side-area and longitudinal obstruction indicating object in front of agent |
| Backward | Side-area and longitudinal obstruction indicating object behind agent |
| Backward | Front-area and overlapping in longitudinal direction |
| Left | Lateral position to lane center is greater than that lane’s half width |
| Right | Lateral position to lane center is less than that lane’s negative half width |
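The criteria in the table can be read as two independent checks. The sketch below mirrors the table rows one by one; the argument names and string labels are hypothetical, and `None` means that no transition is detected in that direction.

```python
def longitudinal_direction(area, overlaps_longitudinally, obstruction=None):
    """area: 'front', 'side' or 'rear'; obstruction: 'front', 'behind' or None."""
    if area == "rear" and overlaps_longitudinally:
        return "forward"
    if area == "side" and obstruction == "front":
        return "forward"
    if area == "side" and obstruction == "behind":
        return "backward"
    if area == "front" and overlaps_longitudinally:
        return "backward"
    return None


def lateral_direction(lateral_position, lane_width):
    """lateral_position is measured from the lane center, positive to the left."""
    half_width = lane_width / 2.0
    if lateral_position > half_width:
        return "left"
    if lateral_position < -half_width:
        return "right"
    return None
```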

Next, the detected transition is executed starting with the determination of the AOI that the object will end up in (targeted AOI). In case of a longitudinal transition, that is merely the logical choice resulting from the current AOI and the transition direction (e.g. a right rear vehicle will only forward transition into a right side). In the lateral direction, the distance to the front and rear as well as the length of every involved object needs to be determined. With this information it is possible to determine the exact location of the observed object in the new lane and thus its new AOI (e.g. between the front and front far object, so it will become the new front far object itself). The only restrictions in this functionality are that no multiple transitions are allowed and that the resulting AOI exists. Transitions into non-existent areas (e.g. into the ego vehicle, behind rear vehicle, …) will result in the deletion of the data.

With this information, the actual transition can be performed. The object data is copied and subsequently removed from the original AOI. Any occupants of the targeted AOI are longitudinally transitioned themselves before this transition is performed. An exception is an occupied side area. By definition, all objects with a longitudinal overlap to the ego vehicle are considered to be in the side area. Thus, objects transitioning into the side area in front of the current side object replace it without any further consideration of the occupant. On the other hand, all data of objects that would be behind the occupant in the side area is deleted. Lastly, the lane dependent data (ID of the currently occupied lane, lateral position in the lane, …) is adjusted in case of a lane change.

Note: In case of multiple transitions (e.g. forward AND right, …) the previous two steps (determination of target AOI/ transition) will be performed sequentially, starting with the longitudinal part and followed by the lateral portion.

Multi-detection of agents in surrounding AOIs

It is possible to observe an object that is also listed in one or more other areas outside of the current field of view. Once such a case is identified, the object observed in the current time step is kept and all other detected multiples are removed.
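A minimal sketch of this clean-up, assuming the mental model can be reduced to a mapping from AOI name to a list of object IDs (a hypothetical simplification):

```python
def remove_multiples(mental_model, observed_aois):
    """Keep objects seen in this time step and drop their stale copies.

    mental_model maps an AOI name to a list of object IDs. Objects in
    observed_aois were perceived in the current time step.
    """
    observed_ids = {
        object_id
        for aoi in observed_aois
        for object_id in mental_model.get(aoi, [])
    }
    cleaned = {}
    for aoi, object_ids in mental_model.items():
        if aoi in observed_aois:
            cleaned[aoi] = list(object_ids)
        else:
            cleaned[aoi] = [i for i in object_ids if i not in observed_ids]
    return cleaned
```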

Adjustment of front far objects

The extrapolation and subsequent transition of objects may move front objects out of their previous lane. When this lane also contains a front far object and no other vehicle transitions into the front area, the front far object needs to be moved into the front area. This follows from the definition that the front object is always the first object completely ahead of ego.
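As a sketch, the adjustment is a promotion of the front far object when the front area is empty. The per-lane dictionary with the keys `front` and `front_far` is a hypothetical stand-in for the corresponding AOIs.

```python
def adjust_front_far(lane):
    """Promote the front far object if the front area of the lane is empty."""
    if lane.get("front") is None and lane.get("front_far") is not None:
        lane["front"] = lane["front_far"]
        lane["front_far"] = None
    return lane
```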

Calculation of lane mean velocity based on mesoscopic and microscopic information

This mechanism ensures that the information regarding the mean velocity of a lane is enriched by the directly perceived velocity information of individual surrounding vehicles. Therefore, the velocities of all non-empty AOIs of a lane are added up and their mean value is calculated. These mean values are stored in a time buffer, so that a mean value of the corresponding lane can be formed over the last time steps (temporal development is thus taken into account). The temporal development is also carried out for the handed over mean velocity from the SensorDriver. The mean velocity perceived by the model for a lane results from the mean value of the two temporal mean values from the buffers described above.
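The two time buffers and their combination can be sketched as follows. The class name, the buffer length and the behaviour when no AOI of the lane is occupied (the microscopic buffer is left unchanged) are assumptions made for this illustration.

```python
from collections import deque
from statistics import fmean


class LaneMeanVelocity:
    """Combines microscopic and mesoscopic mean velocities of one lane."""

    def __init__(self, buffer_size=10):
        self.micro_buffer = deque(maxlen=buffer_size)  # means over occupied AOIs
        self.meso_buffer = deque(maxlen=buffer_size)   # means from the SensorDriver

    def update(self, aoi_velocities, sensor_mean_velocity):
        """Add one time step and return the perceived lane mean velocity."""
        if aoi_velocities:
            self.micro_buffer.append(fmean(aoi_velocities))
        self.meso_buffer.append(sensor_mean_velocity)
        temporal_means = [
            fmean(buffer)
            for buffer in (self.micro_buffer, self.meso_buffer)
            if buffer
        ]
        return fmean(temporal_means)
```

With two perceived vehicles at 20 m/s and 30 m/s and a SensorDriver mean of 27 m/s, the first update yields the mean of 25 m/s and 27 m/s, i.e. 26 m/s.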