Implementation Architecture

AlgorithmScm

As previously described, SCM is a driver behaviour model providing an accurate simulation of the occurrence of critical traffic situations and road accidents which may result from those critical situations.

To explain in detail how the model is set up and what dependencies influence the simulation outcome, this section of the SCM user guide provides an overview of the internal structure of the module representing the driver’s mental processes (“AlgorithmScm”) and of the high-level signal flow throughout the model.

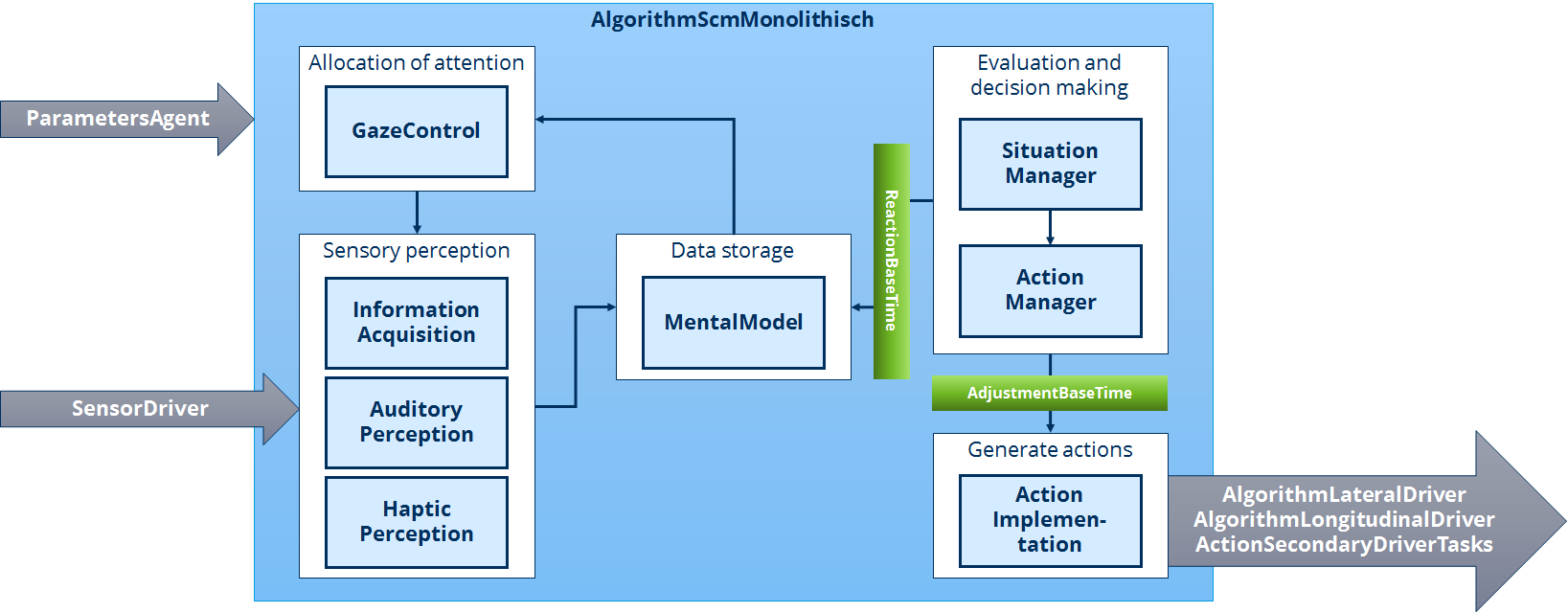

The internal structure and information flow of AlgorithmScm are illustrated in Fig. 66. The depicted submodules and their information exchange are briefly described below.

Fig. 66 Internal structure and information flow of AlgorithmScm

First of all, the driver behaviour parameters for SCM and also the model parameters of the driver’s own car are provided by the module ParametersAgent and are distributed throughout AlgorithmScm and all its submodules for further use.

The submodule GazeControl is the first submodule called by AlgorithmScm within one time step. It simulates the gaze behaviour of the driver and thereby strongly determines which information can be perceived from the real world. This is described in more detail in the section Gaze Control. Since humans perceive their environment mainly visually and the field of perception of both eyes is rather limited, this mechanism is crucial for the simulation of perception errors, which in turn may induce critical situations.

Depending on the current gaze direction, human sensory perception is modelled in the submodule InformationAcquisition (which is mainly concerned with visual perception and therefore depends strongly on the gaze direction). Its functions are explained in the chapter Information Handling. The ground-truth information that these submodules process is provided by the module SensorDriver.

As perception also means recognition, all perceived information must be integrated into a mental representation of the environment. This is realized by the submodule MentalModel, which consists of several structures holding different kinds of information. This differentiation is explained in Types of Information. The data for all surroundings not currently covered by sensory perception (e.g. areas behind the vehicle while looking ahead) is extrapolated by SCM.

All cognitive evaluation and decision-making processes are grounded in this mental representation of the driver’s environment. This is handled primarily by the following two submodules:

The SituationManager evaluates the information in the MentalModel with regard to the current traffic situation. The situation is described solely by the state of the environment, excluding what the driver themselves is currently doing. If significant changes occur in the environment, the driver will evaluate them and recognize the surrounding occurrences as a different situation. This process is outlined in the subsection Situations.

The ActionManager considers the traffic situation chosen by the SituationManager and evaluates the MentalModel with regard to appropriate actions. This may also involve opportunistic influences, such as changing lanes to keep driving at a desired speed when the current lane is held up by slower traffic. The actions are divided into vehicle lateral guidance (“lateral action state”) and vehicle longitudinal guidance (“longitudinal action state”); see Action States. The section Decision Making describes the ActionManager’s various tasks in detail.

The action states of the ActionManager only characterize what the driver is going to do, but not how. This is realized by the last submodule, ActionImplementation, which generates appropriate command variables (longitudinal acceleration and curvature) for the vehicle’s guidance and also sets commands for all secondary driver tasks (e.g. actuating the indicators or flashing the high beams). These commands and command variables are also the model outputs of AlgorithmScm:

The longitudinal acceleration is transferred to the module AlgorithmLongitudinalDriver which generates appropriate pedal positions and the gear to match this command variable.

The curvature (besides other necessary command variables for lateral guidance) is transferred to the module AlgorithmLateralDriver which generates an appropriate steering wheel angle to match this command variable.

The secondary driver tasks are transferred to the module ActionSecondaryDriverTasks which simply sends them back to the simulation framework.
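The call order and the three outputs described above can be summarised in a minimal C++ sketch. Only the module and submodule names are taken from this guide. The types `GroundTruth`, `MentalModelData`, `ScmOutput` and `AlgorithmScmSketch`, the `Step` function and the 20 m gap used as a placeholder decision are assumptions made for illustration and do not reflect the real interfaces.

```cpp
#include <string>
#include <vector>

// Hypothetical, strongly simplified data types (not the real SCM interfaces).
struct GroundTruth {
    double leadVehicleDistance;  // provided by the module SensorDriver
};

struct MentalModelData {
    double leadVehicleDistance = 0.0;  // the driver's mental representation
};

struct ScmOutput {
    double longitudinalAcceleration;  // passed on to AlgorithmLongitudinalDriver
    double curvature;                 // passed on to AlgorithmLateralDriver
    bool indicatorActive;             // passed on to ActionSecondaryDriverTasks
};

// Records the order in which the submodules are called within one time step.
struct AlgorithmScmSketch {
    std::vector<std::string> callOrder;
    MentalModelData mentalModel;

    ScmOutput Step(const GroundTruth& groundTruth) {
        callOrder.clear();
        callOrder.push_back("GazeControl");             // 1. where does the driver look?
        callOrder.push_back("InformationAcquisition");  // 2. perception depending on gaze
        mentalModel.leadVehicleDistance = groundTruth.leadVehicleDistance;
        callOrder.push_back("MentalModel");             // 3. update mental representation
        callOrder.push_back("SituationManager");        // 4. assess the traffic situation
        callOrder.push_back("ActionManager");           // 5. choose the action states
        callOrder.push_back("ActionImplementation");    // 6. derive command variables

        // Placeholder decision (assumption): brake gently if the perceived gap is short.
        const bool tooClose = mentalModel.leadVehicleDistance < 20.0;
        return ScmOutput{tooClose ? -1.0 : 0.0, 0.0, false};
    }
};
```

In this sketch, the decision is taken on the content of the mental model and not on the ground truth directly, which mirrors the role of the MentalModel as the basis for all cognitive processes.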

This describes the principal information flow throughout AlgorithmScm, but as shown in Fig. 66, there are some additional mechanisms involved in this process:

Top-Down information requests: When SituationManager and ActionManager evaluate the MentalModel and try to make decisions based on mental information that is insufficient in terms of age and quality, an information update is needed. This can be the case, for example, when the information was only recognized by peripheral perception several seconds ago. A feedback mechanism between MentalModel and GazeControl therefore allows a direct allocation of the driver’s gaze to areas of increased interest. This is called top-down driven allocation of attention. For further details, see Gaze Control.

ReactionBaseTime: This reaction time component is the first of three throughout SCM and its related agent modules. It serves as a cognitive buffer in the decision-making processes. After every decision in the submodules SituationManager and ActionManager, a reaction base time is drawn from an associated distribution function and is counted down in the subsequent time steps. Only after this timer has reached zero can another decision be made by either of the two submodules. If both submodules make a decision in the same time step, this counts as one decision, so only one reaction base time is processed. The distribution function is modelled as a lognormal distribution. The parameters for this distribution function are provided by the module ParametersAgent.

AdjustmentBaseTime: This reaction time component is the second of three throughout SCM and its related agent modules (the third is the “pedal change time”, see Longitudinal and Lateral Control, which is applied in the module AlgorithmLongitudinalDriver). This component is implemented to simulate a specific characteristic of human drivers’ longitudinal guidance: drivers do not regulate their longitudinal acceleration continuously, but discretely. Accelerations are set and then held more or less constant over short periods before they are recalculated. This is simulated by the AdjustmentBaseTime, which is drawn from an associated distribution function after every adjustment of the vehicle’s longitudinal acceleration and is then counted down over the subsequent time steps. The acceleration is held constant until the timer reaches zero and is then recalculated and set before the process starts anew. The distribution function is modelled as a lognormal distribution. The parameters for this distribution function are provided by the module ParametersAgent.
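The two timer mechanisms above can be illustrated with a minimal C++ sketch based on `std::lognormal_distribution`. The class names `BaseTimeTimer`, `DecisionGate` and `LongitudinalAdjustment`, the millisecond unit and the order of ticking and restarting within a time step are assumptions for illustration; in SCM the distribution parameters come from ParametersAgent.

```cpp
#include <random>

// Countdown timer whose duration is drawn from a lognormal distribution.
// mu and sigma are the parameters of the underlying normal distribution
// (placeholder values in this sketch, not SCM defaults).
class BaseTimeTimer {
public:
    BaseTimeTimer(double mu, double sigma, unsigned seed)
        : distribution_(mu, sigma), generator_(seed) {}

    // Draw a new duration (assumed to be in milliseconds) and restart the countdown.
    void Restart() { remainingMs_ = distribution_(generator_); }

    // Count down by one simulation time step, never below zero.
    void Tick(double cycleTimeMs) {
        remainingMs_ = remainingMs_ > cycleTimeMs ? remainingMs_ - cycleTimeMs : 0.0;
    }

    bool Expired() const { return remainingMs_ <= 0.0; }
    double RemainingMs() const { return remainingMs_; }

private:
    std::lognormal_distribution<double> distribution_;
    std::mt19937 generator_;
    double remainingMs_ = 0.0;
};

// ReactionBaseTime: one shared timer gates the decisions of both
// SituationManager and ActionManager.
struct DecisionGate {
    BaseTimeTimer timer;

    bool DecisionAllowed() const { return timer.Expired(); }

    // Call once per time step. Decisions of both submodules in the same
    // time step restart the timer only once.
    void EndOfStep(bool situationDecided, bool actionDecided, double cycleTimeMs) {
        if (situationDecided || actionDecided) {
            timer.Restart();
        } else {
            timer.Tick(cycleTimeMs);
        }
    }
};

// AdjustmentBaseTime: hold the acceleration until the timer has expired,
// then recalculate it and restart the timer.
class LongitudinalAdjustment {
public:
    explicit LongitudinalAdjustment(BaseTimeTimer timer) : timer_(timer) {}

    double Update(double desiredAcceleration, double cycleTimeMs) {
        timer_.Tick(cycleTimeMs);
        if (timer_.Expired()) {
            heldAcceleration_ = desiredAcceleration;  // recalculate and set
            timer_.Restart();                         // the process starts anew
        }
        return heldAcceleration_;
    }

private:
    BaseTimeTimer timer_;
    double heldAcceleration_ = 0.0;
};
```

With, for example, `mu = 6.0` and `sigma = 0.1`, the drawn durations lie around `exp(6.0)`, i.e. roughly 400 ms, so an acceleration set in one time step is held for several 100 ms cycles before it is recalculated.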

Explicit control of vehicle position

It is possible to position the vehicle using the TrajectoryFollower, which is not covered by the SCM user guide. While it is activated, the SCM driver continues gathering data for their mental model using the regular mechanisms. The driver still derives the situation they are currently in from the position and state of their vehicle relative to its surroundings. Since all of the driver’s lateral and longitudinal actions are overruled by the TrajectoryFollower anyway, the driver does not take any actions. All manoeuvres that are active during the external control activation are viewed as completed, and the lateral action state is reset to the default lane keeping value. After the deactivation of the external control module/TrajectoryFollower, the agent is prevented from changing lanes for a period determined by LANE_CHANGE_PREVENTION_THRESHOLD (current default: 1000 ms).
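The behaviour during and after external control can be sketched as follows. Only the name LANE_CHANGE_PREVENTION_THRESHOLD and its default of 1000 ms are taken from the text above; the class `ExternalControlHandler`, the enum `LateralActionState`, the integer millisecond type and all function names are hypothetical.

```cpp
// Assumed representation of the threshold in milliseconds.
constexpr int LANE_CHANGE_PREVENTION_THRESHOLD = 1000;

// Hypothetical, reduced set of lateral action states.
enum class LateralActionState { LaneKeeping, LaneChangeLeft, LaneChangeRight };

class ExternalControlHandler {
public:
    void SetLateralActionState(LateralActionState state) { lateralActionState_ = state; }
    LateralActionState State() const { return lateralActionState_; }

    // Call once per time step with the current simulation time.
    void Update(bool externalControlActive, int timeMs) {
        if (externalControlActive) {
            // Active manoeuvres are viewed as completed: back to lane keeping.
            lateralActionState_ = LateralActionState::LaneKeeping;
        } else if (wasActive_) {
            // External control has just been deactivated.
            deactivationTimeMs_ = timeMs;
        }
        wasActive_ = externalControlActive;
    }

    // Lane changes are blocked during external control and for the
    // prevention period after its deactivation.
    bool LaneChangeAllowed(int timeMs) const {
        if (wasActive_) {
            return false;
        }
        return timeMs - deactivationTimeMs_ >= LANE_CHANGE_PREVENTION_THRESHOLD;
    }

private:
    bool wasActive_ = false;
    // Initialised so that no prevention period applies before the first activation.
    int deactivationTimeMs_ = -LANE_CHANGE_PREVENTION_THRESHOLD;
    LateralActionState lateralActionState_ = LateralActionState::LaneKeeping;
};
```

In this sketch, a deactivation at 100 ms blocks lane changes until a simulation time of 1100 ms is reached.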

SCM Lifecycle

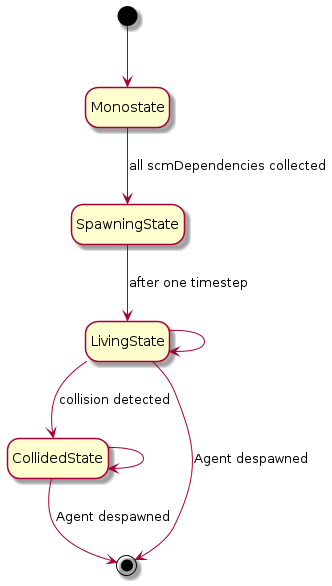

Fig. 67 shows the SCM state machine, i.e. the lifecycle of SCM in the simulation.

Fig. 67 Lifecycle of SCM states

States

Monostate

This is the initial state of the std::variant state machine before a valid SCM model can be constructed. Nothing happens here.

SpawningState

As soon as all needed scmDependencies are collected, the state switches to SpawningState. It is a special state for initializing an agent when it spawns in the simulation. Here, the behaviour differs slightly from the regular simulation. For instance, SCM uses ground-truth data to have a complete understanding of its environment, e.g. other agents in all directions as well as already passed and upcoming traffic signs (e.g. current speed limits).

LivingState

The state machine advances to the LivingState after one time step. This is the regular state during simulation. SCM receives all simulation updates from other modules and the simulation core, processes this data and sends the output back to the subscribed modules and the core.

CollidedState

If a collision is detected, the SCM state machine enters this state. As there is currently no post-crash behaviour intended, SCM itself simply does nothing anymore except calling UpdateOutput(), as other modules expect a valid signal for their own UpdateInput() method.

End of SCM lifetime

As long as the simulation is running, SCM is either in LivingState or CollidedState (the only exception is when a new agent is spawned exactly in the last time step of the simulation) and is thus present in the simulation. Despawning is done solely by the simulation core and is not the responsibility of SCM; if it happens, the agent simply drops out of the simulation.
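The lifecycle described in this section can be sketched as a `std::variant` state machine. The state names follow the text; the `StepInput` struct, the `NextState` function and the exact transition conditions are assumptions inferred from the descriptions above, and Fig. 67 may contain details that this sketch omits.

```cpp
#include <variant>

struct SpawningState {};
struct LivingState {};
struct CollidedState {};

// std::monostate is the initial alternative: no valid SCM model exists yet.
using ScmState = std::variant<std::monostate, SpawningState, LivingState, CollidedState>;

// Hypothetical inputs that drive the transitions within one time step.
struct StepInput {
    bool dependenciesCollected;
    bool collisionDetected;
};

ScmState NextState(const ScmState& state, const StepInput& input) {
    if (std::holds_alternative<std::monostate>(state)) {
        if (input.dependenciesCollected) {
            return SpawningState{};  // all scmDependencies are available
        }
        return state;  // nothing happens yet
    }
    if (std::holds_alternative<SpawningState>(state)) {
        return LivingState{};  // spawning lasts exactly one time step
    }
    if (std::holds_alternative<LivingState>(state) && input.collisionDetected) {
        return CollidedState{};
    }
    // LivingState without collision, or CollidedState, which is never left.
    return state;
}
```

There is deliberately no despawned state in this sketch: despawning is handled by the simulation core, so the state machine simply stops being updated.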