Agent Components

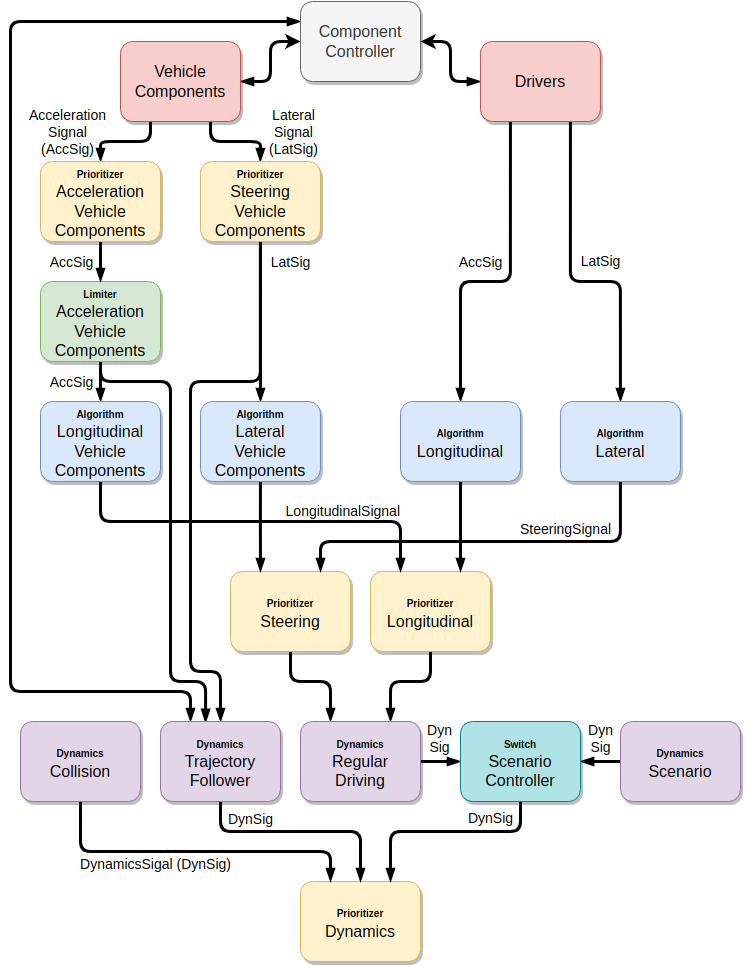

An agent in openPASS is composed of multiple modules, which are connected by corresponding signals. As shown in the next figure, the modules can be roughly divided into the groups drivers, vehicle components, algorithms, dynamic modules, and prioritizers. Thereby, modules can consist of several submodules, such as sensor (reading from an interface) and action (writing to an interface).

Modules for longitudinal and lateral dynamics

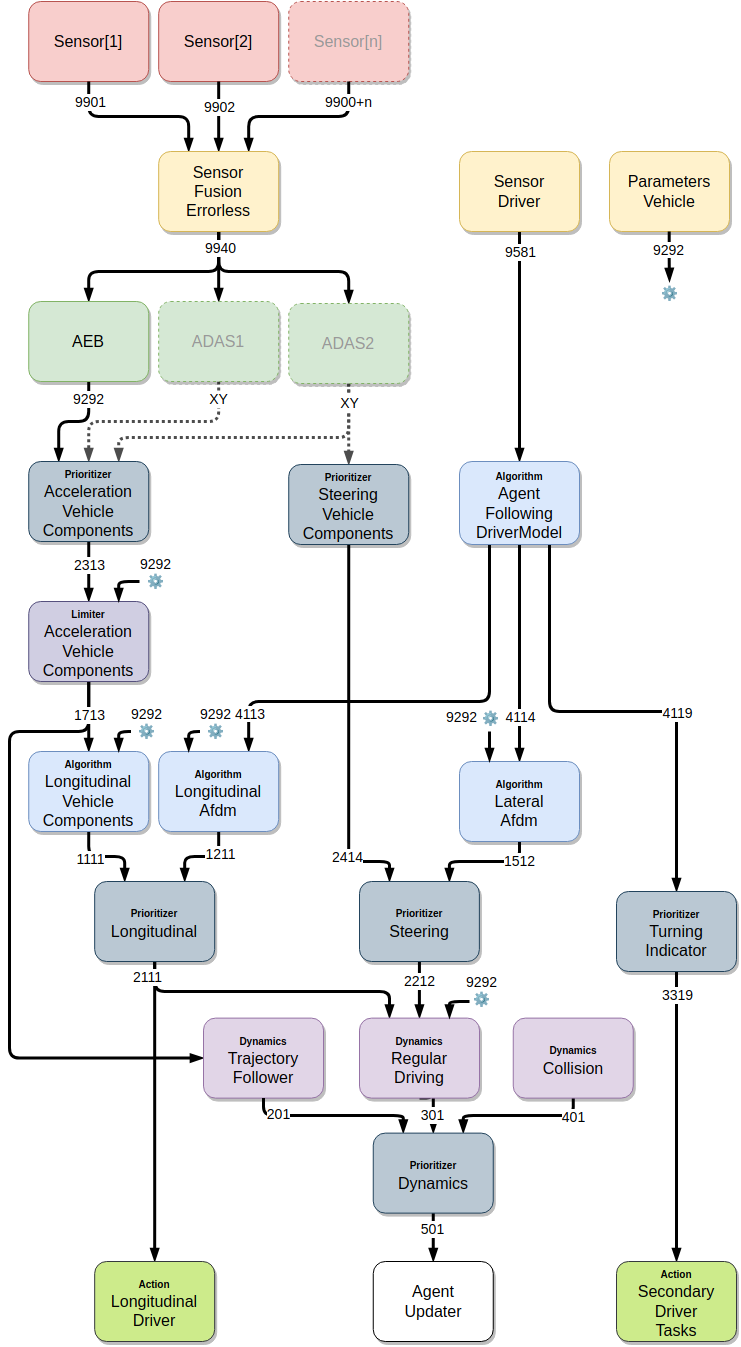

By statistic means, based on corresponding probabilities defined in the ProfilesCatalog, each individual agent is composed from a superset of all possible (valid) combinations, which is defined by the SystemConfigBlueprint. This config defines all available framework modules and agent modules and connects them by corresponding channels, which in turn have a specific signal type.

The next figure gives an exhaustive overview over the current superset:

Components and channel communication

./draw.io/ComponentsChannelCommunicationDiagram.drawio

Modules that can be parametrized by the user are explained in the Simulation User Guide. Therefor the following section only contains the components not listed in the user guide.

Action_LongitudinalDriver

Updates the agents pedal positions and gear. The input for this module is prepared by the AlgorithmLongitudinal Module.

Action_SecondaryDriver

Updates the agents braking light, indicator, horn and all light switches (headlight, high beam, flasher). The input for this module is prepared by the driver module.

Overview of the module’s functionalities

Action_SecondaryDriver gathers the secondary driving tasks and transfers them to output signals. Three graded driving tasks are defined and exampled as follows:

Primary driving tasks: direct control of vehicle movements, like steering, pedal operation, shifting, etc.

Secondary driving tasks: indirect control of vehicle movements, but supporting and complementing driving actions, like activation of indicator, horn, light, etc.

Tertiary driving tasks: all the other activities, which are not related to vehicle control, like radio operation, etc.

Table list of the input paramemter:

Variable |

Description |

Value |

|---|---|---|

in_indicatorState |

State of the indicator |

left, center/off, right, warn |

in_hornSwitch |

Horn activation |

true/false |

in_headLightSwitch |

Light activation |

true/false |

in_highBeamLightSwitch |

High beam light activation |

true/false |

in_flasherSwitch |

Flasher activation |

true/false |

AgentUpdater

The AgentUpdater executes all Set-Methods of the agent dynamics after the DynamicsPrioritizer. This includes position, velocity, acceleration and rotation.

Algorithm_Lateral

This module converts the lateral input of the driver module into a steering wheel angle.

See Algorithm_Lateral.

Algorithm_Longitudinal

This module converts the acceleration input of the driver module into pedal positions and gear.

AlgorithmCar2XSender

This module publishes the below mentioned parameters in each timestep via the RadioInterface provided by the World.

Parameter |

Type |

Unit |

Description |

|---|---|---|---|

SignalStrength |

Double |

W |

Singal strength of the Car2XSender |

SendPositionXEnabled |

Boolean |

If true, the x-coordinate of the agent is sent |

|

SendPositionYEnabled |

Boolean |

If true, the y-coordinate of the agent is sent |

|

SendVelocityEnabled |

Boolean |

If true, the velocity of the agent is sent |

|

SendAccelerationEnabled |

Boolean |

If true, the acceleration of the agent is sent |

|

SendYawEnabled |

Boolean |

If true, the yaw angle of the agent is sent |

Dynamics_Collision

If the number of collision partners of the agent is bigger than in the previous time step, the DynamicsCollision module calculates the collision. Currently the collision is implemented fully inelastic, i.e. all agents will have the same velocity after the collision, while the momentum is conserved. After the collision the agents slow down with a fixed deceleration until fully stopped.

Dynamics_Scenario

See Dynamics_Scenario.

Dynamics_RegularDriving

The module takes care that the motion of the agent fit to the physical limitations, such as friction or maximum possible acceleration based on the current gear. This module uses both the world friction and the vehicle model parameter friction. Thereby it calculates the dynamics of the agents in every time step. The currently covered dynamics are Acceleration, Velocity, and consequently Position, Yaw angle and Yaw rate. The input for this module is the steering wheel angle and the new acceleration of the vehicle.

LimiterAccelerationVehicleComponents

This module limits the AccelerationSignal from the PrioritizerAccelerationVehicleComponents to the constraints given by the vehicle. The DynamicsTrajectoryFollower can then use this signal to calculate a trajectory.

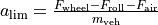

The limit is calculated by  , where the symbols meanings are:

, where the symbols meanings are:

Symbol |

Description |

|---|---|

|

Resulting acceleration limit [m/s²] |

|

Force at wheel (provided by drivetrain) [N] |

|

Force resulting from rolling resistance [N] |

|

Force resulting from air drag [N] |

|

Mass of the vehicle [kg] |

The components are calculated as follows:

Driving force

Symbol |

Description |

|---|---|

|

Resulting torque from drivetrain at current velocity (assuming best gearing selected) [Nm] |

|

Axle transmission ratio [1] |

|

Static radius of the wheels [m] |

The engine torque  is calculated by a simple model, where the torque scales proportional with the current engine speed between 1350 and 5000 rpm, up to maximum engine torque.

From minimum engine speed up to 1350 rpm the torque scales proportional with the engine speed up to half the maximum torque.

From 5000 rpm up to maximum engine speed, the torque scales with 5000 / maxEngineSpeed, up to maximum torque.

is calculated by a simple model, where the torque scales proportional with the current engine speed between 1350 and 5000 rpm, up to maximum engine torque.

From minimum engine speed up to 1350 rpm the torque scales proportional with the engine speed up to half the maximum torque.

From 5000 rpm up to maximum engine speed, the torque scales with 5000 / maxEngineSpeed, up to maximum torque.

Rolling resistance

Symbol |

Description |

|---|---|

|

Mass of the vehicle [kg] |

|

Rolling friction coefficient (constant 0.015) [1] |

Air drag

Symbol |

Description |

|---|---|

|

Density of air [kg/m³] |

|

Vehicle front surface area [m²] |

|

Drag coefficient [1] |

|

Vehicle’s current velocity [m/s] |

OpenScenarioActions

As defined by OpenSCENARIO, OpenScenarioActions is the relaying module for:

Trajectory-actions

LaneChange-actions

UserDefined-actions.

If a

TrajectoryManipulator

LaneChangeManipulator

or a user defined manipulator

raises such an event for the specified agent, the module forwards it as signal to all interested module of the corresponding agent. The modules can than react on the signals content without time delay.

Parameters_Vehicle

The ParametersVehicle module forwards the VehicleModelParameters to all other modules that need them via the ParametersVehicleSignal.

This section includes all vehicle system related parameters, powertrain system related parameters and steering system related parameters. Relevant parameters are presented in the following three tables with the corresponding explanations.

Sensor_Driver

The Sensor_Driver performs queries on the AgentInterface to gather information about the own agent and its surroundings. These are forwarded to the driver modules and the Algorithm modules, which use them for their calculations.

See Sensor_Driver.

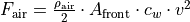

Sensor_OSI

This module is a representation of various sensors and uses OSI for its input and output. Currently, two types of sensors (SensorGeometric2D and ReceiverCar2X) are available.

SensorGeometric2D

The SensorGeometric2D detects all objects in a sector with specified range and opening angle. The input of the sensor is a OSI SensorView generated by the OSI World and its output is a OSI SensorData structure.

For generation of the SensorView, a SensorViewConfiguration has to be provided by the sensor to the OSI World. See image for a visualization of the dataflow.

OSI SensorView dataflow

From OSI development perspective, the OSI World would have to send back a SensorViewConfiguration to the sensor, with the contents describing the actual configuration of the SensorView (since the World is maybe notable to provide the requested information). As we have full control over the simulation environment, this back-channel is skipped and SensorView according to the sensor’S SensorView configuration will always be provided.

To test whether an object is inside our sector we check 1. if it is inside the circle around the sensor with radius the detection range and 2. if it intersects a suitable polygon

Depending on the opening-angle the polygon in 2) has either four (angle < 180°) or five corners (angle >= 180°).

four-corner kite polygon for angle lower than 180°

Object |

intersects circle |

intersects polygon |

detected |

|---|---|---|---|

A |

true |

false |

false |

B |

true |

true |

true |

C |

false |

true |

false |

D |

false |

false |

false |

E |

true |

true |

true |

five-corner polygon for angle greater or equal 180° and greater 360°

Object |

intersects circle |

intersects polygon |

detected |

|---|---|---|---|

A |

false |

true |

false |

B |

true |

true |

true |

C |

true |

true |

true |

D |

true |

false |

false |

E |

false |

false |

false |

For convex BBoxes the above will give correct detection results.

Both polygons are constructed from corner-points consisting out of the intersection between the opening-angle boundaries at maximum detection range and their corresponding tangents.

Function

Construct the polygon based on the opening-angle

Check if detection-field (polygon) intersects with any BBox (object-detection)

Calculate the distance between sensor and object

if (dist <= range && isIntersecting) -> object is in circular sector (object validation)

Cases

For angles < 1.0 * pi a four-corner (kite) polygon can be constructed out of two radiuses and two tangents.

For angles > = 1.0 * pi and < 2.0 * pi a five-corner polygon can be constructed of two radiuses an three tangents.

For opening-angle of exactly 2.0 * pi the distance information suffices. No polygon is needed.

Visual Obstruction

Objects in front of others block the sensors line of sight.

If an object is large enough it might visually obstruct others.

To check if one or multiple objects in combination “shadow” other objects the flag EnableVisualObstruction can be set.

Also the minimum required percentage of the visible area of an object to be detected can be specified.

The implemented algorithm uses the concept of shadow-casting, where the sensor cone is assumed to be the light source and the resulting shadow is calculated for each object.

If an object is shadowed too much, it is removed from the list of detected objects.

Shadow casting is calculated as follows:

Approximate detection field as circular sector.

Calculate the casted shadow of each object inside the detection field.

Remove bounding box of object from shadow, so that it can be detected.

Remove the casted shadow from the detection field.

For each object, check if remaining area is inside the remaining polygon.

Remove objects, if relation

covered object area/total object areais smaller than a parameterizable threshold.

Example

Assume that the vehicle ahead is fully captured by the following vehicle’s sensor cone. The outer points of the bounding box define the cone of the shadow.

Vehicle within sensor range

For calculation of the shadow polygon, these boundary vectors needs to be extended w.r.t. detection range.

In order to calculate the shadow polygon, the bounding vectors needs to be scaled with regard to the detection area. Note that extending only to the edges of the detection area would produce too small a shadow.

Too small shadow with missing area in red

To avoid numerical scaling problems related to the length of the bounding vectors, the shadow is not stretched to a fixed size. Instead, the internal algorithm overestimates the size of the shadow, with the detection range as a guaranteed lower limit.

Overestimated shadow

For this guarantee, the actual detection area must be approximated along the edge of the ideal circle (rather than by a tangential approximation). With regard to typical object sizes and detection areas, the error can be considered negligible.

Approximation of the sensor cone

Next, the object’s bounding box is removed from the shadow.

Approximated sensor cone and shadow with removed bounding box

Finally, the shadow is subtracted from the sensor cone.

Sensor cone with removed shadow

The process is repeated for each object in the detection area.

Shadow of partially occluded vehicle

Sensor cone with removed shadow of partially occluded vehicle

Note that the order of shadow calculation and subtraction is irrelevant.

SensorFusionOSI

The SensorFusionOSI module allows unsorted aggregation of any data provided by sensors. All sampled detected objects can then be broadcasted to connected ADAS.

It collects all SensorDataSignals and merges them into a single SensorDataSignal.

SignalPrioritizer

All channels can only have one source. If one module can have the same input type from multiple sources a prioritizer module is needed in between. All sources then get an output channel to the prioritizer module and the prioritizer gets an output to the module, which uses this signal. If more than an component sends an active signal during the same timestep, the prioritizer forwards only the signal from the input channel with the highest priority. These priorities are set as parameters in the systemconfigblueprint.xml, where the key corresponds the the id of the input channel and the value is the priority (higher value is prioritized). In the following example the channel with id 102 has the highest priority (3) and the channel with id 100 has the lowest priority (1).

<component>

<id>PrioritizerName</id>

<schedule>

<priority>150</priority>

<offset>0</offset>

<cycle>100</cycle>

<response>0</response>

</schedule>

<library>SignalPrioritizer</library>

<parameters>

<parameter>

<id>100</id>

<type>int</type>

<unit/>

<value>1</value>

</parameter>

<parameter>

<id>101</id>

<type>int</type>

<unit/>

<value>2</value>

</parameter>

<parameter>

<id>102</id>

<type>int</type>

<unit/>

<value>3</value>

</parameter>

</parameters>

</component>

One prioritizer module can only handle signals of the same type and the signal class must be derived from ComponentStateSignal. If there is no signal in one time step, then the signal of the previous time step is hold.

Existing prioritizer modules

PrioritizerAccelerationVehicleComponents

PrioritizerSteeringVehicleComponents

PrioritizerTurningIndicator

PrioritizerLongitudinal

PrioritizerSteering

PrioritizerDynamics

ComponentController

Overview

The ComponentController (CC) is used to configure and handle dependencies between other vehicle components.

Example use cases could be:

Cruise control:

driver requesting higher acceleration than cruise control overrides the latter

driver braking deactivates cruise control

Lane keeping assistant:

cannot be activated by driver, if emergency braking is currently active

stays active, when emergency braking occurs (i. e. by other ADAS)

The responsibilies of the CC are:

Handling of all dependencies between VehicleComponents in case a component wants to activate

Make information about driver, TrajectoryFollower and other VehicleComponents available to each other

Determine the highest allowed activation state of a component and notify the affected component about this state

To achieve this tasks, each component is assigned a maximum allowed state in each time step. This state is of type ComponentState, which defines Disabled, Armed or Active as allowed states. Drivers can be in a state of either Active or Passive.

State handling inside Vehicle Component

Within a vehicle component, the information flow should be implemented as follows:

The vehicle component retrieves the information of other components and the current maximum allowed state from the CC. Other components include drivers, trajectory followers and all other vehicle components connected to the CC.

Based on that information the vehicle component determines its current desired state.

The desired state is sent to the CC.

The CC handles all the dependencies between different components and determines the maximum allowed state for each component based on the configuration of the CC.

Used signals

The CC communicates with the controlled components via framework signals.

Inputs to the ComponentController:

Source |

Contents |

Signal |

|---|---|---|

TrajectoryFollower |

Current state |

ComponentStateSignal |

Driver |

Current state, pedal activity |

DriverStateSignal |

VehicleComponent |

Current state, desired state, generic ADAS parameters |

VehicleCompToCompCtrlSignal |

Output to other components:

Destination |

Contents |

Signal |

|---|---|---|

TrajectoryFollower |

Current max. reachable state |

ComponentStateSignal |

Driver |

List of all ADAS with names, stati and types |

AdasStateSignal |

VehicleComponent |

Current max. reachable state, list of all ADAS with names, stati and types |

CompCtrlToVehicleCompSignal |